Quickstart: Get started using Azure OpenAI Assistants (Preview)

Azure OpenAI Assistants (Preview) allows you to create AI assistants tailored to your needs through custom instructions and augmented by advanced tools like code interpreter and custom functions.

Prerequisites

An Azure subscription - Create one for free.

Access granted to Azure OpenAI in the desired Azure subscription.

Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI by completing the form at https://aka.ms/oai/access. Open an issue on this repo to contact us if you have an issue.

An Azure OpenAI resource with a compatible model in a supported region.

We recommend reviewing the Responsible AI transparency note and other Responsible AI resources to familiarize yourself with the capabilities and limitations of the Azure OpenAI Service.

Go to the Azure OpenAI Studio

Navigate to Azure OpenAI Studio at https://oai.azure.com/ and sign in with credentials that have access to your OpenAI resource. During or after the sign-in workflow, select the appropriate directory, Azure subscription, and Azure OpenAI resource.

From the Azure OpenAI Studio landing page, launch the Assistants playground from the left-hand navigation: Playground > Assistants (Preview).

Playground

The Assistants playground allows you to explore, prototype, and test AI Assistants without needing to run any code. From this page, you can quickly iterate and experiment with new ideas.

Assistant setup

Use the Assistant setup pane to create a new AI assistant or to select an existing assistant.

| Name | Description |

|---|---|

| Assistant name | Your deployment name that is associated with a specific model. |

| Instructions | Instructions are similar to system messages. This is where you give the model guidance about how it should behave and any context it should reference when generating a response. You can describe the assistant's personality, tell it what it should and shouldn't answer, and tell it how to format responses. You can also provide examples of the steps it should take when answering responses. |

| Deployment | This is where you set which model deployment to use with your assistant. |

| Functions | Create custom function definitions for the models to formulate API calls and structure data outputs based on your specifications. |

| Code interpreter | Code interpreter provides access to a sandboxed Python environment that can be used to allow the model to test and execute code. |

| Files | You can upload up to 20 files, with a max file size of 512 MB to use with tools. |

Tools

An individual assistant can access up to 128 tools including code interpreter, as well as any custom tools you create via functions.

Chat session

A chat session, also known as a thread within the Assistants API, is where the conversation between the user and assistant occurs. Unlike traditional chat completion calls, there is no limit to the number of messages in a thread. The assistant automatically compresses requests to fit the input token limit of the model.

This also means that you are not controlling how many tokens are passed to the model during each turn of the conversation. Managing tokens is abstracted away and handled entirely by the Assistants API.
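To build intuition for what fitting a thread into a model's input limit involves, here is a minimal sketch of one naive strategy: keep only the newest messages that fit a token budget. This is not the Assistants API's actual algorithm, which this article doesn't describe. The `count_tokens` and `trim_to_budget` helpers, the whitespace-based token count, and the budget of 12 are all hypothetical.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def trim_to_budget(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep the newest messages whose combined token count fits within max_tokens."""
    kept: list[dict] = []
    used = 0
    # Walk from newest to oldest so that recent context is preferred.
    for message in reversed(messages):
        cost = count_tokens(message["content"])
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # Restore chronological order.
    return kept


history = [
    {"role": "user", "content": "I need to solve the equation 3x + 11 = 14."},
    {"role": "assistant", "content": "The solution is x = 1."},
    {"role": "user", "content": "Show me the code you ran."},
]
trimmed = trim_to_budget(history, max_tokens=12)
print([m["role"] for m in trimmed])  # ['assistant', 'user']
```

With the Assistants API you don't write this kind of logic yourself, which is the trade-off the paragraph above describes: less code, but also less control over what the model sees on each turn.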

Select the Clear chat button to delete the current conversation history.

Underneath the text input box there are two buttons:

- Add a message without run.

- Add and run.
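As a rough analogy, the two buttons can be thought of in terms of the `openai` Python library (version 1.x) calls used in the Python version of this quickstart. The sketch below is an assumption about how the actions map to the API, not a description of how the playground is implemented. The helper names `add_message` and `add_and_run` are hypothetical, and `client` is assumed to be an already configured `AzureOpenAI` client.

```python
def add_message(client, thread_id: str, text: str):
    """Append a user message to the thread without starting a run."""
    return client.beta.threads.messages.create(
        thread_id=thread_id,
        role="user",
        content=text,
    )


def add_and_run(client, thread_id: str, assistant_id: str, text: str):
    """Append a user message, then start a run and wait for it to finish."""
    add_message(client, thread_id, text)
    return client.beta.threads.runs.create_and_poll(
        thread_id=thread_id,
        assistant_id=assistant_id,
    )
```

Adding several messages without a run lets you build up context before the assistant responds once to the whole thread.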

Logs

Logs provide a detailed snapshot of the assistant API activity.

Show panels

By default there are three panels: assistant setup, chat session, and Logs. Show panels allows you to add, remove, and rearrange the panels. If you ever close a panel and need to get it back, use Show panels to restore the lost panel.

Create your first assistant

From the assistant setup drop-down, select New

Give your Assistant a name

Enter the following instructions "You are an AI assistant that can write code to help answer math questions"

Select a deployment of gpt-4 (1106-preview). The dropdown displays your custom deployment names, so if your model/deployment names are different, choose a deployment where the underlying model is equivalent to the 1106-preview model.

Select the toggle enabling code interpreter.

Select Save.

Enter a question for the assistant to answer: "I need to solve the equation 3x + 11 = 14. Can you help me?"

Select the Add and run button.

The solution to the equation (3x + 11 = 14) is (x = 1).

While we can see that the answer is correct, to confirm that the model used code interpreter to get to this answer, and that the code it wrote is valid rather than just repeating an answer from the model's training data, we'll ask another question.

Enter the follow-up question: "Show me the code you ran to get this solution."

Certainly! Here's the Python code I ran to find the solution for the equation (3x + 11 = 14):

from sympy import symbols, Eq, solve

# Define the variable
x = symbols('x')

# Define the equation
equation = Eq(3*x + 11, 14)

# Solve the equation
solution = solve(equation, x)
solution

You could also consult the logs in the right-hand panel to confirm that code interpreter was used and to validate the code that was run to generate the response. It is important to remember that while code interpreter gives the model the capability to respond to more complex math questions by converting the questions into code and running in a sandboxed Python environment, you still need to validate the response to confirm that the model correctly translated your question into a valid representation in code.
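One simple way to validate an answer like this is to substitute it back into the original equation yourself. The following is a minimal sketch of that check; the function name `satisfies_equation` is hypothetical and the equation is hard-coded for this example.

```python
from fractions import Fraction


def satisfies_equation(x: Fraction) -> bool:
    """Return True if x satisfies 3x + 11 = 14."""
    return 3 * x + 11 == 14


print(satisfies_equation(Fraction(1)))  # The assistant's answer: True
print(satisfies_equation(Fraction(2)))  # A deliberately wrong value: False
```

`Fraction` is used instead of floating-point numbers so that the equality comparison is exact.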

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

See also

- Learn more about how to use Assistants with our How-to guide on Assistants.

- Azure OpenAI Assistants API samples

Important

Some of the features described in this article might only be available in preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

Prerequisites

An Azure subscription - Create one for free.

Access granted to Azure OpenAI in the desired Azure subscription.

Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI by completing the form at https://aka.ms/oai/access. Open an issue on this repo to contact us if you have an issue.

An Azure AI hub resource with a model deployed. For more information about model deployment, see the resource deployment guide.

An Azure AI project in Azure AI Studio.

Go to the Azure AI Studio (Preview)

Sign in to Azure AI Studio.

Go to your project or create a new project in Azure AI Studio.

From your project overview, select Assistants, located under Project playground.

The Assistants playground allows you to explore, prototype, and test AI Assistants without needing to run any code. From this page, you can quickly iterate and experiment with new ideas.

The playground provides several options to configure your Assistant. In the following steps, you will use the Assistant setup pane to create a new AI assistant.

| Name | Description |

|---|---|

| Assistant name | Your deployment name that is associated with a specific model. |

| Instructions | Instructions are similar to system messages. This is where you give the model guidance about how it should behave and any context it should reference when generating a response. You can describe the assistant's personality, tell it what it should and shouldn't answer, and tell it how to format responses. You can also provide examples of the steps it should take when answering responses. |

| Deployment | This is where you set which model deployment to use with your assistant. |

| Functions | Create custom function definitions for the models to formulate API calls and structure data outputs based on your specifications. Not used in this quickstart. |

| Code interpreter | Code interpreter provides access to a sandboxed Python environment that can be used to allow the model to test and execute code. |

| Files | You can upload up to 20 files, with a max file size of 512 MB to use with tools. Not used in this quickstart. |

Create your first Assistant

Select your deployment from the Deployments dropdown.

From the Assistant setup drop-down, select New.

Give your Assistant a name.

Enter the following instructions "You are an AI assistant that can write code to help answer math questions"

Select a model deployment. We recommend testing with one of the latest gpt-4 models.

Select the toggle enabling code interpreter.

Select Save.

Enter a question for the assistant to answer: "I need to solve the equation 3x + 11 = 14. Can you help me?"

Select the Add and run button.

The solution to the equation (3x + 11 = 14) is (x = 1).

While we can see that the answer is correct, to confirm that the model used code interpreter to get to this answer, and that the code it wrote is valid rather than just repeating an answer from the model's training data, we'll ask another question.

Enter the follow-up question: "Show me the code you ran to get this solution."

Sure. The code is very straightforward

# calculation
x = (14 - 11) / 3
x

First, we subtract 11 from 14, then divide the result by 3. This gives us the value of x which is 1.0.

You could also consult the logs in the right-hand panel to confirm that code interpreter was used and to validate the code that was run to generate the response. It is important to remember that while code interpreter gives the model the capability to respond to more complex math questions by converting the questions into code and running in a sandboxed Python environment, you still need to validate the response to confirm that the model correctly translated your question into a valid representation in code.

Key concepts

While using the Assistants playground, keep the following concepts in mind.

Tools

An individual assistant can access up to 128 tools including code interpreter, as well as any custom tools you create via functions.

Chat session

A chat session, also known as a thread within the Assistants API, is where the conversation between the user and assistant occurs. Unlike traditional chat completion calls, there is no limit to the number of messages in a thread. The assistant automatically compresses requests to fit the input token limit of the model.

This also means that you are not controlling how many tokens are passed to the model during each turn of the conversation. Managing tokens is abstracted away and handled entirely by the Assistants API.

Select the Clear chat button to delete the current conversation history.

Underneath the text input box there are two buttons:

- Add a message without run.

- Add and run.

Logs

Logs provide a detailed snapshot of the assistant API activity.

Show panels

By default there are three panels: assistant setup, chat session, and Logs. Show panels allows you to add, remove, and rearrange the panels. If you ever close a panel and need to get it back, use Show panels to restore the lost panel.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Alternatively, you can delete the assistant or thread via the Assistants API.
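As a minimal sketch, deleting through the API with the `openai` Python library (version 1.x) could look like the following. It assumes `client` is an already configured `AzureOpenAI` client and that you kept the IDs of the assistant and thread you created. The helper name `delete_assistant_and_thread` is hypothetical.

```python
def delete_assistant_and_thread(client, assistant_id: str, thread_id: str) -> None:
    """Delete an assistant and a thread by their IDs."""
    client.beta.assistants.delete(assistant_id)
    client.beta.threads.delete(thread_id)
```

This removes only the assistant and thread objects. The Azure OpenAI resource and its model deployments are left in place.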

See also

- Learn more about how to use Assistants with our How-to guide on Assistants.

- Azure OpenAI Assistants API samples

Reference documentation | Library source code | Package (PyPI)

Prerequisites

An Azure subscription - Create one for free

Access granted to Azure OpenAI in the desired Azure subscription

Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI by completing the form at https://aka.ms/oai/access. Open an issue on this repo to contact us if you have an issue.

The following Python libraries: os, openai (Version 1.x is required)

Azure CLI, used for passwordless authentication in a local development environment. Create the necessary context by signing in with the Azure CLI.

An Azure OpenAI resource with a compatible model in a supported region.

We recommend reviewing the Responsible AI transparency note and other Responsible AI resources to familiarize yourself with the capabilities and limitations of the Azure OpenAI Service.

An Azure OpenAI resource with the gpt-4 (1106-preview) model deployed was used for testing this example.

Passwordless authentication is recommended

For passwordless authentication, you need to:

- Use the azure-identity package.

- Assign the Cognitive Services User role to your user account. This can be done in the Azure portal under Access control (IAM) > Add role assignment.

- Sign in with the Azure CLI, such as az login.

Set up

- Install the OpenAI Python client library with:

pip install openai

- For the recommended passwordless authentication:

pip install azure-identity

Note

- File search can ingest up to 10,000 files per assistant - 500 times more than before. It is fast, supports parallel queries through multi-threaded searches, and features enhanced reranking and query rewriting.

- Vector store is a new object in the API. Once a file is added to a vector store, it's automatically parsed, chunked, and embedded, made ready to be searched. Vector stores can be used across assistants and threads, simplifying file management and billing.

- We've added support for the tool_choice parameter, which can be used to force the use of a specific tool (like file search, code interpreter, or a function) in a particular run.
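As an illustration of the `tool_choice` parameter, the sketch below forces code interpreter for a single run. It assumes a version of the `openai` Python library (1.x) and an API version that support `tool_choice` on runs, and that `client` is an already configured `AzureOpenAI` client. The helper name `run_with_code_interpreter` is hypothetical.

```python
def run_with_code_interpreter(client, thread_id: str, assistant_id: str):
    """Start a run that is required to use the code interpreter tool, then wait for it."""
    return client.beta.threads.runs.create_and_poll(
        thread_id=thread_id,
        assistant_id=assistant_id,
        # Force this specific tool for this run only.
        tool_choice={"type": "code_interpreter"},
    )
```

The assistant must already have the tool enabled. `tool_choice` selects among the assistant's tools for one run and doesn't add new ones.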

Note

This library is maintained by OpenAI. Refer to the release history to track the latest updates to the library.

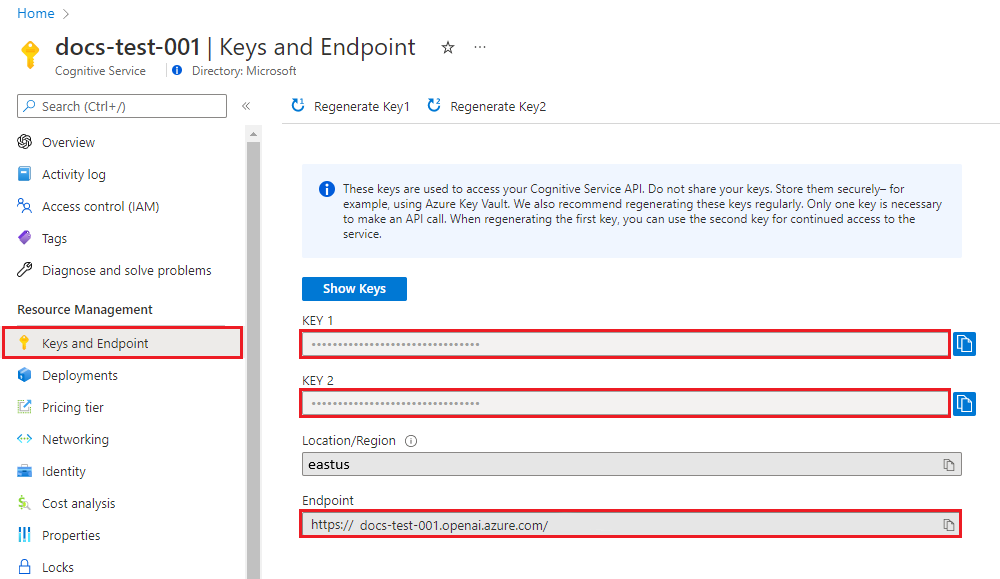

Retrieve key and endpoint

To successfully make a call against the Azure OpenAI service, you'll need the following:

| Variable name | Value |

|---|---|

| ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in Azure OpenAI Studio > Playground > View code. An example endpoint is: https://docs-test-001.openai.azure.com/. |

| API-KEY | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

| DEPLOYMENT-NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal or alternatively under Management > Deployments in Azure OpenAI Studio. |

Go to your resource in the Azure portal. The Keys and Endpoint can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.


Environment variables

Create and assign persistent environment variables for your key and endpoint.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"
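Values set with setx are only visible to console windows opened afterward, so it can help to confirm that your Python process sees them before you call the service. The following is a minimal sketch of such a check. The helper name `missing_variables` is hypothetical.

```python
import os

REQUIRED_VARS = ("AZURE_OPENAI_API_KEY", "AZURE_OPENAI_ENDPOINT")


def missing_variables(env=None) -> list[str]:
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


missing = missing_variables()
if missing:
    print("Missing environment variables: " + ", ".join(missing))
else:
    print("All required environment variables are set.")
```

If you use passwordless authentication, only AZURE_OPENAI_ENDPOINT is read by the example in this article, so a missing key isn't a problem in that case.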

Create an assistant

In our code we are going to specify the following values:

| Name | Description |

|---|---|

| Assistant name | Your deployment name that is associated with a specific model. |

| Instructions | Instructions are similar to system messages. This is where you give the model guidance about how it should behave and any context it should reference when generating a response. You can describe the assistant's personality, tell it what it should and shouldn't answer, and tell it how to format responses. You can also provide examples of the steps it should take when answering responses. |

| Model | This is where you set which model deployment name to use with your assistant. The retrieval tool requires gpt-35-turbo (1106) or gpt-4 (1106-preview) model. Set this value to your deployment name, not the model name unless it is the same. |

| Code interpreter | Code interpreter provides access to a sandboxed Python environment that can be used to allow the model to test and execute code. |

Tools

An individual assistant can access up to 128 tools including code interpreter, as well as any custom tools you create via functions.

Create the Python app

Sign in to Azure with az login then create and run an assistant with the following recommended passwordless Python example:

import os
from azure.identity import DefaultAzureCredential, get_bearer_token_provider
from openai import AzureOpenAI

token_provider = get_bearer_token_provider(DefaultAzureCredential(), "https://cognitiveservices.azure.com/.default")

client = AzureOpenAI(
    azure_ad_token_provider=token_provider,
    azure_endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],
    api_version="2024-05-01-preview",
)

# Create an assistant
assistant = client.beta.assistants.create(
    name="Math Assist",
    instructions="You are an AI assistant that can write code to help answer math questions.",
    tools=[{"type": "code_interpreter"}],
    model="gpt-4-1106-preview"  # You must replace this value with the deployment name for your model.
)

# Create a thread
thread = client.beta.threads.create()

# Add a user question to the thread
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="I need to solve the equation `3x + 11 = 14`. Can you help me?"
)

# Run the thread and poll for the result
run = client.beta.threads.runs.create_and_poll(
    thread_id=thread.id,
    assistant_id=assistant.id,
    instructions="Please address the user as Jane Doe. The user has a premium account.",
)

print("Run completed with status: " + run.status)

if run.status == "completed":
    messages = client.beta.threads.messages.list(thread_id=thread.id)
    print(messages.to_json(indent=2))
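The `create_and_poll` call above waits for the run to finish on your behalf. If you start a run with `runs.create` instead, you need to wait for it yourself. The following is a minimal sketch of such a loop, assuming the `openai` Python library (1.x) and a `client` configured as above. The helper name `wait_for_run`, the polling interval, and the timeout are hypothetical choices.

```python
import time

# Statuses in which the run is still being processed.
PENDING_STATUSES = ("queued", "in_progress", "cancelling")


def wait_for_run(client, thread_id: str, run_id: str,
                 interval: float = 0.5, timeout: float = 60.0):
    """Poll a run until it leaves the pending statuses or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        run = client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run_id)
        if run.status not in PENDING_STATUSES:
            return run
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Run {run_id} is still {run.status} after {timeout} seconds")
        time.sleep(interval)
```

The returned run isn't necessarily successful. Check `run.status` for values such as `completed` or `failed` before reading the thread's messages.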

To use the service API key for authentication, you can create and run an assistant with the following Python example:

import os
from openai import AzureOpenAI

client = AzureOpenAI(
    api_key=os.environ["AZURE_OPENAI_API_KEY"],
    azure_endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],
    api_version="2024-05-01-preview",
)

# Create an assistant
assistant = client.beta.assistants.create(
    name="Math Assist",
    instructions="You are an AI assistant that can write code to help answer math questions.",
    tools=[{"type": "code_interpreter"}],
    model="gpt-4-1106-preview"  # You must replace this value with the deployment name for your model.
)

# Create a thread
thread = client.beta.threads.create()

# Add a user question to the thread
message = client.beta.threads.messages.create(
    thread_id=thread.id,
    role="user",
    content="I need to solve the equation `3x + 11 = 14`. Can you help me?"
)

# Run the thread and poll for the result
run = client.beta.threads.runs.create_and_poll(
    thread_id=thread.id,
    assistant_id=assistant.id,
    instructions="Please address the user as Jane Doe. The user has a premium account.",
)

print("Run completed with status: " + run.status)

if run.status == "completed":
    messages = client.beta.threads.messages.list(thread_id=thread.id)
    print(messages.to_json(indent=2))

Output

Run completed with status: completed
{
  "data": [
    {
      "id": "msg_4SuWxTubHsHpt5IlBTO5Hyw9",
      "assistant_id": "asst_cYqL1RuwLyFV3HU1gkaE2k0K",
      "attachments": [],
      "content": [
        {
          "text": {
            "annotations": [],
            "value": "The solution to the equation \\(3x + 11 = 14\\) is \\(x = 1\\)."
          },
          "type": "text"
        }
      ],
      "created_at": 1716397091,
      "metadata": {},
      "object": "thread.message",
      "role": "assistant",
      "run_id": "run_hFgBPbUtO8ZNTnNPC8PgpH1S",
      "thread_id": "thread_isb7spwRycI5ueT9E7357aOm"
    },
    {
      "id": "msg_Z32w2E7kY5wEWhZqQWxIbIUB",
      "assistant_id": null,
      "attachments": [],
      "content": [
        {
          "text": {
            "annotations": [],
            "value": "I need to solve the equation `3x + 11 = 14`. Can you help me?"
          },
          "type": "text"
        }
      ],
      "created_at": 1716397025,
      "metadata": {},
      "object": "thread.message",
      "role": "user",
      "run_id": null,
      "thread_id": "thread_isb7spwRycI5ueT9E7357aOm"
    }
  ],
  "object": "list",
  "first_id": "msg_4SuWxTubHsHpt5IlBTO5Hyw9",
  "last_id": "msg_Z32w2E7kY5wEWhZqQWxIbIUB",
  "has_more": false
}
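The list above is ordered newest first, and the text of each message is nested inside its `content` array. The following minimal sketch pulls out the role and text of each message in chronological order. It works on an abridged copy of the output that keeps only the fields it reads. The helper name `chronological_texts` is hypothetical.

```python
import json

# Abridged copy of the output above. A raw string keeps the JSON escapes intact.
raw = r"""
{
  "data": [
    {
      "role": "assistant",
      "created_at": 1716397091,
      "content": [
        {"type": "text",
         "text": {"value": "The solution to the equation \\(3x + 11 = 14\\) is \\(x = 1\\)."}}
      ]
    },
    {
      "role": "user",
      "created_at": 1716397025,
      "content": [
        {"type": "text",
         "text": {"value": "I need to solve the equation `3x + 11 = 14`. Can you help me?"}}
      ]
    }
  ]
}
"""


def chronological_texts(payload: dict) -> list[tuple[str, str]]:
    """Return (role, text) pairs for all text content, oldest message first."""
    pairs = []
    for message in sorted(payload["data"], key=lambda m: m["created_at"]):
        for part in message["content"]:
            if part["type"] == "text":
                pairs.append((message["role"], part["text"]["value"]))
    return pairs


for role, text in chronological_texts(json.loads(raw)):
    print(f"{role}: {text}")
```

Content items of other types, such as images, are skipped by this sketch.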

Understanding your results

In this example, we create an assistant with code interpreter enabled. When we ask the assistant a math question, it translates the question into Python code and executes the code in a sandboxed environment to determine the answer. The code the model creates and tests to arrive at an answer is:

from sympy import symbols, Eq, solve

# Define the variable
x = symbols('x')

# Define the equation
equation = Eq(3*x + 11, 14)

# Solve the equation
solution = solve(equation, x)
solution

It is important to remember that while code interpreter gives the model the capability to respond to more complex queries by converting the questions into code and running that code iteratively in the Python sandbox until it reaches a solution, you still need to validate the response to confirm that the model correctly translated your question into a valid representation in code.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

See also

- Learn more about how to use Assistants with our How-to guide on Assistants.

- Azure OpenAI Assistants API samples

Reference documentation | Source code | Package (NuGet)

Prerequisites

An Azure subscription - Create one for free

Access granted to Azure OpenAI in the desired Azure subscription

Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI by completing the form at https://aka.ms/oai/access. Open an issue on this repo to contact us if you have an issue.

The .NET 8 SDK

An Azure OpenAI resource with a compatible model in a supported region.

We recommend reviewing the Responsible AI transparency note and other Responsible AI resources to familiarize yourself with the capabilities and limitations of the Azure OpenAI Service.

An Azure OpenAI resource with the gpt-4 (1106-preview) model deployed was used for testing this example.

Set up

Create a new .NET Core application

In a console window (such as cmd, PowerShell, or Bash), use the dotnet new command to create a new console app with the name azure-openai-assistants-quickstart. This command creates a simple "Hello World" project with a single C# source file: Program.cs.

dotnet new console -n azure-openai-assistants-quickstart

Change your directory to the newly created app folder. You can build the application with:

dotnet build

The build output should contain no warnings or errors.

...

Build succeeded.

0 Warning(s)

0 Error(s)

...

Install the OpenAI .NET client library with:

dotnet add package Azure.AI.OpenAI.Assistants --prerelease

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |

|---|---|

| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in the Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |

| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

Create an assistant

In our code we are going to specify the following values:

| Name | Description |

|---|---|

| Assistant name | Your deployment name that is associated with a specific model. |

| Instructions | Instructions are similar to system messages. This is where you give the model guidance about how it should behave and any context it should reference when generating a response. You can describe the assistant's personality, tell it what it should and shouldn't answer, and tell it how to format responses. You can also provide examples of the steps it should take when answering responses. |

| Model | This is where you set which model deployment name to use with your assistant. The retrieval tool requires gpt-35-turbo (1106) or gpt-4 (1106-preview) model. Set this value to your deployment name, not the model name unless it is the same. |

| Code interpreter | Code interpreter provides access to a sandboxed Python environment that can be used to allow the model to test and execute code. |

Tools

An individual assistant can access up to 128 tools including code interpreter, as well as any custom tools you create via functions.

Create and run an assistant with the following:

using Azure;
using Azure.AI.OpenAI.Assistants;

string endpoint = Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT") ?? throw new ArgumentNullException("AZURE_OPENAI_ENDPOINT");
string key = Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY") ?? throw new ArgumentNullException("AZURE_OPENAI_API_KEY");
AssistantsClient client = new AssistantsClient(new Uri(endpoint), new AzureKeyCredential(key));

// Create an assistant
Assistant assistant = await client.CreateAssistantAsync(
    new AssistantCreationOptions("gpt-4-1106-preview") // Replace this with the name of your model deployment
    {
        Name = "Math Tutor",
        Instructions = "You are a personal math tutor. Write and run code to answer math questions.",
        Tools = { new CodeInterpreterToolDefinition() }
    });

// Create a thread
AssistantThread thread = await client.CreateThreadAsync();

// Add a user question to the thread
ThreadMessage message = await client.CreateMessageAsync(
    thread.Id,
    MessageRole.User,
    "I need to solve the equation `3x + 11 = 14`. Can you help me?");

// Run the thread
ThreadRun run = await client.CreateRunAsync(
    thread.Id,
    new CreateRunOptions(assistant.Id)
);

// Wait for the assistant to respond
do
{
    await Task.Delay(TimeSpan.FromMilliseconds(500));
    run = await client.GetRunAsync(thread.Id, run.Id);
}
while (run.Status == RunStatus.Queued
    || run.Status == RunStatus.InProgress);

// Get the messages
PageableList<ThreadMessage> messagesPage = await client.GetMessagesAsync(thread.Id);
IReadOnlyList<ThreadMessage> messages = messagesPage.Data;

// Note: messages iterate from newest to oldest, with the messages[0] being the most recent
foreach (ThreadMessage threadMessage in messages.Reverse())
{
    Console.Write($"{threadMessage.CreatedAt:yyyy-MM-dd HH:mm:ss} - {threadMessage.Role,10}: ");
    foreach (MessageContent contentItem in threadMessage.ContentItems)
    {
        if (contentItem is MessageTextContent textItem)
        {
            Console.Write(textItem.Text);
        }
        Console.WriteLine();
    }
}

This will print an output as follows:

2024-03-05 03:38:17 - user: I need to solve the equation `3x + 11 = 14`. Can you help me?

2024-03-05 03:38:25 - assistant: The solution to the equation \(3x + 11 = 14\) is \(x = 1\).

New messages can be added to the thread before running it again; the assistant then uses the earlier messages in the thread as context.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

See also

- Learn more about how to use Assistants with our How-to guide on Assistants.

- Azure OpenAI Assistants API samples

Reference documentation | Library source code | Package (npm)

Prerequisites

An Azure subscription - Create one for free

Access granted to Azure OpenAI in the desired Azure subscription

Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI by completing the form at https://aka.ms/oai/access. Open an issue on this repo to contact us if you have an issue.

Azure CLI, used for passwordless authentication in a local development environment. Create the necessary context by signing in with the Azure CLI.

An Azure OpenAI resource with a compatible model in a supported region.

We recommend reviewing the Responsible AI transparency note and other Responsible AI resources to familiarize yourself with the capabilities and limitations of the Azure OpenAI Service.

An Azure OpenAI resource with the gpt-4 (1106-preview) model deployed was used for testing this example.

Passwordless authentication is recommended

For passwordless authentication, you need to:

- Use the @azure/identity package.

- Assign the Cognitive Services User role to your user account. This can be done in the Azure portal under Access control (IAM) > Add role assignment.

- Sign in with the Azure CLI, such as az login.

Set up

Install the OpenAI Assistants client library for JavaScript with:

npm install openai

For the recommended passwordless authentication:

npm install @azure/identity

Retrieve key and endpoint

To successfully make a call against the Azure OpenAI service, you'll need the following:

| Variable name | Value |

|---|---|

| ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in Azure OpenAI Studio > Playground > View code. An example endpoint is: https://docs-test-001.openai.azure.com/. |

| API-KEY | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

| DEPLOYMENT-NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal or alternatively under Management > Deployments in Azure OpenAI Studio. |

Go to your resource in the Azure portal. The Keys and Endpoint can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.


Environment variables

Create and assign persistent environment variables for your key and endpoint.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

Add additional environment variables for the deployment name and API version:

- AZURE_OPENAI_DEPLOYMENT_NAME: Your deployment name as shown in the Azure portal.

- OPENAI_API_VERSION: Learn more about API Versions.

Create and assign persistent environment variables for your deployment name and API version.

setx AZURE_OPENAI_DEPLOYMENT_NAME "REPLACE_WITH_YOUR_DEPLOYMENT_NAME"

setx OPENAI_API_VERSION "REPLACE_WITH_YOUR_API_VERSION"

Create an assistant

In our code we are going to specify the following values:

| Name | Description |

|---|---|

| Assistant name | Your deployment name that is associated with a specific model. |

| Instructions | Instructions are similar to system messages. This is where you give the model guidance about how it should behave and any context it should reference when generating a response. You can describe the assistant's personality, tell it what it should and shouldn't answer, and tell it how to format responses. You can also provide examples of the steps it should take when answering responses. |

| Model | This is the deployment name. |

| Code interpreter | Code interpreter provides access to a sandboxed Python environment that can be used to allow the model to test and execute code. |

Tools

An individual assistant can access up to 128 tools including code interpreter, as well as any custom tools you create via functions.
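As a rough illustration of combining code interpreter with a custom function, the sketch below builds a tool list and checks it against the 128-tool limit. The local types only approximate the tool shapes the Assistants API accepts, and `lookup_formula`, `MAX_TOOLS`, and `assertToolLimit` are hypothetical names introduced here for illustration.

```typescript
// Simplified local types that approximate the Assistants API tool shapes.
type CodeInterpreterTool = { type: "code_interpreter" };
type FunctionTool = {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: Record<string, unknown>; // JSON Schema describing the arguments
  };
};
type Tool = CodeInterpreterTool | FunctionTool;

const MAX_TOOLS = 128; // limit stated in this article

// Hypothetical custom function; the name and schema are illustrative only.
const lookupFormula: FunctionTool = {
  type: "function",
  function: {
    name: "lookup_formula",
    description: "Returns a named math formula as text.",
    parameters: {
      type: "object",
      properties: { formulaName: { type: "string" } },
      required: ["formulaName"],
    },
  },
};

// Throws if the list exceeds the limit, otherwise returns it unchanged.
function assertToolLimit(tools: Tool[]): Tool[] {
  if (tools.length > MAX_TOOLS) {
    throw new RangeError(`An assistant can use at most ${MAX_TOOLS} tools.`);
  }
  return tools;
}

const tools = assertToolLimit([{ type: "code_interpreter" }, lookupFormula]);
console.log(tools.map((tool) => tool.type));
// prints [ 'code_interpreter', 'function' ]
```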

Sign in to Azure with az login then create and run an assistant with the following recommended passwordless TypeScript module (index.ts):

import "dotenv/config";
import { AzureOpenAI } from "openai";
import {
  Assistant,
  AssistantCreateParams,
  AssistantTool,
} from "openai/resources/beta/assistants";
import { Message, MessagesPage } from "openai/resources/beta/threads/messages";
import { Run } from "openai/resources/beta/threads/runs/runs";
import { Thread } from "openai/resources/beta/threads/threads";

// Add `Cognitive Services User` to identity for Azure OpenAI resource
import {
  DefaultAzureCredential,
  getBearerTokenProvider,
} from "@azure/identity";

// Get environment variables
const azureOpenAIEndpoint = process.env.AZURE_OPENAI_ENDPOINT as string;
const azureOpenAIDeployment = process.env
  .AZURE_OPENAI_DEPLOYMENT_NAME as string;
const openAIVersion = process.env.OPENAI_API_VERSION as string;

// Check env variables
if (!azureOpenAIEndpoint || !azureOpenAIDeployment || !openAIVersion) {
  throw new Error(
    "Please set AZURE_OPENAI_ENDPOINT, AZURE_OPENAI_DEPLOYMENT_NAME, and OPENAI_API_VERSION in your environment variables."
  );
}

// Get Azure SDK client
const getClient = (): AzureOpenAI => {
  const credential = new DefaultAzureCredential();
  const scope = "https://cognitiveservices.azure.com/.default";
  const azureADTokenProvider = getBearerTokenProvider(credential, scope);
  const assistantsClient = new AzureOpenAI({
    endpoint: azureOpenAIEndpoint,
    apiVersion: openAIVersion,
    azureADTokenProvider,
  });
  return assistantsClient;
};

const assistantsClient = getClient();

const options: AssistantCreateParams = {
  model: azureOpenAIDeployment, // Deployment name seen in Azure AI Studio
  name: "Math Tutor",
  instructions:
    "You are a personal math tutor. Write and run JavaScript code to answer math questions.",
  tools: [{ type: "code_interpreter" } as AssistantTool],
};
const role = "user";
const message = "I need to solve the equation `3x + 11 = 14`. Can you help me?";

// Create an assistant
const assistantResponse: Assistant =
  await assistantsClient.beta.assistants.create(options);
console.log(`Assistant created: ${JSON.stringify(assistantResponse)}`);

// Create a thread
const assistantThread: Thread = await assistantsClient.beta.threads.create({});
console.log(`Thread created: ${JSON.stringify(assistantThread)}`);

// Add a user question to the thread
const threadResponse: Message =
  await assistantsClient.beta.threads.messages.create(assistantThread.id, {
    role,
    content: message,
  });
console.log(`Message created: ${JSON.stringify(threadResponse)}`);

// Run the thread and poll it until it is in a terminal state
const runResponse: Run = await assistantsClient.beta.threads.runs.createAndPoll(
  assistantThread.id,
  {
    assistant_id: assistantResponse.id,
  },
  { pollIntervalMs: 500 }
);
console.log(`Run created: ${JSON.stringify(runResponse)}`);

// Get the messages
const runMessages: MessagesPage =
  await assistantsClient.beta.threads.messages.list(assistantThread.id);
for await (const runMessageDatum of runMessages) {
  for (const item of runMessageDatum.content) {
    // types are: "image_file" or "text"
    if (item.type === "text") {
      console.log(`Message content: ${JSON.stringify(item.text?.value)}`);
    }
  }
}

To use the service key for authentication, you can create and run an assistant with the following TypeScript module (index.ts):

import "dotenv/config";
import { AzureOpenAI } from "openai";
import {
  Assistant,
  AssistantCreateParams,
  AssistantTool,
} from "openai/resources/beta/assistants";
import { Message, MessagesPage } from "openai/resources/beta/threads/messages";
import { Run } from "openai/resources/beta/threads/runs/runs";
import { Thread } from "openai/resources/beta/threads/threads";

// Get environment variables
// AZURE_OPENAI_API_KEY matches the variable name set in the Environment variables step
const azureOpenAIKey = process.env.AZURE_OPENAI_API_KEY as string;
const azureOpenAIEndpoint = process.env.AZURE_OPENAI_ENDPOINT as string;
const azureOpenAIDeployment = process.env
  .AZURE_OPENAI_DEPLOYMENT_NAME as string;
const openAIVersion = process.env.OPENAI_API_VERSION as string;

// Check env variables
if (
  !azureOpenAIKey ||
  !azureOpenAIEndpoint ||
  !azureOpenAIDeployment ||
  !openAIVersion
) {
  throw new Error(
    "Please set AZURE_OPENAI_API_KEY, AZURE_OPENAI_ENDPOINT, AZURE_OPENAI_DEPLOYMENT_NAME, and OPENAI_API_VERSION in your environment variables."
  );
}

// Get Azure SDK client
const getClient = (): AzureOpenAI => {
  const assistantsClient = new AzureOpenAI({
    endpoint: azureOpenAIEndpoint,
    apiVersion: openAIVersion,
    apiKey: azureOpenAIKey,
  });
  return assistantsClient;
};

const assistantsClient = getClient();

const options: AssistantCreateParams = {
  model: azureOpenAIDeployment, // Deployment name seen in Azure AI Studio
  name: "Math Tutor",
  instructions:
    "You are a personal math tutor. Write and run JavaScript code to answer math questions.",
  tools: [{ type: "code_interpreter" } as AssistantTool],
};
const role = "user";
const message = "I need to solve the equation `3x + 11 = 14`. Can you help me?";

// Create an assistant
const assistantResponse: Assistant =
  await assistantsClient.beta.assistants.create(options);
console.log(`Assistant created: ${JSON.stringify(assistantResponse)}`);

// Create a thread
const assistantThread: Thread = await assistantsClient.beta.threads.create({});
console.log(`Thread created: ${JSON.stringify(assistantThread)}`);

// Add a user question to the thread
const threadResponse: Message =
  await assistantsClient.beta.threads.messages.create(assistantThread.id, {
    role,
    content: message,
  });
console.log(`Message created: ${JSON.stringify(threadResponse)}`);

// Run the thread and poll it until it is in a terminal state
const runResponse: Run = await assistantsClient.beta.threads.runs.createAndPoll(
  assistantThread.id,
  {
    assistant_id: assistantResponse.id,
  },
  { pollIntervalMs: 500 }
);
console.log(`Run created: ${JSON.stringify(runResponse)}`);

// Get the messages
const runMessages: MessagesPage =
  await assistantsClient.beta.threads.messages.list(assistantThread.id);
for await (const runMessageDatum of runMessages) {
  for (const item of runMessageDatum.content) {
    // types are: "image_file" or "text"
    if (item.type === "text") {
      console.log(`Message content: ${JSON.stringify(item.text?.value)}`);
    }
  }
}

Output

Assistant created: {"id":"asst_zXaZ5usTjdD0JGcNViJM2M6N","createdAt":"2024-04-08T19:26:38.000Z","name":"Math Tutor","description":null,"model":"daisy","instructions":"You are a personal math tutor. Write and run JavaScript code to answer math questions.","tools":[{"type":"code_interpreter"}],"fileIds":[],"metadata":{}}

Thread created: {"id":"thread_KJuyrB7hynun4rvxWdfKLIqy","createdAt":"2024-04-08T19:26:38.000Z","metadata":{}}

Message created: {"id":"msg_o0VkXnQj3juOXXRCnlZ686ff","createdAt":"2024-04-08T19:26:38.000Z","threadId":"thread_KJuyrB7hynun4rvxWdfKLIqy","role":"user","content":[{"type":"text","text":{"value":"I need to solve the equation `3x + 11 = 14`. Can you help me?","annotations":[]},"imageFile":{}}],"assistantId":null,"runId":null,"fileIds":[],"metadata":{}}

Created run

Run created: {"id":"run_P8CvlouB8V9ZWxYiiVdL0FND","object":"thread.run","status":"queued","model":"daisy","instructions":"You are a personal math tutor. Write and run JavaScript code to answer math questions.","tools":[{"type":"code_interpreter"}],"metadata":{},"usage":null,"assistantId":"asst_zXaZ5usTjdD0JGcNViJM2M6N","threadId":"thread_KJuyrB7hynun4rvxWdfKLIqy","fileIds":[],"createdAt":"2024-04-08T19:26:39.000Z","expiresAt":"2024-04-08T19:36:39.000Z","startedAt":null,"completedAt":null,"cancelledAt":null,"failedAt":null}

Message content: "The solution to the equation \\(3x + 11 = 14\\) is \\(x = 1\\)."

Message content: "Yes, of course! To solve the equation \\( 3x + 11 = 14 \\), we can follow these steps:\n\n1. Subtract 11 from both sides of the equation to isolate the term with x.\n2. Then, divide by 3 to find the value of x.\n\nLet me calculate that for you."

Message content: "I need to solve the equation `3x + 11 = 14`. Can you help me?"

It is important to remember that while the code interpreter gives the model the capability to respond to more complex queries by converting the questions into code and running that code iteratively in the sandboxed Python environment until it reaches a solution, you still need to validate the response to confirm that the model correctly translated your question into a valid representation in code.
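One simple way to validate an answer like the one above is to substitute it back into the original equation. The following sketch assumes a linear equation of the form a·x + b = c. The helper name `satisfiesLinearEquation` and the tolerance value are illustrative choices, not part of the service or SDK.

```typescript
// Returns true when x satisfies a*x + b = c within a small tolerance.
function satisfiesLinearEquation(
  a: number,
  b: number,
  c: number,
  x: number,
  tolerance = 1e-9
): boolean {
  return Math.abs(a * x + b - c) <= tolerance;
}

// The assistant reported x = 1 for 3x + 11 = 14.
console.log(satisfiesLinearEquation(3, 11, 14, 1)); // prints true
// A wrong answer fails the check.
console.log(satisfiesLinearEquation(3, 11, 14, 2)); // prints false
```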

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Sample code

See also

- Learn more about how to use Assistants with our How-to guide on Assistants.

- Azure OpenAI Assistants API samples

Prerequisites

An Azure subscription - Create one for free

Access granted to Azure OpenAI in the desired Azure subscription

Currently, access to this service is granted only by application. You can apply for access to the Azure OpenAI service by completing the form at https://aka.ms/oai/access. Open an issue on this repo to contact us if you have an issue.

An Azure OpenAI resource with a compatible model in a supported region.

We recommend reviewing the Responsible AI transparency note and other Responsible AI resources to familiarize yourself with the capabilities and limitations of the Azure OpenAI Service.

An Azure OpenAI resource with the gpt-4 (1106-preview) model deployed was used when testing this example.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you'll need the following:

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |
| DEPLOYMENT-NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Deployments in the Azure portal or alternatively under Management > Deployments in Azure OpenAI Studio. |

Go to your resource in the Azure portal. The Endpoint and Keys can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Create and assign persistent environment variables for your key and endpoint.

Environment variables


setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

REST API

Create an assistant

Note

With Azure OpenAI, the model parameter requires the model deployment name. If your model deployment name is different from the underlying model name, adjust your code to "model": "{your-custom-model-deployment-name}".

curl "https://YOUR_RESOURCE_NAME.openai.azure.com/openai/assistants?api-version=2024-05-01-preview" \
  -H "api-key: $AZURE_OPENAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "instructions": "You are an AI assistant that can write code to help answer math questions.",
    "name": "Math Assist",
    "tools": [{"type": "code_interpreter"}],
    "model": "gpt-4-1106-preview"
  }'
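If you call the REST API from code rather than curl, the same request can be assembled as plain data first. This is a sketch under stated assumptions: `buildCreateAssistantRequest` and the `CreateAssistantRequest` type are hypothetical helpers introduced here, and the resource name, key, and deployment name are placeholders you would replace with your own values.

```typescript
// Plain-data description of the HTTP request; pass it to any HTTP client.
type CreateAssistantRequest = {
  url: string;
  headers: Record<string, string>;
  body: string;
};

// Hypothetical helper that mirrors the curl command above.
function buildCreateAssistantRequest(
  resourceName: string,
  apiVersion: string,
  apiKey: string,
  deploymentName: string
): CreateAssistantRequest {
  const base = `https://${resourceName}.openai.azure.com/openai/assistants`;
  return {
    url: `${base}?api-version=${encodeURIComponent(apiVersion)}`,
    headers: {
      "api-key": apiKey,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      instructions:
        "You are an AI assistant that can write code to help answer math questions.",
      name: "Math Assist",
      tools: [{ type: "code_interpreter" }],
      model: deploymentName, // the deployment name, not the underlying model name
    }),
  };
}

const exampleRequest = buildCreateAssistantRequest(
  "YOUR_RESOURCE_NAME",
  "2024-05-01-preview",
  "REPLACE_WITH_YOUR_KEY_VALUE_HERE",
  "gpt-4-1106-preview"
);
console.log(exampleRequest.url);
```

Keeping the request as data makes it easy to log or inspect the URL and body before sending anything to the service.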

Tools

An individual assistant can access up to 128 tools including code interpreter, as well as any custom tools you create via functions.

Create a thread

curl "https://YOUR_RESOURCE_NAME.openai.azure.com/openai/threads?api-version=2024-05-01-preview" \
  -H "Content-Type: application/json" \
  -H "api-key: $AZURE_OPENAI_API_KEY" \
  -d ''

Add a user question to the thread

curl "https://YOUR_RESOURCE_NAME.openai.azure.com/openai/threads/thread_abc123/messages?api-version=2024-05-01-preview" \
  -H "Content-Type: application/json" \
  -H "api-key: $AZURE_OPENAI_API_KEY" \
  -d '{
    "role": "user",
    "content": "I need to solve the equation `3x + 11 = 14`. Can you help me?"
  }'

Run the thread

curl "https://YOUR_RESOURCE_NAME.openai.azure.com/openai/threads/thread_abc123/runs?api-version=2024-05-01-preview" \
  -H "api-key: $AZURE_OPENAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "assistant_id": "asst_abc123"
  }'

Retrieve the status of the run

curl "https://YOUR_RESOURCE_NAME.openai.azure.com/openai/threads/thread_abc123/runs/run_abc123?api-version=2024-05-01-preview" \
  -H "api-key: $AZURE_OPENAI_API_KEY"
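With the REST API you typically repeat the status request until the run finishes, which is what `createAndPoll` does for you in the TypeScript sample. The sketch below shows one way to structure that loop. The list of status values reflects my understanding of the Assistants API and should be treated as an assumption, and `pollRun`, `isTerminal`, and the injected `getStatus` callback are hypothetical names. The callback is injected so the loop can be tried without a network call.

```typescript
// Run status values (assumed; check the API reference for your API version).
type RunStatus =
  | "queued"
  | "in_progress"
  | "requires_action"
  | "cancelling"
  | "cancelled"
  | "failed"
  | "completed"
  | "incomplete"
  | "expired";

const TERMINAL_STATUSES: ReadonlySet<RunStatus> = new Set<RunStatus>([
  "cancelled",
  "failed",
  "completed",
  "incomplete",
  "expired",
]);

function isTerminal(runStatus: RunStatus): boolean {
  return TERMINAL_STATUSES.has(runStatus);
}

// Calls getStatus until the run is terminal or needs caller action.
async function pollRun(
  getStatus: () => Promise<RunStatus>,
  intervalMs = 500,
  maxAttempts = 120
): Promise<RunStatus> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const current = await getStatus();
    if (isTerminal(current) || current === "requires_action") {
      return current;
    }
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error(`Run did not finish after ${maxAttempts} attempts.`);
}

// Simulated status sequence standing in for repeated GET requests.
const simulated: RunStatus[] = ["queued", "in_progress", "completed"];
pollRun(async () => simulated.shift() ?? "completed", 1).then((finalStatus) =>
  console.log(`Final status: ${finalStatus}`)
);
```

In practice `getStatus` would issue the GET request shown above and return the `status` field of the response.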

Assistant response

curl "https://YOUR_RESOURCE_NAME.openai.azure.com/openai/threads/thread_abc123/messages?api-version=2024-05-01-preview" \
  -H "Content-Type: application/json" \
  -H "api-key: $AZURE_OPENAI_API_KEY"
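The response to this request is a JSON list of the thread's messages, including your own question. The sketch below pulls out only the assistant's text. It assumes the response has a `data` array of messages, each with a `role` and a `content` array of typed parts, which matches the message shape shown in the TypeScript sample output but is trimmed here. `assistantTexts` and the local types are hypothetical names.

```typescript
// Trimmed local types for the parts of the response this sketch reads.
type TextPart = { type: "text"; text: { value: string } };
type ImagePart = { type: "image_file" };
type ThreadMessage = {
  role: "user" | "assistant";
  content: Array<TextPart | ImagePart>;
};
type MessageListResponse = { data: ThreadMessage[] };

// Returns the text of every assistant message, in the order received.
function assistantTexts(responseJson: string): string[] {
  const list = JSON.parse(responseJson) as MessageListResponse;
  const texts: string[] = [];
  for (const message of list.data) {
    if (message.role !== "assistant") continue;
    for (const part of message.content) {
      if (part.type === "text") texts.push(part.text.value);
    }
  }
  return texts;
}

// Hand-written sample response, for illustration only.
const sampleResponse = JSON.stringify({
  data: [
    {
      role: "assistant",
      content: [{ type: "text", text: { value: "The solution is x = 1." } }],
    },
    {
      role: "user",
      content: [{ type: "text", text: { value: "Can you help me?" } }],
    },
  ],
});
console.log(assistantTexts(sampleResponse));
// prints [ 'The solution is x = 1.' ]
```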

Understanding your results

In this example, we create an assistant with code interpreter enabled. When we ask the assistant a math question, it translates the question into Python code and executes the code in a sandboxed environment to determine the answer. The code the model creates and tests to arrive at an answer is:

from sympy import symbols, Eq, solve

# Define the variable
x = symbols('x')

# Define the equation
equation = Eq(3*x + 11, 14)

# Solve the equation
solution = solve(equation, x)
solution

It is important to remember that while code interpreter gives the model the capability to respond to more complex queries by converting the questions into code and running that code iteratively in the Python sandbox until it reaches a solution, you still need to validate the response to confirm that the model correctly translated your question into a valid representation in code.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

See also

- Learn more about how to use Assistants with our How-to guide on Assistants.

- Azure OpenAI Assistants API samples
