Quickstart: Get started using GPT-35-Turbo and GPT-4 with Azure OpenAI Service

Use this article to get started using Azure OpenAI.

Prerequisites

An Azure subscription - Create one for free.

Access granted to Azure OpenAI in the desired Azure subscription.

Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI by completing the form at https://aka.ms/oai/access. Open an issue on this repo to contact us if you have an issue.

An Azure OpenAI Service resource with either the gpt-35-turbo or the gpt-4 models deployed. For more information about model deployment, see the resource deployment guide.

Tip

Try out the new unified Azure AI Studio (preview) which brings together capabilities from across multiple Azure AI services.

Go to Azure OpenAI Studio

Navigate to Azure OpenAI Studio at https://oai.azure.com/ and sign-in with credentials that have access to your OpenAI resource. During or after the sign-in workflow, select the appropriate directory, Azure subscription, and Azure OpenAI resource.

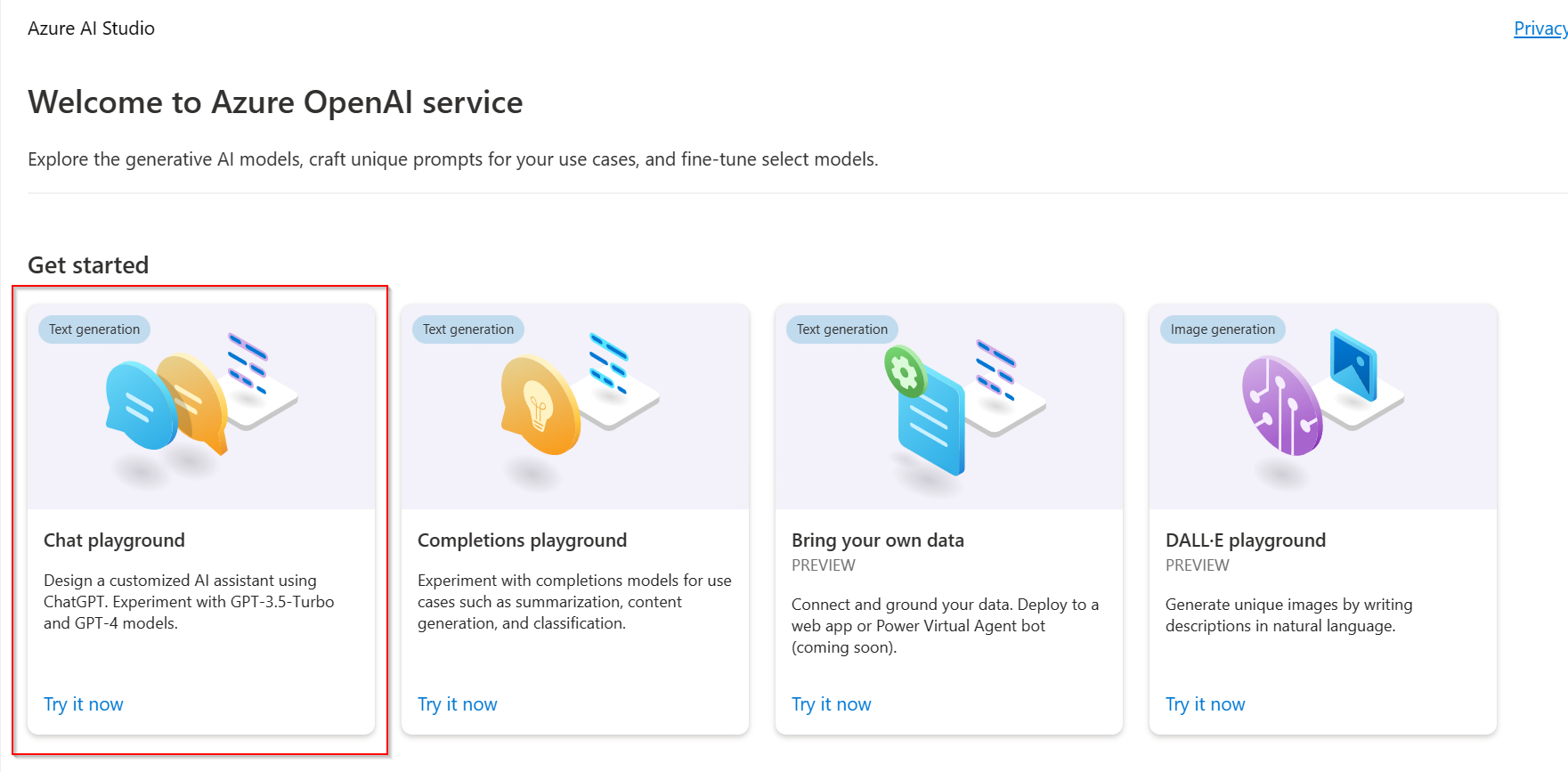

From the Azure OpenAI Studio landing page, select Chat playground.

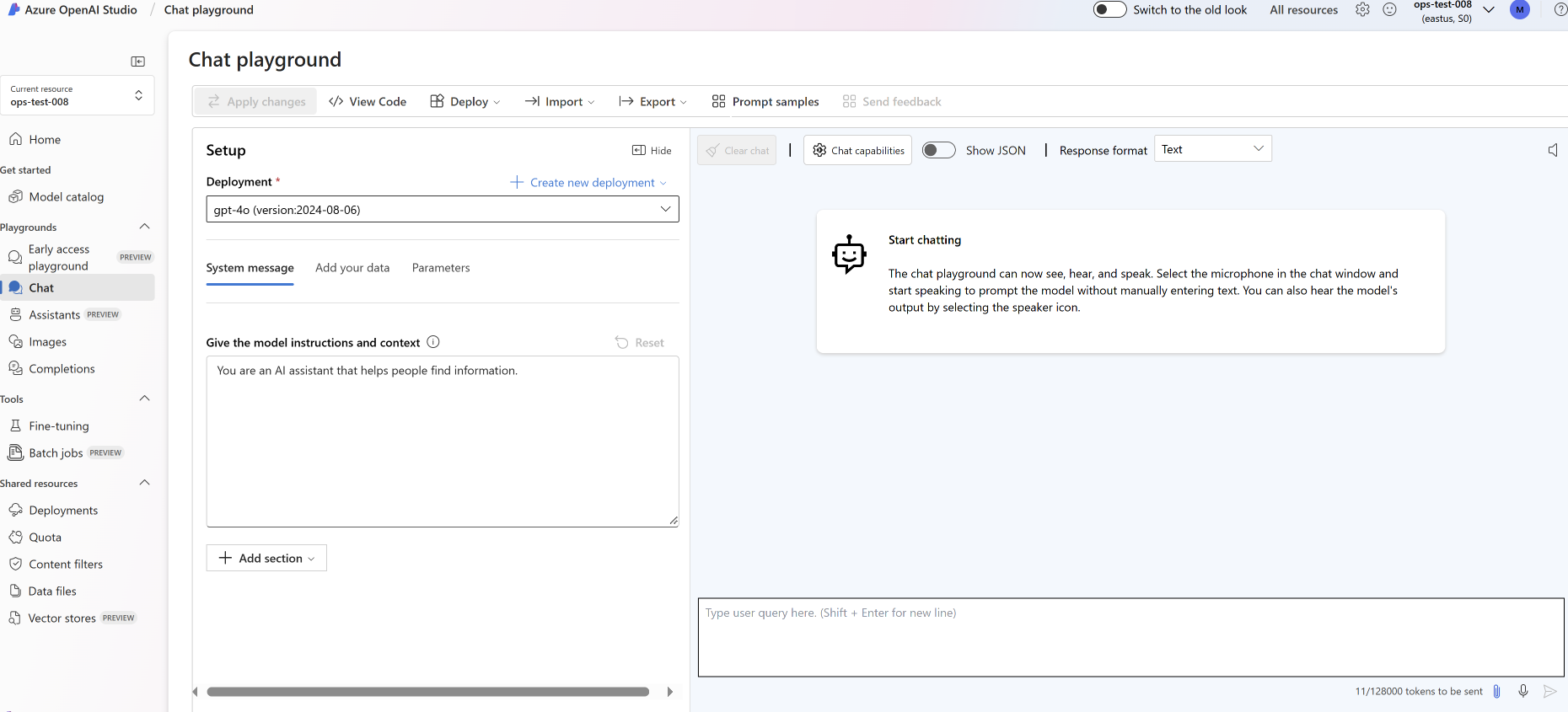

Playground

Start exploring OpenAI capabilities with a no-code approach through the Azure OpenAI Studio Chat playground. From this page, you can quickly iterate and experiment with the capabilities.

Assistant setup

You can use the Assistant setup dropdown to select a few pre-loaded System message examples to get started.

System messages give the model instructions about how it should behave and any context it should reference when generating a response. You can describe the assistant's personality, tell it what it should and shouldn't answer, and tell it how to format responses.

Add few-shot examples allows you to provide conversational examples that are used by the model for in-context learning.
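The relationship between the system message, few-shot examples, and the user's query can be sketched as a plain list of role-tagged messages. This is a minimal illustration, not the playground's actual implementation: the helper name `build_messages` and the shortened example texts are assumptions made for this sketch.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(
    system_message: str,
    few_shot_examples: List[Tuple[str, str]],
    user_query: str,
) -> List[Message]:
    """Assemble a chat prompt: system message, few-shot pairs, then the new query."""
    messages: List[Message] = [{"role": "system", "content": system_message}]
    # Each few-shot example is a (user text, ideal assistant reply) pair.
    for user_text, assistant_text in few_shot_examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    # The real question always comes last.
    messages.append({"role": "user", "content": user_query})
    return messages


messages = build_messages(
    system_message="You are an Xbox customer support agent. You are friendly and concise.",
    few_shot_examples=[
        ("How much is a PS5?", "I can only help with questions about Xbox devices."),
    ],
    user_query="I'm interested in buying a new Xbox",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Because the few-shot pairs are ordinary user and assistant messages, they count toward the prompt's token usage just like real conversation history.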

At any time while using the Chat playground you can select View code to see Python, curl, and json code samples pre-populated based on your current chat session and settings selections. You can then take this code and write an application to complete the same task you're currently performing with the playground.

Chat session

Selecting the Send button sends the entered text to the chat completions API, and the results are returned to the text box.

Select the Clear chat button to delete the current conversation history.

Settings

| Name | Description |

|---|---|

| Deployments | Your deployment name that is associated with a specific model. |

| Temperature | Controls randomness. Lowering the temperature means that the model produces more repetitive and deterministic responses. Increasing the temperature results in more unexpected or creative responses. Try adjusting temperature or Top P but not both. |

| Max length (tokens) | Set a limit on the number of tokens per model response. The API supports a maximum of 4096 tokens shared between the prompt (including system message, examples, message history, and user query) and the model's response. One token is roughly four characters for typical English text. |

| Top probabilities | Similar to temperature, this controls randomness but uses a different method. Lowering Top P narrows the model’s token selection to likelier tokens. Increasing Top P lets the model choose from tokens with both high and low likelihood. Try adjusting temperature or Top P but not both. |

| Multi-turn conversations | Select the number of past messages to include in each new API request. This helps give the model context for new user queries. Setting this number to 10 results in five user queries and five system responses. |

| Stop sequences | Stop sequences make the model end its response at a desired point. The model response ends before the specified sequence, so it won't contain the stop sequence text. For GPT-35-Turbo, using <|im_end|> ensures that the model response doesn't generate a follow-up user query. You can include as many as four stop sequences. |
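Two of the settings above lend themselves to a short sketch: the past-messages window and the token limit shared between prompt and response. The code below is a rough illustration under stated assumptions. The helper names are hypothetical, and the four-characters-per-token rule is only the approximation quoted in the table, not a real tokenizer.

```python
import math
from typing import Dict, List

Message = Dict[str, str]

CONTEXT_LIMIT_TOKENS = 4096  # shared prompt + response limit quoted in the table
CHARS_PER_TOKEN = 4          # rough rule of thumb for English text


def estimate_tokens(text: str) -> int:
    """Approximate token count: about one token per four characters."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def select_history(history: List[Message], past_messages: int) -> List[Message]:
    """Keep only the most recent `past_messages` entries of the conversation."""
    if past_messages <= 0:
        return []
    return history[-past_messages:]


def response_budget(system_message: str, history: List[Message], user_query: str) -> int:
    """Estimate how many tokens remain for the model's response."""
    used = estimate_tokens(system_message) + estimate_tokens(user_query)
    used += sum(estimate_tokens(m["content"]) for m in history)
    return max(0, CONTEXT_LIMIT_TOKENS - used)


# Six user/assistant exchanges, twelve messages in total.
history = []
for turn in range(6):
    history.append({"role": "user", "content": f"question {turn}"})
    history.append({"role": "assistant", "content": f"answer {turn}"})

recent = select_history(history, past_messages=10)
print(len(recent), "messages kept")
print(response_budget("You are a helpful assistant.", recent, "Which models are 4K?"))
```

For an exact count you would need the tokenizer that matches the deployed model; the estimate here is only useful for quick sanity checks.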

Show panels

By default there are three panels: assistant setup, chat session, and settings. Show panels allows you to add, remove, and rearrange the panels. If you ever close a panel and need to get it back, use Show panels to restore the lost panel.

Start a chat session

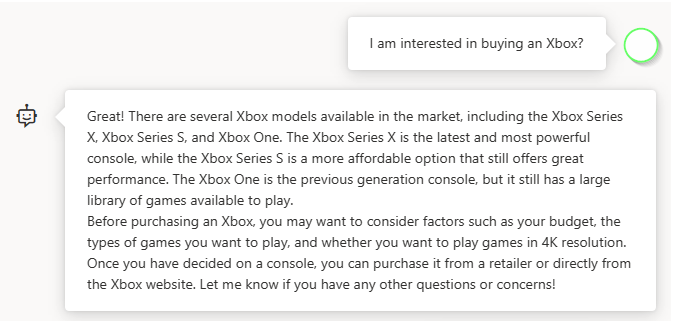

From the assistant setup drop-down, select Xbox customer support agent.

When you're prompted to confirm that you want to update the system message, select Continue.

In the chat session pane, enter the following question: "I'm interested in buying a new Xbox", and select Send.

You'll receive a response similar to:

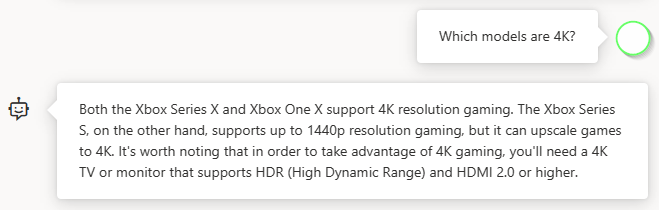

Enter a follow-up question like: "which models support 4K?"

Now that you have a basic conversation, select View code from under Assistant setup to see a replay of the code behind the entire conversation so far:

#Note: The openai-python library support for Azure OpenAI is in preview.

import os

import openai

openai.api_type = "azure"

openai.api_base = "https://docs-test-001.openai.azure.com/"

openai.api_version = "2024-02-01"

openai.api_key = os.getenv("OPENAI_API_KEY")

response = openai.ChatCompletion.create(

engine="gpt-35-turbo", # replace this value with the deployment name you chose when you deployed the associated model.

messages = [{"role":"system","content":"You are an Xbox customer support agent whose primary goal is to help users with issues they are experiencing with their Xbox devices. You are friendly and concise. You only provide factual answers to queries, and do not provide answers that are not related to Xbox."},{"role":"user","content":"How much is a PS5?"},{"role":"assistant","content":"I apologize, but I do not have information about the prices of other gaming devices such as the PS5. My primary focus is to assist with issues regarding Xbox devices. Is there a specific issue you are having with your Xbox device that I may be able to help with?"},{"role":"user","content":"I am interested in buying an Xbox?"},{"role":"assistant","content":"Great! There are several Xbox models available in the market, including the Xbox Series X, Xbox Series S, and Xbox One. The Xbox Series X is the latest and most powerful console, while the Xbox Series S is a more affordable option that still offers great performance. The Xbox One is the previous generation console, but it still has a large library of games available to play.\n\nBefore purchasing an Xbox, you may want to consider factors such as your budget, the types of games you want to play, and whether you want to play games in 4K resolution. Once you have decided on a console, you can purchase it from a retailer or directly from the Xbox website. Let me know if you have any other questions or concerns!"},{"role":"user","content":"Which models are 4K?"},{"role":"assistant","content":"Both the Xbox Series X and Xbox One X support 4K resolution gaming. The Xbox Series S, on the other hand, supports up to 1440p resolution gaming, but it can upscale games to 4K. It's worth noting that in order to take advantage of 4K gaming, you'll need a 4K TV or monitor that supports HDR (High Dynamic Range) and HDMI 2.0 or higher."}],

temperature=0,

max_tokens=350,

top_p=0.95,

frequency_penalty=0,

presence_penalty=0,

stop=None)
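View code also offers curl and JSON versions of the same request. The sketch below builds the equivalent HTTP request with only the Python standard library, without sending it. The URL pattern and the `api-key` header follow the Azure OpenAI REST conventions as I understand them; the helper name `build_chat_request` and the placeholder key are assumptions for illustration.

```python
import json
import urllib.request
from typing import Dict, List


def build_chat_request(
    endpoint: str,
    deployment: str,
    api_key: str,
    messages: List[Dict[str, str]],
    api_version: str = "2024-02-01",
) -> urllib.request.Request:
    """Build (but do not send) a chat completions request for a deployment."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    # Same sampling settings as the playground sample above.
    body = {"messages": messages, "temperature": 0, "max_tokens": 350, "top_p": 0.95}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


request = build_chat_request(
    endpoint="https://docs-test-001.openai.azure.com/",
    deployment="gpt-35-turbo",  # replace with your own deployment name
    api_key="PLACEHOLDER_KEY",  # never hard-code a real key
    messages=[{"role": "user", "content": "Which models are 4K?"}],
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it; that step is left out so the sketch runs without a live resource.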

Understanding the prompt structure

If you examine the sample from View code you'll notice some unique tokens that weren't part of a typical GPT completion call. GPT-35-Turbo was trained to use special tokens to delineate different parts of the prompt. Content is provided to the model in between <|im_start|> and <|im_end|> tokens. The prompt begins with a system message that can be used to prime the model by including context or instructions for the model. After that, the prompt contains a series of messages between the user and the assistant.

The assistant's response to the prompt will then be returned below the <|im_start|>assistant token and will end with <|im_end|> denoting that the assistant has finished its response. You can also use the Show raw syntax toggle button to display these tokens within the chat session panel.
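To make the raw syntax concrete, the following sketch renders a message list into a token-delimited prompt and cuts a completion at a stop sequence. The exact whitespace layout between the tokens is an assumption, and both helper names are hypothetical; treat this as an illustration of the idea rather than the service's actual serialization.

```python
from typing import Dict, List

IM_START = "<|im_start|>"
IM_END = "<|im_end|>"


def render_raw_prompt(messages: List[Dict[str, str]]) -> str:
    """Wrap each message in start/end tokens and leave the assistant turn open."""
    parts = [f"{IM_START}{m['role']}\n{m['content']}\n{IM_END}" for m in messages]
    # The model is expected to continue from here with the assistant's reply.
    parts.append(f"{IM_START}assistant\n")
    return "\n".join(parts)


def truncate_at_stop(text: str, stop_sequences: List[str]) -> str:
    """Return the text before the earliest stop sequence, excluding the sequence."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


prompt = render_raw_prompt(
    [
        {"role": "system", "content": "You are an Xbox customer support agent."},
        {"role": "user", "content": "Which models are 4K?"},
    ]
)
print(prompt)

raw_completion = "The Xbox Series X supports 4K.\n<|im_end|>\n<|im_start|>user\nThanks"
print(truncate_at_stop(raw_completion, [IM_END]))
```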

The GPT-35-Turbo & GPT-4 how-to guide provides an in-depth introduction into the new prompt structure and how to use the gpt-35-turbo model effectively.

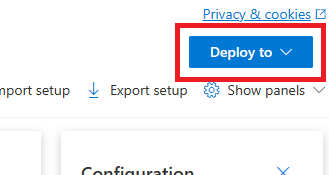

Deploy your model

Once you're satisfied with the experience in Azure OpenAI Studio, you can deploy a web app directly from the Studio by selecting the Deploy to button.

This gives you the option to either deploy to a standalone web application, or a copilot in Copilot Studio (preview) if you're using your own data on the model.

As an example, if you choose to deploy a web app:

The first time you deploy a web app, you should select Create a new web app. Choose a name for the app, which will become part of the app URL. For example, https://<appname>.azurewebsites.net.

Select your subscription, resource group, location, and pricing plan for the published app. To update an existing app, select Publish to an existing web app and choose the name of your previous app from the dropdown menu.

If you choose to deploy a web app, see the important considerations for using it.

Clean up resources

Once you're done testing out the Chat playground, if you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Next steps

- Learn more about how to work with the new gpt-35-turbo model with the GPT-35-Turbo & GPT-4 how-to guide.
- For more examples, check out the Azure OpenAI Samples GitHub repository.

Source code | Package (NuGet) | Samples | Retrieval Augmented Generation (RAG) enterprise chat template

Prerequisites

- An Azure subscription - Create one for free

- Access granted to the Azure OpenAI service in the desired Azure subscription. Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI Service by completing the form at https://aka.ms/oai/access.

- The .NET 7 SDK

- An Azure OpenAI Service resource with either the gpt-35-turbo or the gpt-4 models deployed. For more information about model deployment, see the resource deployment guide.

Set up

Create a new .NET Core application

In a console window (such as cmd, PowerShell, or Bash), use the dotnet new command to create a new console app with the name azure-openai-quickstart. This command creates a simple "Hello World" project with a single C# source file: Program.cs.

dotnet new console -n azure-openai-quickstart

Change your directory to the newly created app folder. You can build the application with:

dotnet build

The build output should contain no warnings or errors.

...

Build succeeded.

0 Warning(s)

0 Error(s)

...

Install the OpenAI .NET client library with:

dotnet add package Azure.AI.OpenAI --prerelease

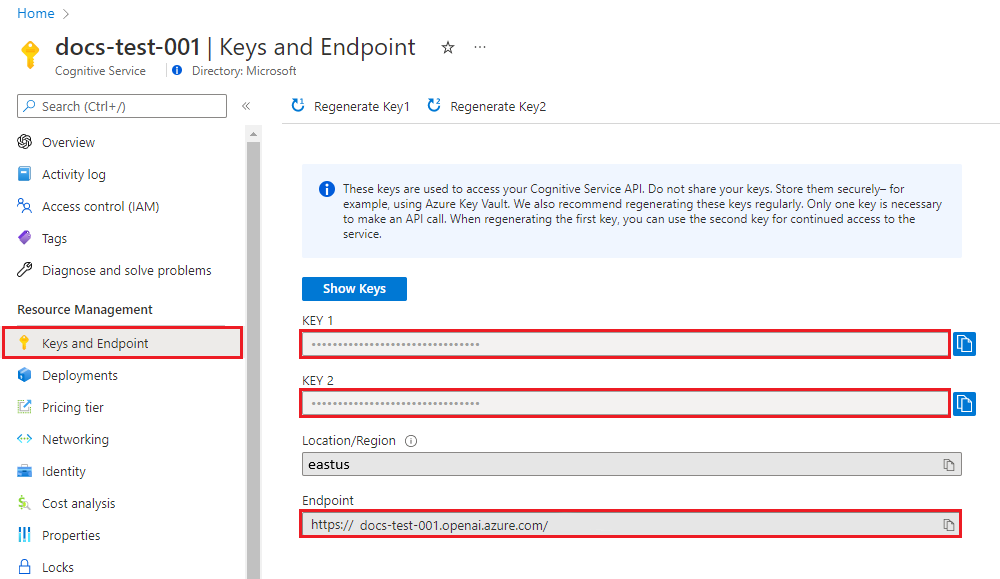

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in the Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"
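Before making any API call, it can help to confirm that both variables are actually visible to your process (a new console window is typically needed after `setx`). The sketch below shows one way to do that check, written in Python like the playground sample earlier in this article. The function name `load_azure_openai_settings` and the HTTPS check are assumptions for illustration.

```python
import os
from typing import Mapping, Tuple


def load_azure_openai_settings(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (endpoint, key) or raise a clear error if either is missing."""
    endpoint = env.get("AZURE_OPENAI_ENDPOINT", "").strip()
    key = env.get("AZURE_OPENAI_API_KEY", "").strip()
    missing = [
        name
        for name, value in (
            ("AZURE_OPENAI_ENDPOINT", endpoint),
            ("AZURE_OPENAI_API_KEY", key),
        )
        if not value
    ]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    if not endpoint.startswith("https://"):
        raise RuntimeError("AZURE_OPENAI_ENDPOINT should be an https:// URL")
    return endpoint, key


# A dictionary stands in for the real environment so the sketch runs anywhere.
example_env = {
    "AZURE_OPENAI_ENDPOINT": "https://docs-test-001.openai.azure.com/",
    "AZURE_OPENAI_API_KEY": "PLACEHOLDER_KEY",
}
endpoint, key = load_azure_openai_settings(example_env)
print("Endpoint:", endpoint)
print("Key loaded:", bool(key))  # avoid printing the key itself
```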

Create a sample application

From the project directory, open the Program.cs file and replace its contents with the following code:

Without response streaming

using Azure;

using Azure.AI.OpenAI;

using static System.Environment;

string endpoint = GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT");

string key = GetEnvironmentVariable("AZURE_OPENAI_API_KEY");

OpenAIClient client = new(new Uri(endpoint), new AzureKeyCredential(key));

var chatCompletionsOptions = new ChatCompletionsOptions()

{

DeploymentName = "gpt-35-turbo", //This must match the custom deployment name you chose for your model

Messages =

{

new ChatRequestSystemMessage("You are a helpful assistant."),

new ChatRequestUserMessage("Does Azure OpenAI support customer managed keys?"),

new ChatRequestAssistantMessage("Yes, customer managed keys are supported by Azure OpenAI."),

new ChatRequestUserMessage("Do other Azure AI services support this too?"),

},

MaxTokens = 100

};

Response<ChatCompletions> response = client.GetChatCompletions(chatCompletionsOptions);

Console.WriteLine(response.Value.Choices[0].Message.Content);

Console.WriteLine();

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

dotnet run program.cs

Output

Yes, many of the Azure AI services support customer managed keys. Some examples include Text Analytics, Speech Services, and Translator. However, it's important to note that not all services support customer managed keys, so it's best to check the documentation for each individual service to see if it is supported.

This will wait until the model has generated its entire response before printing the results. Alternatively, if you want to asynchronously stream the response and print the results, you can replace the contents of program.cs with the code in the next example.

Async with streaming

using Azure;

using Azure.AI.OpenAI;

using static System.Environment;

string endpoint = GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT");

string key = GetEnvironmentVariable("AZURE_OPENAI_API_KEY");

OpenAIClient client = new(new Uri(endpoint), new AzureKeyCredential(key));

var chatCompletionsOptions = new ChatCompletionsOptions()

{

DeploymentName= "gpt-35-turbo", //This must match the custom deployment name you chose for your model

Messages =

{

new ChatRequestSystemMessage("You are a helpful assistant."),

new ChatRequestUserMessage("Does Azure OpenAI support customer managed keys?"),

new ChatRequestAssistantMessage("Yes, customer managed keys are supported by Azure OpenAI."),

new ChatRequestUserMessage("Do other Azure AI services support this too?"),

},

MaxTokens = 100

};

await foreach (StreamingChatCompletionsUpdate chatUpdate in client.GetChatCompletionsStreaming(chatCompletionsOptions))

{

if (chatUpdate.Role.HasValue)

{

Console.Write($"{chatUpdate.Role.Value.ToString().ToUpperInvariant()}: ");

}

if (!string.IsNullOrEmpty(chatUpdate.ContentUpdate))

{

Console.Write(chatUpdate.ContentUpdate);

}

}
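The streaming loop above prints the role once and then each content fragment as it arrives. The same consumption pattern can be sketched without calling the service by simulating the updates with a generator. Everything here is a stand-in: `simulated_updates` and the `(role, fragment)` tuple shape are assumptions chosen to mirror the Role and ContentUpdate checks in the C# sample.

```python
from typing import Iterable, Iterator, List, Optional, Tuple

# (role, content fragment); either part may be absent in a given update.
Update = Tuple[Optional[str], Optional[str]]


def simulated_updates() -> Iterator[Update]:
    """Stand-in for a streaming response: a role first, then text fragments."""
    yield ("assistant", None)
    for fragment in ["Yes, ", "many Azure AI services ", "support customer managed keys."]:
        yield (None, fragment)


def consume_stream(updates: Iterable[Update]) -> str:
    """Print fragments as they arrive and return the assembled message."""
    pieces: List[str] = []
    for role, fragment in updates:
        if role is not None:
            print(f"{role.upper()}: ", end="", flush=True)
        if fragment:
            print(fragment, end="", flush=True)
            pieces.append(fragment)
    print()
    return "".join(pieces)


full_text = consume_stream(simulated_updates())
```

Collecting the fragments as you print them gives you the complete reply at the end, which you need if you want to add it to the conversation history.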

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Package (Go) | Samples

Prerequisites

- An Azure subscription - Create one for free

- Access granted to the Azure OpenAI service in the desired Azure subscription. Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI Service by completing the form at https://aka.ms/oai/access.

- Go 1.21.0 or higher installed locally.

- An Azure OpenAI Service resource with the gpt-35-turbo model deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in the Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

Create a sample application

Create a new file named chat_completions.go. Copy the following code into the chat_completions.go file.

package main

import (

"context"

"fmt"

"log"

"os"

"github.com/Azure/azure-sdk-for-go/sdk/ai/azopenai"

"github.com/Azure/azure-sdk-for-go/sdk/azcore/to"

)

func main() {

azureOpenAIKey := os.Getenv("AZURE_OPENAI_API_KEY")

//modelDeploymentID = deployment name, if model name and deployment name do not match change this value to name chosen when you deployed the model.

modelDeploymentID := "gpt-35-turbo"

// Ex: "https://<your-azure-openai-host>.openai.azure.com"

azureOpenAIEndpoint := os.Getenv("AZURE_OPENAI_ENDPOINT")

if azureOpenAIKey == "" || modelDeploymentID == "" || azureOpenAIEndpoint == "" {

fmt.Fprintf(os.Stderr, "Skipping example, environment variables missing\n")

return

}

keyCredential, err := azopenai.NewKeyCredential(azureOpenAIKey)

if err != nil {

// TODO: Update the following line with your application specific error handling logic

log.Fatalf("ERROR: %s", err)

}

client, err := azopenai.NewClientWithKeyCredential(azureOpenAIEndpoint, keyCredential, nil)

if err != nil {

// TODO: Update the following line with your application specific error handling logic

log.Fatalf("ERROR: %s", err)

}

// This is a conversation in progress.

// NOTE: all messages, regardless of role, count against token usage for this API.

messages := []azopenai.ChatMessage{

// You set the tone and rules of the conversation with a prompt as the system role.

{Role: to.Ptr(azopenai.ChatRoleSystem), Content: to.Ptr("You are a helpful assistant.")},

// The user asks a question

{Role: to.Ptr(azopenai.ChatRoleUser), Content: to.Ptr("Does Azure OpenAI support customer managed keys?")},

// The reply would come back from the Azure OpenAI model. You'd add it to the conversation so we can maintain context.

{Role: to.Ptr(azopenai.ChatRoleAssistant), Content: to.Ptr("Yes, customer managed keys are supported by Azure OpenAI")},

// The user answers the question based on the latest reply.

{Role: to.Ptr(azopenai.ChatRoleUser), Content: to.Ptr("Do other Azure AI services support this too?")},

// from here you'd keep iterating, sending responses back from the chat completions API

}

resp, err := client.GetChatCompletions(context.TODO(), azopenai.ChatCompletionsOptions{

// This is a conversation in progress.

// NOTE: all messages count against token usage for this API.

Messages: messages,

Deployment: modelDeploymentID,

}, nil)

if err != nil {

// TODO: Update the following line with your application specific error handling logic

log.Fatalf("ERROR: %s", err)

}

for _, choice := range resp.Choices {

fmt.Fprintf(os.Stderr, "Content[%d]: %s\n", *choice.Index, *choice.Message.Content)

}

}

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Now open a command prompt and run:

go mod init chat_completions.go

Next run:

go mod tidy

go run chat_completions.go

Output

Content[0]: Yes, many Azure AI services also support customer managed keys. These services enable you to bring your own encryption keys for data at rest, which provides you with more control over the security of your data.
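The comments in the sample describe an ongoing loop: each assistant reply is appended to the message list before the next user turn is sent, so the model keeps its context. A minimal sketch of that loop follows, written in Python like the playground sample. The `ChatSession` class and the `fake_complete` function are hypothetical; `fake_complete` stands in for a real chat completions call so the example runs offline.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
CompleteFn = Callable[[List[Message]], str]


class ChatSession:
    """Keeps the running message list so each request carries prior context."""

    def __init__(self, system_message: str, complete: CompleteFn) -> None:
        self.messages: List[Message] = [{"role": "system", "content": system_message}]
        self._complete = complete

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        # Send a copy of the whole history, then record the reply for next time.
        reply = self._complete(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_complete(messages: List[Message]) -> str:
    """Stand-in for a real API call; reports how much context it received."""
    return f"(reply after seeing {len(messages)} messages)"


session = ChatSession("You are a helpful assistant.", fake_complete)
first_reply = session.ask("Does Azure OpenAI support customer managed keys?")
second_reply = session.ask("Do other Azure AI services support this too?")
print(first_reply)
print(second_reply)
```

Injecting the completion function keeps the history logic separate from the client library, so the same class could wrap any of the SDK calls shown in this article.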

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Artifact (Maven) | Samples | Retrieval Augmented Generation (RAG) enterprise chat template | IntelliJ IDEA

Prerequisites

- An Azure subscription - Create one for free

- Access granted to the Azure OpenAI service in the desired Azure subscription. Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI Service by completing the form at https://aka.ms/oai/access.

- The current version of the Java Development Kit (JDK)

- The Gradle build tool, or another dependency manager.

- An Azure OpenAI Service resource with either the gpt-35-turbo or the gpt-4 models deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in the Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

Create a new Java application

Create a new Gradle project.

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app, and navigate to it.

mkdir myapp && cd myapp

Run the gradle init command from your working directory. This command will create essential build files for Gradle, including build.gradle.kts, which is used at runtime to create and configure your application.

gradle init --type basic

When prompted to choose a DSL, select Kotlin.

Install the Java SDK

This quickstart uses the Gradle dependency manager. You can find the client library and information for other dependency managers on the Maven Central Repository.

Locate build.gradle.kts and open it with your preferred IDE or text editor. Then copy in the following build configuration. This configuration defines the project as a Java application whose entry point is the class OpenAIQuickstart. It imports the Azure OpenAI client library.

plugins {

java

application

}

application {

mainClass.set("OpenAIQuickstart")

}

repositories {

mavenCentral()

}

dependencies {

implementation(group = "com.azure", name = "azure-ai-openai", version = "1.0.0-beta.3")

implementation("org.slf4j:slf4j-simple:1.7.9")

}

Create a sample application

Create a Java file.

From your working directory, run the following command to create a project source folder:

mkdir -p src/main/java

Navigate to the new folder and create a file called OpenAIQuickstart.java.

Open OpenAIQuickstart.java in your preferred editor or IDE and paste in the following code.

import com.azure.ai.openai.OpenAIClient;
import com.azure.ai.openai.OpenAIClientBuilder;
import com.azure.ai.openai.models.ChatChoice;
import com.azure.ai.openai.models.ChatCompletions;
import com.azure.ai.openai.models.ChatCompletionsOptions;
import com.azure.ai.openai.models.ChatMessage;
import com.azure.ai.openai.models.ChatRole;
import com.azure.ai.openai.models.CompletionsUsage;
import com.azure.core.credential.AzureKeyCredential;

import java.util.ArrayList;
import java.util.List;

// The class name matches the file name (OpenAIQuickstart.java) and the mainClass set in build.gradle.kts.
public class OpenAIQuickstart {

    public static void main(String[] args) {
        String azureOpenaiKey = System.getenv("AZURE_OPENAI_API_KEY");
        String endpoint = System.getenv("AZURE_OPENAI_ENDPOINT");
        // This must match the deployment name you chose when you deployed the model.
        String deploymentOrModelId = "gpt-35-turbo";

        OpenAIClient client = new OpenAIClientBuilder()
            .endpoint(endpoint)
            .credential(new AzureKeyCredential(azureOpenaiKey))
            .buildClient();

        // The conversation so far, ending with the user's newest question.
        List<ChatMessage> chatMessages = new ArrayList<>();
        chatMessages.add(new ChatMessage(ChatRole.SYSTEM, "You are a helpful assistant"));
        chatMessages.add(new ChatMessage(ChatRole.USER, "Does Azure OpenAI support customer managed keys?"));
        chatMessages.add(new ChatMessage(ChatRole.ASSISTANT, "Yes, customer managed keys are supported by Azure OpenAI."));
        chatMessages.add(new ChatMessage(ChatRole.USER, "Do other Azure AI services support this too?"));

        ChatCompletions chatCompletions = client.getChatCompletions(deploymentOrModelId, new ChatCompletionsOptions(chatMessages));

        System.out.printf("Model ID=%s is created at %s.%n", chatCompletions.getId(), chatCompletions.getCreatedAt());
        for (ChatChoice choice : chatCompletions.getChoices()) {
            ChatMessage message = choice.getMessage();
            System.out.printf("Index: %d, Chat Role: %s.%n", choice.getIndex(), message.getRole());
            System.out.println("Message:");
            System.out.println(message.getContent());
        }

        System.out.println();
        CompletionsUsage usage = chatCompletions.getUsage();
        System.out.printf("Usage: number of prompt token is %d, "
                + "number of completion token is %d, and number of total tokens in request and response is %d.%n",
            usage.getPromptTokens(), usage.getCompletionTokens(), usage.getTotalTokens());
    }
}

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Navigate back to the project root folder, and build the app with:

gradle build

Then, run it with the gradle run command:

gradle run

Output

Model ID=chatcmpl-7JYnyE4zpd5gaIfTRH7hNpeVsvAw4 is created at 1684896378.

Index: 0, Chat Role: assistant.

Message:

Yes, most of the Azure AI services support customer managed keys. However, there may be some exceptions, so it is best to check the documentation of each specific service to confirm.

Usage: number of prompt token is 59, number of completion token is 36, and number of total tokens in request and response is 95.
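The usage line is simple arithmetic: the total is the prompt tokens plus the completion tokens (59 + 36 = 95 in the output above). The sketch below, in Python like the playground sample, records those numbers and estimates the remaining room under a context limit. The `Usage` class is hypothetical, and the 4096-token limit is the figure quoted in the playground settings table; your deployed model's limit may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    """Token counts reported for one chat completions call."""

    prompt_tokens: int
    completion_tokens: int

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    def remaining(self, context_limit: int = 4096) -> int:
        """Tokens left under the limit shared by prompt and response."""
        return max(0, context_limit - self.total_tokens)


usage = Usage(prompt_tokens=59, completion_tokens=36)
print("Total tokens:", usage.total_tokens)
print("Remaining under limit:", usage.remaining())
```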

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Artifacts (Maven) | Sample

Prerequisites

- An Azure subscription - Create one for free

- Access granted to the Azure OpenAI service in the desired Azure subscription. Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI Service by completing the form at https://aka.ms/oai/access.

- The current version of the Java Development Kit (JDK)

- The Spring Boot CLI tool

- An Azure OpenAI Service resource with the gpt-35-turbo model deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in the Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Note

Spring AI defaults the model name to gpt-35-turbo. It's only necessary to provide the SPRING_AI_AZURE_OPENAI_MODEL value if you've deployed a model with a different name.

export SPRING_AI_AZURE_OPENAI_API_KEY="REPLACE_WITH_YOUR_KEY_VALUE_HERE"

export SPRING_AI_AZURE_OPENAI_ENDPOINT="REPLACE_WITH_YOUR_ENDPOINT_HERE"

export SPRING_AI_AZURE_OPENAI_MODEL="REPLACE_WITH_YOUR_MODEL_NAME_HERE"

Create a new Spring application

Create a new Spring project.

In a Bash window, create a new directory for your app, and navigate to it.

mkdir ai-chat-demo && cd ai-chat-demo

Run the spring init command from your working directory. This command creates a standard directory structure for your Spring project including the main Java class source file and the pom.xml file used for managing Maven based projects.

spring init -a ai-chat-demo -n AIChat --force --build maven -x

The generated files and folders resemble the following structure:

ai-chat-demo/
|-- pom.xml
|-- mvnw
|-- mvnw.cmd
|-- HELP.md
|-- src/
    |-- main/
    |   |-- resources/
    |   |   |-- application.properties
    |   |-- java/
    |       |-- com/
    |           |-- example/
    |               |-- aichatdemo/
    |                   |-- AiChatApplication.java
    |-- test/
        |-- java/
            |-- com/
                |-- example/
                    |-- aichatdemo/
                        |-- AiChatApplicationTests.java

Edit Spring application

Edit the pom.xml file.

From the root of the project directory, open the pom.xml file in your preferred editor or IDE and overwrite the file with the following content:

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.2.0</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.example</groupId>
    <artifactId>ai-chat-demo</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>AIChat</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>17</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.experimental.ai</groupId>
            <artifactId>spring-ai-azure-openai-spring-boot-starter</artifactId>
            <version>0.7.0-SNAPSHOT</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
    <repositories>
        <repository>
            <id>spring-snapshots</id>
            <name>Spring Snapshots</name>
            <url>https://repo.spring.io/snapshot</url>
            <releases>
                <enabled>false</enabled>
            </releases>
        </repository>
    </repositories>
</project>

From the src/main/java/com/example/aichatdemo folder, open AiChatApplication.java in your preferred editor or IDE and paste in the following code:

package com.example.aichatdemo;

import java.util.ArrayList;
import java.util.List;

import org.springframework.ai.client.AiClient;
import org.springframework.ai.prompt.Prompt;
import org.springframework.ai.prompt.messages.ChatMessage;
import org.springframework.ai.prompt.messages.Message;
import org.springframework.ai.prompt.messages.MessageType;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class AiChatApplication implements CommandLineRunner {

    private static final String ROLE_INFO_KEY = "role";

    @Autowired
    private AiClient aiClient;

    public static void main(String[] args) {
        SpringApplication.run(AiChatApplication.class, args);
    }

    @Override
    public void run(String... args) throws Exception {
        System.out.println(String.format("Sending chat prompts to AI service. One moment please...\r\n"));

        // Conversation history: system prompt, one prior exchange, and the new user question.
        final List<Message> msgs = new ArrayList<>();
        msgs.add(new ChatMessage(MessageType.SYSTEM, "You are a helpful assistant"));
        msgs.add(new ChatMessage(MessageType.USER, "Does Azure OpenAI support customer managed keys?"));
        msgs.add(new ChatMessage(MessageType.ASSISTANT, "Yes, customer managed keys are supported by Azure OpenAI."));
        msgs.add(new ChatMessage(MessageType.USER, "Do other Azure AI services support this too?"));

        final var resps = aiClient.generate(new Prompt(msgs));

        System.out.println(String.format("Prompt created %d generated response(s).", resps.getGenerations().size()));

        resps.getGenerations().stream()
            .forEach(gen -> {
                final var role = gen.getInfo().getOrDefault(ROLE_INFO_KEY, MessageType.ASSISTANT.getValue());
                System.out.println(String.format("Generated respose from \"%s\": %s", role, gen.getText()));
            });
    }
}

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Navigate back to the project root folder, and run the app by using the following command:

./mvnw spring-boot:run

Output

  .   ____          _            __ _ _
 /\\ / ___'_ __ _ _(_)_ __  __ _ \ \ \ \
( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \
 \\/  ___)| |_)| | | | | || (_| |  ) ) ) )
  '  |____| .__|_| |_|_| |_\__, | / / / /
 =========|_|==============|___/=/_/_/_/
 :: Spring Boot ::                (v3.1.5)

2023-11-07T13:31:10.884-06:00 INFO 6248 --- [ main] c.example.aichatdemo.AiChatApplication : No active profile set, falling back to 1 default profile: "default"

2023-11-07T13:31:11.595-06:00 INFO 6248 --- [ main] c.example.aichatdemo.AiChatApplication : Started AiChatApplication in 0.994 seconds (process running for 1.28)

Sending chat prompts to AI service. One moment please...

Prompt created 1 generated response(s).

Generated respose from "assistant": Yes, other Azure AI services also support customer managed keys. Azure AI Services, Azure Machine Learning, and other AI services in Azure provide options for customers to manage and control their encryption keys. This allows customers to have greater control over their data and security.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

For more examples, check out the Azure OpenAI Samples GitHub repository

Note

This article has been updated to use the latest OpenAI npm package, which now fully supports Azure OpenAI. If you are looking for code examples for the legacy Azure OpenAI JavaScript SDK, they are currently still available in this repo.

Prerequisites

- An Azure subscription - Create one for free

- Access granted to the Azure OpenAI service in the desired Azure subscription. Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI Service by completing the form at https://aka.ms/oai/access.

- LTS versions of Node.js

- An Azure OpenAI Service resource with either a gpt-35-turbo or gpt-4 series model deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in the Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

Create a Node application

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app, and navigate to it.

Install the client library

Install the required packages for JavaScript with npm from within the context of your new directory:

npm install openai dotenv @azure/identity

Your app's package.json file will be updated with the dependencies.

Create a sample application

Open a command prompt where you want the new project, and create a new file named ChatCompletion.js. Copy the following code into the ChatCompletion.js file.

const { AzureOpenAI } = require("openai");

// Load the .env file if it exists
const dotenv = require("dotenv");
dotenv.config();

// You will need to set these environment variables or edit the following values
const endpoint = process.env["AZURE_OPENAI_ENDPOINT"] || "<endpoint>";
const apiKey = process.env["AZURE_OPENAI_API_KEY"] || "<api key>";
const apiVersion = "2024-05-01-preview";
const deployment = "gpt-4o"; // This must match your deployment name.

async function main() {
  console.log("== Chat Completions Sample ==");

  const client = new AzureOpenAI({ endpoint, apiKey, apiVersion, deployment });
  const result = await client.chat.completions.create({
    messages: [
      { role: "system", content: "You are a helpful assistant." },
      { role: "user", content: "Does Azure OpenAI support customer managed keys?" },
      { role: "assistant", content: "Yes, customer managed keys are supported by Azure OpenAI." },
      { role: "user", content: "Do other Azure AI services support this too?" },
    ],
    model: "",
  });

  // Print the message returned for each choice.
  for (const choice of result.choices) {
    console.log(choice.message);
  }
}

main().catch((err) => {
  console.error("The sample encountered an error:", err);
});

module.exports = { main };

Run the script with the following command:

node.exe ChatCompletion.js

Output

== Chat Completions Sample ==

{

content: 'Yes, several other Azure AI services also support customer managed keys for enhanced security and control over encryption keys.',

role: 'assistant'

}

Microsoft Entra ID

Important

The previous example demonstrates key-based authentication. Once you have tested successfully with key-based authentication, we recommend switching to the more secure Microsoft Entra ID authentication, which is demonstrated in the next code sample. Getting started with Microsoft Entra ID requires some additional prerequisites.

const { AzureOpenAI } = require("openai");
const { DefaultAzureCredential, getBearerTokenProvider } = require("@azure/identity");

// Set AZURE_OPENAI_ENDPOINT to the endpoint of your
// OpenAI resource. You can find this in the Azure portal.
// Load the .env file if it exists
require("dotenv/config");

async function main() {
  console.log("== Chat Completions Sample ==");

  const scope = "https://cognitiveservices.azure.com/.default";
  const azureADTokenProvider = getBearerTokenProvider(new DefaultAzureCredential(), scope);
  const deployment = "gpt-35-turbo";
  const apiVersion = "2024-04-01-preview";
  const client = new AzureOpenAI({ azureADTokenProvider, deployment, apiVersion });
  const result = await client.chat.completions.create({
    messages: [
      { role: "system", content: "You are a helpful assistant." },
      { role: "user", content: "Does Azure OpenAI support customer managed keys?" },
      { role: "assistant", content: "Yes, customer managed keys are supported by Azure OpenAI." },
      { role: "user", content: "Do other Azure AI services support this too?" },
    ],
    model: "",
  });

  // Print the message returned for each choice.
  for (const choice of result.choices) {
    console.log(choice.message);
  }
}

main().catch((err) => {
  console.error("The sample encountered an error:", err);
});

module.exports = { main };

Note

If you receive the error "Error occurred: OpenAIError: The apiKey and azureADTokenProvider arguments are mutually exclusive; only one can be passed at a time.", you may need to remove a pre-existing environment variable for the API key from your system. Even though the Microsoft Entra ID code sample doesn't explicitly reference the API key environment variable, this error is still generated if one is present on the system executing the sample.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Azure OpenAI Overview

- For more examples, check out the Azure OpenAI Samples GitHub repository

Library source code | Package (PyPI) | Retrieval Augmented Generation (RAG) enterprise chat template

Prerequisites

- An Azure subscription - Create one for free

- Access granted to Azure OpenAI Service in the desired Azure subscription. Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI Service by completing the form at https://aka.ms/oai/access.

- Python 3.8 or later version.

- The following Python libraries: os.

- An Azure OpenAI Service resource with either the gpt-35-turbo or the gpt-4 models deployed. For more information about model deployment, see the resource deployment guide.

Set up

Install the OpenAI Python client library with:

pip install openai

Note

This library is maintained by OpenAI. Refer to the release history to track the latest updates to the library.

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in the Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"
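Because setx only affects consoles opened after it runs, it can help to confirm that both variables are visible before calling the service. The following is a minimal sketch rather than part of the official quickstart; the helper name missing_settings is hypothetical, and it uses only the Python standard library.

```python
import os

# Variable names used throughout this quickstart.
REQUIRED_VARS = ("AZURE_OPENAI_API_KEY", "AZURE_OPENAI_ENDPOINT")


def missing_settings(env=None):
    """Return the required variable names that are unset or empty.

    `env` defaults to os.environ; a plain dict can be passed for testing.
    """
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_settings()
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("Both environment variables are set.")
```

If the check reports a missing variable right after running setx, opening a new console window is usually enough for the value to appear.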

Create a new Python application

Create a new Python file called quickstart.py. Then open it up in your preferred editor or IDE.

Replace the contents of quickstart.py with the following code.

You need to set the model variable to the deployment name you chose when you deployed the GPT-3.5-Turbo or GPT-4 models. Entering the model name will result in an error unless you chose a deployment name that is identical to the underlying model name.

import os
from openai import AzureOpenAI

client = AzureOpenAI(
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
    api_key=os.getenv("AZURE_OPENAI_API_KEY"),
    api_version="2024-02-01"
)

response = client.chat.completions.create(
    model="gpt-35-turbo",  # model = "deployment_name".
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Does Azure OpenAI support customer managed keys?"},
        {"role": "assistant", "content": "Yes, customer managed keys are supported by Azure OpenAI."},
        {"role": "user", "content": "Do other Azure AI services support this too?"}
    ]
)

print(response.choices[0].message.content)

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Run the application with the python command on your quickstart file:

python quickstart.py

Output

{

"choices": [

{

"finish_reason": "stop",

"index": 0,

"message": {

"content": "Yes, most of the Azure AI services support customer managed keys. However, not all services support it. You can check the documentation of each service to confirm if customer managed keys are supported.",

"role": "assistant"

}

}

],

"created": 1679001781,

"id": "chatcmpl-6upLpNYYOx2AhoOYxl9UgJvF4aPpR",

"model": "gpt-3.5-turbo-0301",

"object": "chat.completion",

"usage": {

"completion_tokens": 39,

"prompt_tokens": 58,

"total_tokens": 97

}

}

Yes, most of the Azure AI services support customer managed keys. However, not all services support it. You can check the documentation of each service to confirm if customer managed keys are supported.

Understanding the message structure

The GPT-35-Turbo and GPT-4 models are optimized to work with inputs formatted as a conversation. The messages variable passes an array of dictionaries with different roles in the conversation delineated by system, user, and assistant. The system message can be used to prime the model by including context or instructions on how the model should respond.

The GPT-35-Turbo & GPT-4 how-to guide provides an in-depth introduction into the options for communicating with these new models.
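To make the message structure described above concrete, here is a small sketch that builds the same conversation one turn at a time. The helper add_turn is a hypothetical name introduced for illustration; it isn't part of the openai library, and the role check simply mirrors the three roles named in this section.

```python
def add_turn(messages, role, content):
    """Append one message dictionary to the conversation and return the list."""
    if role not in ("system", "user", "assistant"):
        raise ValueError(f"unexpected role: {role!r}")
    messages.append({"role": role, "content": content})
    return messages


# The system message comes first and primes the model.
conversation = [{"role": "system", "content": "You are a helpful assistant."}]
add_turn(conversation, "user", "Does Azure OpenAI support customer managed keys?")
add_turn(conversation, "assistant", "Yes, customer managed keys are supported by Azure OpenAI.")
add_turn(conversation, "user", "Do other Azure AI services support this too?")

for message in conversation:
    print(f"{message['role']}: {message['content']}")
```

A list built this way can be passed as the messages argument in the quickstart code. Appending each assistant reply before the next user turn is one way to give the model the earlier context.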

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Learn more about how to work with GPT-35-Turbo and the GPT-4 models with our how-to guide.

- For more examples, check out the Azure OpenAI Samples GitHub repository

Prerequisites

- An Azure subscription - Create one for free.

- Access granted to Azure OpenAI Service in the desired Azure subscription. Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI Service by completing the form at https://aka.ms/oai/access.

- An Azure OpenAI Service resource with either the gpt-35-turbo or the gpt-4 models deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in the Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

REST API

In a bash shell, run the following command. You will need to replace gpt-35-turbo with the deployment name you chose when you deployed the GPT-35-Turbo or GPT-4 models. Entering the model name will result in an error unless you chose a deployment name that is identical to the underlying model name.

curl $AZURE_OPENAI_ENDPOINT/openai/deployments/gpt-35-turbo/chat/completions?api-version=2024-02-01 \

-H "Content-Type: application/json" \

-H "api-key: $AZURE_OPENAI_API_KEY" \

-d '{"messages":[{"role": "system", "content": "You are a helpful assistant."},{"role": "user", "content": "Does Azure OpenAI support customer managed keys?"},{"role": "assistant", "content": "Yes, customer managed keys are supported by Azure OpenAI."},{"role": "user", "content": "Do other Azure AI services support this too?"}]}'

With an example endpoint, the first line of the command would appear as follows:

curl https://docs-test-001.openai.azure.com/openai/deployments/{YOUR-DEPLOYMENT_NAME_HERE}/chat/completions?api-version=2024-02-01 \

If you encounter an error, double-check that you don't have a doubled / at the boundary between your endpoint and /openai/deployments.

If you want to run this command in a normal Windows command prompt, you need to alter the text to remove the \ and the line breaks.
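The doubled-slash problem comes from joining an endpoint that ends in / with a path that starts with /. As a rough sketch of one way to avoid it, the hypothetical helper below (not part of any SDK) strips a trailing slash before assembling the same URL the curl command uses.

```python
def chat_completions_url(endpoint, deployment, api_version="2024-02-01"):
    """Build the chat completions URL without doubling the slash."""
    base = endpoint.rstrip("/")  # tolerate endpoints with or without a trailing /
    return (
        f"{base}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )


print(chat_completions_url("https://docs-test-001.openai.azure.com/", "gpt-35-turbo"))
```

Both https://docs-test-001.openai.azure.com/ and https://docs-test-001.openai.azure.com produce the same URL with this approach.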

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Output

{"id":"chatcmpl-6v7mkQj980V1yBec6ETrKPRqFjNw9",

"object":"chat.completion","created":1679072642,

"model":"gpt-35-turbo",

"usage":{"prompt_tokens":58,

"completion_tokens":68,

"total_tokens":126},

"choices":[{"message":{"role":"assistant",

"content":"Yes, other Azure AI services also support customer managed keys. Azure AI services offer multiple options for customers to manage keys, such as using Azure Key Vault, customer-managed keys in Azure Key Vault or customer-managed keys through Azure Storage service. This helps customers ensure that their data is secure and access to their services is controlled."},"finish_reason":"stop","index":0}]}

Output formatting is adjusted for ease of reading; the actual output is a single block of text without line breaks.
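Since the response is a single JSON document, the reply text and token counts can be pulled out with a JSON parser. The sketch below uses Python's standard json module on a copy of the sample response shown above; the content string is shortened here for readability, which is an edit made for this illustration only.

```python
import json

# Copy of the sample response above, with the content string shortened.
raw = (
    '{"id":"chatcmpl-6v7mkQj980V1yBec6ETrKPRqFjNw9",'
    '"object":"chat.completion","created":1679072642,'
    '"model":"gpt-35-turbo",'
    '"usage":{"prompt_tokens":58,"completion_tokens":68,"total_tokens":126},'
    '"choices":[{"message":{"role":"assistant",'
    '"content":"Yes, other Azure AI services also support customer managed keys."},'
    '"finish_reason":"stop","index":0}]}'
)

response = json.loads(raw)
first = response["choices"][0]      # choices is a list; take the first entry
reply = first["message"]["content"]  # the assistant's text
usage = response["usage"]            # token accounting for the call

print(reply)
print(first["finish_reason"], usage["total_tokens"])
```

In the sample, total_tokens is the sum of prompt_tokens and completion_tokens (58 + 68 = 126).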

Understanding the message structure

The GPT-35-Turbo and GPT-4 models are optimized to work with inputs formatted as a conversation. The messages variable passes an array of dictionaries with different roles in the conversation delineated by system, user, and assistant. The system message can be used to prime the model by including context or instructions on how the model should respond.

The GPT-35-Turbo & GPT-4 how-to guide provides an in-depth introduction into the options for communicating with these new models.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Learn more about how to work with GPT-35-Turbo and the GPT-4 models with our how-to guide.

- For more examples, check out the Azure OpenAI Samples GitHub repository

Prerequisites

- An Azure subscription - Create one for free

- Access granted to Azure OpenAI Service in the desired Azure subscription. Currently, access to this service is granted only by application. You can apply for access to Azure OpenAI Service by completing the form at https://aka.ms/oai/access.

- You can use either the latest version, PowerShell 7, or Windows PowerShell 5.1.

- An Azure OpenAI Service resource with a model deployed. For more information about model deployment, see the resource deployment guide.

- An Azure OpenAI Service resource with either the gpt-35-turbo or the gpt-4 models deployed. For more information about model deployment, see the resource deployment guide.

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you'll need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the value in the Azure OpenAI Studio > Playground > Code View. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Endpoint and Keys can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign environment variables for your key and endpoint. Variables assigned with $Env: last only for the current PowerShell session.

$Env:AZURE_OPENAI_API_KEY = 'YOUR_KEY_VALUE'

$Env:AZURE_OPENAI_ENDPOINT = 'YOUR_ENDPOINT'

Create a new PowerShell script

Create a new PowerShell file called quickstart.ps1. Then open it up in your preferred editor or IDE.

Replace the contents of quickstart.ps1 with the following code. You need to set the name value in the $openai hashtable to the deployment name you chose when you deployed the GPT-35-Turbo or GPT-4 models. Entering the model name will result in an error unless you chose a deployment name that is identical to the underlying model name.

# Azure OpenAI metadata variables
$openai = @{
    api_key     = $Env:AZURE_OPENAI_API_KEY
    api_base    = $Env:AZURE_OPENAI_ENDPOINT # your endpoint should look like the following https://YOUR_RESOURCE_NAME.openai.azure.com/
    api_version = '2024-02-01' # this may change in the future
    name        = 'YOUR-DEPLOYMENT-NAME-HERE' # This will correspond to the custom name you chose for your deployment when you deployed a model.
}

# Completion text
$messages = @()
$messages += @{
    role    = 'system'
    content = 'You are a helpful assistant.'
}
$messages += @{
    role    = 'user'
    content = 'Does Azure OpenAI support customer managed keys?'
}
$messages += @{
    role    = 'assistant'
    content = 'Yes, customer managed keys are supported by Azure OpenAI.'
}
$messages += @{
    role    = 'user'
    content = 'Do other Azure AI services support this too?'
}

# Header for authentication
$headers = [ordered]@{
    'api-key' = $openai.api_key
}

# Adjust these values to fine-tune completions
$body = [ordered]@{
    messages = $messages
} | ConvertTo-Json

# Send a request to generate an answer
$url = "$($openai.api_base)/openai/deployments/$($openai.name)/chat/completions?api-version=$($openai.api_version)"

$response = Invoke-RestMethod -Uri $url -Headers $headers -Body $body -Method Post -ContentType 'application/json'
return $response

Important

For production, use a secure way of storing and accessing your credentials, such as PowerShell Secret Management with Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Run the script using PowerShell:

./quickstart.ps1

Output

# the output of the script will be a .NET object containing the response

id : chatcmpl-7sdJJRC6fDNGnfHMdfHXvPkYFbaVc

object : chat.completion

created : 1693255177

model : gpt-35-turbo

choices : {@{index=0; finish_reason=stop; message=}}

usage : @{completion_tokens=67; prompt_tokens=55; total_tokens=122}

# convert the output to JSON

./quickstart.ps1 | ConvertTo-Json -Depth 3

# or to view the text returned, select the specific object property

$response = ./quickstart.ps1

$response.choices.message.content

Understanding the message structure

The GPT-35-Turbo and GPT-4 models are optimized to work with inputs formatted as a conversation. The messages variable passes an array of dictionaries with different roles in the conversation delineated by system, user, and assistant. The system message can be used to prime the model by including context or instructions on how the model should respond.

The GPT-35-Turbo & GPT-4 how-to guide provides an in-depth introduction into the options for communicating with these new models.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Learn more about how to work with GPT-35-Turbo and the GPT-4 models with our how-to guide.

- For more examples, check out the Azure OpenAI Samples GitHub repository
