Configure Windows Server Failover Cluster on Azure VMware Solution vSAN

In this article, learn how to configure Failover Clustering in Windows Server on Azure VMware Solution vSAN with native shared disks.

Windows Server Failover Cluster (WSFC), previously known as Microsoft Cluster Service (MSCS), is a feature of the Windows Server operating system (OS). WSFC is a business-critical feature that many applications require. For example, WSFC is required for the following configurations:

- SQL Server configured as:
  - Always On Failover Cluster Instance (FCI), for instance-level high availability.
  - Always On Availability Group (AG), for database-level high availability.
- Windows File Services:
  - Generic file share running on the active cluster node.
  - Scale-Out File Server (SOFS), which stores files in Cluster Shared Volumes (CSV).
  - Storage Spaces Direct (S2D), where local disks are used to create storage pools across different cluster nodes.

You can host the WSFC cluster on different Azure VMware Solution hosts, a configuration known as Cluster-Across-Box (CAB). You can also place the WSFC cluster on a single Azure VMware Solution host, a configuration known as Cluster-in-a-Box (CIB). We don't recommend CIB for a production implementation; use CAB with placement policies instead. If the single Azure VMware Solution host in a CIB configuration failed, all WSFC cluster nodes would be powered off, and the application would experience downtime. Azure VMware Solution requires a minimum of three nodes in a private cloud cluster.

It's important to deploy a supported WSFC configuration, so that your solution is supported both on VMware vSphere and with Azure VMware Solution. VMware provides a detailed document about WSFC on vSphere 7.0, titled Setup for Failover Clustering and Microsoft Cluster Service.

This article focuses on WSFC on Windows Server 2016 and Windows Server 2019. Older Windows Server versions are out of mainstream support, so we don't consider them here.

First, you need to create a WSFC. Then, use the information we provide in this article to specify a WSFC deployment on Azure VMware Solution.

Prerequisites

- Azure VMware Solution environment

- Microsoft Windows Server OS installation media

Reference architecture

Azure VMware Solution provides native support for virtualized WSFC. It supports SCSI-3 Persistent Reservations (SCSI3PR) at the virtual disk level. WSFC requires this support to arbitrate access to a shared disk between nodes. Support for SCSI3PRs lets you configure WSFC natively on vSAN datastores, with a disk resource shared between VMs.
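To make the arbitration idea concrete, the following Python toy model sketches how persistent reservations can fence a node off a shared disk. It's a simplified illustration, not the SCSI-3 command set and not how vSAN implements it internally. It assumes a "Write Exclusive - Registrants Only" style reservation, and the class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class SharedDisk:
    """Toy model of persistent reservations on one shared disk.

    Simplification (assumed): a "Write Exclusive - Registrants Only" style
    reservation, where registered nodes may write and others may only read.
    """
    registrants: Set[str] = field(default_factory=set)
    holder: Optional[str] = None  # node currently holding the reservation

    def register(self, node: str) -> None:
        self.registrants.add(node)

    def reserve(self, node: str) -> bool:
        """Take the reservation if it's free; only registered nodes may try."""
        if node not in self.registrants:
            return False
        if self.holder is None:
            self.holder = node
        return self.holder == node

    def preempt(self, node: str, victim: str) -> bool:
        """Remove the victim's registration; take the reservation if the victim held it or it's free."""
        if node not in self.registrants:
            return False
        self.registrants.discard(victim)
        if self.holder in (victim, None):
            self.holder = node
        return True

    def can_write(self, node: str) -> bool:
        # With no reservation, nothing is fenced; otherwise only registrants may write.
        return self.holder is None or node in self.registrants


if __name__ == "__main__":
    disk = SharedDisk()
    disk.register("wsfc1")
    disk.register("wsfc2")
    disk.reserve("wsfc1")           # wsfc1 owns the disk
    disk.preempt("wsfc2", "wsfc1")  # wsfc2 claims it after wsfc1 stops responding
    print(disk.holder, disk.can_write("wsfc1"))  # wsfc2 False
```

In this sketch, preempting removes the failed owner's registration, so it can no longer write even if it comes back unexpectedly. That fencing behavior is what makes a shared disk safe to hand over between nodes.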

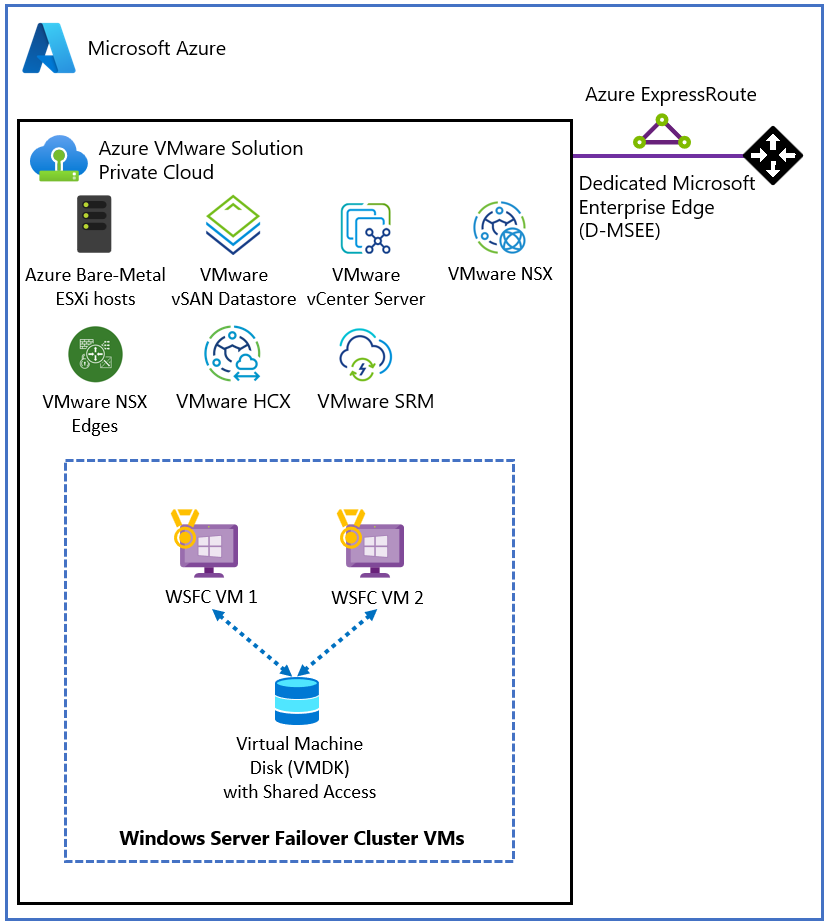

The following diagram illustrates the architecture of WSFC virtual nodes on an Azure VMware Solution private cloud. It shows where Azure VMware Solution resides, including the WSFC virtual servers (blue box), in relation to the broader Azure platform. This diagram illustrates a typical hub-spoke architecture, but a similar setup is possible using Azure Virtual WAN. Both offer all the value other Azure services can bring you.

Supported configurations

The following configurations are currently supported:

- Microsoft Windows Server 2012 or later
- Up to five nodes (VMs) per WSFC instance
- Up to four PVSCSI adapters per VM
- Up to 64 disks per PVSCSI adapter
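Before building the cluster, you can check a plan against these limits. The following Python sketch assumes a hypothetical in-memory description of each planned node (a list of disk counts, one per PVSCSI adapter); it doesn't query Azure VMware Solution or vSphere.

```python
from dataclasses import dataclass
from typing import List

# Limits from the supported-configuration list above.
MAX_NODES = 5
MAX_PVSCSI_PER_VM = 4
MAX_DISKS_PER_PVSCSI = 64


@dataclass
class PlannedNode:
    name: str
    disks_per_pvscsi: List[int]  # hypothetical: one disk count per PVSCSI adapter


def check_limits(nodes: List[PlannedNode]) -> List[str]:
    """Return limit violations; an empty list means the plan fits the limits."""
    problems = []
    if len(nodes) > MAX_NODES:
        problems.append(f"{len(nodes)} nodes exceeds the {MAX_NODES}-node limit")
    for node in nodes:
        if len(node.disks_per_pvscsi) > MAX_PVSCSI_PER_VM:
            problems.append(
                f"{node.name}: {len(node.disks_per_pvscsi)} PVSCSI adapters (max {MAX_PVSCSI_PER_VM})"
            )
        for index, count in enumerate(node.disks_per_pvscsi):
            if count > MAX_DISKS_PER_PVSCSI:
                problems.append(
                    f"{node.name}: adapter {index} has {count} disks (max {MAX_DISKS_PER_PVSCSI})"
                )
    return problems


if __name__ == "__main__":
    plan = [PlannedNode(f"wsfc{i}", [8, 8]) for i in range(1, 4)]
    print(check_limits(plan) or "plan fits the supported limits")
```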

Virtual machine configuration requirements

WSFC node configuration parameters

- Install the latest VMware Tools on each WSFC node.

- Mixing nonshared and shared disks on a single virtual SCSI adapter isn't supported. For example, if the system disk (drive C:) is attached to SCSI0:0, the first shared disk must be attached to a different controller, such as SCSI1:0. A WSFC VM node has the same maximum number of virtual SCSI controllers as an ordinary VM: up to four.

- Virtual disk SCSI IDs should be consistent across all VMs that host nodes of the same WSFC.
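The following Python sketch checks the SCSI layout rules above against a hypothetical per-node inventory of (controller bus, unit number, shared) tuples. It assumes each shared disk has the same name on every node; collecting the inventory, for example from vSphere, is outside the scope of the sketch.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical inventory: node -> {disk name: (controller bus, unit number, is_shared)}.
Inventory = Dict[str, Dict[str, Tuple[int, int, bool]]]


def check_scsi_layout(nodes: Inventory) -> List[str]:
    """Flag mixed controllers, too many controllers, and inconsistent shared-disk IDs."""
    problems: List[str] = []
    for node, disks in nodes.items():
        # For each controller in use, record whether it holds shared disks, nonshared disks, or both.
        kinds: Dict[int, Set[bool]] = {}
        for bus, _unit, shared in disks.values():
            kinds.setdefault(bus, set()).add(shared)
        if len(kinds) > 4:
            problems.append(f"{node}: {len(kinds)} SCSI controllers in use (max 4)")
        for bus, seen in sorted(kinds.items()):
            if len(seen) > 1:
                problems.append(f"{node}: SCSI{bus} mixes shared and nonshared disks")
    # Each shared disk must have the same bus:unit address on every node.
    shared_ids = {
        node: {name: (bus, unit) for name, (bus, unit, shared) in disks.items() if shared}
        for node, disks in nodes.items()
    }
    if shared_ids:
        ref_node, ref_ids = next(iter(shared_ids.items()))
        for node, ids in shared_ids.items():
            if ids != ref_ids:
                problems.append(f"{node}: shared disk SCSI IDs differ from {ref_node}")
    return problems


if __name__ == "__main__":
    inventory = {
        "wsfc1": {"os": (0, 0, False), "data": (1, 0, True)},
        "wsfc2": {"os": (0, 0, False), "data": (1, 1, True)},  # unit number differs
    }
    print(check_scsi_layout(inventory))
```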

| Component | Requirements |
|---|---|
| VM hardware version | 11 or higher, to support Live vMotion. |
| Virtual NIC | VMXNET3 paravirtualized network interface card (NIC); enable in-guest Windows Receive Side Scaling (RSS) on the virtual NIC. |
| Memory | Use a full VM memory reservation for nodes in the WSFC cluster. |
| I/O timeout of each WSFC node | Set HKEY_LOCAL_MACHINE\System\CurrentControlSet\Services\Disk\TimeOutValue to 60 seconds or more. (If you re-create the cluster, this value might be reset to its default, so you must change it again.) |
| Windows cluster health monitoring | Modify the SameSubnetThreshold parameter of Windows cluster health monitoring to allow at least 10 missed heartbeats. A value of 10 is the default in Windows Server 2016. This recommendation applies to all applications that use WSFC, with both shared and nonshared disks. |
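The following Python sketch checks the two numeric requirements in this table. It assumes the values have already been read from each node and from the cluster. The 1,000 ms heartbeat interval (SameSubnetDelay) used to estimate the failure-detection window is an assumed default; substitute your cluster's actual value.

```python
from typing import Dict, List

MIN_DISK_TIMEOUT_S = 60  # Disk\TimeOutValue, in seconds (from the table above)
MIN_SAME_SUBNET_THRESHOLD = 10  # missed heartbeats to tolerate (from the table above)
ASSUMED_SAME_SUBNET_DELAY_MS = 1000  # assumption: heartbeat interval; use your cluster's value


def heartbeat_window_s(threshold: int, delay_ms: int = ASSUMED_SAME_SUBNET_DELAY_MS) -> float:
    """Approximate seconds of missed heartbeats before a node is treated as down."""
    return delay_ms * threshold / 1000


def check_settings(disk_timeouts_s: Dict[str, int], same_subnet_threshold: int) -> List[str]:
    """Return problems found; disk timeouts are per node, the threshold is cluster-wide."""
    problems = [
        f"{node}: disk TimeOutValue is {value} s (need >= {MIN_DISK_TIMEOUT_S} s)"
        for node, value in disk_timeouts_s.items()
        if value < MIN_DISK_TIMEOUT_S
    ]
    if same_subnet_threshold < MIN_SAME_SUBNET_THRESHOLD:
        problems.append(
            f"SameSubnetThreshold is {same_subnet_threshold} (need >= {MIN_SAME_SUBNET_THRESHOLD})"
        )
    return problems


if __name__ == "__main__":
    print(check_settings({"wsfc-node1": 60, "wsfc-node2": 30}, 10))
    print(f"Detection window: {heartbeat_window_s(10):.0f} s")
```

On a Windows node, Python's standard winreg module could supply the timeout, for example by opening the SYSTEM\CurrentControlSet\Services\Disk key under HKEY_LOCAL_MACHINE and calling winreg.QueryValueEx for TimeOutValue.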

WSFC node - Boot disks configuration parameters

| Component | Requirements |
|---|---|
| SCSI Controller Type | LSI Logic SAS |
| Disk mode | Virtual |
| SCSI bus sharing | None |
| Advanced settings for the virtual SCSI controller hosting the boot device | Add the following advanced settings to each WSFC node: scsiX.returnNoConnectDuringAPD = "TRUE" and scsiX.returnBusyOnNoConnectStatus = "FALSE", where X is the SCSI bus controller ID number of the boot device. By default, X is 0. |
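Because X depends on the boot controller, the following Python sketch builds the two advanced settings for a given controller ID and renders them as key = "value" lines in the style shown above. The function names are hypothetical, and applying the settings (for example, through the VM's advanced configuration parameters in vSphere Client) isn't shown.

```python
from typing import Dict


def boot_controller_settings(controller_id: int = 0) -> Dict[str, str]:
    """Advanced settings for the virtual SCSI controller that hosts the boot disk.

    controller_id is X in scsiX; it defaults to 0, the usual boot controller.
    """
    if not 0 <= controller_id <= 3:
        # A VM has at most four virtual SCSI controllers: scsi0 through scsi3.
        raise ValueError(f"invalid SCSI controller ID: {controller_id}")
    prefix = f"scsi{controller_id}"
    return {
        f"{prefix}.returnNoConnectDuringAPD": "TRUE",
        f"{prefix}.returnBusyOnNoConnectStatus": "FALSE",
    }


def to_vmx_lines(settings: Dict[str, str]) -> str:
    """Render settings as key = "value" lines."""
    return "\n".join(f'{key} = "{value}"' for key, value in settings.items())


if __name__ == "__main__":
    print(to_vmx_lines(boot_controller_settings()))
```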

WSFC node - Shared disks configuration parameters

| Component | Requirements |

|---|---|

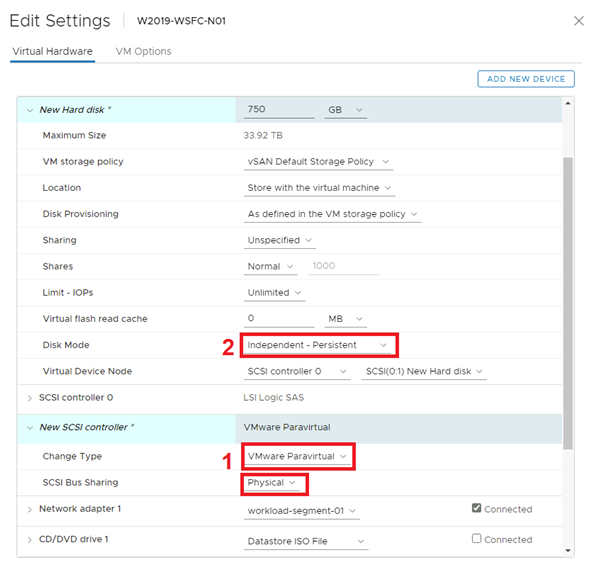

| SCSI Controller Type | VMware Paravirtualized (PVSCSI) |

| Disk mode | Independent - Persistent (see step 2 in the following illustration). By using this setting, you ensure that all disks are excluded from snapshots. Snapshots aren't supported for WSFC-based VMs. |

| SCSI bus sharing | Physical (see step 1 in the following illustration) |

| Multi-writer flag | Not used |

| Disk format | Thick provisioned (Eager Zeroed Thick (EZT) isn't required with vSAN) |
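The boot-disk and shared-disk tables can be expressed as data and checked mechanically. In the following Python sketch, the dictionary keys and the provisioning-format strings are hypothetical labels, not vSphere API property names.

```python
from typing import Dict, List

# Requirements transcribed from the boot-disk and shared-disk tables.
# Keys and values are illustrative labels, not vSphere API property names.
REQUIRED: Dict[str, Dict[str, object]] = {
    "boot": {
        "controller": "LSI Logic SAS",
        "disk_mode": "Virtual",
        "bus_sharing": "None",
    },
    "shared": {
        "controller": "VMware Paravirtual",
        "disk_mode": "Independent - Persistent",
        "bus_sharing": "Physical",
        "multi_writer": False,
    },
}
# Shared disks must be thick provisioned; eager zeroing isn't required on vSAN.
THICK_FORMATS = {"Thick Provision Lazy Zeroed", "Thick Provision Eager Zeroed"}


def check_disk(role: str, disk: Dict[str, object]) -> List[str]:
    """Compare one disk's settings with the requirements for its role ("boot" or "shared")."""
    problems = [
        f"{key}: expected {want!r}, got {disk.get(key)!r}"
        for key, want in REQUIRED[role].items()
        if disk.get(key) != want
    ]
    if role == "shared" and disk.get("format") not in THICK_FORMATS:
        problems.append(f"format: expected thick provisioning, got {disk.get('format')!r}")
    return problems


if __name__ == "__main__":
    shared_disk = {
        "controller": "VMware Paravirtual",
        "disk_mode": "Independent - Persistent",
        "bus_sharing": "None",  # wrong: shared disks need Physical bus sharing
        "multi_writer": False,
        "format": "Thick Provision Lazy Zeroed",
    }
    print(check_disk("shared", shared_disk))
```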

Unsupported scenarios

The following functionalities aren't supported for WSFC on Azure VMware Solution:

- NFS datastores

- Storage Spaces

- vSAN using iSCSI Service

- vSAN Stretched Cluster

- Enhanced vMotion Compatibility (EVC)

- vSphere Fault Tolerance (FT)

- Snapshots

- Live (online) storage vMotion

- N-Port ID Virtualization (NPIV)

Hot changes to virtual machine hardware might disrupt the heartbeat between the WSFC nodes.

The following activities aren't supported and might cause WSFC node failover:

- Hot adding memory

- Hot adding CPU

- Using snapshots

- Increasing the size of a shared disk

- Pausing and resuming the virtual machine state

- Memory overcommitment leading to ESXi swapping or VM memory ballooning

- Hot-extending a local VMDK file, even if it isn't associated with a SCSI bus-sharing controller

Configure WSFC with shared disks on Azure VMware Solution vSAN

1. Ensure that an Active Directory environment is available.

2. Create virtual machines (VMs) on the vSAN datastore.

3. Power on all VMs, configure the hostname and IP addresses, join all VMs to an Active Directory domain, and install the latest available OS updates.

4. Install the latest VMware Tools.

5. Install and configure the Failover Clustering feature on each VM.

6. Configure a cluster witness for quorum (for example, a file share witness).

7. Power off all nodes of the WSFC cluster.

8. Add one or more paravirtual SCSI (PVSCSI) controllers (up to four) to each VM in the WSFC. Use the settings described in the previous sections.

9. On the first cluster node, add all needed shared disks by using Add New Device > Hard Disk. Leave Disk sharing as Unspecified (default), and set Disk mode to Independent - Persistent. Then attach the disks to the controllers created in the previous step.

10. On each remaining WSFC node, add the disks created in the previous step by selecting Add New Device > Existing Hard Disk. Be sure to keep the same disk SCSI IDs on all WSFC nodes.

11. Power on the first WSFC node, sign in, and open the Disk Management console (diskmgmt.msc). Make sure the OS can manage the added shared disks and that they're initialized. Format the disks and assign drive letters.

12. Power on the other WSFC nodes.

13. Add the disks to the WSFC cluster by using the Add Disk wizard, and add them to a Cluster Shared Volume.

14. Test a failover by using the Move disk wizard, and make sure the WSFC cluster with shared disks works properly.

15. Run the Validate a Configuration wizard to confirm that the cluster and its nodes are working properly.

    Keep the following specific items from the cluster validation test in mind:

    - Validate Storage Spaces Persistent Reservation. If you aren't using Storage Spaces with your cluster (as is the case on Azure VMware Solution vSAN), this test isn't applicable. You can ignore any results of this test, including its warnings. To avoid the warnings, you can exclude this test.

    - Validate Network Communication. The validation test displays a warning that only one network interface per cluster node is available. You can ignore this warning. Azure VMware Solution provides the required availability and performance because the nodes are connected to one of the NSX-T Data Center segments. However, don't exclude this test, because it validates other aspects of network communication.

16. Create the relevant placement policies to place the WSFC VMs on the correct Azure VMware Solution hosts, depending on whether you use a CIB or CAB configuration. To do so, you need a host-to-VM affinity rule, so that cluster nodes run on the same host (CIB) or on separate hosts (CAB).
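The following Python sketch checks a VM-to-host mapping against the intended topology and shows why CIB is risky: with every WSFC VM on one host, a single host failure leaves no running nodes. The VM and host names are hypothetical, and the sketch doesn't create or read Azure VMware Solution placement policies.

```python
from typing import Dict, List


def check_placement(vm_to_host: Dict[str, str], mode: str) -> List[str]:
    """Check WSFC VM placement against the intended topology.

    "CAB": each WSFC VM runs on a different host.
    "CIB": all WSFC VMs run on one host (not recommended for production).
    """
    if mode not in ("CAB", "CIB"):
        raise ValueError(f"unknown mode: {mode!r}")
    hosts = set(vm_to_host.values())
    if mode == "CAB" and len(hosts) < len(vm_to_host):
        return ["CAB: two or more WSFC VMs share a host"]
    if mode == "CIB" and len(hosts) > 1:
        return ["CIB: WSFC VMs are spread across hosts"]
    return []


def surviving_nodes(vm_to_host: Dict[str, str], failed_host: str) -> List[str]:
    """WSFC VMs that keep running if one host fails."""
    return sorted(vm for vm, host in vm_to_host.items() if host != failed_host)


if __name__ == "__main__":
    cab = {"wsfc1": "esx01", "wsfc2": "esx02", "wsfc3": "esx03"}
    cib = {"wsfc1": "esx01", "wsfc2": "esx01", "wsfc3": "esx01"}
    print(check_placement(cab, "CAB"), surviving_nodes(cab, "esx01"))
    print(check_placement(cib, "CIB"), surviving_nodes(cib, "esx01"))
```

With CAB, losing esx01 leaves wsfc2 and wsfc3 running; with CIB, the same failure leaves no WSFC node running.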

Related information

- Failover Clustering in Windows Server

- Guidelines for Microsoft Clustering on vSphere (1037959) (vmware.com)

- About Setup for Failover Clustering and Microsoft Cluster Service (vmware.com)

- vSAN 6.7 U3 - WSFC with Shared Disks & SCSI-3 Persistent Reservations (vmware.com)

- Azure VMware Solution limits

Next steps

Now that you've set up a WSFC in Azure VMware Solution, learn more about:

- Setting up your new WSFC by adding more applications that require the WSFC capability, such as SQL Server and SAP ASCS.

- Setting up a backup solution.
