Connector overview

Data Factory in Microsoft Fabric offers a rich set of connectors that let you connect to many types of data stores. You can use these connectors to transform data in dataflows or to move petabyte-scale datasets in a data pipeline.

Prerequisites

Before you can set up a connection in Dataflow Gen2 or a data pipeline, the following prerequisites are required:

A Microsoft Fabric tenant account with an active subscription. Create an account for free.

A Microsoft Fabric-enabled workspace. Create a workspace.

Supported data connectors in dataflows

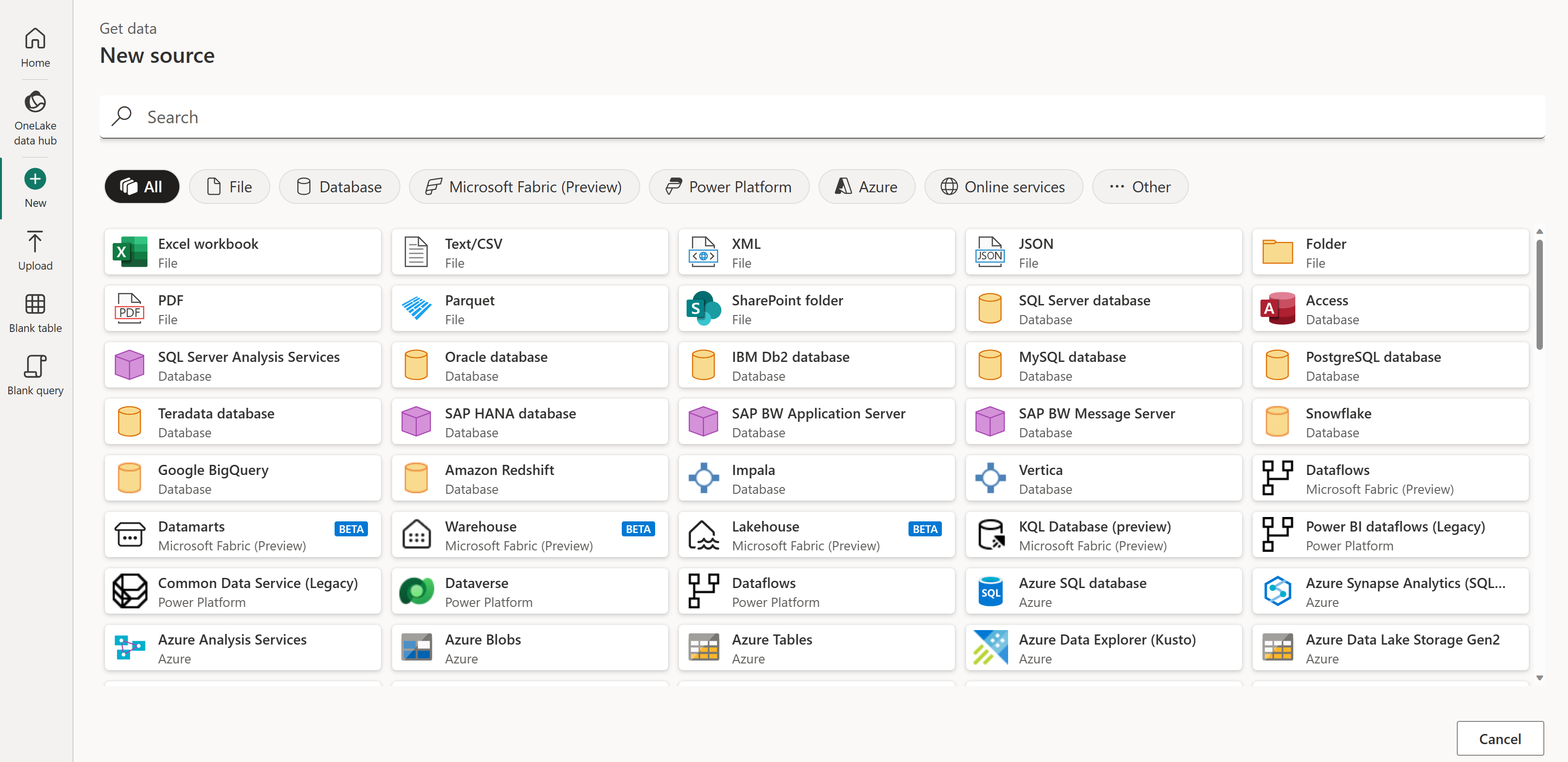

Dataflow Gen2 provides data ingestion and transformation capabilities over a wide range of data sources, including files, databases, and online, cloud, and on-premises sources. More than 145 data connectors are available through the Get data experience in the dataflow authoring experience.

For a comprehensive list of all currently supported data connectors, go to Dataflow Gen2 connectors in Microsoft Fabric.

The following connectors are currently available for output destinations in Dataflow Gen2:

- Azure Data Explorer

- Azure SQL

- Data Warehouse

- Lakehouse

Supported data stores in data pipelines

Data Factory in Microsoft Fabric supports data stores in a data pipeline through the Copy, Lookup, Get Metadata, Delete, Script, and Stored Procedure activities. For a list of all currently supported data connectors, go to Data pipeline connectors in Microsoft Fabric.

Note

Currently, pipelines on a managed virtual network (VNet) and on-premises data access through a gateway aren't supported in Data Factory for Microsoft Fabric.
