What is data warehousing in Microsoft Fabric?

Applies to:

SQL analytics endpoint and Warehouse in Microsoft Fabric

Microsoft Fabric provides customers with a unified product that addresses every aspect of their data estate by offering a complete, SaaS-ified Data, Analytics and AI platform, which is lake-centric and open. The foundation of Microsoft Fabric enables the novice user through to the seasoned professional to leverage Database, Analytics, Messaging, Data Integration and Business Intelligence workloads through a rich, easy to use, shared SaaS experience with Microsoft OneLake as the centerpiece.

A lake-centric SaaS experience built for any skill level

Microsoft Fabric introduces a lake-centric data warehouse built on an enterprise grade distributed processing engine that enables industry leading performance at scale while eliminating the need for configuration and management. Through an easy to use SaaS experience that is tightly integrated with Power BI for easy analysis and reporting, Warehouse in Microsoft Fabric converges the world of data lakes and warehouses with a goal of greatly simplifying an organizations investment in their analytics estate. Data warehousing workloads benefit from the rich capabilities of the SQL engine over an open data format, enabling customers to focus on data preparation, analysis and reporting over a single copy of their data stored in their Microsoft OneLake.

The Warehouse is built for any skill level - from the citizen developer through to the professional developer, DBA or data engineer. The rich set of experiences built into Microsoft Fabric workspace enables customers to reduce their time to insights by having an easily consumable, always connected semantic model that is integrated with Power BI in DirectLake mode. This enables second-to-none industry leading performance that ensures a customer's report always has the most recent data for analysis and reporting. Cross-database querying can be leveraged to quickly and seamlessly use multiple data sources that span multiple databases for fast insights and zero data duplication.

Virtual warehouses with cross database querying

Microsoft Fabric provides customers with the ability to stand up virtual warehouses containing data from virtually any source by using shortcuts. Customers can build a virtual warehouse by creating shortcuts to their data wherever it resides. A virtual warehouse can consist of data from OneLake, Azure Data Lake Storage, or any other cloud vendor storage within a single boundary and with no data duplication.

Seamlessly unlock value from a variety of data sources through the richness of cross-database querying in Microsoft Fabric. Cross database querying enables customers to quickly and seamlessly use multiple data sources for fast insights and with zero data duplication. Data stored in different sources can be easily joined together enabling customers to deliver rich insights that previously required significant effort from data integration and engineering teams.

Cross-database queries can be created through the Visual Query editor, which offers a no-code path to insights over multiple tables. The SQL Query editor, or other familiar tools such as SQL Server Management Studio (SSMS), can also be used to create cross-database queries.

Autonomous workload management

Warehouses in Microsoft Fabric leverage an industry-leading distributed query processing engine, which provides customers with workloads that have a natural isolation boundary. There are no knobs to turn with the autonomous allocation and relinquishing of resources to offer best in breed performance with automatic scale and concurrency built in. True isolation is achieved by separating workloads with different characteristics, ensuring that ETL jobs never interfere with their ad hoc analytics and reporting workloads.

Open format for seamless engine interoperability

Data in the Warehouse is stored in the parquet file format and published as Delta Lake Logs, enabling ACID transactions and cross engine interoperability that can be leveraged through other Microsoft Fabric workloads such as Spark, Pipelines, Power BI and Azure Data Explorer. Customers no longer need to create multiple copies of their data to enable data professionals with different skill sets. Data engineers that are accustomed to working in Python can easily use the same data that was modeled and served by a data warehouse professional that is accustomed to working in SQL. In parallel, BI professionals can quickly and easily use the same data to create a rich set of visualizations in Power BI with record performance and no data duplication.

Separation of storage and compute

Compute and storage are decoupled in a Warehouse which enables customers to scale near instantaneously to meet the demands of their business. This enables multiple compute engines to read from any supported storage source with robust security and full ACID transactional guarantees.

Easily ingest, load and transform at scale

Data can be ingested into the Warehouse through Pipelines, Dataflows, cross database querying or the COPY INTO command. Once ingested, data can be analyzed by multiple business groups through functionality such as sharing and cross database querying. Time to insights is expedited through a fully integrated BI experience through graphical data modeling easy to use web experience for querying within the Warehouse Editor.

Data warehousing items in Microsoft Fabric

There are two distinct data warehousing items: the SQL analytics endpoint of the Lakehouse and the Warehouse.

SQL analytics endpoint of the Lakehouse

A SQL analytics endpoint is a warehouse that is automatically generated from a Lakehouse in Microsoft Fabric. A customer can transition from the "Lake" view of the Lakehouse (which supports data engineering and Apache Spark) to the "SQL" view of the same Lakehouse. The SQL analytics endpoint is read-only, and data can only be modified through the "Lake" view of the Lakehouse using Spark.

Via the SQL analytics endpoint of the Lakehouse, the user has a subset of SQL commands that can define and query data objects but not manipulate the data. You can perform the following actions in the SQL analytics endpoint:

- Query the tables that reference data in your Delta Lake folders in the lake.

- Create views, inline TVFs, and procedures to encapsulate your semantics and business logic in T-SQL.

- Manage permissions on the objects.

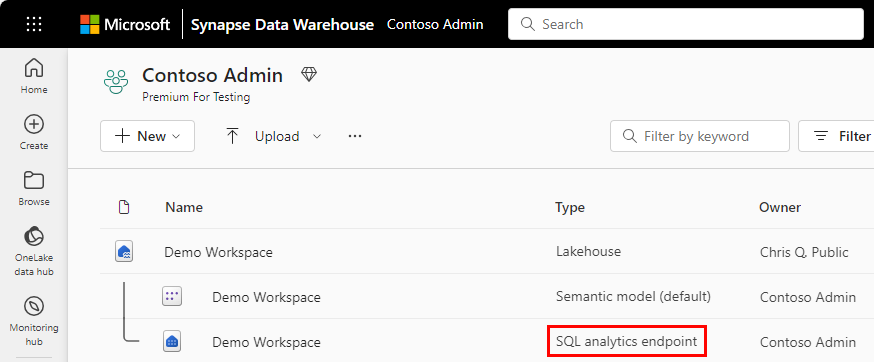

In a Microsoft Fabric workspace, a SQL analytics endpoint is labeled "SQL analytics endpoint" under the Type column. Each Lakehouse has an autogenerated SQL analytics endpoint that can be leveraged through familiar SQL tools such as SQL Server Management Studio, Azure Data Studio, the Microsoft Fabric SQL Query Editor.

To get started with the SQL analytics endpoint, see Better together: the lakehouse and warehouse in Microsoft Fabric.

Synapse Data Warehouse

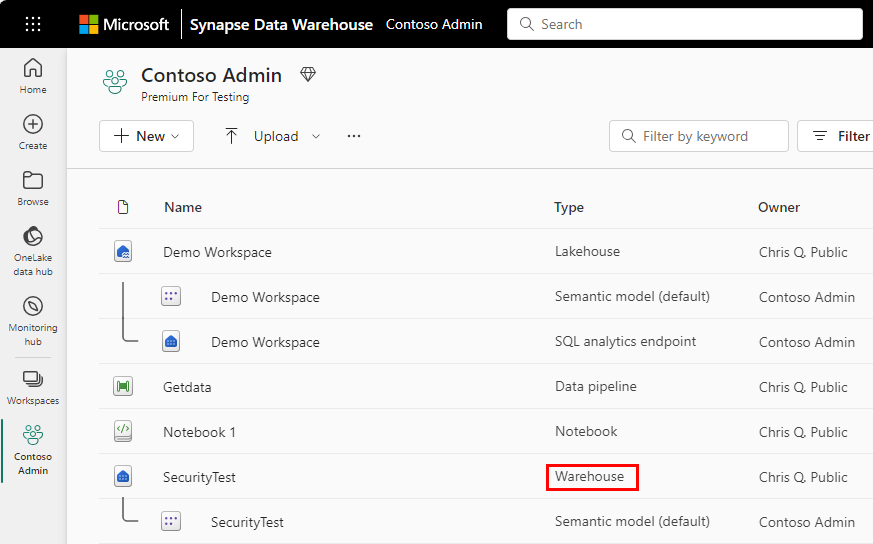

In a Microsoft Fabric workspace, a Synapse Data Warehouse or Warehouse is labeled as 'Warehouse' under the Type column. A Warehouse supports transactions, DDL, and DML queries.

Unlike a SQL analytics endpoint which only supports read only queries and creation of views and TVFs, a Warehouse has full transactional DDL and DML support and is created by a customer. A Warehouse is populated by one of the supported data ingestion methods such as COPY INTO, Pipelines, Dataflows, or cross database ingestion options such as CREATE TABLE AS SELECT (CTAS), INSERT..SELECT, or SELECT INTO.

To get started with the Warehouse, see Create a warehouse in Microsoft Fabric.

Compare the Warehouse and the SQL analytics endpoint of the Lakehouse

This section describes the differences between the Warehouse and SQL analytics endpoint in Microsoft Fabric.

The SQL analytics endpoint is a read-only warehouse that is automatically generated upon creation from a Lakehouse in Microsoft Fabric. Delta tables that are created through Spark in a Lakehouse are automatically discoverable in the SQL analytics endpoint as tables. The SQL analytics endpoint enables data engineers to build a relational layer on top of physical data in the Lakehouse and expose it to analysis and reporting tools using the SQL connection string. Data analysts can then use T-SQL to access Lakehouse data using Synapse Data Warehouse. Use SQL analytics endpoint to design your warehouse for BI needs and serving data.

The Synapse Data Warehouse or Warehouse is a 'traditional' data warehouse and supports the full transactional T-SQL capabilities like an enterprise data warehouse. As opposed to SQL analytics endpoint, where tables and data are automatically created, you are fully in control of creating tables, loading, transforming, and querying your data in the data warehouse using either the Microsoft Fabric portal or T-SQL commands.

For more information about querying your data in Microsoft Fabric, see Query the SQL analytics endpoint or Warehouse in Microsoft Fabric.

Compare different warehousing capabilities

In order to best serve your analytics use cases, there are a variety of capabilities available to you. Generally, the warehouse can be thought of as a superset of all other capabilities, providing a synergistic relationship between all other analytics offerings that provide T-SQL.

Within fabric, there are users who might need to decide between a Warehouse, Lakehouse, and even a Power BI datamart.

Microsoft Fabric offering

Warehouse

SQL analytics endpoint of the Lakehouse

Power BI datamart

Licensing

Fabric or Power BI Premium

Fabric or Power BI Premium

Power BI Premium only

Primary capabilities

ACID compliant, full data warehousing with transactions support in T-SQL.

Read only, system generated SQL analytics endpoint for Lakehouse for T-SQL querying and serving. Supports analytics on the Lakehouse Delta tables, and the Delta Lake folders referenced via shortcuts.

No-code data warehousing and T-SQL querying

Developer profile

SQL Developers or citizen developers

Data Engineers or SQL Developers

Citizen developer only

Recommended use case

- Data Warehousing for enterprise use

- Data Warehousing supporting departmental, business unit or self service use

- Structured data analysis in T-SQL with tables, views, procedures and functions and Advanced SQL support for BI

- Exploring and querying delta tables from the lakehouse

- Staging Data and Archival Zone for analysis

- Medallion lakehouse architecture with zones for bronze, silver and gold analysis

- Pairing with Warehouse for enterprise analytics use cases

- Small departmental or business unit warehousing use cases

- Self service data warehousing use cases

- Landing zone for Power BI dataflows and simple SQL support for BI

Development experience

- Warehouse Editor with full support for T-SQL data ingestion, modeling, development, and querying UI experiences for data ingestion, modeling, and querying

- Read / Write support for 1st and 3rd party tooling

- Lakehouse SQL analytics endpoint with limited T-SQL support for views, table valued functions, and SQL Queries

- UI experiences for modeling and querying

- Limited T-SQL support for 1st and 3rd party tooling

- Datamart Editor with UI experiences and queries support

- UI experiences for data ingestion, modeling, and querying

- Read-only support for 1st and 3rd party tooling

T-SQL capabilities

Full DQL, DML, and DDL T-SQL support, full transaction support

Full DQL, No DML, limited DDL T-SQL Support such as SQL Views and TVFs

Full DQL only

Data loading

SQL, pipelines, dataflows

Spark, pipelines, dataflows, shortcuts

Dataflows only

Delta table support

Reads and writes Delta tables

Reads delta tables

NA

Storage layer

Open Data Format - Delta

Open Data Format - Delta

NA

Automatically generated schema in the SQL analytics endpoint of the Lakehouse

The SQL analytics endpoint manages the automatically generated tables so the workspace users can't modify them. Users can enrich the database model by adding their own SQL schemas, views, procedures, and other database objects.

For every Delta table in your Lakehouse, the SQL analytics endpoint automatically generates one table.

Tables in the SQL analytics endpoint are created with a delay. Once you create or update Delta Lake folder/table in the lake, the warehouse table that references the lake data won't be immediately created/refreshed. The changes will be applied in the warehouse after 5-10 seconds.

For autogenerated schema data types for the SQL analytics endpoint, see Data types in Microsoft Fabric.

Related content

Feedback

Coming soon: Throughout 2024 we will be phasing out GitHub Issues as the feedback mechanism for content and replacing it with a new feedback system. For more information see: https://aka.ms/ContentUserFeedback.

Submit and view feedback for