How to recognize intents with simple language pattern matching

The Azure AI services Speech SDK has a built-in feature to provide intent recognition with simple language pattern matching. An intent is something the user wants to do: close a window, mark a checkbox, insert some text, etc.

In this guide, you use the Speech SDK to develop a console application in C#, C++, or Java that derives intents from user utterances spoken into your device's microphone. You learn how to:

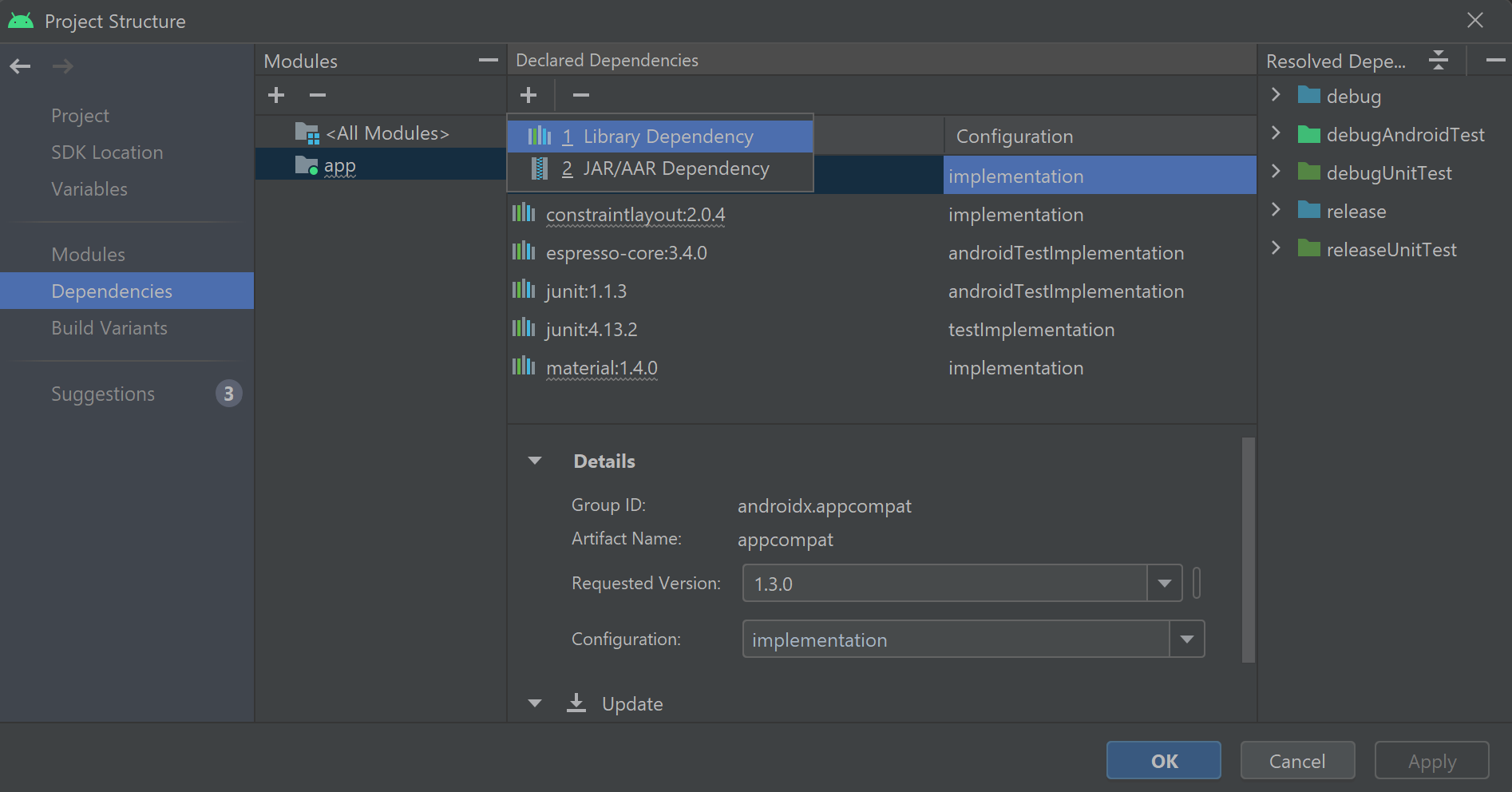

- Create a Visual Studio project referencing the Speech SDK NuGet package

- Create a speech configuration and get an intent recognizer

- Add intents and patterns via the Speech SDK API

- Recognize speech from a microphone

- Use asynchronous, event-driven continuous recognition

When to use pattern matching

Use pattern matching if:

- You're only interested in matching strictly what the user said. These patterns match more aggressively than conversational language understanding (CLU).

- You don't have access to a CLU model, but still want intents.

For more information, see the pattern matching overview.

Prerequisites

Be sure you have the following items before you begin this guide:

- An Azure AI services resource or a Unified Speech resource

- Visual Studio 2019 (any edition).

Speech and simple patterns

Simple patterns are a feature of the Speech SDK and require an Azure AI services resource or a Unified Speech resource.

A pattern is a phrase that includes an Entity somewhere within it. An Entity is defined by wrapping a word in curly brackets. This example defines an Entity with the ID "floorName", which is case-sensitive:

Take me to the {floorName}

All other special characters and punctuation are ignored.
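To make the pattern syntax concrete, the following Java sketch shows one way a pattern with an entity could be turned into a regular expression and matched against an utterance. It is an illustration only and not the Speech SDK's matcher: the class PatternSketch, its methods, and the case-insensitive comparison are assumptions made for this example.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustrative only: a hypothetical stand-in, not the Speech SDK's implementation.
final class PatternSketch {
    // An entity is a word wrapped in curly brackets, for example {floorName}.
    private static final Pattern ENTITY = Pattern.compile("\\{(\\w+)\\}");

    // Drops punctuation (but keeps the curly brackets) and collapses whitespace.
    static String normalize(String text) {
        return text.replaceAll("[^\\p{L}\\p{N}_\\s\\{\\}]", "").trim().replaceAll("\\s+", " ");
    }

    // Returns the entity values keyed by entity ID, or empty when the utterance does not match.
    static Optional<Map<String, String>> match(String pattern, String utterance) {
        String p = normalize(pattern);
        List<String> ids = new ArrayList<>();
        StringBuilder regex = new StringBuilder();
        Matcher m = ENTITY.matcher(p);
        int last = 0;
        while (m.find()) {
            // Literal text is quoted; each entity becomes a capture group.
            regex.append(Pattern.quote(p.substring(last, m.start()))).append("(.+)");
            ids.add(m.group(1));
            last = m.end();
        }
        regex.append(Pattern.quote(p.substring(last)));
        // Assumption: literal words are compared case-insensitively.
        Matcher u = Pattern.compile(regex.toString(), Pattern.CASE_INSENSITIVE)
                .matcher(normalize(utterance));
        if (!u.matches()) {
            return Optional.empty();
        }
        Map<String, String> entities = new LinkedHashMap<>();
        for (int i = 0; i < ids.size(); i++) {
            entities.put(ids.get(i), u.group(i + 1));
        }
        return Optional.of(entities);
    }

    public static void main(String[] args) {
        System.out.println(match("Take me to the {floorName}", "Take me to the lobby!"));
        System.out.println(match("Take me to the {floorName}", "Open the door."));
    }
}
```

In this sketch the entity ID floorName is used as a case-sensitive map key, which mirrors the way the ID is later used to look up the recognized value.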

Intents are added using calls to the IntentRecognizer->AddIntent() API.

Create a project

Create a new C# console application project in Visual Studio 2019 and install the Speech SDK.

Start with some boilerplate code

Let's open Program.cs and add some code that works as a skeleton for our project.

using System;
using System.Threading.Tasks;
using Microsoft.CognitiveServices.Speech;
using Microsoft.CognitiveServices.Speech.Intent;

namespace helloworld
{
    class Program
    {
        static void Main(string[] args)
        {
            IntentPatternMatchingWithMicrophoneAsync().Wait();
        }

        private static async Task IntentPatternMatchingWithMicrophoneAsync()
        {
            var config = SpeechConfig.FromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");
        }
    }
}

Create a Speech configuration

Before you can initialize an IntentRecognizer object, you need to create a configuration that uses the key and location for your Azure AI services prediction resource.

- Replace "YOUR_SUBSCRIPTION_KEY" with your Azure AI services prediction key.

- Replace "YOUR_SUBSCRIPTION_REGION" with your Azure AI services resource region.

This sample uses the FromSubscription() method to build the SpeechConfig. For a full list of available methods, see SpeechConfig Class.

Initialize an IntentRecognizer

Now create an IntentRecognizer. Insert this code right below your Speech configuration.

using (var intentRecognizer = new IntentRecognizer(config))
{
}

Add some intents

You need to associate some patterns with the IntentRecognizer by calling AddIntent().

Add two patterns that share the intent ID ChangeFloors for changing floors, and a third pattern with its own ID, OpenCloseDoor, for opening and closing doors.

Insert this code inside the using block:

intentRecognizer.AddIntent("Take me to floor {floorName}.", "ChangeFloors");
intentRecognizer.AddIntent("Go to floor {floorName}.", "ChangeFloors");
intentRecognizer.AddIntent("{action} the door.", "OpenCloseDoor");

Note

There is no limit to the number of entities you can declare, but they will be loosely matched. If you add a phrase like "{action} door" it will match any time there is text before the word "door". Intents are evaluated based on their number of entities. If two patterns would match, the one with more defined entities is returned.

Recognize an intent

From the IntentRecognizer object, you're going to call the RecognizeOnceAsync() method. This method asks the Speech service to recognize speech in a single phrase, and stop recognizing speech once the phrase is identified. For simplicity, the sample awaits the returned task until it completes.

Insert this code below your intents:

Console.WriteLine("Say something...");
var result = await intentRecognizer.RecognizeOnceAsync();

Display the recognition results (or errors)

When the recognition result is returned by the Speech service, we will print the result.

Insert this code below var result = await intentRecognizer.RecognizeOnceAsync();:

string floorName;
switch (result.Reason)
{
    case ResultReason.RecognizedSpeech:
        Console.WriteLine($"RECOGNIZED: Text= {result.Text}");
        Console.WriteLine($" Intent not recognized.");
        break;
    case ResultReason.RecognizedIntent:
        Console.WriteLine($"RECOGNIZED: Text= {result.Text}");
        Console.WriteLine($" Intent Id= {result.IntentId}.");
        var entities = result.Entities;
        if (entities.TryGetValue("floorName", out floorName))
        {
            Console.WriteLine($" FloorName= {floorName}");
        }
        if (entities.TryGetValue("action", out floorName))
        {
            Console.WriteLine($" Action= {floorName}");
        }
        break;
    case ResultReason.NoMatch:
    {
        Console.WriteLine($"NOMATCH: Speech could not be recognized.");
        var noMatch = NoMatchDetails.FromResult(result);
        switch (noMatch.Reason)
        {
            case NoMatchReason.NotRecognized:
                Console.WriteLine($"NOMATCH: Speech was detected, but not recognized.");
                break;
            case NoMatchReason.InitialSilenceTimeout:
                Console.WriteLine($"NOMATCH: The start of the audio stream contains only silence, and the service timed out waiting for speech.");
                break;
            case NoMatchReason.InitialBabbleTimeout:
                Console.WriteLine($"NOMATCH: The start of the audio stream contains only noise, and the service timed out waiting for speech.");
                break;
            case NoMatchReason.KeywordNotRecognized:
                Console.WriteLine($"NOMATCH: Keyword not recognized");
                break;
        }
        break;
    }
    case ResultReason.Canceled:
    {
        var cancellation = CancellationDetails.FromResult(result);
        Console.WriteLine($"CANCELED: Reason={cancellation.Reason}");
        if (cancellation.Reason == CancellationReason.Error)
        {
            Console.WriteLine($"CANCELED: ErrorCode={cancellation.ErrorCode}");
            Console.WriteLine($"CANCELED: ErrorDetails={cancellation.ErrorDetails}");
            Console.WriteLine($"CANCELED: Did you set the speech resource key and region values?");
        }
        break;
    }
    default:
        break;
}

Check your code

At this point, your code should look like this:

using System;
using System.Threading.Tasks;
using Microsoft.CognitiveServices.Speech;
using Microsoft.CognitiveServices.Speech.Intent;

namespace helloworld
{
    class Program
    {
        static void Main(string[] args)
        {
            IntentPatternMatchingWithMicrophoneAsync().Wait();
        }

        private static async Task IntentPatternMatchingWithMicrophoneAsync()
        {
            var config = SpeechConfig.FromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");
            using (var intentRecognizer = new IntentRecognizer(config))
            {
                intentRecognizer.AddIntent("Take me to floor {floorName}.", "ChangeFloors");
                intentRecognizer.AddIntent("Go to floor {floorName}.", "ChangeFloors");
                intentRecognizer.AddIntent("{action} the door.", "OpenCloseDoor");

                Console.WriteLine("Say something...");
                var result = await intentRecognizer.RecognizeOnceAsync();

                string floorName;
                switch (result.Reason)
                {
                    case ResultReason.RecognizedSpeech:
                        Console.WriteLine($"RECOGNIZED: Text= {result.Text}");
                        Console.WriteLine($" Intent not recognized.");
                        break;
                    case ResultReason.RecognizedIntent:
                        Console.WriteLine($"RECOGNIZED: Text= {result.Text}");
                        Console.WriteLine($" Intent Id= {result.IntentId}.");
                        var entities = result.Entities;
                        if (entities.TryGetValue("floorName", out floorName))
                        {
                            Console.WriteLine($" FloorName= {floorName}");
                        }
                        if (entities.TryGetValue("action", out floorName))
                        {
                            Console.WriteLine($" Action= {floorName}");
                        }
                        break;
                    case ResultReason.NoMatch:
                    {
                        Console.WriteLine($"NOMATCH: Speech could not be recognized.");
                        var noMatch = NoMatchDetails.FromResult(result);
                        switch (noMatch.Reason)
                        {
                            case NoMatchReason.NotRecognized:
                                Console.WriteLine($"NOMATCH: Speech was detected, but not recognized.");
                                break;
                            case NoMatchReason.InitialSilenceTimeout:
                                Console.WriteLine($"NOMATCH: The start of the audio stream contains only silence, and the service timed out waiting for speech.");
                                break;
                            case NoMatchReason.InitialBabbleTimeout:
                                Console.WriteLine($"NOMATCH: The start of the audio stream contains only noise, and the service timed out waiting for speech.");
                                break;
                            case NoMatchReason.KeywordNotRecognized:
                                Console.WriteLine($"NOMATCH: Keyword not recognized");
                                break;
                        }
                        break;
                    }
                    case ResultReason.Canceled:
                    {
                        var cancellation = CancellationDetails.FromResult(result);
                        Console.WriteLine($"CANCELED: Reason={cancellation.Reason}");
                        if (cancellation.Reason == CancellationReason.Error)
                        {
                            Console.WriteLine($"CANCELED: ErrorCode={cancellation.ErrorCode}");
                            Console.WriteLine($"CANCELED: ErrorDetails={cancellation.ErrorDetails}");
                            Console.WriteLine($"CANCELED: Did you set the speech resource key and region values?");
                        }
                        break;
                    }
                    default:
                        break;
                }
            }
        }
    }
}

Build and run your app

Now you're ready to build your app and test our speech recognition using the Speech service.

- Compile the code - From the menu bar of Visual Studio, choose Build > Build Solution.

- Start your app - From the menu bar, choose Debug > Start Debugging or press F5.

- Start recognition - It will prompt you to say something. The default language is English. Your speech is sent to the Speech service, transcribed as text, and rendered in the console.

For example, if you say "Take me to floor 7", this should be the output:

Say something...
RECOGNIZED: Text= Take me to floor 7.
 Intent Id= ChangeFloors.
 FloorName= 7

Create a project

Create a new C++ console application project in Visual Studio 2019 and install the Speech SDK.

Start with some boilerplate code

Let's open helloworld.cpp and add some code that works as a skeleton for our project.

#include <iostream>
#include <speechapi_cxx.h>

using namespace Microsoft::CognitiveServices::Speech;
using namespace Microsoft::CognitiveServices::Speech::Intent;

int main()
{
    std::cout << "Hello World!\n";

    auto config = SpeechConfig::FromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");
}

Create a Speech configuration

Before you can initialize an IntentRecognizer object, you need to create a configuration that uses the key and location for your Azure AI services prediction resource.

- Replace "YOUR_SUBSCRIPTION_KEY" with your Azure AI services prediction key.

- Replace "YOUR_SUBSCRIPTION_REGION" with your Azure AI services resource region.

This sample uses the FromSubscription() method to build the SpeechConfig. For a full list of available methods, see SpeechConfig Class.

Initialize an IntentRecognizer

Now create an IntentRecognizer. Insert this code right below your Speech configuration.

auto intentRecognizer = IntentRecognizer::FromConfig(config);

Add some intents

You need to associate some patterns with the IntentRecognizer by calling AddIntent().

Add two patterns that share the intent ID ChangeFloors for changing floors, and a third pattern with its own ID, OpenCloseDoor, for opening and closing doors.

intentRecognizer->AddIntent("Take me to floor {floorName}.", "ChangeFloors");
intentRecognizer->AddIntent("Go to floor {floorName}.", "ChangeFloors");
intentRecognizer->AddIntent("{action} the door.", "OpenCloseDoor");

Note

There is no limit to the number of entities you can declare, but they will be loosely matched. If you add a phrase like "{action} door" it will match any time there is text before the word "door". Intents are evaluated based on their number of entities. If two patterns would match, the one with more defined entities is returned.

Recognize an intent

From the IntentRecognizer object, you're going to call the RecognizeOnceAsync() method. This method asks the Speech service to recognize speech in a single phrase, and stop recognizing speech once the phrase is identified. For simplicity, we'll wait for the returned future to complete.

Insert this code below your intents:

std::cout << "Say something ..." << std::endl;
auto result = intentRecognizer->RecognizeOnceAsync().get();

Display the recognition results (or errors)

When the recognition result is returned by the Speech service, we will print the result.

Insert this code below auto result = intentRecognizer->RecognizeOnceAsync().get();:

switch (result->Reason)
{
case ResultReason::RecognizedSpeech:
    std::cout << "RECOGNIZED: Text = " << result->Text.c_str() << std::endl;
    std::cout << "NO INTENT RECOGNIZED!" << std::endl;
    break;
case ResultReason::RecognizedIntent:
{
    // The braces scope `entities` to this case so that later case labels
    // don't jump past its initialization, which would not compile.
    std::cout << "RECOGNIZED: Text = " << result->Text.c_str() << std::endl;
    std::cout << " Intent Id = " << result->IntentId.c_str() << std::endl;
    auto entities = result->GetEntities();
    if (entities.find("floorName") != entities.end())
    {
        std::cout << " Floor name: = " << entities["floorName"].c_str() << std::endl;
    }
    if (entities.find("action") != entities.end())
    {
        std::cout << " Action: = " << entities["action"].c_str() << std::endl;
    }
    break;
}
case ResultReason::NoMatch:
{
    auto noMatch = NoMatchDetails::FromResult(result);
    switch (noMatch->Reason)
    {
    case NoMatchReason::NotRecognized:
        std::cout << "NOMATCH: Speech was detected, but not recognized." << std::endl;
        break;
    case NoMatchReason::InitialSilenceTimeout:
        std::cout << "NOMATCH: The start of the audio stream contains only silence, and the service timed out waiting for speech." << std::endl;
        break;
    case NoMatchReason::InitialBabbleTimeout:
        std::cout << "NOMATCH: The start of the audio stream contains only noise, and the service timed out waiting for speech." << std::endl;
        break;
    case NoMatchReason::KeywordNotRecognized:
        std::cout << "NOMATCH: Keyword not recognized" << std::endl;
        break;
    }
    break;
}
case ResultReason::Canceled:
{
    auto cancellation = CancellationDetails::FromResult(result);
    if (!cancellation->ErrorDetails.empty())
    {
        std::cout << "CANCELED: ErrorDetails=" << cancellation->ErrorDetails.c_str() << std::endl;
        std::cout << "CANCELED: Did you set the speech resource key and region values?" << std::endl;
    }
    break;
}
default:
    break;
}

Check your code

At this point, your code should look like this:

#include <iostream>
#include <speechapi_cxx.h>

using namespace Microsoft::CognitiveServices::Speech;
using namespace Microsoft::CognitiveServices::Speech::Intent;

int main()
{
    auto config = SpeechConfig::FromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");
    auto intentRecognizer = IntentRecognizer::FromConfig(config);

    intentRecognizer->AddIntent("Take me to floor {floorName}.", "ChangeFloors");
    intentRecognizer->AddIntent("Go to floor {floorName}.", "ChangeFloors");
    intentRecognizer->AddIntent("{action} the door.", "OpenCloseDoor");

    std::cout << "Say something ..." << std::endl;
    auto result = intentRecognizer->RecognizeOnceAsync().get();

    switch (result->Reason)
    {
    case ResultReason::RecognizedSpeech:
        std::cout << "RECOGNIZED: Text = " << result->Text.c_str() << std::endl;
        std::cout << "NO INTENT RECOGNIZED!" << std::endl;
        break;
    case ResultReason::RecognizedIntent:
    {
        // The braces scope `entities` to this case so that later case labels
        // don't jump past its initialization, which would not compile.
        std::cout << "RECOGNIZED: Text = " << result->Text.c_str() << std::endl;
        std::cout << " Intent Id = " << result->IntentId.c_str() << std::endl;
        auto entities = result->GetEntities();
        if (entities.find("floorName") != entities.end())
        {
            std::cout << " Floor name: = " << entities["floorName"].c_str() << std::endl;
        }
        if (entities.find("action") != entities.end())
        {
            std::cout << " Action: = " << entities["action"].c_str() << std::endl;
        }
        break;
    }
    case ResultReason::NoMatch:
    {
        auto noMatch = NoMatchDetails::FromResult(result);
        switch (noMatch->Reason)
        {
        case NoMatchReason::NotRecognized:
            std::cout << "NOMATCH: Speech was detected, but not recognized." << std::endl;
            break;
        case NoMatchReason::InitialSilenceTimeout:
            std::cout << "NOMATCH: The start of the audio stream contains only silence, and the service timed out waiting for speech." << std::endl;
            break;
        case NoMatchReason::InitialBabbleTimeout:
            std::cout << "NOMATCH: The start of the audio stream contains only noise, and the service timed out waiting for speech." << std::endl;
            break;
        case NoMatchReason::KeywordNotRecognized:
            std::cout << "NOMATCH: Keyword not recognized" << std::endl;
            break;
        }
        break;
    }
    case ResultReason::Canceled:
    {
        auto cancellation = CancellationDetails::FromResult(result);
        if (!cancellation->ErrorDetails.empty())
        {
            std::cout << "CANCELED: ErrorDetails=" << cancellation->ErrorDetails.c_str() << std::endl;
            std::cout << "CANCELED: Did you set the speech resource key and region values?" << std::endl;
        }
        break;
    }
    default:
        break;
    }
}

Build and run your app

Now you're ready to build your app and test our speech recognition using the Speech service.

- Compile the code - From the menu bar of Visual Studio, choose Build > Build Solution.

- Start your app - From the menu bar, choose Debug > Start Debugging or press F5.

- Start recognition - It will prompt you to say something. The default language is English. Your speech is sent to the Speech service, transcribed as text, and rendered in the console.

For example if you say "Take me to floor 7", this should be the output:

Say something ...

RECOGNIZED: Text = Take me to floor 7.

Intent Id = ChangeFloors

Floor name: = 7

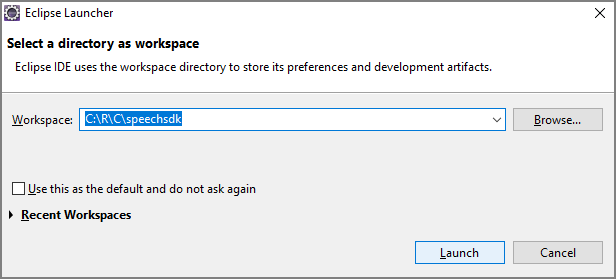

Reference documentation | Additional Samples on GitHub

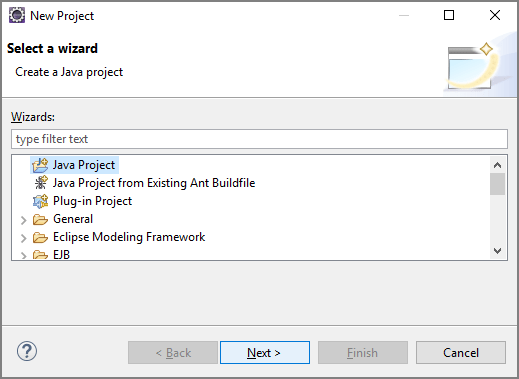

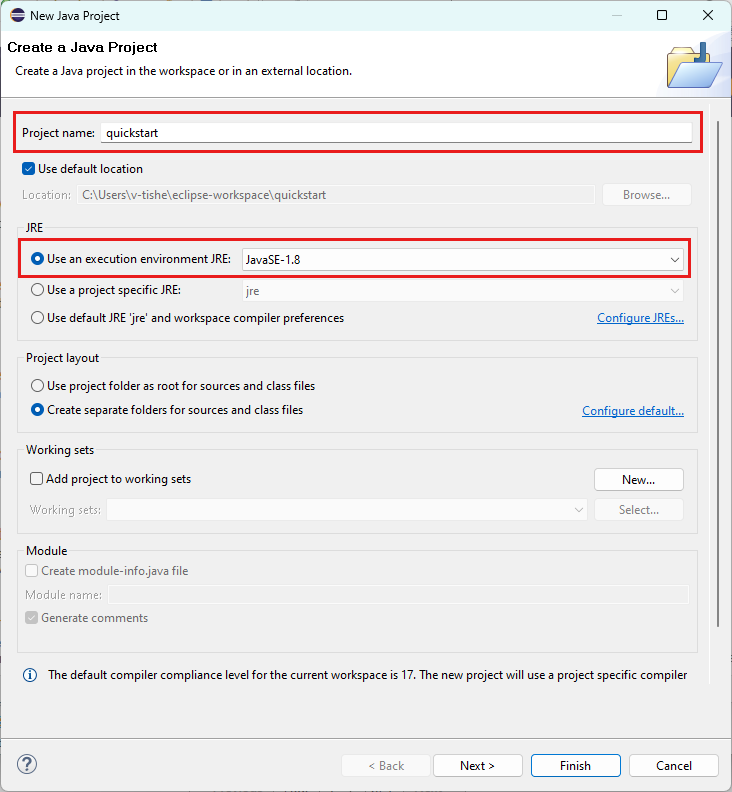

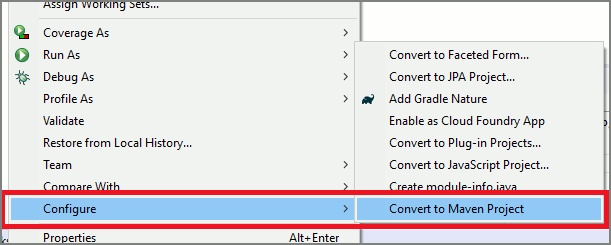

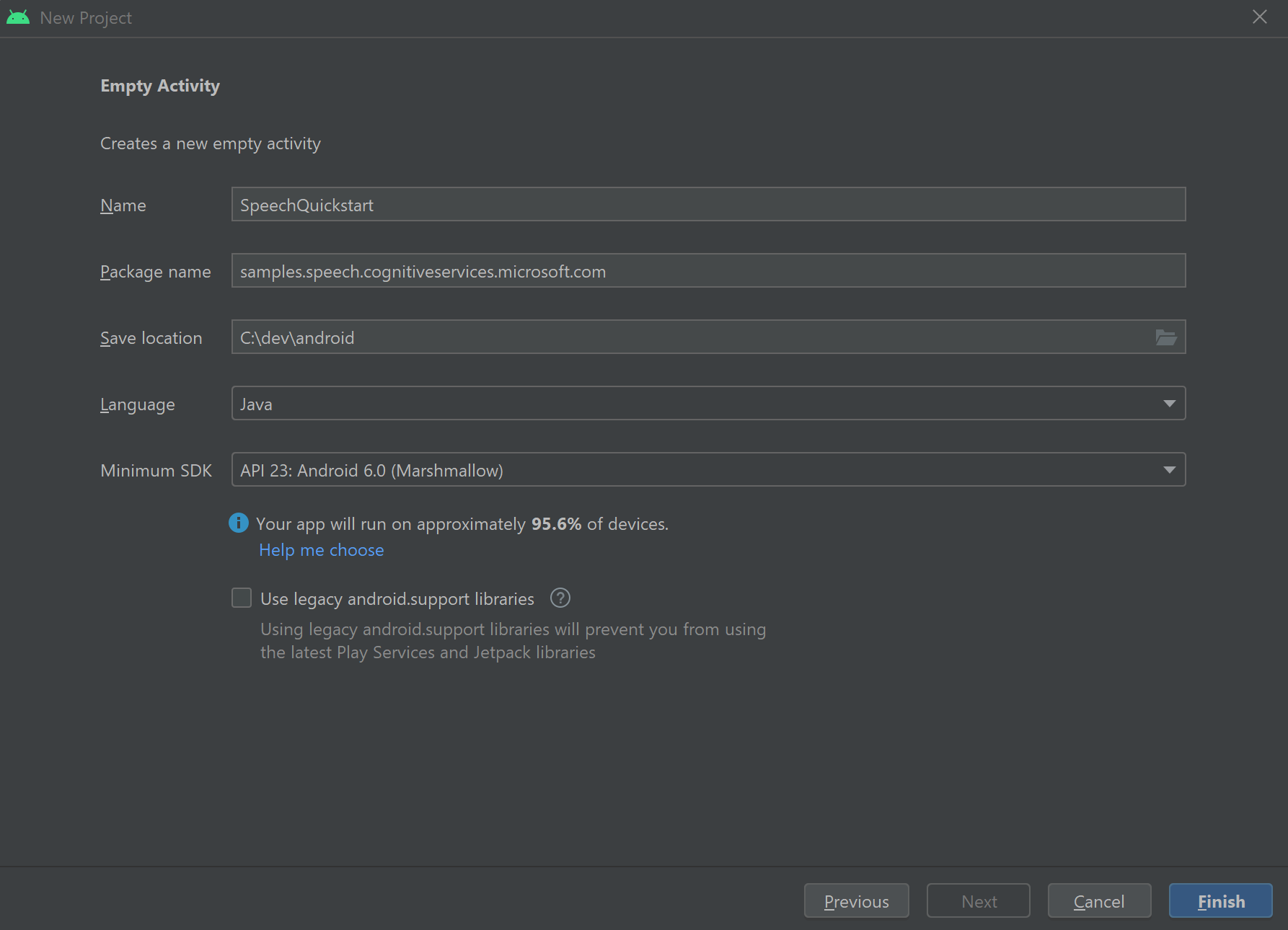

In this quickstart, you install the Speech SDK for Java.

Platform requirements


The Speech SDK for Java is compatible with Windows, Linux, and macOS.

On Windows, you must use the 64-bit target architecture. Windows 10 or later is required.

Install the Microsoft Visual C++ Redistributable for Visual Studio 2015, 2017, 2019, and 2022 for your platform. Installing this package for the first time might require a restart.

The Speech SDK for Java doesn't support Windows on ARM64.

Install a Java Development Kit such as Azul Zulu OpenJDK. The Microsoft Build of OpenJDK or your preferred JDK should also work.

Install the Speech SDK for Java

Some of the instructions use a specific SDK version such as 1.24.2. To check the latest version, search our GitHub repository.


This guide shows how to install the Speech SDK for Java on the Java Runtime.

Supported operating systems

The Speech SDK for Java package is available for these operating systems:

- Windows: 64-bit only.

- Mac: macOS X version 10.14 or later.

- Linux: See the supported Linux distributions and target architectures.

Follow these steps to install the Speech SDK for Java using Apache Maven:

Install Apache Maven.

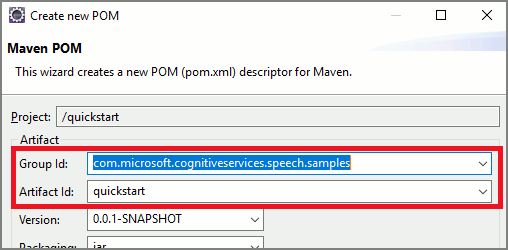

Open a command prompt where you want the new project, and create a new pom.xml file.

Copy the following XML content into pom.xml:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.microsoft.cognitiveservices.speech.samples</groupId>
    <artifactId>quickstart-eclipse</artifactId>
    <version>1.0.0-SNAPSHOT</version>
    <build>
        <sourceDirectory>src</sourceDirectory>
        <plugins>
            <plugin>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.7.0</version>
                <configuration>
                    <source>1.8</source>
                    <target>1.8</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
    <dependencies>
        <dependency>
            <groupId>com.microsoft.cognitiveservices.speech</groupId>
            <artifactId>client-sdk</artifactId>
            <version>1.38.0</version>
        </dependency>
    </dependencies>
</project>

Run the following Maven command to install the Speech SDK and dependencies.

mvn clean dependency:copy-dependencies

Start with some boilerplate code

Open Main.java from the src directory. Replace the contents of the file with the following:

package quickstart;

import java.util.Dictionary;
import java.util.concurrent.ExecutionException;

import com.microsoft.cognitiveservices.speech.*;
import com.microsoft.cognitiveservices.speech.intent.*;

// The public class name must match the file name, Main.java.
public class Main {
    public static void main(String[] args) throws InterruptedException, ExecutionException {
        IntentPatternMatchingWithMicrophone();
    }

    public static void IntentPatternMatchingWithMicrophone() throws InterruptedException, ExecutionException {
        SpeechConfig config = SpeechConfig.fromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");
    }
}

Create a Speech configuration

Before you can initialize an IntentRecognizer object, you need to create a configuration that uses the key and location for your Azure AI services prediction resource.

- Replace "YOUR_SUBSCRIPTION_KEY" with your Azure AI services prediction key.

- Replace "YOUR_SUBSCRIPTION_REGION" with your Azure AI services resource region.

This sample uses the fromSubscription() method to build the SpeechConfig. For a full list of available methods, see SpeechConfig Class.
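Rather than pasting the key and region into source code, you might read them from environment variables. The sketch below shows one way to do that and fail early when a value is missing. The variable names SPEECH_KEY and SPEECH_REGION and the class SpeechSettingsSketch are assumptions for this example, not names required by the Speech SDK.

```java
import java.util.Map;

// Illustrative only: SPEECH_KEY and SPEECH_REGION are assumed variable names.
final class SpeechSettingsSketch {
    final String key;
    final String region;

    private SpeechSettingsSketch(String key, String region) {
        this.key = key;
        this.region = region;
    }

    // Reads both values from the given environment map and fails fast if either is missing.
    static SpeechSettingsSketch fromEnvironment(Map<String, String> env) {
        return new SpeechSettingsSketch(require(env, "SPEECH_KEY"), require(env, "SPEECH_REGION"));
    }

    private static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalStateException("Set the " + name + " environment variable.");
        }
        return value.trim();
    }

    public static void main(String[] args) {
        try {
            SpeechSettingsSketch settings = fromEnvironment(System.getenv());
            // The values would then be passed on, for example:
            // SpeechConfig.fromSubscription(settings.key, settings.region)
            System.out.println("Using region: " + settings.region);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Taking the environment as a Map parameter keeps the lookup easy to exercise without changing real environment variables.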

Initialize an IntentRecognizer

Now create an IntentRecognizer. Insert this code right below your Speech configuration.

try (IntentRecognizer intentRecognizer = new IntentRecognizer(config)) {
}

Add some intents

You need to associate some patterns with the IntentRecognizer by calling addIntent().

Add two patterns that share the intent ID ChangeFloors for changing floors, and a third pattern with its own ID, OpenCloseDoor, for opening and closing doors.

Insert this code inside the try block:

intentRecognizer.addIntent("Take me to floor {floorName}.", "ChangeFloors");
intentRecognizer.addIntent("Go to floor {floorName}.", "ChangeFloors");
intentRecognizer.addIntent("{action} the door.", "OpenCloseDoor");

Note

There is no limit to the number of entities you can declare, but they will be loosely matched. If you add a phrase like "{action} door" it will match any time there is text before the word "door". Intents are evaluated based on their number of entities. If two patterns would match, the one with more defined entities is returned.
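The selection rule in the note can be sketched in a few lines of Java. This is a simplified illustration and not the SDK's matcher: the class IntentPrioritySketch, its methods, the case-insensitive comparison, and the way ties are resolved are all assumptions, and punctuation handling is left out for brevity.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;
import java.util.regex.Pattern;

// Illustrative only: hypothetical helper names, not the Speech SDK's implementation.
final class IntentPrioritySketch {
    private static final String ENTITY = "\\{\\w+\\}";

    // Counts the {entity} placeholders declared in a pattern.
    static int entityCount(String pattern) {
        return pattern.split(ENTITY, -1).length - 1;
    }

    // Loose match: every {entity} accepts any non-empty text.
    static boolean matches(String pattern, String utterance) {
        String[] literals = pattern.split(ENTITY, -1);
        StringBuilder regex = new StringBuilder(Pattern.quote(literals[0]));
        for (int i = 1; i < literals.length; i++) {
            regex.append(".+").append(Pattern.quote(literals[i]));
        }
        return Pattern.compile(regex.toString(), Pattern.CASE_INSENSITIVE)
                .matcher(utterance).matches();
    }

    // Among the patterns that match, returns the one that declares the most entities.
    static Optional<String> best(List<String> patterns, String utterance) {
        return patterns.stream()
                .filter(p -> matches(p, utterance))
                .max(Comparator.comparingInt(IntentPrioritySketch::entityCount));
    }

    public static void main(String[] args) {
        List<String> patterns = Arrays.asList("{action} door", "{action} the {object}");
        // Both patterns match; the one with two entities is preferred.
        System.out.println(best(patterns, "open the door"));
    }
}
```

Here "{action} door" matches "open the door" because any text before the word "door" is accepted, which is the loose matching the note describes.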

Recognize an intent

From the IntentRecognizer object, you're going to call the recognizeOnceAsync() method. This method asks the Speech service to recognize speech in a single phrase, and stop recognizing speech once the phrase is identified. For simplicity we'll wait on the future returned to complete.

Insert this code below your intents:

System.out.println("Say something...");
IntentRecognitionResult result = intentRecognizer.recognizeOnceAsync().get();

Display the recognition results (or errors)

When the recognition result is returned by the Speech service, we will print the result.

Insert this code below IntentRecognitionResult result = intentRecognizer.recognizeOnceAsync().get();:

if (result.getReason() == ResultReason.RecognizedSpeech) {
    System.out.println("RECOGNIZED: Text= " + result.getText());
    System.out.println(String.format("%17s", "Intent not recognized."));
}
else if (result.getReason() == ResultReason.RecognizedIntent) {
    System.out.println("RECOGNIZED: Text= " + result.getText());
    System.out.println(String.format("%17s %s", "Intent Id=", result.getIntentId() + "."));
    Dictionary<String, String> entities = result.getEntities();
    if (entities.get("floorName") != null) {
        System.out.println(String.format("%17s %s", "FloorName=", entities.get("floorName")));
    }
    if (entities.get("action") != null) {
        System.out.println(String.format("%17s %s", "Action=", entities.get("action")));
    }
}
else if (result.getReason() == ResultReason.NoMatch) {
    System.out.println("NOMATCH: Speech could not be recognized.");
}
else if (result.getReason() == ResultReason.Canceled) {
    CancellationDetails cancellation = CancellationDetails.fromResult(result);
    System.out.println("CANCELED: Reason=" + cancellation.getReason());
    if (cancellation.getReason() == CancellationReason.Error)
    {
        System.out.println("CANCELED: ErrorCode=" + cancellation.getErrorCode());
        System.out.println("CANCELED: ErrorDetails=" + cancellation.getErrorDetails());
        System.out.println("CANCELED: Did you update the subscription info?");
    }
}
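Printing the result is enough for this guide, but an application would normally act on the intent ID. The following sketch shows one possible way to route an intent ID and its entities to a handler. The class IntentDispatchSketch, the handler text, and the use of a Map for the entities (copied from the recognition result) are assumptions for illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Illustrative only: the handlers and their return strings are hypothetical.
final class IntentDispatchSketch {
    private final Map<String, Function<Map<String, String>, String>> handlers = new HashMap<>();

    // Associates an intent ID such as "ChangeFloors" with the code that handles it.
    void register(String intentId, Function<Map<String, String>, String> handler) {
        handlers.put(intentId, handler);
    }

    // Runs the handler registered for the intent ID, or reports that none exists.
    String dispatch(String intentId, Map<String, String> entities) {
        Function<Map<String, String>, String> handler = handlers.get(intentId);
        return handler == null ? "No handler for " + intentId : handler.apply(entities);
    }

    // Builds a dispatcher for the two intent IDs used in this guide.
    static IntentDispatchSketch elevator() {
        IntentDispatchSketch dispatcher = new IntentDispatchSketch();
        dispatcher.register("ChangeFloors", e -> "Moving to floor " + e.getOrDefault("floorName", "?"));
        dispatcher.register("OpenCloseDoor", e -> "Door action: " + e.getOrDefault("action", "?"));
        return dispatcher;
    }

    public static void main(String[] args) {
        Map<String, String> entities = new HashMap<>();
        entities.put("floorName", "7");
        System.out.println(elevator().dispatch("ChangeFloors", entities)); // Moving to floor 7
    }
}
```

Because both floor patterns share the ChangeFloors ID, a single handler covers "Take me to floor 7" and "Go to floor 7".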

Check your code

At this point, your code should look like this:

package quickstart;

import java.util.Dictionary;
import java.util.concurrent.ExecutionException;

import com.microsoft.cognitiveservices.speech.*;
import com.microsoft.cognitiveservices.speech.intent.*;

public class Main {
    public static void main(String[] args) throws InterruptedException, ExecutionException {
        IntentPatternMatchingWithMicrophone();
    }

    public static void IntentPatternMatchingWithMicrophone() throws InterruptedException, ExecutionException {
        SpeechConfig config = SpeechConfig.fromSubscription("YOUR_SUBSCRIPTION_KEY", "YOUR_SUBSCRIPTION_REGION");

        try (IntentRecognizer intentRecognizer = new IntentRecognizer(config)) {
            intentRecognizer.addIntent("Take me to floor {floorName}.", "ChangeFloors");
            intentRecognizer.addIntent("Go to floor {floorName}.", "ChangeFloors");
            intentRecognizer.addIntent("{action} the door.", "OpenCloseDoor");

            System.out.println("Say something...");
            IntentRecognitionResult result = intentRecognizer.recognizeOnceAsync().get();

            if (result.getReason() == ResultReason.RecognizedSpeech) {
                System.out.println("RECOGNIZED: Text= " + result.getText());
                System.out.println(String.format("%17s", "Intent not recognized."));
            }
            else if (result.getReason() == ResultReason.RecognizedIntent) {
                System.out.println("RECOGNIZED: Text= " + result.getText());
                System.out.println(String.format("%17s %s", "Intent Id=", result.getIntentId() + "."));
                Dictionary<String, String> entities = result.getEntities();
                if (entities.get("floorName") != null) {
                    System.out.println(String.format("%17s %s", "FloorName=", entities.get("floorName")));
                }
                if (entities.get("action") != null) {
                    System.out.println(String.format("%17s %s", "Action=", entities.get("action")));
                }
            }
            else if (result.getReason() == ResultReason.NoMatch) {
                System.out.println("NOMATCH: Speech could not be recognized.");
            }
            else if (result.getReason() == ResultReason.Canceled) {
                CancellationDetails cancellation = CancellationDetails.fromResult(result);
                System.out.println("CANCELED: Reason=" + cancellation.getReason());
                if (cancellation.getReason() == CancellationReason.Error)
                {
                    System.out.println("CANCELED: ErrorCode=" + cancellation.getErrorCode());
                    System.out.println("CANCELED: ErrorDetails=" + cancellation.getErrorDetails());
                    System.out.println("CANCELED: Did you update the subscription info?");
                }
            }
        }
    }
}

Build and run your app

Now you're ready to build your app and test intent recognition using the Speech service and the embedded pattern matcher.

Select the run button in Eclipse or press Ctrl+F11, then watch the output for the "Say something..." prompt. Once it appears, speak your utterance and watch the output.

For example, if you say "Take me to floor 7", this should be the output:

Say something...
RECOGNIZED: Text= Take me to floor 7.
       Intent Id= ChangeFloors.
       FloorName= 7
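Entity values arrive as text, and because entities are loosely matched, floorName could hold something other than a number. Before acting on it, an application might validate the value. The sketch below is one hypothetical way to do that; the class FloorEntitySketch and the floor range are assumptions for this example.

```java
import java.util.OptionalInt;

// Illustrative only: the class name and the floor limits are hypothetical.
final class FloorEntitySketch {
    // Parses a recognized floorName value such as "7"; returns empty for anything
    // that is not a whole number between lowest and highest (inclusive).
    static OptionalInt parseFloor(String floorName, int lowest, int highest) {
        if (floorName == null) {
            return OptionalInt.empty();
        }
        String digits = floorName.trim();
        if (!digits.matches("\\d{1,3}")) {
            return OptionalInt.empty();
        }
        int floor = Integer.parseInt(digits);
        return floor >= lowest && floor <= highest ? OptionalInt.of(floor) : OptionalInt.empty();
    }

    public static void main(String[] args) {
        System.out.println(parseFloor("7", 1, 20).isPresent());        // true
        System.out.println(parseFloor("the roof", 1, 20).isPresent()); // false
    }
}
```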

Next steps

Improve your pattern matching by using custom entities.
