Monitor Nexus Kubernetes cluster

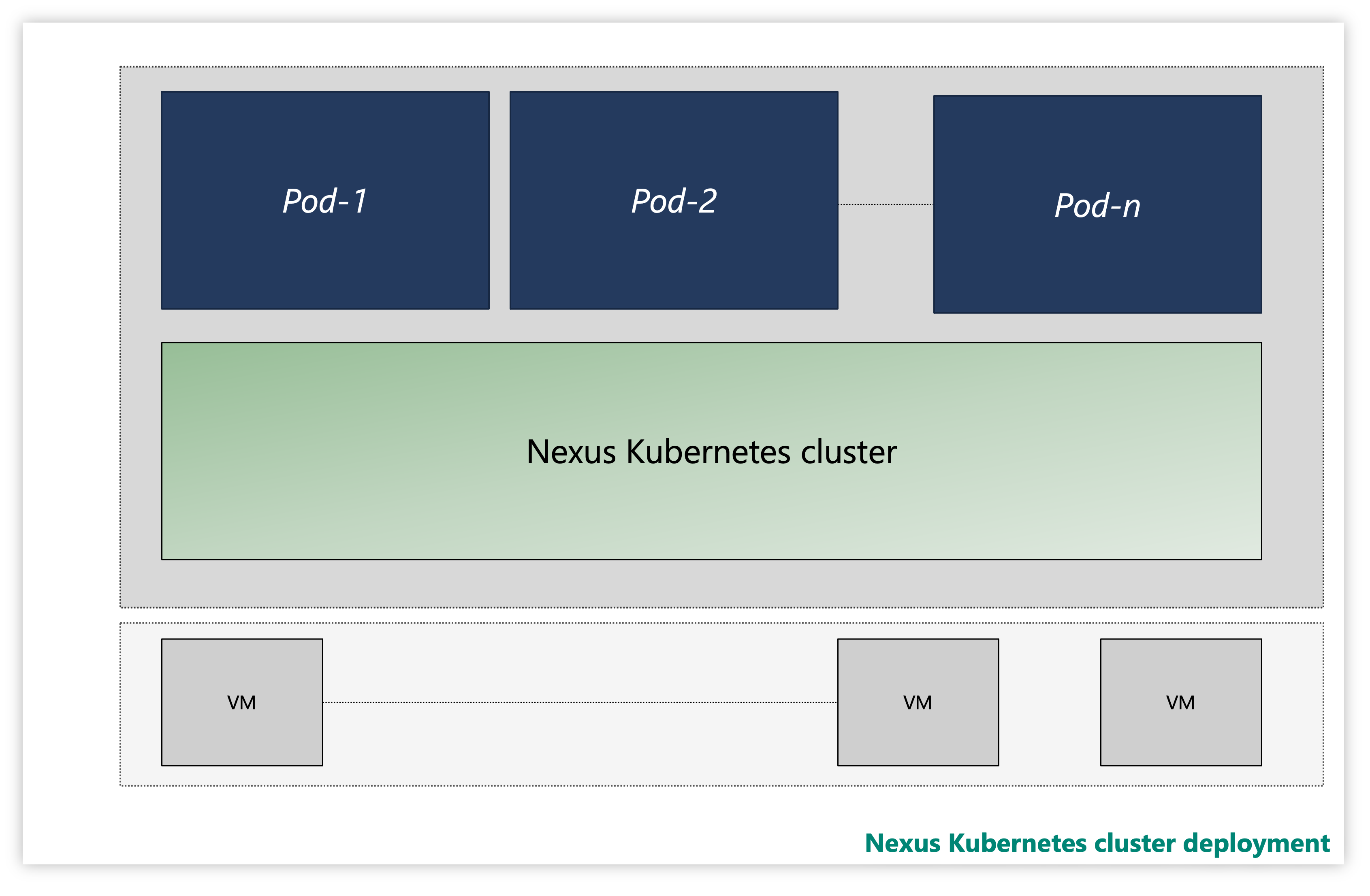

Each Nexus Kubernetes cluster consists of multiple layers:

- Virtual Machines (VMs)

- Kubernetes layer

- Application pods

Figure: Sample Nexus Kubernetes cluster

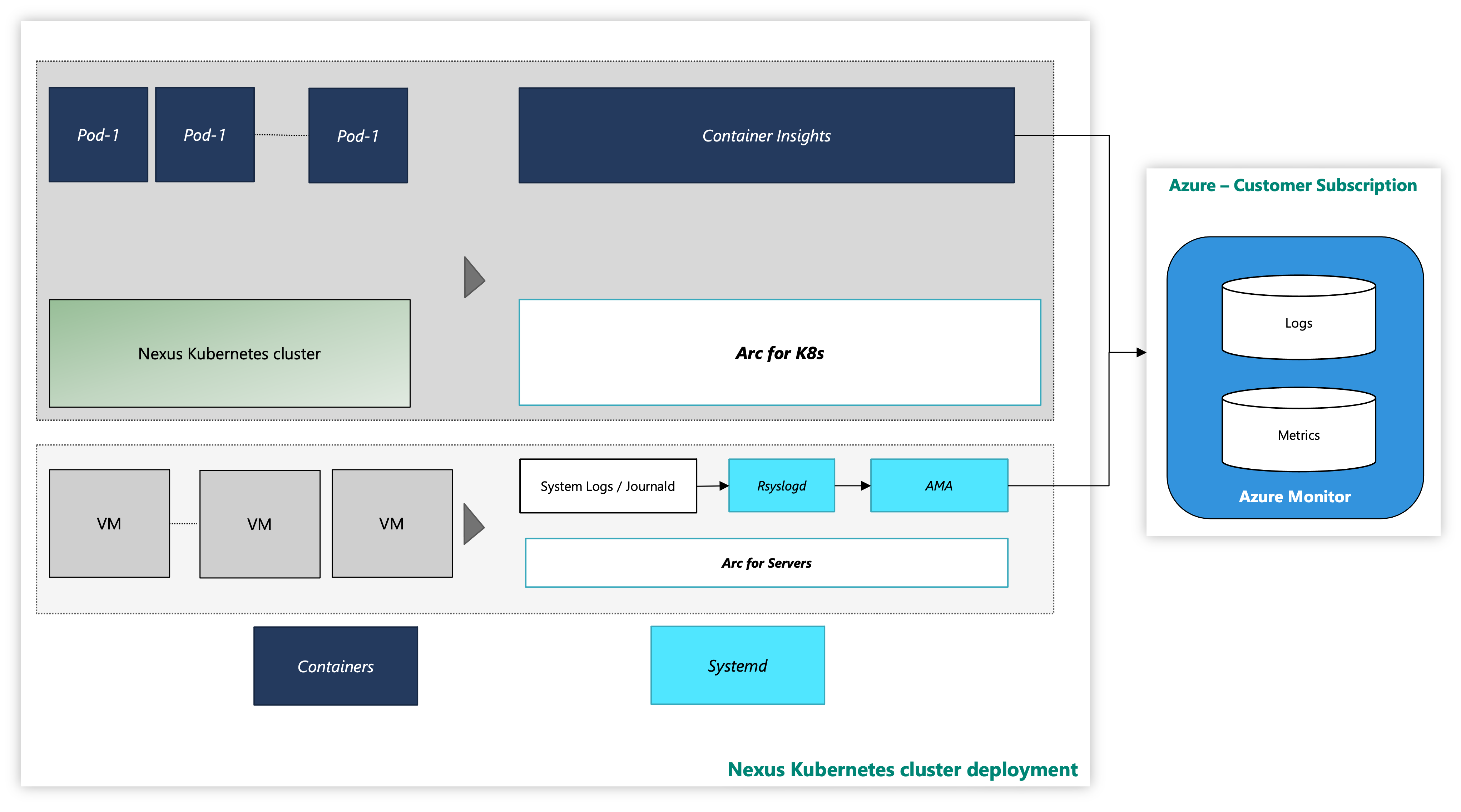

On an instance, Nexus Kubernetes clusters are delivered with an optional Container Insights observability solution. Container Insights captures the logs and metrics from Nexus Kubernetes clusters and workloads. It's solely at your discretion whether to enable this tooling or deploy your own telemetry stack.

A Nexus Kubernetes cluster with the Azure monitoring tools looks like:

Figure: Nexus Kubernetes cluster with Monitoring Tools

Extension onboarding with CLI using managed identity auth

See the Azure CLI documentation for how to get started with the Azure CLI, how to install it across multiple operating systems, and how to install CLI extensions.

Install the latest version of the necessary CLI extensions.

Monitor Nexus Kubernetes cluster – VM layer

This how-to guide provides steps and utility scripts to Arc connect the Nexus Kubernetes cluster virtual machines to Azure and to enable monitoring agents that collect system logs from these VMs using the Azure Monitoring Agent (AMA). The instructions also describe how to set up log data collection into a Log Analytics workspace.

The following resources provide you with support:

arc-connect.env: use this template file to create environment variables needed by included scripts

    export SUBSCRIPTION_ID=""
    export SERVICE_PRINCIPAL_ID=""
    export SERVICE_PRINCIPAL_SECRET=""
    export RESOURCE_GROUP=""
    export TENANT_ID=""
    export LOCATION=""
    export INSTALL_AZURE_MONITOR_AGENT="true"
    export PROXY_URL=""
    export NAMESPACE=""
    export AZURE_MONITOR_AGENT_VERSION="1.24.2"
    export CONNECTEDMACHINE_AZCLI_VERSION="0.6.0"

dcr.sh: use this script to create a Data Collection Rule (DCR) to configure syslog collection

    #!/bin/bash
    set -e

    SUBSCRIPTION_ID="${SUBSCRIPTION_ID:?SUBSCRIPTION_ID must be set}"
    SERVICE_PRINCIPAL_ID="${SERVICE_PRINCIPAL_ID:?SERVICE_PRINCIPAL_ID must be set}"
    SERVICE_PRINCIPAL_SECRET="${SERVICE_PRINCIPAL_SECRET:?SERVICE_PRINCIPAL_SECRET must be set}"
    RESOURCE_GROUP="${RESOURCE_GROUP:?RESOURCE_GROUP must be set}"
    TENANT_ID="${TENANT_ID:?TENANT_ID must be set}"
    LOCATION="${LOCATION:?LOCATION must be set}"
    LAW_RESOURCE_ID="${LAW_RESOURCE_ID:?LAW_RESOURCE_ID must be set}"
    DCR_NAME=${DCR_NAME:-${RESOURCE_GROUP}-syslog-dcr}

    az login --service-principal -u "${SERVICE_PRINCIPAL_ID}" -p "${SERVICE_PRINCIPAL_SECRET}" -t "${TENANT_ID}"

    az account set -s "${SUBSCRIPTION_ID}"

    az extension add --name monitor-control-service

    RULEFILE=$(mktemp)
    tee "${RULEFILE}" <<EOF
    {
      "location": "${LOCATION}",
      "properties": {
        "dataSources": {
          "syslog": [
            {
              "name": "syslog",
              "streams": [
                "Microsoft-Syslog"
              ],
              "facilityNames": [
                "auth",
                "authpriv",
                "cron",
                "daemon",
                "mark",
                "kern",
                "local0",
                "local1",
                "local2",
                "local3",
                "local4",
                "local5",
                "local6",
                "local7",
                "lpr",
                "mail",
                "news",
                "syslog",
                "user",
                "uucp"
              ],
              "logLevels": [
                "Info",
                "Notice",
                "Warning",
                "Error",
                "Critical",
                "Alert",
                "Emergency"
              ]
            }
          ]
        },
        "destinations": {
          "logAnalytics": [
            {
              "workspaceResourceId": "${LAW_RESOURCE_ID}",
              "name": "centralWorkspace"
            }
          ]
        },
        "dataFlows": [
          {
            "streams": [
              "Microsoft-Syslog"
            ],
            "destinations": [
              "centralWorkspace"
            ]
          }
        ]
      }
    }
    EOF

    az monitor data-collection rule create --name "${DCR_NAME}" --resource-group "${RESOURCE_GROUP}" --location "${LOCATION}" --rule-file "${RULEFILE}" -o tsv --query id

    rm -rf "${RULEFILE}"
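If you edit the rule file, for example to narrow the log levels, it can help to check that each data flow still refers to a stream and a destination defined elsewhere in the rule. The following is a minimal sketch of such a check, not part of the provided scripts. The helper names `build_syslog_dcr` and `unresolved_references` are hypothetical, the facility list is shortened, and `eastus` and `<LAW_RESOURCE_ID>` are placeholder values.

```python
import json


def build_syslog_dcr(location, workspace_resource_id, log_levels):
    """Build a DCR body with the same shape as the rule file written by dcr.sh."""
    return {
        "location": location,
        "properties": {
            "dataSources": {
                "syslog": [
                    {
                        "name": "syslog",
                        "streams": ["Microsoft-Syslog"],
                        # Shortened for the example; dcr.sh lists every facility.
                        "facilityNames": ["auth", "daemon", "kern", "syslog"],
                        "logLevels": list(log_levels),
                    }
                ]
            },
            "destinations": {
                "logAnalytics": [
                    {
                        "workspaceResourceId": workspace_resource_id,
                        "name": "centralWorkspace",
                    }
                ]
            },
            "dataFlows": [
                {
                    "streams": ["Microsoft-Syslog"],
                    "destinations": ["centralWorkspace"],
                }
            ],
        },
    }


def unresolved_references(rule):
    """Return data-flow streams or destinations that the rule never defines."""
    props = rule["properties"]
    defined_streams = {
        stream
        for sources in props["dataSources"].values()
        for source in sources
        for stream in source["streams"]
    }
    defined_destinations = {
        dest["name"]
        for dests in props["destinations"].values()
        for dest in dests
    }
    problems = []
    for flow in props["dataFlows"]:
        problems += [s for s in flow["streams"] if s not in defined_streams]
        problems += [d for d in flow["destinations"] if d not in defined_destinations]
    return problems


if __name__ == "__main__":
    rule = build_syslog_dcr("eastus", "<LAW_RESOURCE_ID>", ["Warning", "Error"])
    print(json.dumps(rule, indent=2))
    print("Unresolved references:", unresolved_references(rule))
```

An empty list from `unresolved_references` means that every data flow points at a defined stream and destination. It doesn't guarantee that Azure accepts the rule.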

assign.sh: use the script to create a policy to associate the DCR with all Arc-enabled servers in a resource group

    #!/bin/bash
    set -e

    SUBSCRIPTION_ID="${SUBSCRIPTION_ID:?SUBSCRIPTION_ID must be set}"
    SERVICE_PRINCIPAL_ID="${SERVICE_PRINCIPAL_ID:?SERVICE_PRINCIPAL_ID must be set}"
    SERVICE_PRINCIPAL_SECRET="${SERVICE_PRINCIPAL_SECRET:?SERVICE_PRINCIPAL_SECRET must be set}"
    RESOURCE_GROUP="${RESOURCE_GROUP:?RESOURCE_GROUP must be set}"
    TENANT_ID="${TENANT_ID:?TENANT_ID must be set}"
    LOCATION="${LOCATION:?LOCATION must be set}"
    DCR_NAME=${DCR_NAME:-${RESOURCE_GROUP}-syslog-dcr}
    POLICY_NAME=${POLICY_NAME:-${DCR_NAME}-policy}

    az login --service-principal -u "${SERVICE_PRINCIPAL_ID}" -p "${SERVICE_PRINCIPAL_SECRET}" -t "${TENANT_ID}"

    az account set -s "${SUBSCRIPTION_ID}"

    DCR=$(az monitor data-collection rule show --name "${DCR_NAME}" --resource-group "${RESOURCE_GROUP}" -o tsv --query id)

    PRINCIPAL=$(az policy assignment create \
      --name "${POLICY_NAME}" \
      --display-name "${POLICY_NAME}" \
      --resource-group "${RESOURCE_GROUP}" \
      --location "${LOCATION}" \
      --policy "d5c37ce1-5f52-4523-b949-f19bf945b73a" \
      --assign-identity \
      -p "{\"dcrResourceId\":{\"value\":\"${DCR}\"}}" \
      -o tsv --query identity.principalId)

    required_roles=$(az policy definition show -n "d5c37ce1-5f52-4523-b949-f19bf945b73a" --query policyRule.then.details.roleDefinitionIds -o tsv)
    for roleId in $(echo "$required_roles"); do
      az role assignment create \
        --role "${roleId##*/}" \
        --assignee-object-id "${PRINCIPAL}" \
        --assignee-principal-type "ServicePrincipal" \
        --scope /subscriptions/"$SUBSCRIPTION_ID"/resourceGroups/"$RESOURCE_GROUP"
    done
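The string handling in assign.sh is compact: it hand-escapes the policy parameter JSON, trims each role definition ID to its last path segment with `${roleId##*/}`, and concatenates the resource group scope. The following sketch shows the same three values built in Python so that you can inspect them in isolation. The function names are hypothetical and nothing here calls Azure.

```python
import json


def policy_parameters(dcr_resource_id):
    """Return the JSON string that assign.sh passes with -p."""
    return json.dumps({"dcrResourceId": {"value": dcr_resource_id}})


def role_definition_name(role_definition_id):
    """Return the last path segment, like the shell expansion ${roleId##*/}."""
    return role_definition_id.rsplit("/", 1)[-1]


def resource_group_scope(subscription_id, resource_group):
    """Return the scope string that assign.sh passes with --scope."""
    return f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(policy_parameters("<DCR resource ID>"))
    print(role_definition_name("/providers/Microsoft.Authorization/roleDefinitions/<role GUID>"))
    print(resource_group_scope("<subscription ID>", "<resource group>"))
```

Using `json.dumps` instead of hand-written escapes keeps the parameter valid even if the resource ID contains characters that need escaping.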

install.sh: use this script to install the Azure Monitoring Agent on each VM and collect monitoring data from the virtual machines.

#!/bin/bash

set -e

function create_secret() {

kubectl apply -f - -n "${NAMESPACE}" <<EOF

apiVersion: v1

kind: Secret

metadata:

name: naks-vm-telemetry

type: Opaque

stringData:

SUBSCRIPTION_ID: "${SUBSCRIPTION_ID}"

SERVICE_PRINCIPAL_ID: "${SERVICE_PRINCIPAL_ID}"

SERVICE_PRINCIPAL_SECRET: "${SERVICE_PRINCIPAL_SECRET}"

RESOURCE_GROUP: "${RESOURCE_GROUP}"

TENANT_ID: "${TENANT_ID}"

LOCATION: "${LOCATION}"

PROXY_URL: "${PROXY_URL}"

INSTALL_AZURE_MONITOR_AGENT: "${INSTALL_AZURE_MONITOR_AGENT}"

VERSION: "${AZURE_MONITOR_AGENT_VERSION}"

CONNECTEDMACHINE_AZCLI_VERSION: "${CONNECTEDMACHINE_AZCLI_VERSION}"

EOF

}

function create_daemonset() {

kubectl apply -f - -n "${NAMESPACE}" <<EOF

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: naks-vm-telemetry

labels:

k8s-app: naks-vm-telemetry

spec:

selector:

matchLabels:

name: naks-vm-telemetry

template:

metadata:

labels:

name: naks-vm-telemetry

spec:

hostNetwork: true

hostPID: true

containers:

- name: naks-vm-telemetry

image: mcr.microsoft.com/oss/mirror/docker.io/library/ubuntu:20.04

env:

- name: SUBSCRIPTION_ID

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: SUBSCRIPTION_ID

- name: SERVICE_PRINCIPAL_ID

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: SERVICE_PRINCIPAL_ID

- name: SERVICE_PRINCIPAL_SECRET

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: SERVICE_PRINCIPAL_SECRET

- name: RESOURCE_GROUP

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: RESOURCE_GROUP

- name: TENANT_ID

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: TENANT_ID

- name: LOCATION

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: LOCATION

- name: PROXY_URL

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: PROXY_URL

- name: INSTALL_AZURE_MONITOR_AGENT

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: INSTALL_AZURE_MONITOR_AGENT

- name: VERSION

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: VERSION

- name: CONNECTEDMACHINE_AZCLI_VERSION

valueFrom:

secretKeyRef:

name: naks-vm-telemetry

key: CONNECTEDMACHINE_AZCLI_VERSION

securityContext:

privileged: true

command:

- /bin/bash

- -c

- |

set -e

WORKDIR=\$(nsenter -t1 -m -u -n -i mktemp -d)

trap 'nsenter -t1 -m -u -n -i rm -rf "\${WORKDIR}"; echo "Azure Monitor Configuration Failed"' ERR

nsenter -t1 -m -u -n -i mkdir -p "\${WORKDIR}"/telemetry

nsenter -t1 -m -u -n -i tee "\${WORKDIR}"/telemetry/telemetry_common.py > /dev/null <<EOF

#!/usr/bin/python3

import json

import logging

import os

import socket

import subprocess

import sys

arc_config_file = "\${WORKDIR}/telemetry/arc-connect.json"

class AgentryResult:

CONNECTED = "Connected"

CREATING = "Creating"

DISCONNECTED = "Disconnected"

FAILED = "Failed"

SUCCEEDED = "Succeeded"

class OnboardingMessage:

COMPLETED = "Onboarding completed"

STILL_CREATING = "Azure still creating"

STILL_TRYING = "Service still trying"

def get_logger(logger_name):

logger = logging.getLogger(logger_name)

logger.setLevel(logging.DEBUG)

handler = logging.StreamHandler(stream=sys.stdout)

format = logging.Formatter(fmt="%(name)s - %(levelname)s - %(message)s")

handler.setFormatter(format)

logger.addHandler(handler)

return logger

def az_cli_cm_ext_install(logger, config):

logger.info("Install az CLI connectedmachine extension")

proxy_url = config.get("PROXY_URL")

if proxy_url is not None:

os.environ["HTTP_PROXY"] = proxy_url

os.environ["HTTPS_PROXY"] = proxy_url

cm_azcli_version = config.get("CONNECTEDMACHINE_AZCLI_VERSION")

logger.info(f"Install az CLI connectedmachine extension: {cm_azcli_version}")

ext_cmd = f'/usr/bin/az extension add --name connectedmachine --version "{cm_azcli_version}" --yes'

run_cmd(logger, ext_cmd)

def get_cm_properties(logger, config):

hostname = socket.gethostname()

resource_group = config.get("RESOURCE_GROUP")

logger.info(f"Getting arc enrollment properties for {hostname}...")

az_login(logger, config)

property_cmd = f'/usr/bin/az connectedmachine show --machine-name "{hostname}" --resource-group "{resource_group}"'

try:

raw_property = run_cmd(logger, property_cmd)

cm_json = json.loads(raw_property.stdout)

provisioning_state = cm_json["provisioningState"]

status = cm_json["status"]

except:

logger.warning("Connectedmachine not yet present")

provisioning_state = "NOT_PROVISIONED"

status = "NOT_CONNECTED"

finally:

az_logout(logger)

logger.info(

f'Connected machine "{hostname}" provisioningState is "{provisioning_state}" and status is "{status}"'

)

return provisioning_state, status

def get_cm_extension_state(logger, config, extension_name):

resource_group = config.get("RESOURCE_GROUP")

hostname = socket.gethostname()

logger.info(f"Getting {extension_name} state for {hostname}...")

az_login(logger, config)

state_cmd = f'/usr/bin/az connectedmachine extension show --name "{extension_name}" --machine-name "{hostname}" --resource-group "{resource_group}"'

try:

raw_state = run_cmd(logger, state_cmd)

cme_json = json.loads(raw_state.stdout)

provisioning_state = cme_json["provisioningState"]

except:

logger.warning("Connectedmachine extension not yet present")

provisioning_state = "NOT_PROVISIONED"

finally:

az_logout(logger)

logger.info(

f'Connected machine "{hostname}" extension "{extension_name}" provisioningState is "{provisioning_state}"'

)

return provisioning_state

def run_cmd(logger, cmd, check_result=True, echo_output=True):

res = subprocess.run(

cmd,

shell=True,

stdout=subprocess.PIPE,

stderr=subprocess.PIPE,

universal_newlines=True,

)

if res.stdout:

if echo_output:

logger.info(f"[OUT] {res.stdout}")

if res.stderr:

if echo_output:

logger.info(f"[ERR] {res.stderr}")

if check_result:

res.check_returncode()

return res # can parse out res.stdout and res.returncode

def az_login(logger, config):

logger.info("Login to Azure account...")

proxy_url = config.get("PROXY_URL")

if proxy_url is not None:

os.environ["HTTP_PROXY"] = proxy_url

os.environ["HTTPS_PROXY"] = proxy_url

service_principal_id = config.get("SERVICE_PRINCIPAL_ID")

service_principal_secret = config.get("SERVICE_PRINCIPAL_SECRET")

tenant_id = config.get("TENANT_ID")

subscription_id = config.get("SUBSCRIPTION_ID")

cmd = f'/usr/bin/az login --service-principal --username "{service_principal_id}" --password "{service_principal_secret}" --tenant "{tenant_id}"'

run_cmd(logger, cmd)

logger.info(f"Set Subscription...{subscription_id}")

set_sub = f'/usr/bin/az account set --subscription "{subscription_id}"'

run_cmd(logger, set_sub)

def az_logout(logger):

logger.info("Logout of Azure account...")

run_cmd(logger, "/usr/bin/az logout --verbose", check_result=False)

EOF

nsenter -t1 -m -u -n -i tee "\${WORKDIR}"/telemetry/setup_azure_monitor_agent.py > /dev/null <<EOF

#!/usr/bin/python3

import json

import os

import socket

import time

import telemetry_common

def run_install(logger, ama_config):

logger.info("Install Azure Monitor agent...")

resource_group = ama_config.get("RESOURCE_GROUP")

location = ama_config.get("LOCATION")

proxy_url = ama_config.get("PROXY_URL")

hostname = socket.gethostname()

if proxy_url is not None:

os.environ["HTTP_PROXY"] = proxy_url

os.environ["HTTPS_PROXY"] = proxy_url

settings = (

'{"proxy":{"mode":"application","address":"'

+ proxy_url

+ '","auth": "false"}}'

)

cmd = f'/usr/bin/az connectedmachine extension create --no-wait --name "AzureMonitorLinuxAgent" --publisher "Microsoft.Azure.Monitor" --type "AzureMonitorLinuxAgent" --machine-name "{hostname}" --resource-group "{resource_group}" --location "{location}" --verbose --settings \'{settings}\''

else:

cmd = f'/usr/bin/az connectedmachine extension create --no-wait --name "AzureMonitorLinuxAgent" --publisher "Microsoft.Azure.Monitor" --type "AzureMonitorLinuxAgent" --machine-name "{hostname}" --resource-group "{resource_group}" --location "{location}" --verbose'

version = ama_config.get("VERSION")

if version is not None:

cmd += f' --type-handler-version "{version}"'

logger.info("Installing Azure Monitor agent...")

telemetry_common.az_login(logger, ama_config)

try:

telemetry_common.run_cmd(logger, cmd)

except:

logger.info("Trying to install Azure Monitor agent...")

finally:

telemetry_common.az_logout(logger)

def run_uninstall(logger, ama_config):

logger.info("Uninstall Azure Monitor agent...")

resource_group = ama_config.get("RESOURCE_GROUP")

hostname = socket.gethostname()

cmd = f'/usr/bin/az connectedmachine extension delete --name "AzureMonitorLinuxAgent" --machine-name "{hostname}" --resource-group "{resource_group}" --yes --verbose'

telemetry_common.az_login(logger, ama_config)

logger.info("Uninstalling Azure Monitor agent...")

try:

telemetry_common.run_cmd(logger, cmd)

except:

logger.info("Trying to uninstall Azure Monitor agent...")

finally:

telemetry_common.az_logout(logger)

def ama_installation(logger, ama_config):

logger.info("Executing AMA extension installation...")

telemetry_common.az_cli_cm_ext_install(logger, ama_config)

# Get connected machine properties

cm_provisioning_state, cm_status = telemetry_common.get_cm_properties(

logger, ama_config

)

if (

cm_provisioning_state == telemetry_common.AgentryResult.SUCCEEDED

and cm_status == telemetry_common.AgentryResult.CONNECTED

):

# Get AzureMonitorLinuxAgent extension status

ext_provisioning_state = telemetry_common.get_cm_extension_state(

logger, ama_config, "AzureMonitorLinuxAgent"

)

if ext_provisioning_state == telemetry_common.AgentryResult.SUCCEEDED:

logger.info(telemetry_common.OnboardingMessage.COMPLETED)

return True

elif ext_provisioning_state == telemetry_common.AgentryResult.FAILED:

run_uninstall(logger, ama_config)

logger.warning(telemetry_common.OnboardingMessage.STILL_TRYING)

return False

elif ext_provisioning_state == telemetry_common.AgentryResult.CREATING:

logger.warning(telemetry_common.OnboardingMessage.STILL_CREATING)

return False

else:

run_install(logger, ama_config)

logger.warning(telemetry_common.OnboardingMessage.STILL_TRYING)

return False

else:

logger.error("Server not arc enrolled, enroll the server and retry")

return False

def main():

timeout = 60 # TODO: increase when executed via systemd unit

start_time = time.time()

end_time = start_time + timeout

config_file = telemetry_common.arc_config_file

logger = telemetry_common.get_logger(__name__)

logger.info("Running setup_azure_monitor_agent.py...")

if config_file is None:

raise Exception("config file is expected")

ama_config = {}

with open(config_file, "r") as file:

ama_config = json.load(file)

ama_installed = False

while time.time() < end_time:

logger.info("Installing AMA extension...")

try:

ama_installed = ama_installation(logger, ama_config)

except Exception as e:

logger.error(f"Could not install AMA extension: {e}")

if ama_installed:

break

logger.info("Sleeping 30s...") # retry for Azure info

time.sleep(30)

if __name__ == "__main__":

main()

EOF

nsenter -t1 -m -u -n -i tee "\${WORKDIR}"/arc-connect.sh > /dev/null <<EOF

#!/bin/bash

set -e

echo "{\"VERSION\": \"\${VERSION}\", \"SUBSCRIPTION_ID\": \"\${SUBSCRIPTION_ID}\", \"SERVICE_PRINCIPAL_ID\": \"\${SERVICE_PRINCIPAL_ID}\", \"SERVICE_PRINCIPAL_SECRET\": \"\${SERVICE_PRINCIPAL_SECRET}\", \"RESOURCE_GROUP\": \"\${RESOURCE_GROUP}\", \"TENANT_ID\": \"\${TENANT_ID}\", \"LOCATION\": \"\${LOCATION}\", \"PROXY_URL\": \"\${PROXY_URL}\", \"CONNECTEDMACHINE_AZCLI_VERSION\": \"\${CONNECTEDMACHINE_AZCLI_VERSION}\"}" > "\${WORKDIR}"/telemetry/arc-connect.json

if [ "\${INSTALL_AZURE_MONITOR_AGENT}" = "true" ]; then

echo "Installing Azure Monitor agent..."

/usr/bin/python3 "\${WORKDIR}"/telemetry/setup_azure_monitor_agent.py > "\${WORKDIR}"/setup_azure_monitor_agent.out

cat "\${WORKDIR}"/setup_azure_monitor_agent.out

if grep "Could not install AMA extension" "\${WORKDIR}"/setup_azure_monitor_agent.out > /dev/null; then

exit 1

fi

fi

EOF

nsenter -t1 -m -u -n -i sh "\${WORKDIR}"/arc-connect.sh

nsenter -t1 -m -u -n -i rm -rf "\${WORKDIR}"

echo "Server monitoring configured successfully"

tail -f /dev/null

livenessProbe:

initialDelaySeconds: 600

periodSeconds: 60

timeoutSeconds: 30

exec:

command:

- /bin/bash

- -c

- |

set -e

WORKDIR=\$(nsenter -t1 -m -u -n -i mktemp -d)

trap 'nsenter -t1 -m -u -n -i rm -rf "\${WORKDIR}"' ERR EXIT

nsenter -t1 -m -u -n -i tee "\${WORKDIR}"/liveness.sh > /dev/null <<EOF

#!/bin/bash

set -e

# Check AMA processes

ps -ef | grep "\\\s/opt/microsoft/azuremonitoragent/bin/agentlauncher\\\s"

ps -ef | grep "\\\s/opt/microsoft/azuremonitoragent/bin/mdsd\\\s"

ps -ef | grep "\\\s/opt/microsoft/azuremonitoragent/bin/amacoreagent\\\s"

# Check Arc server agent is Connected

AGENTSTATUS="\\\$(azcmagent show -j)"

if [[ \\\$(echo "\\\${AGENTSTATUS}" | jq -r .status) != "Connected" ]]; then

echo "azcmagent is not connected"

echo "\\\${AGENTSTATUS}"

exit 1

fi

# Verify dependent services are running

while IFS= read -r status; do

if [[ "\\\${status}" != "active" ]]; then

echo "one or more azcmagent services not active"

echo "\\\${AGENTSTATUS}"

exit 1

fi

done < <(jq -r '.services[] | (.status)' <<<\\\${AGENTSTATUS})

# Run connectivity tests

RESULT="\\\$(azcmagent check -j)"

while IFS= read -r reachable; do

if [[ "\\\${reachable}" != "true" ]]; then

echo "one or more connectivity tests failed"

echo "\\\${RESULT}"

exit 1

fi

done < <(jq -r '.[] | (.reachable)' <<<\\\${RESULT})

EOF

nsenter -t1 -m -u -n -i sh "\${WORKDIR}"/liveness.sh

nsenter -t1 -m -u -n -i rm -rf "\${WORKDIR}"

echo "Liveness check succeeded"

tolerations:

- operator: "Exists"

effect: "NoSchedule"

EOF

}

SUBSCRIPTION_ID="${SUBSCRIPTION_ID:?SUBSCRIPTION_ID must be set}"

SERVICE_PRINCIPAL_ID="${SERVICE_PRINCIPAL_ID:?SERVICE_PRINCIPAL_ID must be set}"

SERVICE_PRINCIPAL_SECRET="${SERVICE_PRINCIPAL_SECRET:?SERVICE_PRINCIPAL_SECRET must be set}"

RESOURCE_GROUP="${RESOURCE_GROUP:?RESOURCE_GROUP must be set}"

TENANT_ID="${TENANT_ID:?TENANT_ID must be set}"

LOCATION="${LOCATION:?LOCATION must be set}"

PROXY_URL="${PROXY_URL:?PROXY_URL must be set}"

INSTALL_AZURE_MONITOR_AGENT="${INSTALL_AZURE_MONITOR_AGENT:?INSTALL_AZURE_MONITOR_AGENT must be true/false}"

NAMESPACE="${NAMESPACE:?NAMESPACE must be set}"

AZURE_MONITOR_AGENT_VERSION="${AZURE_MONITOR_AGENT_VERSION:-"1.24.2"}"

CONNECTEDMACHINE_AZCLI_VERSION="${CONNECTEDMACHINE_AZCLI_VERSION:-"0.6.0"}"

create_secret

create_daemonset
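The retry loop in setup_azure_monitor_agent.py decides what to do from three reported states: the connected machine's provisioning state, its connection status, and the extension's provisioning state. The following sketch restates that decision as a single function so the branches are easier to follow. The function name and the returned action labels are hypothetical. The state strings are the ones used by the script.

```python
def next_action(cm_provisioning_state, cm_status, ext_provisioning_state):
    """Map reported states to the step that ama_installation takes next."""
    # The extension can only be managed on an Arc-enrolled, connected server.
    if cm_provisioning_state != "Succeeded" or cm_status != "Connected":
        return "enroll-server-first"
    if ext_provisioning_state == "Succeeded":
        return "done"
    if ext_provisioning_state == "Failed":
        # The script uninstalls the extension and lets the loop try again.
        return "uninstall-and-retry"
    if ext_provisioning_state == "Creating":
        return "wait"
    # Any other state, including "NOT_PROVISIONED", triggers an install.
    return "install"


if __name__ == "__main__":
    print(next_action("Succeeded", "Connected", "NOT_PROVISIONED"))
```

Only the "done" outcome ends the loop early. In every other case the script sleeps for 30 seconds and checks again until its timeout expires.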

Prerequisites-VM

Cluster administrator access to the Nexus Kubernetes cluster.

To use Azure Arc-enabled servers, register the following Azure resource providers in your subscription:

- Microsoft.HybridCompute

- Microsoft.GuestConfiguration

- Microsoft.HybridConnectivity

Register these resource providers, if not done previously:

    az account set --subscription "{the Subscription Name}"
    az provider register --namespace 'Microsoft.HybridCompute'
    az provider register --namespace 'Microsoft.GuestConfiguration'
    az provider register --namespace 'Microsoft.HybridConnectivity'

- Assign an Azure service principal to the following Azure built-in roles, as needed. Assign the service principal to the Azure resource group that has the machines to be connected:

| Role | Needed to |
|---|---|
| Azure Connected Machine Resource Administrator or Contributor | Connect Arc-enabled Nexus Kubernetes cluster VM server in the resource group and install the Azure Monitoring Agent (AMA) |
| Monitoring Contributor or Contributor | Create a Data Collection Rule (DCR) in the resource group and associate Arc-enabled servers to it |
| User Access Administrator, and Resource Policy Contributor or Contributor | Needed if you want to use Azure policy assignment(s) to ensure that a DCR is associated with Arc-enabled machines |
| Kubernetes Extension Contributor | Needed to deploy the K8s extension for Container Insights |

Environment setup

Copy and run the included scripts. You can run them from Azure Cloud Shell in the Azure portal, or from a Linux command prompt where the Kubernetes command-line tool (kubectl) and the Azure CLI are installed.

Prior to running the included scripts, define the following environment variables:

| Environment Variable | Description |
|---|---|
| SUBSCRIPTION_ID | The ID of the Azure subscription that contains the resource group |
| RESOURCE_GROUP | The resource group name where Arc-enabled server and associated resources are created |
| LOCATION | The Azure Region where the Arc-enabled servers and associated resources are created |
| SERVICE_PRINCIPAL_ID | The appId of the Azure service principal with appropriate role assignment(s) |
| SERVICE_PRINCIPAL_SECRET | The authentication password for the Azure service principal |
| TENANT_ID | The ID of the tenant directory where the service principal exists |
| PROXY_URL | The proxy URL to use for connecting to Azure services |
| NAMESPACE | The namespace where the Kubernetes artifacts are created |

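For convenience, you can modify the template file, arc-connect.env, to set the environment variable values. Source the file, rather than executing it, so that the exported values apply to your current shell.

    # Apply the modified values to the environment
    source ./arc-connect.env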
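The included scripts stop with a "must be set" message at the first required variable that is unset or empty. If you'd rather see every gap at once before running them, a small pre-flight check can help. The following is a minimal sketch. `missing_variables` is a hypothetical helper, and the variable list is taken from the environment variable table above.

```python
import os

# Required variables, taken from the environment variable table above.
REQUIRED_VARIABLES = (
    "SUBSCRIPTION_ID",
    "RESOURCE_GROUP",
    "LOCATION",
    "SERVICE_PRINCIPAL_ID",
    "SERVICE_PRINCIPAL_SECRET",
    "TENANT_ID",
    "PROXY_URL",
    "NAMESPACE",
)


def missing_variables(env=None):
    """Return the required variable names that are unset or empty in env."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARIABLES if not env.get(name)]


if __name__ == "__main__":
    missing = missing_variables()
    if missing:
        print("Missing or empty: " + ", ".join(missing))
    else:
        print("All required variables are set")
```

Run it in the same shell session in which you sourced arc-connect.env, because exported variables are only visible to processes started from that session.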

Add a data collection rule (DCR)

Associate the Arc-enabled servers with a DCR to enable the collection of log data into a Log Analytics workspace. You can create the DCR via the Azure portal or CLI. For information on creating a DCR to collect data from the VMs, see the Azure Monitor documentation.

The included dcr.sh script creates a DCR, in the specified resource group, that will configure log collection.

Ensure proper environment setup and role prerequisites for the service principal. The DCR is created in the specified resource group.

Create or identify a Log Analytics workspace for log data ingestion as per the DCR. Set an environment variable, LAW_RESOURCE_ID to its resource ID. Retrieve the resource ID for a known Log Analytics workspace name:

export LAW_RESOURCE_ID=$(az monitor log-analytics workspace show -g "${RESOURCE_GROUP}" -n <law name> --query id -o tsv)

- Run the dcr.sh script. It creates a DCR in the specified resource group with name ${RESOURCE_GROUP}-syslog-dcr

./dcr.sh

View/manage the DCR from the Azure portal or CLI. By default, the Linux Syslog log level is set to "INFO". You can change the log level as needed.

Note

Manually, or via a policy, associate servers created prior to the DCR's creation. See remediation task.

Associate Arc-enabled server resources to DCR

Associate the Arc-enabled server resources to the created DCR for logs to flow to the Log Analytics workspace. There are options for associating servers with DCRs.

Use Azure portal or CLI to associate selected Arc-enabled servers to DCR

In Azure portal, add Arc-enabled server resource to the DCR using its Resources section.

For information about associating the resources via the Azure CLI, see the Azure CLI documentation.

Use Azure policy to manage DCR associations

Assign a policy to the resource group to enforce the association. There's a built-in policy definition to associate Linux Arc machines with a DCR. Assign the policy to the resource group with the DCR as a parameter. It ensures that all Arc-enabled servers within the resource group are associated with the same DCR.

In the Azure portal, select the Assign button from the policy definition page.

For convenience, the provided assign.sh script assigns the built-in policy to the specified resource group and DCR created with the dcr.sh script.

- Ensure proper environment setup and role prerequisites for the service principal to do policy and role assignments.

- Create the DCR, in the resource group, using the dcr.sh script as described in the Adding a Data Collection Rule section.

- Run the assign.sh script. It creates the policy assignment and necessary role assignments.

./assign.sh

Install Azure monitoring agent

Use the included install.sh which creates a Kubernetes daemonSet on the Nexus Kubernetes cluster.

It deploys a pod to each cluster node and installs the Azure Monitoring Agent (AMA).

The daemonSet also includes a liveness probe that monitors the server connection and AMA processes.
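The liveness probe reads the JSON printed by `azcmagent show -j` and `azcmagent check -j`. The following sketch applies the same three JSON checks in Python. It's an illustration, not part of the provided scripts. The field names `status`, `services`, and `reachable` are taken from the jq expressions in the probe, the overall JSON shape is assumed from them, and the sample input in the example is made up. The sketch doesn't cover the probe's separate check for AMA processes.

```python
import json


def agent_problems(show_output, check_output):
    """Return a list of problems found in the two JSON outputs (empty if healthy)."""
    problems = []
    agent = json.loads(show_output)
    if agent.get("status") != "Connected":
        problems.append("azcmagent is not connected")
    # Every dependent service must report "active".
    if any(s.get("status") != "active" for s in agent.get("services", [])):
        problems.append("one or more azcmagent services not active")
    # Every connectivity test must report reachable: true.
    if any(t.get("reachable") is not True for t in json.loads(check_output)):
        problems.append("one or more connectivity tests failed")
    return problems


if __name__ == "__main__":
    # Made-up sample input, shaped after the fields the probe reads.
    show = json.dumps({"status": "Connected", "services": [{"status": "active"}]})
    check = json.dumps([{"reachable": True}, {"reachable": False}])
    print(agent_problems(show, check))
```

Comparing `reachable` against the boolean `True` matters: a test that only checks whether the value is non-empty would also pass for `false`.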

Note

To install the Azure Monitoring Agent, you must first Arc connect the Nexus Kubernetes cluster VMs. This process is automated if you're using the latest version bundle. However, if the version bundle you use doesn't support cluster VM Arc enrollment by default, you need to upgrade your cluster to the latest version bundle. For more information about the version bundle, refer to Nexus Kubernetes cluster supported versions.

- Set the environment as specified in Environment Setup. Set the current kubeconfig context for the Nexus Kubernetes cluster VMs.

- Permit kubectl access to the Nexus Kubernetes cluster.

  Note

  When you create a Nexus Kubernetes cluster, Nexus automatically creates a managed resource group dedicated to storing the cluster resources. Within this group, the Arc connected cluster resource is established.

  To access your cluster, you need to set up the cluster connect kubeconfig. After logging into Azure CLI with the relevant Microsoft Entra entity, you can obtain the kubeconfig necessary to communicate with the cluster from anywhere, even outside the firewall that surrounds it.

  Set the CLUSTER_NAME, RESOURCE_GROUP and SUBSCRIPTION_ID variables.

      CLUSTER_NAME="myNexusK8sCluster"
      RESOURCE_GROUP="myResourceGroup"
      SUBSCRIPTION_ID=<set the correct subscription_id>

  Query the managed resource group with az and store it in MANAGED_RESOURCE_GROUP.

      az account set -s $SUBSCRIPTION_ID
      MANAGED_RESOURCE_GROUP=$(az networkcloud kubernetescluster show -n $CLUSTER_NAME -g $RESOURCE_GROUP --output tsv --query managedResourceGroupConfiguration.name)

  The following command starts a connectedk8s proxy that allows you to connect to the Kubernetes API server for the specified Nexus Kubernetes cluster.

      az connectedk8s proxy -n $CLUSTER_NAME -g $MANAGED_RESOURCE_GROUP &

  Use kubectl to send requests to the cluster:

      kubectl get pods -A

  You should now see a response from the cluster containing the list of all pods.

  Note

  If you see the error message "Failed to post access token to client proxyFailed to connect to MSI", you may need to perform an az login to re-authenticate with Azure.

- Run the install.sh script from the command prompt with kubectl access to the Nexus Kubernetes cluster.

The script deploys the daemonSet to the cluster. Monitor the progress as follows:

    # Run the install script and observe results
    ./install.sh
    kubectl get pod -n "${NAMESPACE}" --selector='name=naks-vm-telemetry'
    kubectl logs -n "${NAMESPACE}" <podname>

On completion, the system logs the message "Server monitoring configured successfully".

Note

Associate these connected servers to the DCR. After you configure a policy, there may be some delay before the logs appear in the Azure Log Analytics workspace.

Monitor Nexus Kubernetes cluster – K8s layer

Prerequisites-Kubernetes

The operator should meet the following prerequisites before configuring the monitoring tools on Nexus Kubernetes clusters.

Container Insights stores its data in a Log Analytics workspace. Log data flows into the workspace whose resource ID you provided to the scripts covered in the "Add a data collection rule (DCR)" section. Otherwise, data flows into a default workspace in the resource group associated with your subscription (based on Azure location).

An example for East US may look as follows:

- Log Analytics workspace Name: DefaultWorkspace-<GUID>-EUS

- Resource group name: DefaultResourceGroup-EUS

Run the following command to get a pre-existing Log Analytics workspace Resource ID:

    az login

    az account set --subscription "<Subscription Name or ID the Log Analytics workspace is in>"

    az monitor log-analytics workspace show --workspace-name "<Log Analytics workspace Name>" \
      --resource-group "<Log Analytics workspace Resource Group>" \
      -o tsv --query id
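Before you pass the returned value on to other commands, it can be useful to confirm that it really is a Log Analytics workspace resource ID. The following sketch checks the string against the standard Azure resource ID layout for a workspace and splits out its parts. `parse_workspace_id` is a hypothetical helper, and the ID in the example is a made-up placeholder.

```python
import re

# Standard layout of a Log Analytics workspace resource ID.
_WORKSPACE_ID = re.compile(
    r"^/subscriptions/(?P<subscription>[^/]+)"
    r"/resourceGroups/(?P<resource_group>[^/]+)"
    r"/providers/Microsoft\.OperationalInsights"
    r"/workspaces/(?P<name>[^/]+)$",
    re.IGNORECASE,  # Azure doesn't always preserve the casing of path segments.
)


def parse_workspace_id(resource_id):
    """Return the ID's parts as a dict, or None if the layout doesn't match."""
    match = _WORKSPACE_ID.match(resource_id.strip())
    return match.groupdict() if match else None


if __name__ == "__main__":
    example = (
        "/subscriptions/00000000-0000-0000-0000-000000000000"
        "/resourceGroups/my-rg"
        "/providers/Microsoft.OperationalInsights/workspaces/my-law"
    )
    print(parse_workspace_id(example))
```

A `None` result usually means that a workspace name or a truncated value was supplied where the full resource ID is expected.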

Deploying Container Insights and viewing data in the applicable Log Analytics workspace requires certain role assignments in your account, for example, the "Contributor" role. See the instructions for assigning required roles:

- Log Analytics Contributor role: provides the permissions necessary to enable container monitoring on a CNF (provisioned) cluster.

- Log Analytics Reader role: lets users who don't hold the Log Analytics Contributor role view data in the Log Analytics workspace once you enable container monitoring.

Install the cluster extension

Sign in to the Azure Cloud Shell to access the cluster:

    az login

    az account set --subscription "<Subscription Name or ID the Provisioned Cluster is in>"

Now, deploy Container Insights extension on a provisioned Nexus Kubernetes cluster using either of the next two commands:

With customer pre-created Log analytics workspace

    az k8s-extension create --name azuremonitor-containers \
      --cluster-name "<Nexus Kubernetes cluster Name>" \
      --resource-group "<Nexus Kubernetes cluster Resource Group>" \
      --cluster-type connectedClusters \
      --extension-type Microsoft.AzureMonitor.Containers \
      --release-train preview \
      --configuration-settings logAnalyticsWorkspaceResourceID="<Log Analytics workspace Resource ID>" \
      amalogsagent.useAADAuth=true

Use the default Log analytics workspace

    az k8s-extension create --name azuremonitor-containers \
      --cluster-name "<Nexus Kubernetes cluster Name>" \
      --resource-group "<Nexus Kubernetes cluster Resource Group>" \
      --cluster-type connectedClusters \
      --extension-type Microsoft.AzureMonitor.Containers \
      --release-train preview \
      --configuration-settings amalogsagent.useAADAuth=true
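The two commands differ only in whether `logAnalyticsWorkspaceResourceID` is passed as a configuration setting. The following sketch builds the argument list for either variant so that the difference is explicit. It only assembles and prints the command and doesn't run it. `k8s_extension_create_args` is a hypothetical helper that uses only the options shown in the commands above.

```python
import shlex


def k8s_extension_create_args(cluster_name, resource_group, workspace_resource_id=None):
    """Return the argument list for the Container Insights extension command."""
    settings = []
    if workspace_resource_id:
        # Only the pre-created workspace variant passes this setting.
        settings.append(f"logAnalyticsWorkspaceResourceID={workspace_resource_id}")
    settings.append("amalogsagent.useAADAuth=true")
    return [
        "az", "k8s-extension", "create",
        "--name", "azuremonitor-containers",
        "--cluster-name", cluster_name,
        "--resource-group", resource_group,
        "--cluster-type", "connectedClusters",
        "--extension-type", "Microsoft.AzureMonitor.Containers",
        "--release-train", "preview",
        "--configuration-settings", *settings,
    ]


if __name__ == "__main__":
    # Placeholder names; omit the third argument to use the default workspace.
    args = k8s_extension_create_args("<cluster name>", "<resource group>")
    print(shlex.join(args))
```

Building the command as a list and quoting it with `shlex.join` avoids problems with names that contain spaces or shell metacharacters.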

Validate Cluster extension

Validate that the monitoring agents deployed successfully on the Nexus Kubernetes cluster using the following command:

    az k8s-extension show --name azuremonitor-containers \
      --cluster-name "<Nexus Kubernetes cluster Name>" \
      --resource-group "<Nexus Kubernetes cluster Resource Group>" \
      --cluster-type connectedClusters

Look for a Provisioning State of "Succeeded" for the extension. The "k8s-extension create" command may have also returned the status.
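If you want to script this check, you can read the state from the command's JSON output instead of scanning it by eye. The following is a minimal sketch that assumes the output is a JSON object with a top-level `provisioningState` field. Confirm that against your own output before relying on it. `extension_state` is a hypothetical helper and the sample input is made up.

```python
import json


def extension_state(show_output):
    """Return the provisioning state from the JSON output, or "Unknown"."""
    # Assumption: the state is reported in a top-level "provisioningState" field.
    return json.loads(show_output).get("provisioningState", "Unknown")


if __name__ == "__main__":
    sample = json.dumps({"name": "azuremonitor-containers", "provisioningState": "Succeeded"})
    state = extension_state(sample)
    print("Extension is ready" if state == "Succeeded" else f"Extension state: {state}")
```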

Customize logs & metrics collection

Container Insights lets you fine-tune the collection of logs and metrics from Nexus Kubernetes clusters. For details, see Configure Container insights agent data collection.

Extra resources

- Review the workbooks documentation. You can then use the sample Operator Nexus telemetry workbooks.

- Review Azure Monitor Alerts, how to create Azure Monitor Alert rules, and use sample Operator Nexus Alert templates.
