Integrate OneLake with Azure Synapse Analytics

Azure Synapse is a limitless analytics service that brings together enterprise data warehousing and Big Data analytics. This tutorial shows how to connect to OneLake using Azure Synapse Analytics.

Write data from Synapse using Apache Spark

Follow these steps to use Apache Spark to write sample data to OneLake from Azure Synapse Analytics.

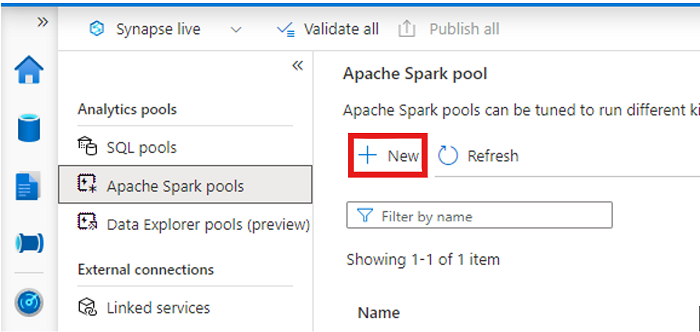

Open your Synapse workspace and create an Apache Spark pool with your preferred parameters.

Create a new Apache Spark notebook.

Open the notebook, set the language to PySpark (Python), and connect it to your newly created Spark pool.

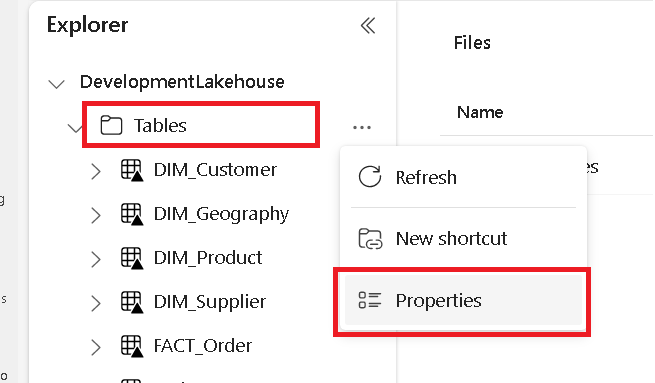

In a separate tab, navigate to your Microsoft Fabric lakehouse and find the top-level Tables folder.

Right-click on the Tables folder and select Properties.

Copy the ABFS path from the properties pane.

Back in the Azure Synapse notebook, in the first new code cell, provide the lakehouse path. This lakehouse is where your data is written later. Run the cell.

# Replace the path below with the ABFS path to your lakehouse Tables folder. oneLakePath = 'abfss://WorkspaceName@onelake.dfs.fabric.microsoft.com/LakehouseName.lakehouse/Tables'In a new code cell, load data from an Azure open dataset into a dataframe. This dataset is the one you load into your lakehouse. Run the cell.

yellowTaxiDf = spark.read.parquet('wasbs://nyctlc@azureopendatastorage.blob.core.windows.net/yellow/puYear=2018/puMonth=2/*.parquet') display(yellowTaxiDf.limit(10))In a new code cell, filter, transform, or prep your data. For this scenario, you can trim down your dataset for faster loading, join with other datasets, or filter down to specific results. Run the cell.

filteredTaxiDf = yellowTaxiDf.where(yellowTaxiDf.tripDistance>2).where(yellowTaxiDf.passengerCount==1) display(filteredTaxiDf.limit(10))In a new code cell, using your OneLake path, write your filtered dataframe to a new Delta-Parquet table in your Fabric lakehouse. Run the cell.

filteredTaxiDf.write.format("delta").mode("overwrite").save(oneLakePath + '/Taxi/')Finally, in a new code cell, test that your data was successfully written by reading your newly loaded file from OneLake. Run the cell.

lakehouseRead = spark.read.format('delta').load(oneLakePath + '/Taxi/') display(lakehouseRead.limit(10))

Congratulations. You can now read and write data in OneLake using Apache Spark in Azure Synapse Analytics.

Read data from Synapse using SQL

Follow these steps to use SQL serverless to read data from OneLake from Azure Synapse Analytics.

Open a Fabric lakehouse and identify a table that you'd like to query from Synapse.

Right-click on the table and select Properties.

Copy the ABFS path for the table.

Open your Synapse workspace in Synapse Studio.

Create a new SQL script.

In the SQL query editor, enter the following query, replacing

ABFS_PATH_HEREwith the path you copied earlier.SELECT TOP 10 * FROM OPENROWSET( BULK 'ABFS_PATH_HERE', FORMAT = 'delta') as rows;Run the query to view the top 10 rows of your table.

Congratulations. You can now read data from OneLake using SQL serverless in Azure Synapse Analytics.

Related content

Feedback

Coming soon: Throughout 2024 we will be phasing out GitHub Issues as the feedback mechanism for content and replacing it with a new feedback system. For more information see: https://aka.ms/ContentUserFeedback.

Submit and view feedback for