Integrate OpenAI, Communication, and Organizational Data Features into a Line of Business App

Level: Intermediate

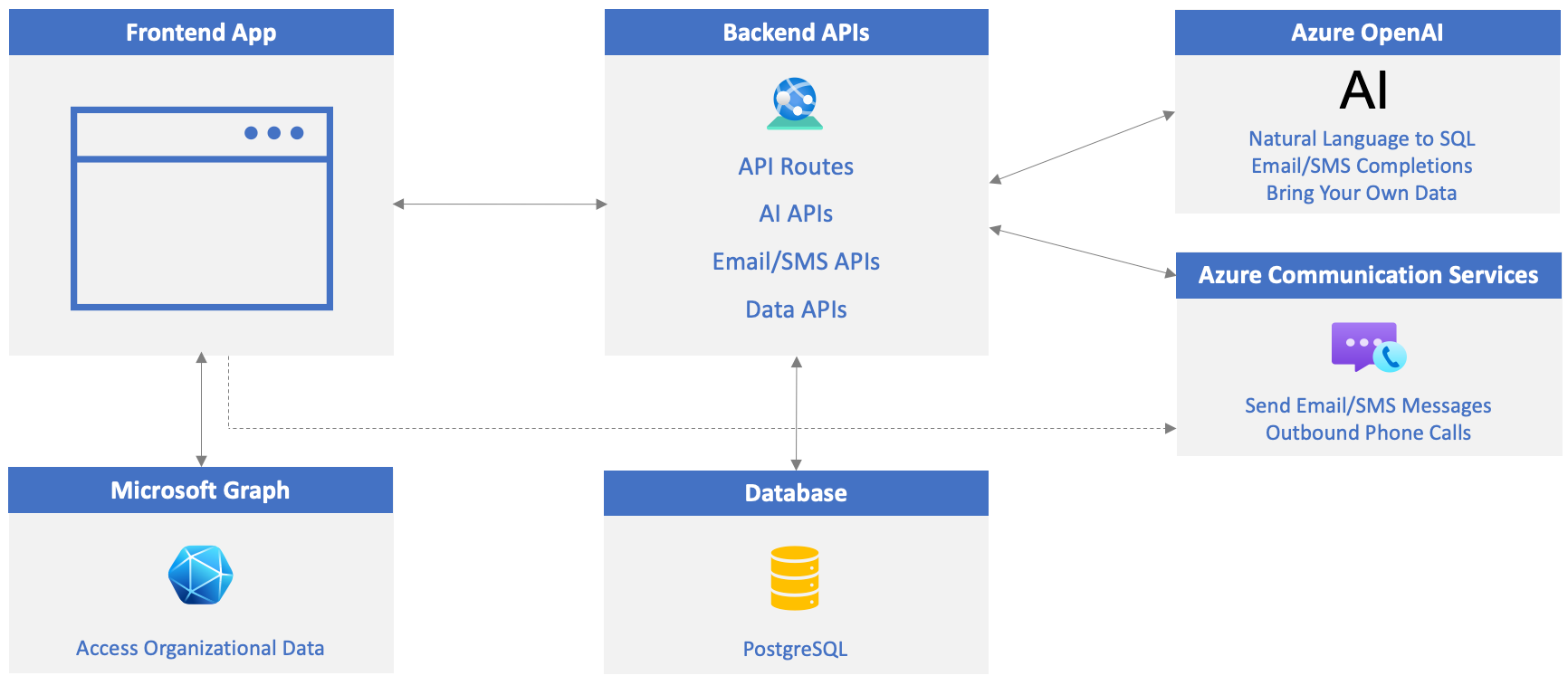

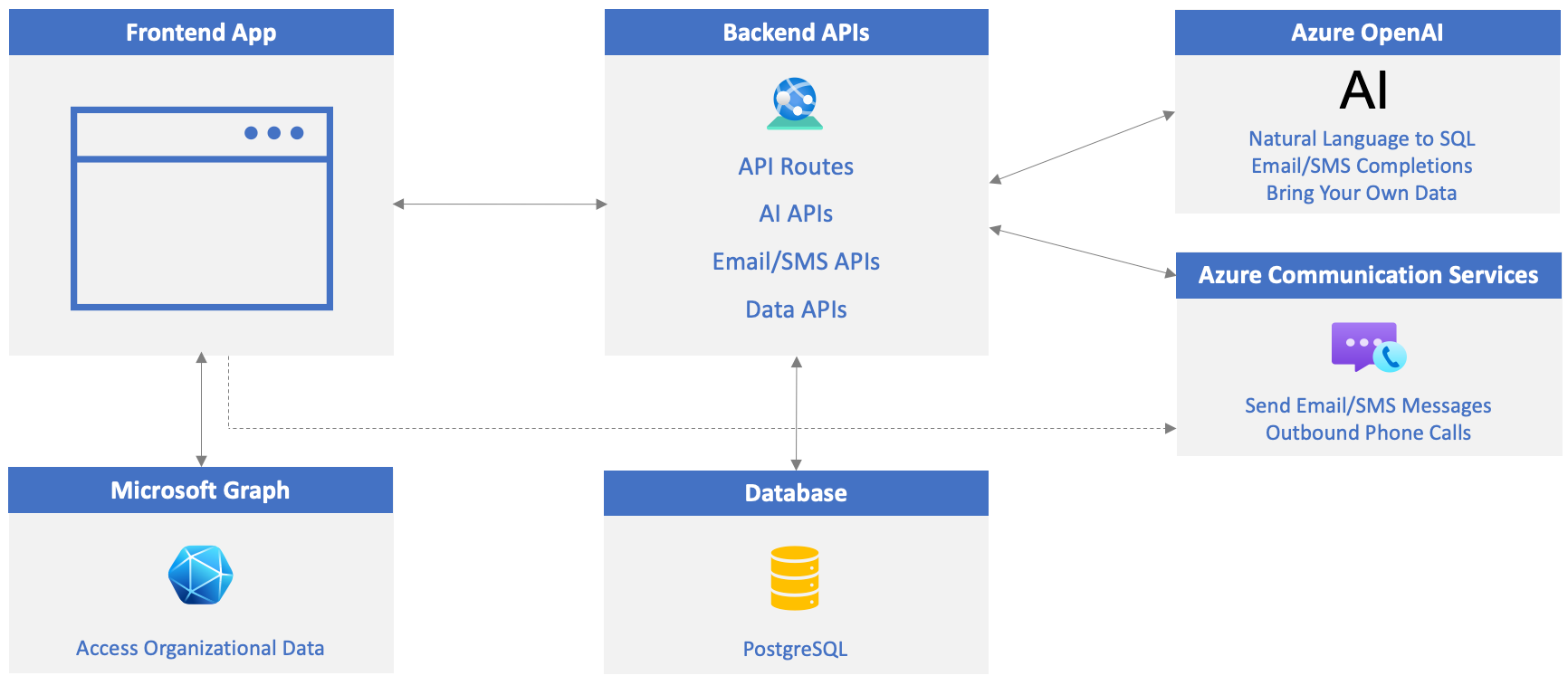

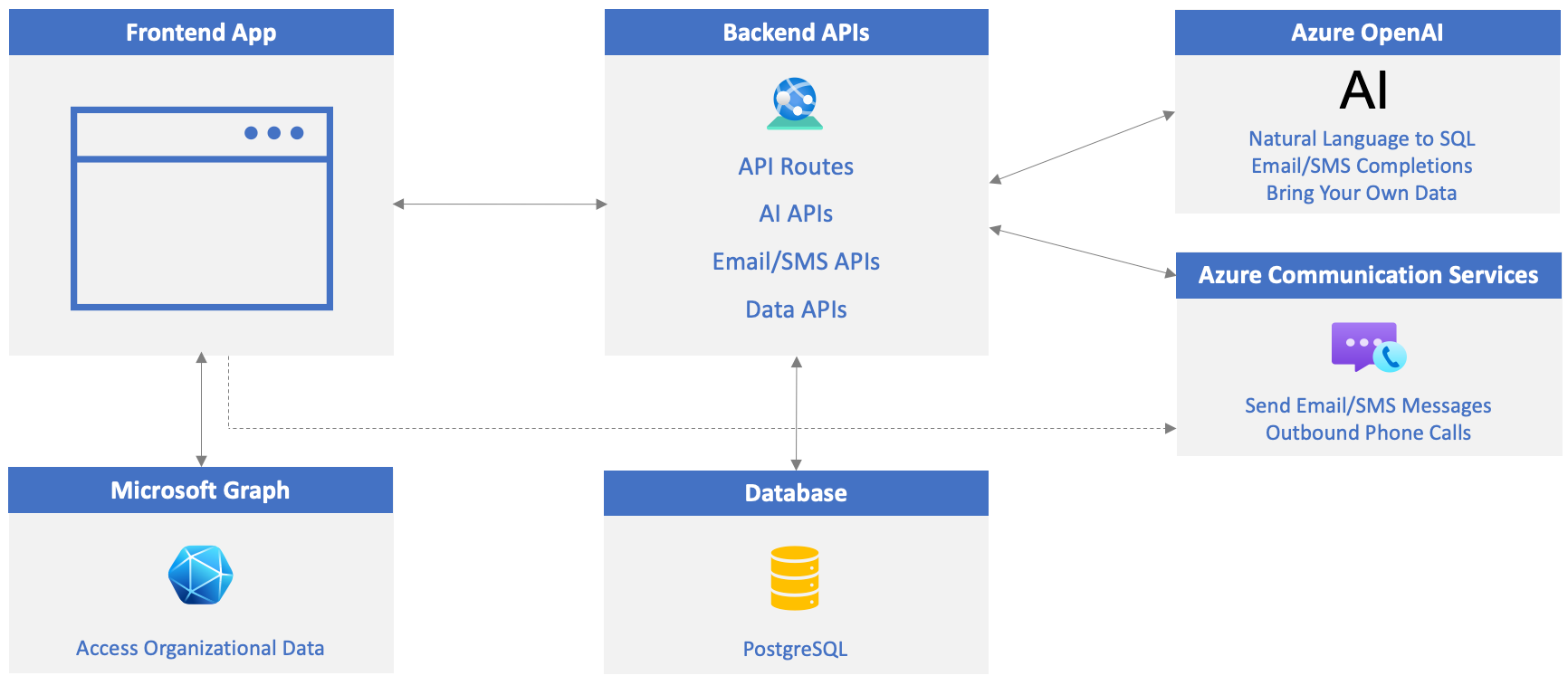

This tutorial demonstrates how Azure OpenAI, Azure Communication Services, and Microsoft Graph/Microsoft Graph Toolkit can be integrated into Line of Business (LOB) applications to enhance user productivity, elevate the user experience, and take LOB apps to the next level.

- AI: Enable users to ask questions in natural language and convert their questions to SQL that can be used to query a database, allow users to define rules that can be used to automatically generate email and SMS messages, and learn how natural language can be used to retrieve data from your own custom data sources. Azure OpenAI is used for these features.

- Communication: Enable in-app phone calling to customers and Email/SMS functionality using Azure Communication Services.

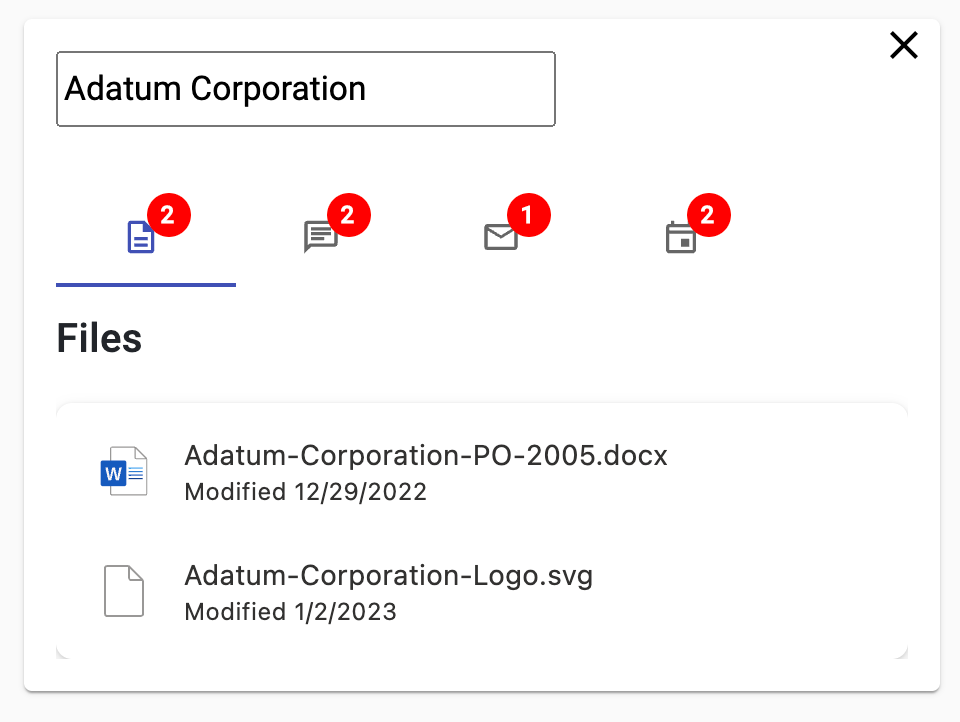

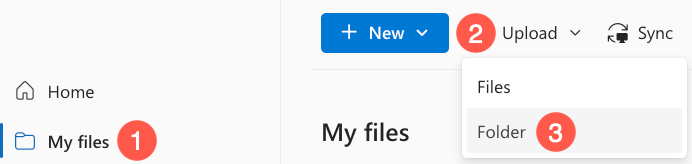

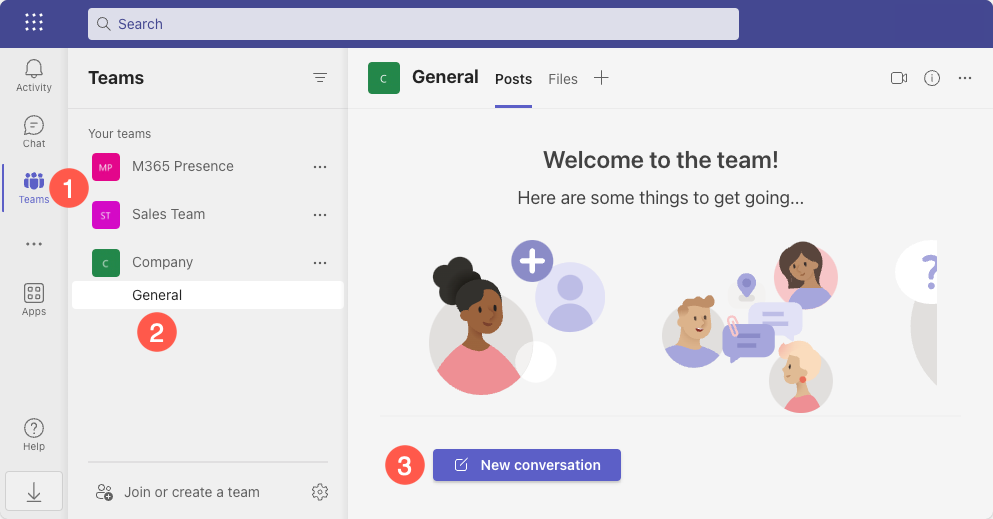

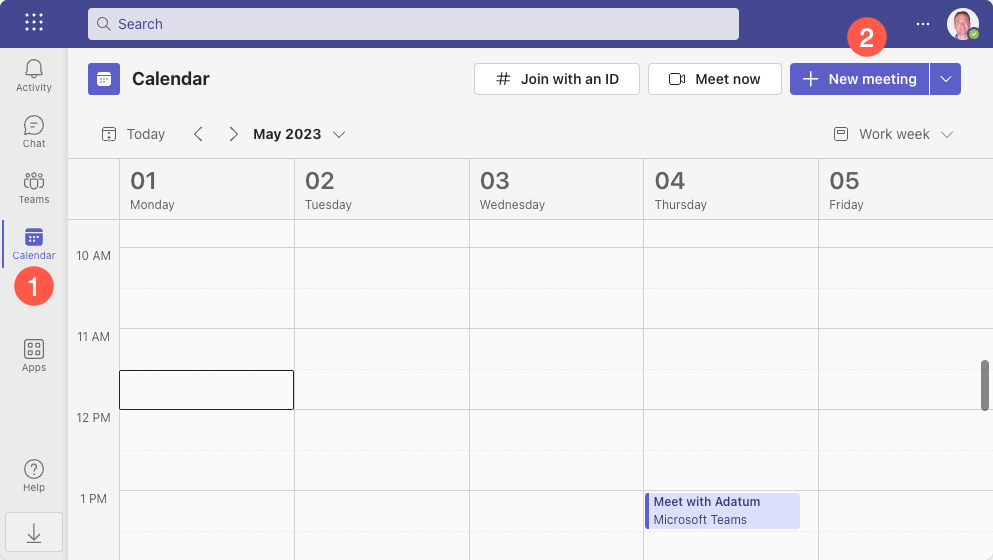

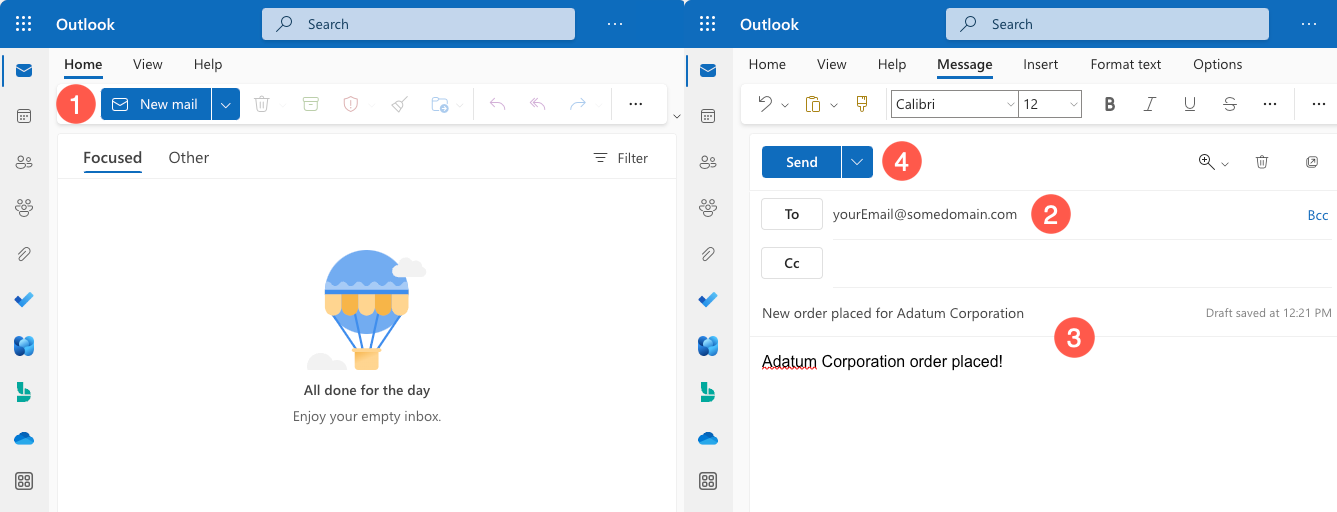

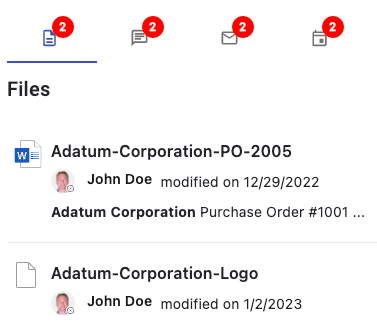

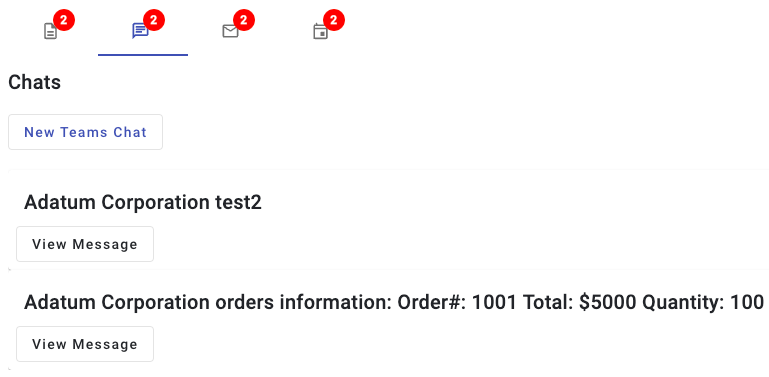

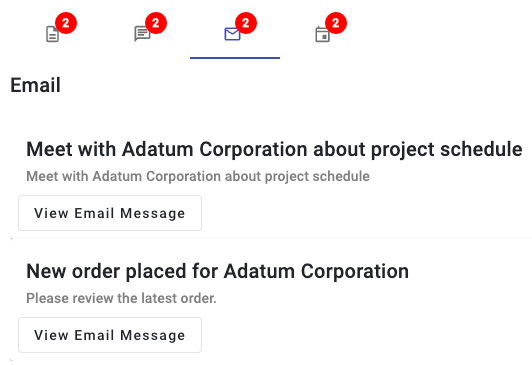

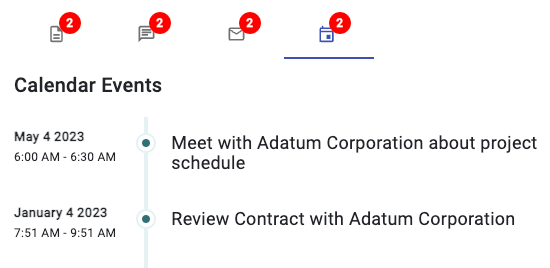

- Organizational Data: Pull in related organizational data that users may need (documents, chats, emails, calendar events) as they work with customers to avoid context switching. Providing access to this type of organizational data reduces the need for the user to switch to Outlook, Teams, OneDrive, other custom apps, their phone, etc. since the specific data and functionality they need is provided directly in the app. Microsoft Graph and Microsoft Graph Toolkit are used for this feature.

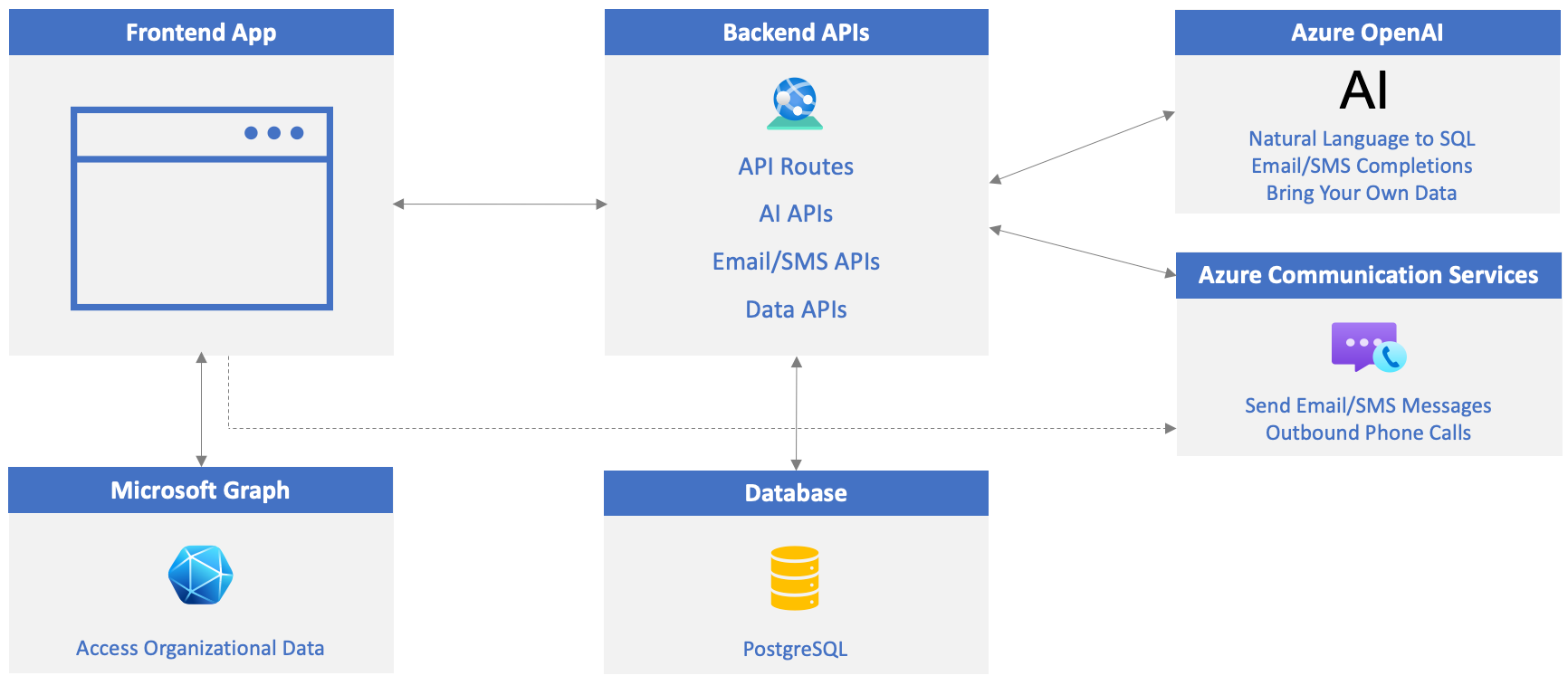

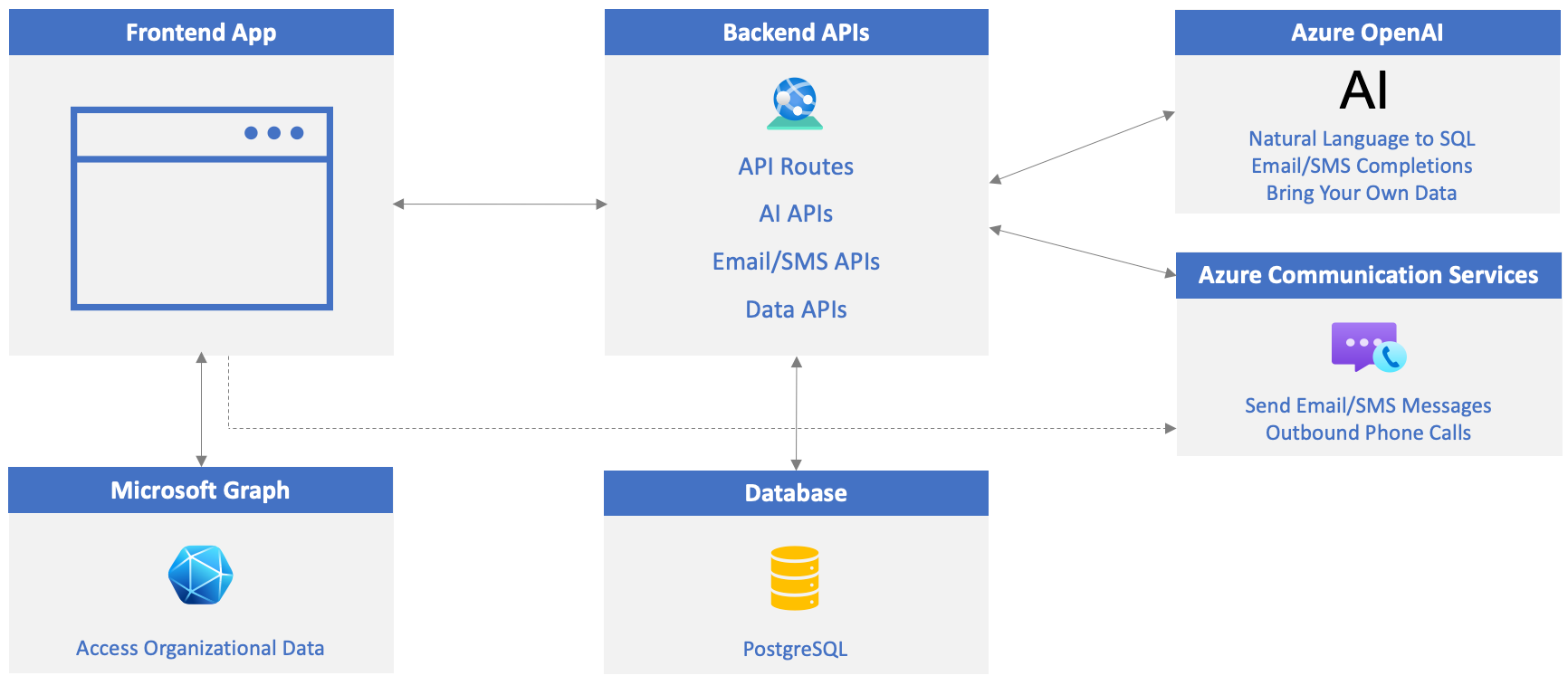

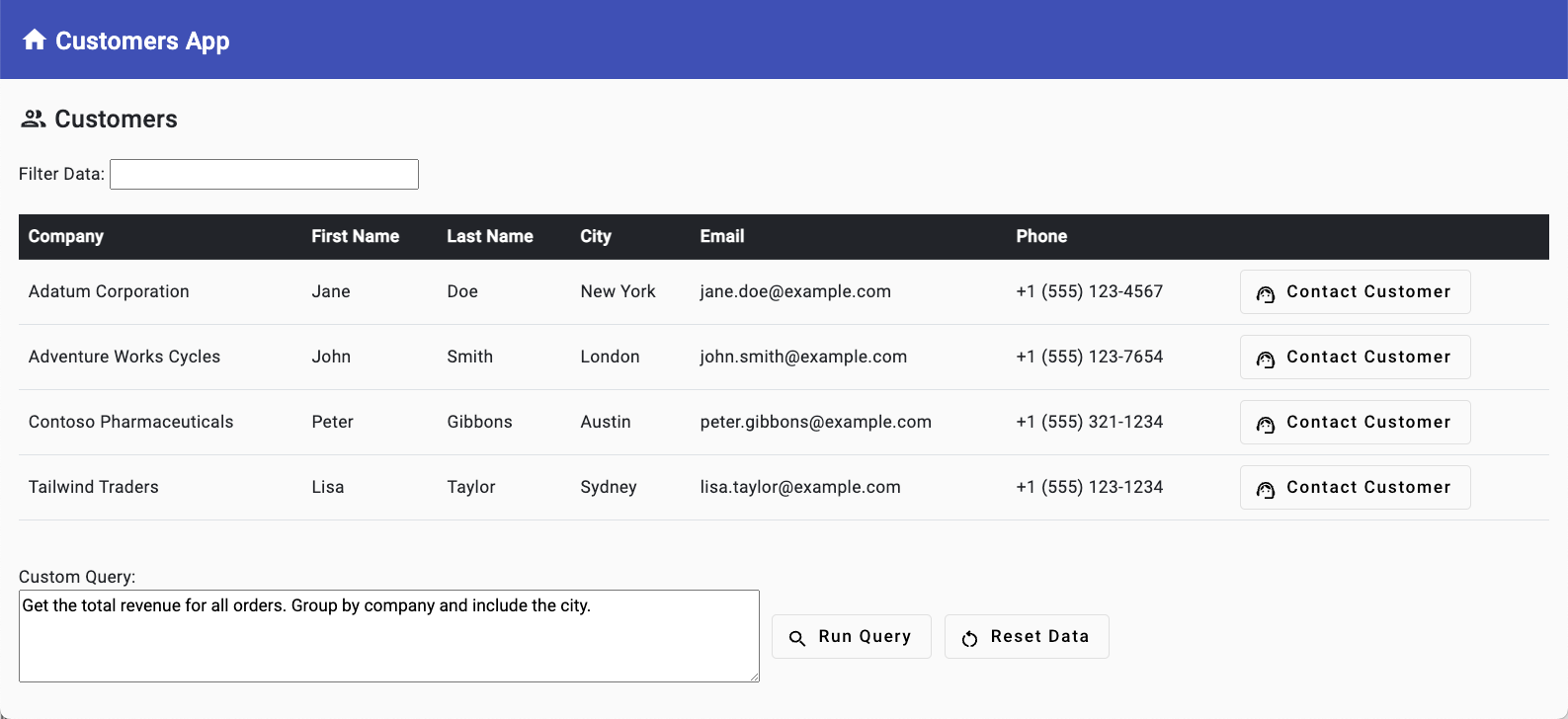

The application is a simple customer management app that allows users to manage their customers and related data. It consists of a front-end built using TypeScript that calls back-end APIs to retrieve data, interact with AI functionality, send email/SMS messages, and pull in organizational data. Here's an overview of the application solution that you'll walk through in this tutorial:

The tutorial will walk you through the process of setting up the required Azure and Microsoft 365 resources. It'll also walk you through the code that is used to implement the AI, communication, and organizational data features. While you won't be required to copy and paste code, some of the exercises will have you modify code to try out different scenarios.

What You'll Build in this Tutorial

Choose Your Own Adventure

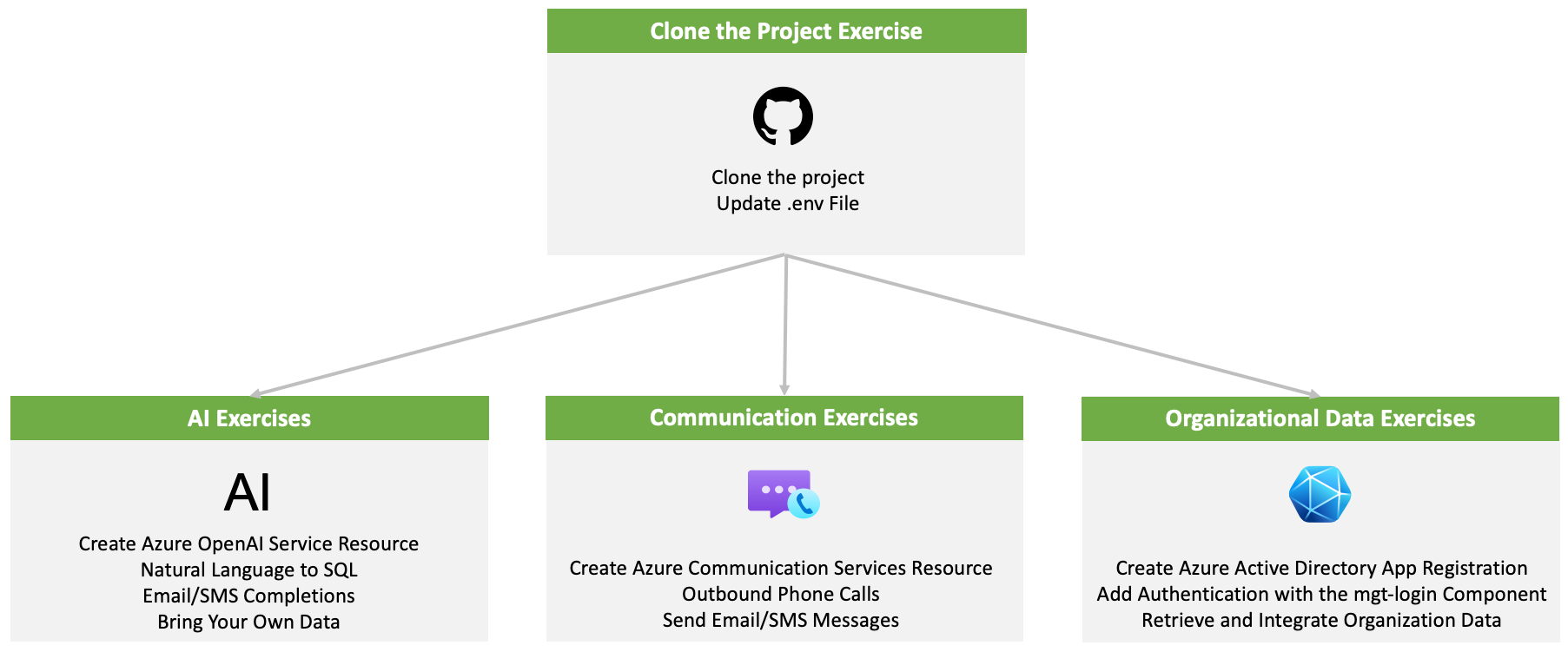

You can complete the entire tutorial from start to finish or complete only the topics of interest to you. The tutorial is broken down into the following topic areas:

- Clone the Project Exercise (required exercise).

- AI Exercises: Create an Azure OpenAI resource and use it to convert natural language to SQL, generate email/SMS messages, and work with your own data and documents.

- Communication Exercises: Create an Azure Communication Services resource and use it to make phone calls from the app and send email/SMS messages.

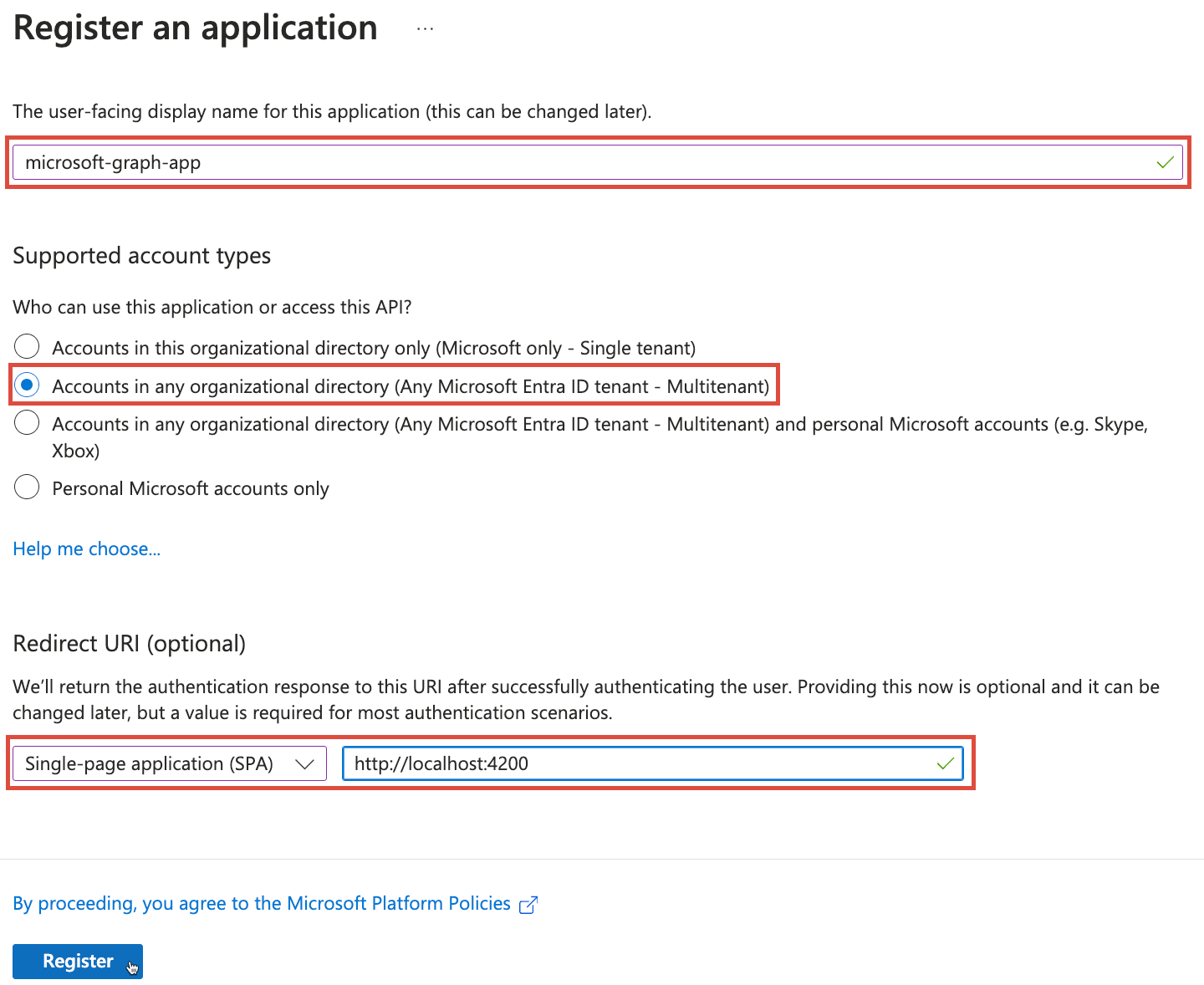

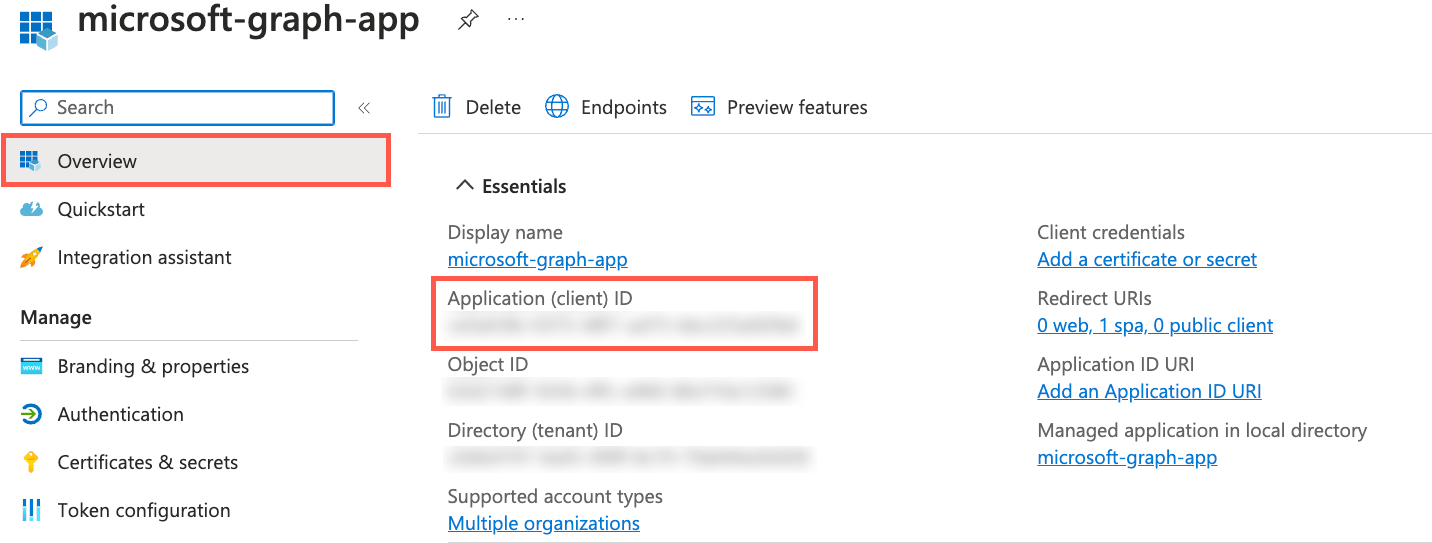

- Organizational Data Exercises: Create a Microsoft Entra ID app registration so that Microsoft Graph and Microsoft Graph Toolkit can be used to authenticate and pull organizational data into the application.

Prerequisites

- Node - Node 16+ and npm 7+ will be used for this project

- git

- Visual Studio Code (although Visual Studio Code is recommended, any editor can be used)

- Azure subscription

- Microsoft 365 developer tenant

- Docker Desktop or another OCI (Open Container Initiative) compliant container runtime, such as Podman or nerdctl, capable of running a container.

Microsoft Cloud Technologies used in this Tutorial

- Microsoft Entra ID

- Azure Communication Services

- Azure OpenAI Service

- Microsoft Graph

- Microsoft Graph Toolkit

Clone the Project

The code project used in this tutorial is available at https://github.com/microsoft/MicrosoftCloud. The project's repository includes both client-side and server-side code required to run the project, enabling you to explore the integrated features related to artificial intelligence (AI), communication, and organizational data. Additionally, the project serves as a resource to guide you in incorporating similar features into your own applications.

In this exercise you will:

- Clone the GitHub repository.

- Add an .env file into the project and update it.

Before proceeding, ensure that you have all of the prerequisites installed and configured as outlined in the Prerequisites section of this tutorial.

Clone the GitHub Repository and Create an .env File

Run the following command to clone the Microsoft Cloud GitHub Repository to your machine.

    git clone https://github.com/microsoft/MicrosoftCloud

Open the MicrosoftCloud/samples/openai-acs-msgraph folder in Visual Studio Code.

Note

Although we'll use Visual Studio Code throughout this tutorial, any code editor can be used to work with the sample project.

Notice the following folders and files:

- client: Client-side application code.

- server: Server-side API code.

- docker-compose.yml: Used to run a local PostgreSQL database.

Rename the .env.example in the root of the project to .env.

Open the .env file and take a moment to look through the keys that are included:

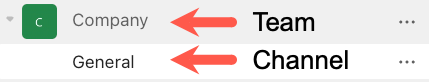

    ENTRAID_CLIENT_ID=
    TEAM_ID=
    CHANNEL_ID=
    OPENAI_API_KEY=
    OPENAI_ENDPOINT=
    OPENAI_API_VERSION=2023-06-01-preview
    OPENAI_MODEL=gpt-35-turbo
    POSTGRES_USER=
    POSTGRES_PASSWORD=
    ACS_CONNECTION_STRING=
    ACS_PHONE_NUMBER=
    ACS_EMAIL_ADDRESS=
    CUSTOMER_EMAIL_ADDRESS=
    CUSTOMER_PHONE_NUMBER=
    API_PORT=3000
    AZURE_COGNITIVE_SEARCH_ENDPOINT=
    AZURE_COGNITIVE_SEARCH_KEY=
    AZURE_COGNITIVE_SEARCH_INDEX=

Update the following values in .env. These values will be used by the API server to connect to the local PostgreSQL database.

    POSTGRES_USER=web
    POSTGRES_PASSWORD=web-password

Now that you have the project in place, let's try out some of the application features and learn how they're built. Select the Next button below to continue or jump to a specific exercise using the table of contents.
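Because several later exercises depend on values in the .env file, it can help to check which keys are still empty. The following is a minimal sketch, not part of the sample project: parseEnv() and findUnsetKeys() are hypothetical helper names, and the parser only handles simple KEY=value lines (no quoting or multi-line values).

```typescript
// Minimal .env parser: a sketch only, not a replacement for a full library.
function parseEnv(text: string): Record<string, string> {
  const values: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    // Skip blank lines and comments.
    if (!line || line.startsWith('#')) continue;
    const separator = line.indexOf('=');
    if (separator === -1) continue;
    const key = line.slice(0, separator).trim();
    const value = line.slice(separator + 1).trim();
    values[key] = value;
  }
  return values;
}

// Returns the keys from `required` that are absent or empty.
function findUnsetKeys(env: Record<string, string>, required: string[]): string[] {
  return required.filter(key => !env[key]);
}

// Sample content using a few of the keys listed above.
const sampleEnvText = `
POSTGRES_USER=web
POSTGRES_PASSWORD=web-password
OPENAI_API_KEY=
API_PORT=3000
`;

const parsedEnv = parseEnv(sampleEnvText);
const unsetKeys = findUnsetKeys(parsedEnv, ['POSTGRES_USER', 'POSTGRES_PASSWORD', 'OPENAI_API_KEY']);
console.log(unsetKeys); // [ 'OPENAI_API_KEY' ]
```

In this sample, OPENAI_API_KEY is reported because it has no value yet; it gets filled in during the AI exercises.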

AI: Create an Azure OpenAI Resource and Deploy a Model

To get started using Azure OpenAI in your applications, you need to create an Azure OpenAI Service and deploy a model that can be used to perform tasks such as converting natural language to SQL, generating email/SMS message content, and more.

In this exercise you will:

- Create an Azure OpenAI Service resource.

- Deploy a model.

- Update the .env file with values from your Azure OpenAI Service resource.

Create an Azure OpenAI Service Resource

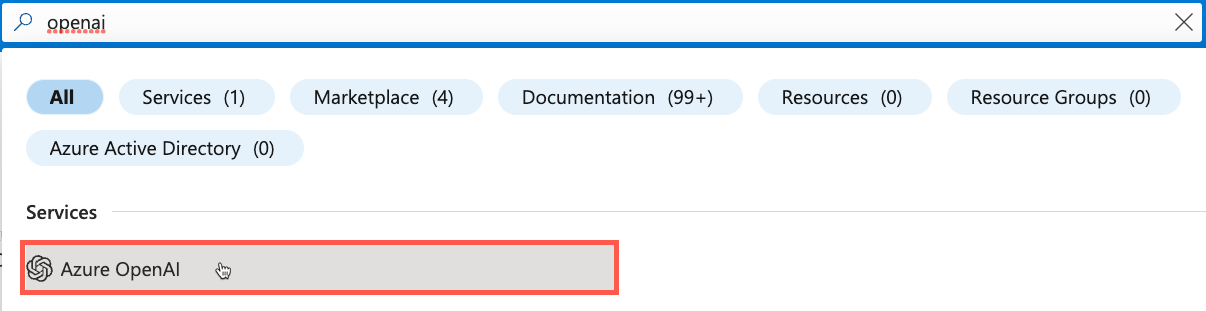

Visit the Azure portal in your browser and sign in.

Type openai in the search bar at the top of the portal page and select Azure OpenAI from the options that appear.

Select Create in the toolbar.

Note

If you see a message about completing an application form to enable Azure OpenAI on your subscription, select the Click here to request access to Azure OpenAI service link and complete the form. Once you've completed the form, you'll need to wait for the Azure OpenAI team to approve your request. After receiving your approval notice, you can go back through this exercise and create the resource.

While this tutorial focuses on Azure OpenAI, if you have an OpenAI API key and would like to use that while you're waiting for access to Azure OpenAI, you can skip this section and go directly to the Update the Project's .env File section below. Assign your OpenAI API key to OPENAI_API_KEY in the .env file (you can ignore any other .env instructions related to OpenAI). Once you have access to Azure OpenAI, revisit this exercise, create the resource and model, and update the .env file with the values from your Azure OpenAI resource.

Perform the following tasks:

- Select your Azure subscription.

- Select the resource group to use (create a new one if needed).

- Select the region you'd like to use.

- Enter the resource name. It must be a unique value.

- Select the Standard S0 pricing tier.

Select Next until you get to the Review + submit screen. Select Create.

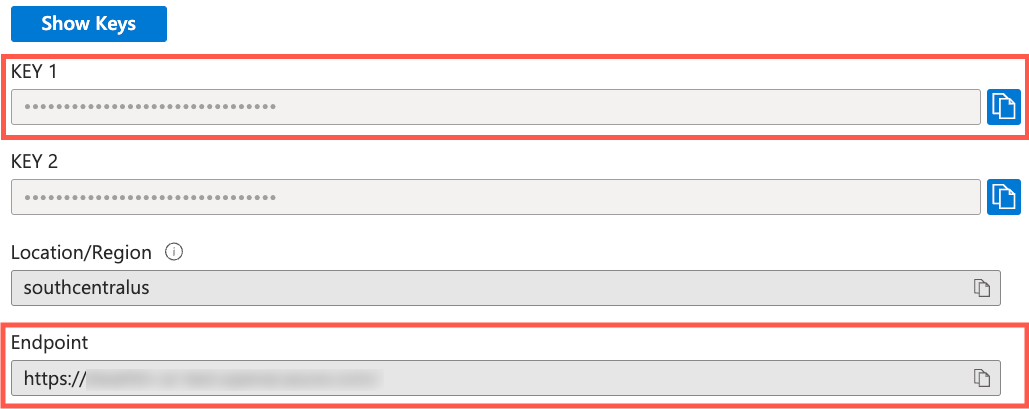

Once your Azure OpenAI resource is created, navigate to it and select Keys and Endpoint in the Resource Management section.

Locate the KEY 1 and Endpoint values. You'll use both values in the next section so copy them to a local file.

Select Model deployments in the Resource Management section.

Select the Manage Deployments button to go to Azure OpenAI Studio.

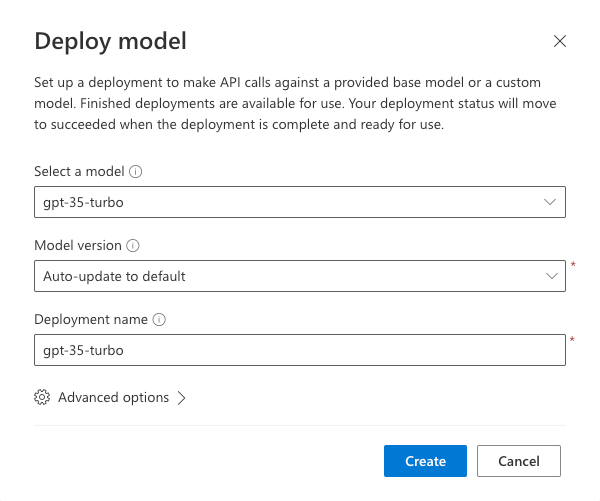

Select Create new deployment in the toolbar.

Enter the following values:

- Model: gpt-35-turbo.

- Model version: Auto-update to default.

- Deployment name: gpt-35-turbo.

Note

Azure OpenAI supports several different types of models. Each model can be used to handle different scenarios.

Select Create.

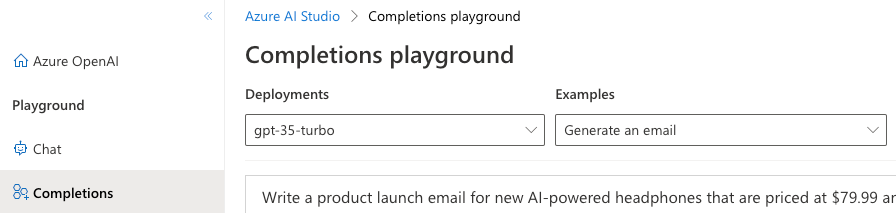

Once the model is deployed, select Completions in the Playground section.

Select the gpt-35-turbo model from the Deployments dropdown. Select Generate an email from the Examples dropdown.

Take a moment to read through the prompt text that's provided. Select Generate to see the text that the model generates.

Warning

If you get an error message about the model not being ready, wait a few minutes and try again. It can take a few minutes for the model to be fully deployed and ready to use.

If you get an error saying, "The completion operation does not work with the specified model.", this normally means you selected a newer model version rather than the default version. Select Deployments and delete the model you created earlier. Create a new gpt-35-turbo model deployment, ensure that you select Auto-update to default for the Model version, give it a name of gpt-35-turbo, and wait for the model to be fully deployed. Once it's deployed, go back to the Playground and try the completion again.

Select Regenerate multiple times. Note that the text is different each time.

To the right of the screen you'll see properties listed such as Temperature. Change the Temperature value to 0 and select Regenerate again. Read through the email text that is generated.

Select Regenerate one final time and note that the email text is the same as the text that was generated previously.

Note

Lowering the temperature means that the model will produce more repetitive and deterministic responses. Increasing the temperature will result in more unexpected or creative responses.
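To build intuition for the temperature setting, here is a small sketch of the standard softmax-with-temperature calculation. How Azure OpenAI applies temperature internally isn't described in this tutorial, so treat this as a general illustration; the scores are made-up values and softmaxWithTemperature() is a hypothetical name.

```typescript
// Converts raw model scores (logits) into probabilities, scaled by temperature.
function softmaxWithTemperature(logits: number[], temperature: number): number[] {
  if (temperature <= 0) {
    // Temperature 0 is treated here as greedy selection: all probability on the top score.
    const maxIndex = logits.indexOf(Math.max(...logits));
    return logits.map((_, i) => (i === maxIndex ? 1 : 0));
  }
  const scaled = logits.map(z => z / temperature);
  const maxScaled = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map(z => Math.exp(z - maxScaled));
  const total = exps.reduce((sum, e) => sum + e, 0);
  return exps.map(e => e / total);
}

// Hypothetical scores for three candidate tokens.
const candidateScores = [2.0, 1.0, 0.5];

const defaultTemp = softmaxWithTemperature(candidateScores, 1.0);
const lowTemp = softmaxWithTemperature(candidateScores, 0.2);
const zeroTemp = softmaxWithTemperature(candidateScores, 0);

console.log('temperature 1.0:', defaultTemp);
console.log('temperature 0.2:', lowTemp);
console.log('temperature 0:', zeroTemp);
```

At a lower temperature the top-scoring candidate takes a larger share of the probability, which is why repeated runs converge on the same text.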

Update the Project's .env File

Go back to Visual Studio Code and open the .env file at the root of the project.

Copy the KEY 1 value from your Azure OpenAI resource and assign it to OPENAI_API_KEY in the .env file located in the root of the openai-acs-msgraph folder:

    OPENAI_API_KEY=<KEY_1_VALUE>

Copy the Endpoint value and assign it to OPENAI_ENDPOINT in the .env file. Remove the / character from the end of the value if it's present.

    OPENAI_ENDPOINT=<ENDPOINT_VALUE>

Note

You'll see that values for OPENAI_MODEL and OPENAI_API_VERSION are already set in the .env file. The model value is set to gpt-35-turbo, which should match the deployment name you entered earlier in this exercise. The API version is set to a supported value defined in the Azure OpenAI reference documentation.

Save the .env file.
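The reason for removing the trailing / from the endpoint is that the value is later combined with a path to form the request URL. The sketch below shows one way to make that robust. The URL shape follows the one used by the sample's server code, while normalizeEndpoint() and buildChatCompletionsUrl() are hypothetical helpers and the endpoint shown is a placeholder.

```typescript
// Removes any trailing "/" characters so the final URL doesn't contain "//".
function normalizeEndpoint(endpoint: string): string {
  return endpoint.trim().replace(/\/+$/, '');
}

// Builds the chat completions URL from the endpoint, deployment name, and API version.
function buildChatCompletionsUrl(endpoint: string, deployment: string, apiVersion: string): string {
  return `${normalizeEndpoint(endpoint)}/openai/deployments/${deployment}/chat/completions?api-version=${apiVersion}`;
}

// "https://example-resource.openai.azure.com/" is a placeholder, not a real resource.
const chatUrl = buildChatCompletionsUrl(
  'https://example-resource.openai.azure.com/',
  'gpt-35-turbo',
  '2023-06-01-preview'
);
console.log(chatUrl);
```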

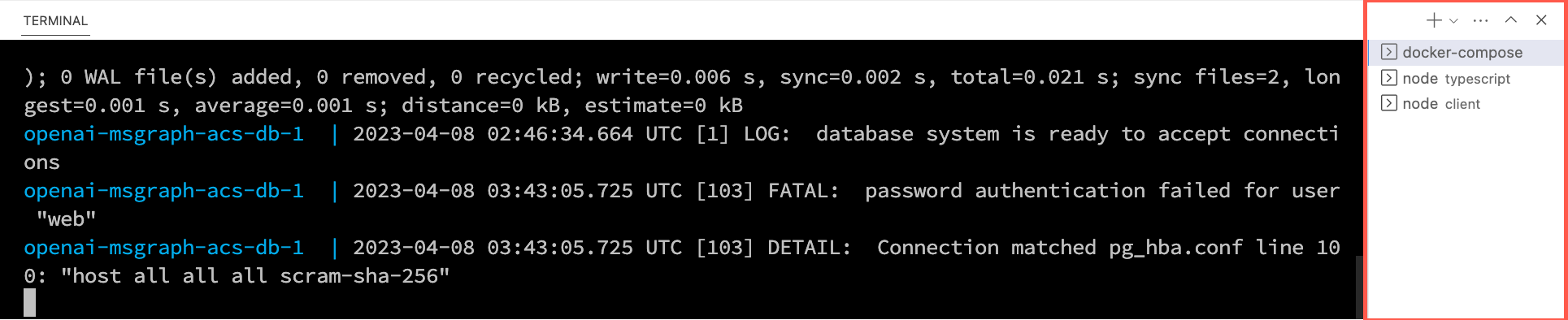

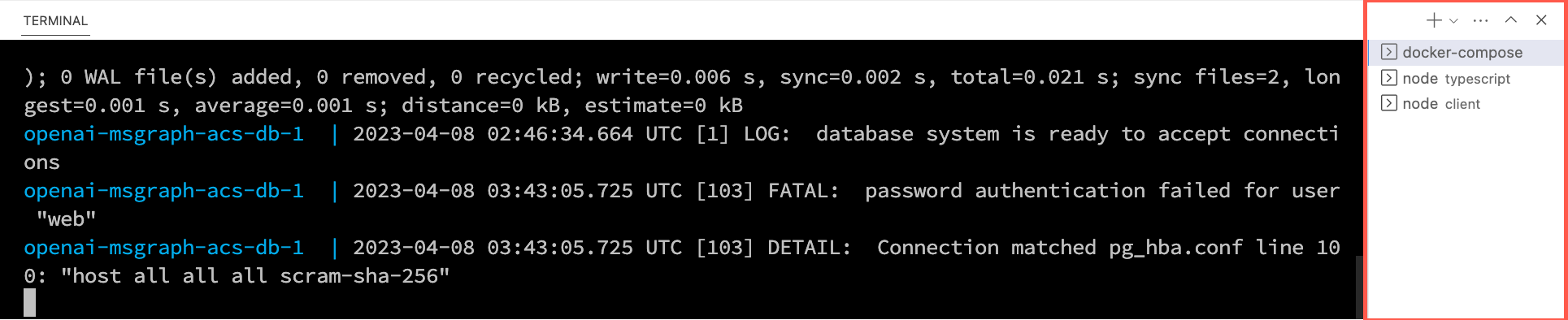

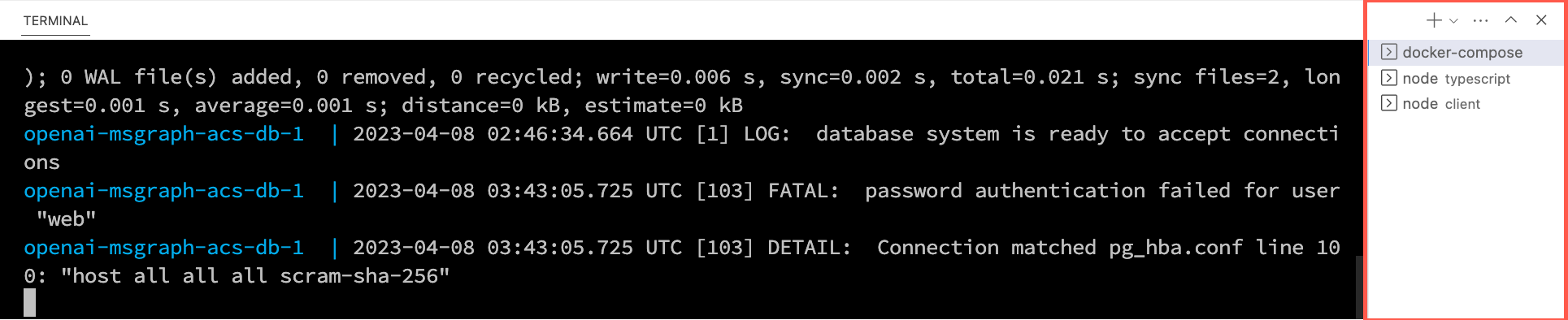

Start the Application Services

It's time to start up your application services including the database, API server, and web server.

In the following steps you'll create three terminal windows in Visual Studio Code.

Right-click on the .env file in the Visual Studio Code file list and select Open in Integrated Terminal. Ensure that your terminal is at the root of the project - openai-acs-msgraph - before continuing.

Choose from one of the following options to start the PostgreSQL database:

- If you have Docker Desktop installed and running, run docker-compose up in the terminal window and press Enter.

- If you have Podman with podman-compose installed and running, run podman-compose up in the terminal window and press Enter.

- To run the PostgreSQL container directly using Docker Desktop, Podman, nerdctl, or another container runtime you have installed, run the following command in the terminal window:

Mac, Linux, or Windows Subsystem for Linux (WSL):

    [docker | podman | nerdctl] run --name postgresDb -e POSTGRES_USER=web -e POSTGRES_PASSWORD=web-password -e POSTGRES_DB=CustomersDB -v $(pwd)/data:/var/lib/postgresql/data -p 5432:5432 postgres

Windows with PowerShell:

    [docker | podman] run --name postgresDb -e POSTGRES_USER=web -e POSTGRES_PASSWORD=web-password -e POSTGRES_DB=CustomersDB -v ${PWD}/data:/var/lib/postgresql/data -p 5432:5432 postgres

Once the database container starts, press the + icon in the Visual Studio Code Terminal toolbar to create a second terminal window.

cd into the server/typescript folder and run the following commands to install the dependencies and start the API server.

    npm install
    npm start

Press the + icon again in the Visual Studio Code Terminal toolbar to create a third terminal window.

cd into the client folder and run the following commands to install the dependencies and start the web server.

    npm install
    npm start

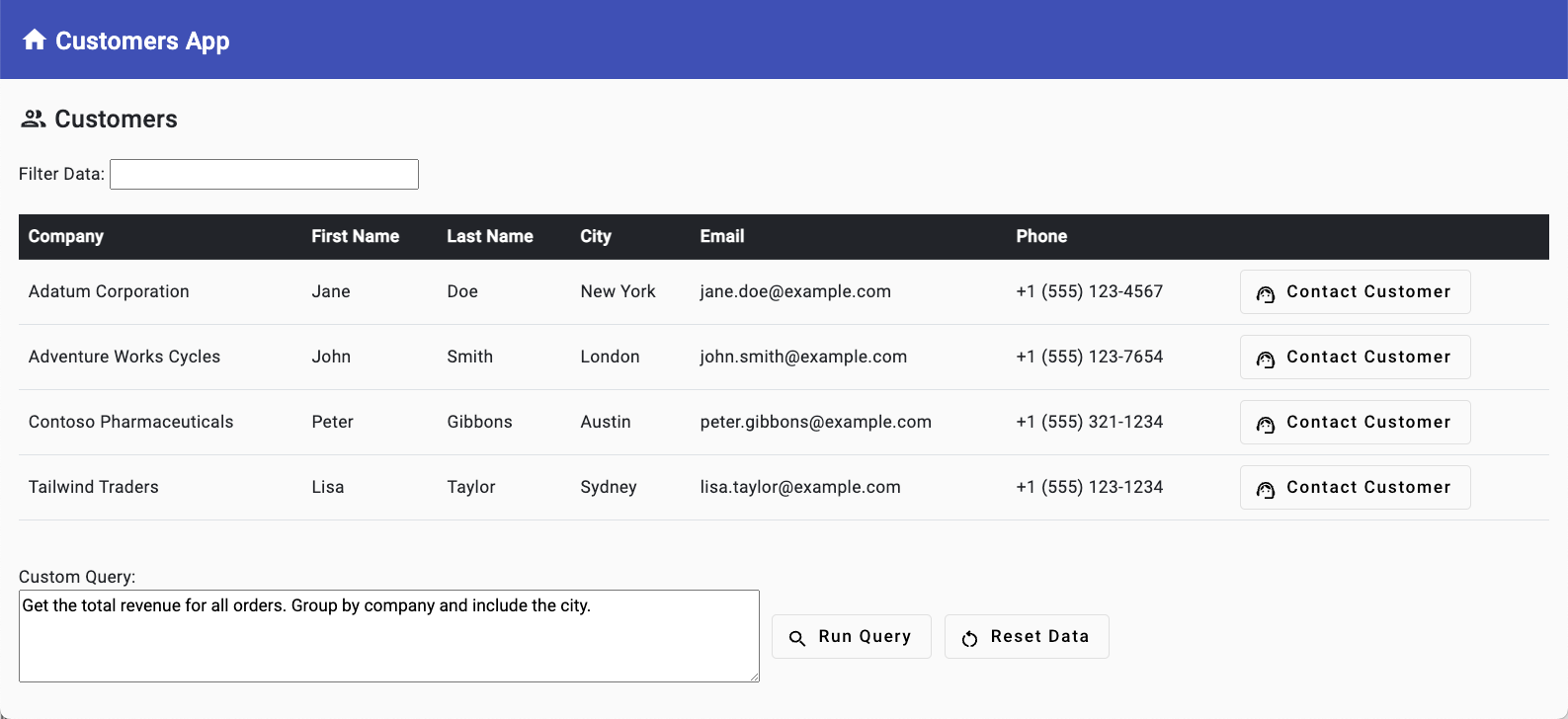

A browser will launch and you'll be taken to http://localhost:4200.
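The database container started earlier in this exercise uses the user web, the password web-password, the database CustomersDB, and port 5432. How the sample's API server assembles its connection settings isn't shown in this tutorial, so the following is only a sketch of how those values could be combined into a standard PostgreSQL connection URI. DbSettings and buildPostgresUrl() are hypothetical names.

```typescript
interface DbSettings {
  user: string;
  password: string;
  host: string;
  port: number;
  database: string;
}

// Builds a PostgreSQL connection URI, percent-encoding values that may contain reserved characters.
function buildPostgresUrl(settings: DbSettings): string {
  const user = encodeURIComponent(settings.user);
  const password = encodeURIComponent(settings.password);
  const database = encodeURIComponent(settings.database);
  return `postgresql://${user}:${password}@${settings.host}:${settings.port}/${database}`;
}

const localDbUrl = buildPostgresUrl({
  user: 'web',
  password: 'web-password',
  host: 'localhost',
  port: 5432,
  database: 'CustomersDB'
});
console.log(localDbUrl); // postgresql://web:web-password@localhost:5432/CustomersDB
```

Encoding the user and password matters once a real password contains characters such as @ or /, which would otherwise be read as part of the URI structure.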

AI: Natural Language to SQL

The quote "Just because you can doesn't mean you should" is a useful guide when thinking about AI capabilities. For example, Azure OpenAI's natural language to SQL feature allows users to make database queries in plain English, which can be a powerful tool to enhance their productivity. However, powerful doesn't always mean appropriate or safe. This exercise will demonstrate how to use this AI feature while also discussing important considerations to keep in mind before deciding to implement it.

Here's an example of a natural language query that can be used to retrieve data from a database:

Get the total revenue for all companies in London.

With the proper prompts, Azure OpenAI will convert this query to SQL that can be used to return results from the database. As a result, non-technical users including business analysts, marketers, and executives can more easily retrieve valuable information from databases without grappling with intricate SQL syntax or relying on constrained datagrids and filters. This streamlined approach can boost productivity by eliminating the need for users to seek assistance from technical experts.

This exercise provides a starting point that will help you understand how natural language to SQL works, introduce you to some important considerations, get you thinking about pros and cons, and show you the code to get started.

In this exercise, you will:

- Use GPT prompts to convert natural language to SQL.

- Experiment with different GPT prompts.

- Use the generated SQL to query the PostgreSQL database started earlier.

- Return query results from PostgreSQL and display them in the browser.

Let's start by experimenting with different GPT prompts that can be used to convert natural language to SQL.

Using the Natural Language to SQL Feature

In the previous exercise you started the database, APIs, and application. You also updated the

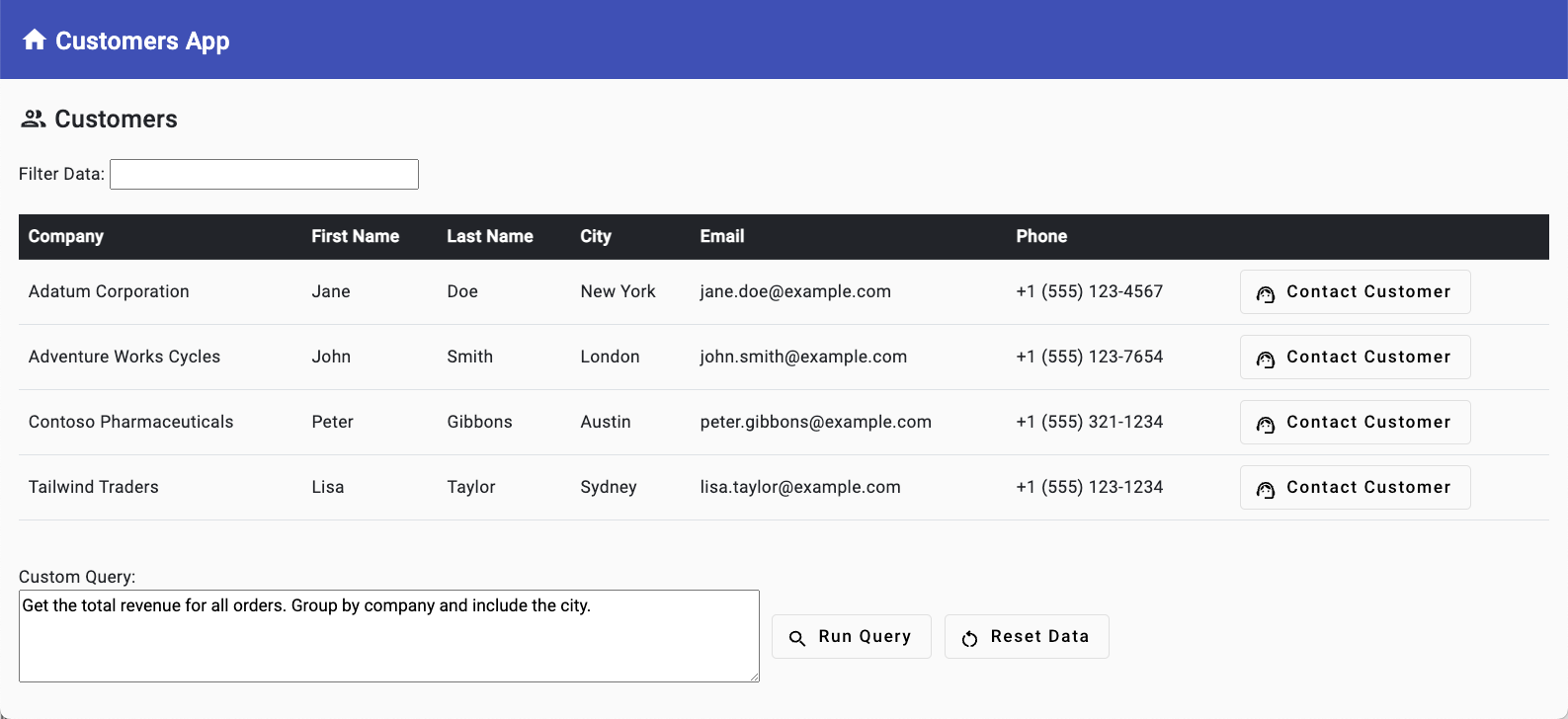

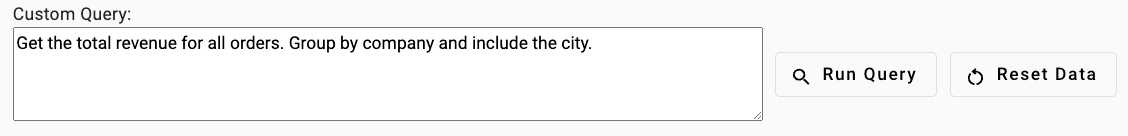

.envfile. If you didn't complete those steps, follow the instructions at the end of the exercise before continuing.Go back to the browser (http://localhost:4200) and locate the Custom Query section of the page below the datagrid. Notice that a sample query value is already included: Get the total revenue for all orders. Group by company and include the city.

Select the Run Query button. This will pass the user's natural language query to Azure OpenAI which will convert it to SQL. The SQL query will then be used to query the database and return any potential results.

Run the following Custom Query:

Get the total revenue for Adventure Works Cycles. Include the contact information as well.

View the terminal window running the API server in Visual Studio Code and notice it displays the SQL query returned from Azure OpenAI. The JSON data is used by the server-side APIs to query the PostgreSQL database. Any string values included in the query are added as parameter values to prevent SQL injection attacks:

    {
      "sql": "SELECT c.company, c.city, c.email, SUM(o.total) AS revenue FROM customers c INNER JOIN orders o ON c.id = o.customer_id WHERE c.company = $1 GROUP BY c.company, c.city, c.email",
      "paramValues": ["Adventure Works Cycles"]
    }

Go back to the browser and select Reset Data to view all of the customers again in the datagrid.
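Since the model returns both the SQL text and its parameter values, one inexpensive sanity check is to confirm that the $1, $2, ... placeholders line up with the number of values. The sketch below is an illustration only and is not part of the sample project; GeneratedQuery, highestPlaceholder(), and placeholdersMatchParams() are hypothetical names, and the check doesn't account for a $n sequence that appears inside a quoted string literal.

```typescript
interface GeneratedQuery {
  sql: string;
  paramValues: unknown[];
}

// Returns the highest $n placeholder index used in the SQL text (0 if there are none).
function highestPlaceholder(sql: string): number {
  const pattern = /\$(\d+)/g;
  let highest = 0;
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(sql)) !== null) {
    highest = Math.max(highest, Number(match[1]));
  }
  return highest;
}

// True when the highest placeholder index equals the number of supplied values.
function placeholdersMatchParams(query: GeneratedQuery): boolean {
  return highestPlaceholder(query.sql) === query.paramValues.length;
}

const revenueQuery: GeneratedQuery = {
  sql: 'SELECT c.company, SUM(o.total) AS revenue FROM customers c INNER JOIN orders o ON c.id = o.customer_id WHERE c.company = $1 GROUP BY c.company',
  paramValues: ['Adventure Works Cycles']
};

console.log(placeholdersMatchParams(revenueQuery)); // true
console.log(placeholdersMatchParams({ sql: 'SELECT * FROM customers WHERE city = $1', paramValues: [] })); // false
```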

Exploring the Natural Language to SQL Code

Tip

If you're using Visual Studio Code, you can open files directly by selecting:

- Windows/Linux: Ctrl + P

- Mac: Cmd + P

Then type the name of the file you want to open.

Note

The goal of this exercise is to show what's possible with natural language to SQL functionality and demonstrate how to get started using it. As mentioned earlier, it's important to discuss if this type of AI is appropriate for your organization before proceeding with any implementation. It's also imperative to plan for proper prompt rules and database security measures to prevent unauthorized access and protect sensitive data.

Now that you've seen the natural language to SQL feature in action, let's examine how it is implemented.

Open the server/apiRoutes.ts file and locate the generateSql route. This API route is called by the client-side application running in the browser and used to generate SQL from a natural language query. Once the SQL query is retrieved, it's used to query the database and return results.

    router.post('/generateSql', async (req, res) => {
        const userPrompt = req.body.prompt;

        if (!userPrompt) {
            return res.status(400).json({ error: 'Missing parameter "prompt".' });
        }

        try {
            // Call Azure OpenAI to convert the user prompt into a SQL query
            const sqlCommandObject = await getSQLFromNLP(userPrompt);

            let result: any[] = [];
            // Execute the SQL query
            if (sqlCommandObject && !sqlCommandObject.error) {
                result = await queryDb(sqlCommandObject) as any[];
            }
            else {
                result = [ { query_error : sqlCommandObject.error } ];
            }
            res.json(result);
        } catch (e) {
            console.error(e);
            res.status(500).json({ error: 'Error generating or running SQL query.' });
        }
    });

Notice the following functionality in the generateSql route:

- It retrieves the user query value from req.body.prompt and assigns it to a variable named userPrompt. This value will be used in the GPT prompt.

- It calls a getSQLFromNLP() function to convert natural language to SQL.

- It passes the generated SQL to a function named queryDb that executes the SQL query and returns results from the database.

Open the server/openAI.ts file in your editor and locate the getSQLFromNLP() function. This function is called by the generateSql route and is used to convert natural language to SQL.

    async function getSQLFromNLP(userPrompt: string): Promise<QueryData> {
        // Get the high-level database schema summary to be used in the prompt.
        // The db.schema file could be generated by a background process or the
        // schema could be dynamically retrieved.
        const dbSchema = await fs.promises.readFile('db.schema', 'utf8');

        const systemPrompt = `
          Assistant is a natural language to SQL bot that returns only a JSON object with the SQL query and the parameter values in it.
          The SQL will query a PostgreSQL database.

          PostgreSQL tables, with their columns:

          ${dbSchema}

          Rules:
          - Convert any strings to a PostgreSQL parameterized query value to avoid SQL injection attacks.
          - Always return a JSON object with the SQL query and the parameter values in it.
          - Return a JSON object. Do NOT include any text outside of the JSON object.
          - Example JSON object to return: { "sql": "", "paramValues": [] }

          User: "Display all company reviews. Group by company."
          Assistant: { "sql": "SELECT * FROM reviews", "paramValues": [] }

          User: "Display all reviews for companies located in cities that start with 'L'."
          Assistant: { "sql": "SELECT r.* FROM reviews r INNER JOIN customers c ON r.customer_id = c.id WHERE c.city LIKE 'L%'", "paramValues": [] }

          User: "Display revenue for companies located in London. Include the company name and city."
          Assistant: {
            "sql": "SELECT c.company, c.city, SUM(o.total) AS revenue FROM customers c INNER JOIN orders o ON c.id = o.customer_id WHERE c.city = $1 GROUP BY c.company, c.city",
            "paramValues": ["London"]
          }

          User: "Get the total revenue for Adventure Works Cycles. Include the contact information as well."
          Assistant: {
            "sql": "SELECT c.company, c.city, c.email, SUM(o.total) AS revenue FROM customers c INNER JOIN orders o ON c.id = o.customer_id WHERE c.company = $1 GROUP BY c.company, c.city, c.email",
            "paramValues": ["Adventure Works Cycles"]
          }

          - Convert any strings to a PostgreSQL parameterized query value to avoid SQL injection attacks.
          - Do NOT include any text outside of the JSON object. Do not provide any additional explanations or context. Just the JSON object is needed.
        `;

        let queryData: QueryData = { sql: '', paramValues: [], error: '' };
        let results = '';
        try {
            results = await callOpenAI(systemPrompt, userPrompt);
            if (results) {
                console.log('results', results);
                const parsedResults = JSON.parse(results);
                queryData = { ...queryData, ...parsedResults };
                if (isProhibitedQuery(queryData.sql)) {
                    queryData.sql = '';
                    queryData.error = 'Prohibited query.';
                }
            }
        } catch (error) {
            console.log(error);
            if (isProhibitedQuery(results)) {
                queryData.sql = '';
                queryData.error = 'Prohibited query.';
            } else {
                queryData.error = results;
            }
        }

        return queryData;
    }

- A userPrompt parameter is passed into the function. The userPrompt value is the natural language query entered by the user in the browser.

- A systemPrompt defines the type of AI assistant to be used and rules that should be followed. This helps Azure OpenAI understand the database structure, what rules to apply, and how to return the generated SQL query and parameters.

- A function named callOpenAI() is called and the systemPrompt and userPrompt values are passed to it.

- The results are checked to ensure no prohibited values are included in the generated SQL query. If prohibited values are found, the SQL query is set to an empty string.
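The getSQLFromNLP() function merges whatever JSON.parse() returns into queryData, so a response with the wrong shape would pass through unchecked. A stricter alternative is to validate the parsed value before using it. The following is a minimal sketch under stated assumptions: the QueryData shape is inferred from the default object shown in the function (the sample's actual type definition isn't shown here), and toQueryData() is a hypothetical helper.

```typescript
// Assumed shape, inferred from the default value { sql: '', paramValues: [], error: '' }.
interface QueryData {
  sql: string;
  paramValues: unknown[];
  error: string;
}

// Parses the raw model output and returns a QueryData with `error` set if the shape is wrong.
function toQueryData(raw: string): QueryData {
  const fallback: QueryData = { sql: '', paramValues: [], error: '' };
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return { ...fallback, error: 'Response was not valid JSON.' };
  }
  if (typeof parsed !== 'object' || parsed === null) {
    return { ...fallback, error: 'Response was not a JSON object.' };
  }
  const candidate = parsed as { sql?: unknown; paramValues?: unknown };
  if (typeof candidate.sql !== 'string' || !Array.isArray(candidate.paramValues)) {
    return { ...fallback, error: 'Response is missing "sql" or "paramValues".' };
  }
  return { sql: candidate.sql, paramValues: candidate.paramValues, error: '' };
}

const validResult = toQueryData('{ "sql": "SELECT * FROM reviews", "paramValues": [] }');
const proseResult = toQueryData('Sorry, I cannot help with that request.');
const wrongShapeResult = toQueryData('{ "sql": 42 }');

console.log(validResult.sql);        // SELECT * FROM reviews
console.log(proseResult.error);      // Response was not valid JSON.
console.log(wrongShapeResult.error); // Response is missing "sql" or "paramValues".
```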

Let's walk through the system prompt in more detail:

    const systemPrompt = `
      Assistant is a natural language to SQL bot that returns only a JSON object with the SQL query and the parameter values in it.
      The SQL will query a PostgreSQL database.

      PostgreSQL tables, with their columns:

      ${dbSchema}

      Rules:
      - Convert any strings to a PostgreSQL parameterized query value to avoid SQL injection attacks.
      - Always return a JSON object with the SQL query and the parameter values in it.
      - Return a JSON object. Do NOT include any text outside of the JSON object.
      - Example JSON object to return: { "sql": "", "paramValues": [] }

      User: "Display all company reviews. Group by company."
      Assistant: { "sql": "SELECT * FROM reviews", "paramValues": [] }

      User: "Display all reviews for companies located in cities that start with 'L'."
      Assistant: { "sql": "SELECT r.* FROM reviews r INNER JOIN customers c ON r.customer_id = c.id WHERE c.city LIKE 'L%'", "paramValues": [] }

      User: "Display revenue for companies located in London. Include the company name and city."
      Assistant: {
        "sql": "SELECT c.company, c.city, SUM(o.total) AS revenue FROM customers c INNER JOIN orders o ON c.id = o.customer_id WHERE c.city = $1 GROUP BY c.company, c.city",
        "paramValues": ["London"]
      }

      User: "Get the total revenue for Adventure Works Cycles. Include the contact information as well."
      Assistant: {
        "sql": "SELECT c.company, c.city, c.email, SUM(o.total) AS revenue FROM customers c INNER JOIN orders o ON c.id = o.customer_id WHERE c.company = $1 GROUP BY c.company, c.city, c.email",
        "paramValues": ["Adventure Works Cycles"]
      }

      - Convert any strings to a PostgreSQL parameterized query value to avoid SQL injection attacks.
      - Do NOT include any text outside of the JSON object. Do not provide any additional explanations or context. Just the JSON object is needed.
    `;

The type of AI assistant to be used is defined. In this case a "natural language to SQL bot".

Table names and columns in the database are defined. The high-level schema included in the prompt can be found in the server/db.schema file and looks like the following.

    - customers (id, company, city, email)
    - orders (id, customer_id, date, total)
    - order_items (id, order_id, product_id, quantity, price)
    - reviews (id, customer_id, review, date, comment)

Tip

You may consider creating read-only views that only contain the data users are allowed to query using natural language to SQL.

A rule is defined to convert any string values to a parameterized query value to avoid SQL injection attacks.

A rule is defined to always return a JSON object (and nothing else) with the SQL query and the parameter values in it.

An example is given for the type of JSON object to return.

Example user prompts and the expected SQL query and parameter values are provided. This is referred to as "few-shot" learning. Although LLMs are trained on large amounts of data, they can be adapted to new tasks with only a few examples. An alternative approach is "zero-shot" learning where no example is provided and the model is expected to generate the correct SQL query and parameter values.
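The difference between few-shot and zero-shot prompting comes down to whether example exchanges are included in the prompt text. The sketch below assembles a prompt from its parts so the two variants are easy to compare. It's an illustration rather than the sample's implementation: FewShotExample and buildPrompt() are hypothetical names, and the intro, rule, and example strings are shortened versions of the ones in the system prompt.

```typescript
interface FewShotExample {
  user: string;
  assistant: { sql: string; paramValues: unknown[] };
}

// Assembles a system prompt. Passing an empty `examples` array yields a zero-shot prompt.
function buildPrompt(intro: string, schema: string, rules: string[], examples: FewShotExample[]): string {
  const ruleLines = rules.map(rule => `- ${rule}`).join('\n');
  const exampleLines = examples
    .map(ex => `User: "${ex.user}"\nAssistant: ${JSON.stringify(ex.assistant)}`)
    .join('\n\n');
  return [
    intro,
    `PostgreSQL tables, with their columns:\n${schema}`,
    `Rules:\n${ruleLines}`,
    exampleLines
  ]
    .filter(section => section.length > 0)
    .join('\n\n');
}

const promptIntro = 'Assistant is a natural language to SQL bot that returns only a JSON object.';
const promptSchema = '- customers (id, company, city, email)\n- orders (id, customer_id, date, total)';
const promptRules = ['Convert any strings to a PostgreSQL parameterized query value.'];
const promptExamples: FewShotExample[] = [
  {
    user: 'Display revenue for companies located in London.',
    assistant: {
      sql: 'SELECT c.company, SUM(o.total) AS revenue FROM customers c INNER JOIN orders o ON c.id = o.customer_id WHERE c.city = $1 GROUP BY c.company',
      paramValues: ['London']
    }
  }
];

const fewShotPrompt = buildPrompt(promptIntro, promptSchema, promptRules, promptExamples);
const zeroShotPrompt = buildPrompt(promptIntro, promptSchema, promptRules, []);

console.log(fewShotPrompt);
console.log('---');
console.log(zeroShotPrompt);
```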

Two critical rules are repeated at the bottom of the system prompt to account for "recency bias", where text near the end of a prompt can influence the output more than text near the beginning.

Tip

Learn more about recency bias in the Azure OpenAI documentation.

The getSQLFromNLP() function sends the system and user prompts to a function named callOpenAI() which is also located in the server/openAI.ts file. The callOpenAI() function determines if the Azure OpenAI service or OpenAI service should be called by checking environment variables. If a key, endpoint, and model are available in the environment variables then Azure OpenAI is called, otherwise OpenAI is called.

    function callOpenAI(systemPrompt: string, userPrompt: string, temperature = 0, useBYOD = false) {
        const isAzureOpenAI = OPENAI_API_KEY && OPENAI_ENDPOINT && OPENAI_MODEL;

        if (isAzureOpenAI && useBYOD) {
            return getAzureOpenAIBYODCompletion(systemPrompt, userPrompt, temperature);
        }

        if (isAzureOpenAI) {
            return getAzureOpenAICompletion(systemPrompt, userPrompt, temperature);
        }

        return getOpenAICompletion(systemPrompt, userPrompt, temperature);
    }

Note

Although we'll focus on Azure OpenAI throughout this tutorial, if you only supply an OPENAI_API_KEY value in the .env file, the application will use OpenAI instead. If you choose to use OpenAI instead of Azure OpenAI you may see different results in some cases.

Locate the getAzureOpenAICompletion() function.

    async function getAzureOpenAICompletion(systemPrompt: string, userPrompt: string, temperature: number): Promise<string> {
        checkRequiredEnvVars(['OPENAI_API_KEY', 'OPENAI_ENDPOINT', 'OPENAI_MODEL']);

        const fetchUrl = `${OPENAI_ENDPOINT}/openai/deployments/${OPENAI_MODEL}/chat/completions?api-version=${OPENAI_API_VERSION}`;

        const messageData: ChatGPTData = {
            max_tokens: 1024,
            temperature,
            messages: [
                { role: 'system', content: systemPrompt },
                { role: 'user', content: userPrompt }
            ]
        };

        const headersBody: OpenAIHeadersBody = {
            method: 'POST',
            headers: {
                'Content-Type': 'application/json',
                'api-key': OPENAI_API_KEY
            },
            body: JSON.stringify(messageData),
        };

        const completion = await fetchAndParse(fetchUrl, headersBody);
        console.log(completion);

        let content = (completion.choices[0]?.message?.content?.trim() ?? '') as string;
        console.log('Azure OpenAI Output: \n', content);
        if (content && content.includes('{') && content.includes('}')) {
            content = extractJson(content);
        }
        console.log('After parse: \n', content);

        return content;
    }

    function checkRequiredEnvVars(requiredEnvVars: string[]) {
        for (const envVar of requiredEnvVars) {
            if (!process.env[envVar]) {
                throw new Error(`Missing ${envVar} in environment variables.`);
            }
        }
    }

    async function fetchAndParse(url: string, headersBody: Record<string, any>): Promise<any> {
        try {
            const response = await fetch(url, headersBody);
            return await response.json();
        } catch (error) {
            console.error(`Error fetching data from ${url}:`, error);
            throw error;
        }
    }

This function does the following:

- Accepts systemPrompt, userPrompt, and temperature parameters.

  - systemPrompt: Lets the Azure OpenAI model know what role it should play and what rules to follow.

  - userPrompt: User information entered into the application such as natural language or rules that will be used by the model to generate the output.

  - temperature: Determines how creative the model should be when generating a response. A higher value means the model will take more risks.

- Ensures that a valid Azure OpenAI API key, endpoint, and model are available by calling checkRequiredEnvVars().

- Creates a fetchUrl value that is used to call Azure OpenAI's REST API and embeds the endpoint, model, and API version values from the environment variables into the URL.

- Creates a messageData object that includes max_tokens, temperature, and messages to send to Azure OpenAI.

  - max_tokens: The maximum number of tokens to generate in the completion. The token count of your prompt plus max_tokens can't exceed the model's context length. Older models have a context length of 2,048 tokens while newer ones support 4,096, 8,192, or even 32,768 tokens depending on the model being used.

  - temperature: What sampling temperature to use. A higher value means the model will take more risks. Try 0.9 for more creative applications, and 0 for ones with a well-defined answer.

  - messages: Represents the messages to generate chat completions for, in the chat format. In this example two messages are passed in: one for the system and one for the user. The system message defines the overall behavior and rules that will be used, while the user message defines the prompt text provided by the user.

- Calls fetchAndParse() to send the fetchUrl and headersBody values to Azure OpenAI.

- Processes the response by retrieving the completion.choices[0].message.content value. If the response contains the expected results, the code extracts the JSON object from the response and returns it.

Note

You can learn more about these parameters and others in the Azure OpenAI reference documentation.
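The constraint that the prompt's token count plus max_tokens can't exceed the model's context length can be checked before a request is sent. The sketch below builds the same kind of request body shown earlier and applies that check. It rests on loud assumptions: estimateTokens() uses a rough rule of thumb of about four characters per token for English text (a real tokenizer would be needed for accurate counts), per-message overhead is ignored, and buildChatRequestBody() and the type names are hypothetical.

```typescript
interface ChatMessage {
  role: 'system' | 'user';
  content: string;
}

interface ChatRequestBody {
  max_tokens: number;
  temperature: number;
  messages: ChatMessage[];
}

// Rough estimate only: assumes about 4 characters per token for English text.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Builds the request body and throws if the estimated budget exceeds the context length.
function buildChatRequestBody(
  systemPrompt: string,
  userPrompt: string,
  temperature: number,
  maxTokens: number,
  contextLength: number
): ChatRequestBody {
  const promptTokens = estimateTokens(systemPrompt) + estimateTokens(userPrompt);
  if (promptTokens + maxTokens > contextLength) {
    throw new Error(
      `Estimated ${promptTokens} prompt tokens + ${maxTokens} max_tokens exceeds context length ${contextLength}.`
    );
  }
  return {
    max_tokens: maxTokens,
    temperature,
    messages: [
      { role: 'system', content: systemPrompt },
      { role: 'user', content: userPrompt }
    ]
  };
}

const requestBody = buildChatRequestBody(
  'Assistant is a natural language to SQL bot.',
  'Get the total revenue for all companies in London.',
  0,
  1024,
  4096
);
console.log(JSON.stringify(requestBody, null, 2));
```

With a 4,096-token context length and max_tokens set to 1024, roughly 3,000 tokens remain for the system and user prompts combined.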

Comment out the following lines in the getSQLFromNLP() function:

    // if (isProhibitedQuery(queryData.sql)) {
    //     queryData.sql = '';
    //     queryData.error = 'Prohibited query.';
    // }

Save openAI.ts. The API server will automatically rebuild the TypeScript code and restart the server.

Go back to the browser and enter Select all table names from the database into the Custom Query input. Select Run Query. Are table names displayed?

Go back to the getSQLFromNLP() function in server/openAI.ts and add the following rule into the Rules: section of the system prompt and then save the file.

    - Do not allow the SELECT query to return table names, function names, or procedure names.

Go back to the browser and perform the following tasks:

- Enter Select all table names from the database into the Custom Query input. Select Run Query. Are table names displayed?

- Enter Select all function names from the database. into the Custom Query input and select Run Query again. Are function names displayed?

QUESTION: Why is this still working after adding a rule saying that table names, function names, and procedure names aren't allowed?

ANSWER: This is due to the "only JSON" rule. If the rules were more flexible and didn't require a JSON object to be returned, you may see a message about Azure OpenAI being unable to perform the task.

Note

OpenAI models can occasionally return unexpected results that don't match the rules you've defined. Plan for that in your code.

Take out the following rule from systemPrompt and save the file.

    - Only return a JSON object. Do NOT include any text outside of the JSON object. Do not provide any additional explanations or context. Just the JSON object is needed.

Run the Select all table names from the database query again.

Notice the message now displayed in the browser. Azure OpenAI is unable to perform the task because of the following rule. Since we removed the "only JSON" rule, the response can provide additional details about why the task can't be performed.

    - Do not allow the SELECT query to return table names, function names, or procedure names.

You can see that AI may generate unexpected results even if you have specific rules in place. This is why you need to plan your prompt text and rules carefully, but also plan to add a post-processing step into your code to handle cases where you receive unexpected results.

Go back to server/openAI.ts and locate the `isProhibitedQuery()` function. This is an example of post-processing code that can be run after Azure OpenAI returns results. Notice that it sets the `sql` property to an empty string if prohibited keywords are returned in the generated SQL query. This ensures that if unexpected results are returned from Azure OpenAI, the SQL query will not be run against the database.

    function isProhibitedQuery(query: string): boolean {
        if (!query) return false;

        const prohibitedKeywords = [
            'insert', 'update', 'delete', 'drop', 'truncate', 'alter', 'create', 'replace',
            'information_schema', 'pg_catalog', 'pg_tables', 'pg_namespace', 'pg_class',
            'table_schema', 'table_name', 'column_name', 'column_default', 'is_nullable',
            'data_type', 'udt_name', 'character_maximum_length', 'numeric_precision',
            'numeric_scale', 'datetime_precision', 'interval_type', 'collation_name',
            'grant', 'revoke', 'rollback', 'commit', 'savepoint', 'vacuum', 'analyze'
        ];

        const queryLower = query.toLowerCase();
        return prohibitedKeywords.some(keyword => queryLower.includes(keyword));
    }

Note

This is only demo code. Other prohibited keywords may be required to cover your specific use cases if you choose to convert natural language to SQL. Plan for this feature and use it with care to ensure that only valid SQL queries are returned and run against the database. Beyond prohibited keywords, you'll also need to factor in security.
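Because `isProhibitedQuery()` matches substrings, a harmless column such as `created_at` is rejected (it contains `create`), while nothing requires the statement to be a `SELECT` at all. The sketch below shows one possible tightening under stated assumptions: `isSingleSelect()`, `blockedWords`, and `blockedPattern` are hypothetical names, and the shortened keyword list is for illustration only. It is still a denylist and doesn't replace a read-only database role.

```typescript
// Hypothetical stricter check (not part of the sample project): accept only a
// single SELECT statement and match blocked keywords as whole words.
const blockedWords = ['insert', 'update', 'delete', 'drop', 'truncate', 'alter', 'create', 'grant', 'revoke'];
const blockedPattern = new RegExp(`\\b(${blockedWords.join('|')})\\b`, 'i');

function isSingleSelect(query: string): boolean {
  // Tolerate one trailing semicolon, then require the text to start with SELECT.
  const trimmed = query.trim().replace(/;\s*$/, '');
  if (!/^select\b/i.test(trimmed)) return false;
  // Any remaining semicolon suggests stacked statements.
  if (trimmed.includes(';')) return false;
  // Whole-word match, so identifiers like created_at are not flagged.
  return !blockedPattern.test(trimmed);
}

console.log(isSingleSelect('SELECT id, created_at FROM orders'));
console.log(isSingleSelect('SELECT * FROM customers; DROP TABLE customers'));
```

The whole-word match is deliberately conservative: a blocked word inside a string literal (for example `WHERE status = 'update'`) is still rejected, which is usually the safer failure mode for generated SQL.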

Go back to server/openAI.ts and uncomment the following code in the `getSQLFromNLP()` function. Save the file.

    if (isProhibitedQuery(queryData.sql)) {
        queryData.sql = '';
    }

Remove the following rule from `systemPrompt` and save the file.

    - Do not allow the SELECT query to return table names, function names, or procedure names.

Go back to the browser, enter Select all table names from the database into the Custom Query input again and select the Run Query button.

Do any table results display? Even without the rule in place, the `isProhibitedQuery()` post-processing code prohibits that type of query from being run against the database.

As discussed earlier, integrating natural language to SQL in line of business applications can be quite beneficial to users, but it does come with its own set of considerations.

Advantages:

User-friendliness: This feature can make database interaction more accessible to users without technical expertise, reducing the need for SQL knowledge and potentially speeding up operations.

Increased productivity: Business analysts, marketers, executives, and other non-technical users can retrieve valuable information from databases without having to rely on technical experts, thereby increasing efficiency.

Broad application: By using advanced language models, applications can be designed to cater to a wide range of users and use-cases.

Considerations:

Security: One of the biggest concerns is security. If users can interact with databases using natural language, there needs to be robust security measures in place to prevent unauthorized access or malicious queries. You may consider implementing a read-only mode to prevent users from modifying data.

Data Privacy: Certain data might be sensitive and should not be easily accessible, so you'll need to ensure proper safeguards and user permissions are in place.

Accuracy: While natural language processing has improved significantly, it's not perfect. Misinterpretation of user queries could lead to inaccurate results or unexpected behavior. You'll need to plan for how unexpected results will be handled.

Efficiency: There are no guarantees that the SQL returned from a natural language query will be efficient. In some cases, additional calls to Azure OpenAI may be required if post-processing rules detect issues with SQL queries.

Training and User Adaptation: Users need to be trained to formulate their queries correctly. While it's easier than learning SQL, there can still be a learning curve involved.

A few final points to consider before moving on to the next exercise:

- Remember that "just because you can doesn't mean you should" applies here. Use extreme caution and careful planning before integrating natural language to SQL into an application. It's important to understand the potential risks and to plan for them.

- Before using this type of technology, discuss potential scenarios with your team, database administrators, security team, stakeholders, and any other relevant parties to ensure that it's appropriate for your organization. It's important to discuss if natural language to SQL meets security, privacy, and any other requirements your organization may have in place.

- Security should be a primary concern and built into the planning, development, and deployment process.

- While natural language to SQL can be very powerful, careful planning must go into it to ensure prompts have required rules and that post-processing functionality is included. Plan for additional time to implement and test this type of functionality and to account for scenarios where unexpected results are returned.

- With Azure OpenAI, customers get the security capabilities of Microsoft Azure while running the same models as OpenAI. Azure OpenAI offers private networking, regional availability, and responsible AI content filtering. Learn more about Data, privacy, and security for Azure OpenAI Service.

You've now seen how to use Azure OpenAI to convert natural language to SQL and learned about the pros and cons of implementing this type of functionality. In the next exercise, you'll learn how email and SMS messages can be generated using Azure OpenAI.

AI: Generating Completions

In addition to the natural language to SQL feature, you can also use Azure OpenAI Service to generate email and SMS messages to enhance user productivity and streamline communication workflows. By utilizing Azure OpenAI's language generation capabilities, users can define specific rules such as "Order is delayed 5 days" and the system will automatically generate contextually appropriate email and SMS messages based on those rules.

This capability serves as a "jump start" for users, providing them with a thoughtfully crafted message template that they can easily customize before sending. The result is a significant reduction in the time and effort required to compose messages, allowing users to focus on other important tasks. Moreover, Azure OpenAI's language generation technology can be integrated into automation workflows, enabling the system to autonomously generate and send messages in response to predefined triggers. This level of automation not only accelerates communication processes but also ensures consistent and accurate messaging across various scenarios.

In this exercise, you will:

- Experiment with different GPT prompts.

- Use GPT prompts to generate completions for email and SMS messages.

- Explore code that enables GPT completions.

- Learn about the importance of prompt engineering and including rules in your prompts.

Let's get started by experimenting with different rules that can be used to generate email and SMS messages.

Using the GPT Completions Feature

In a previous exercise you started the database, APIs, and application. You also updated the

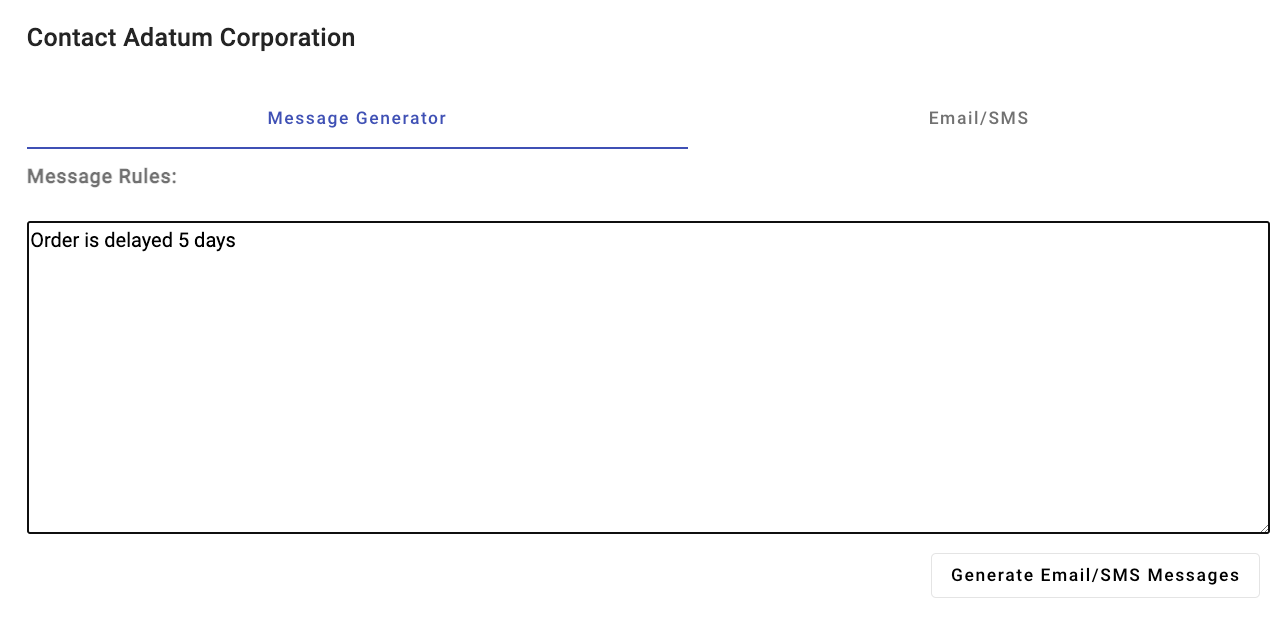

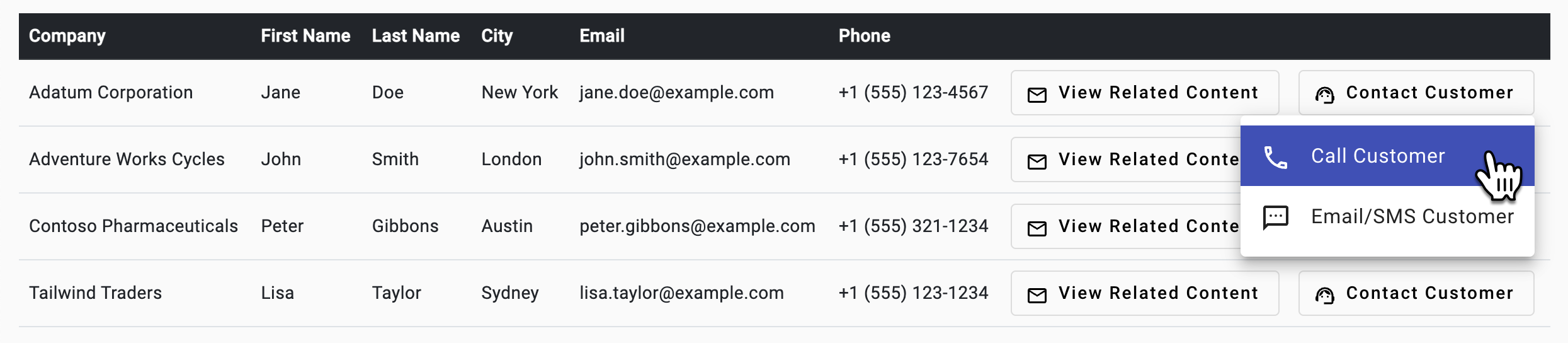

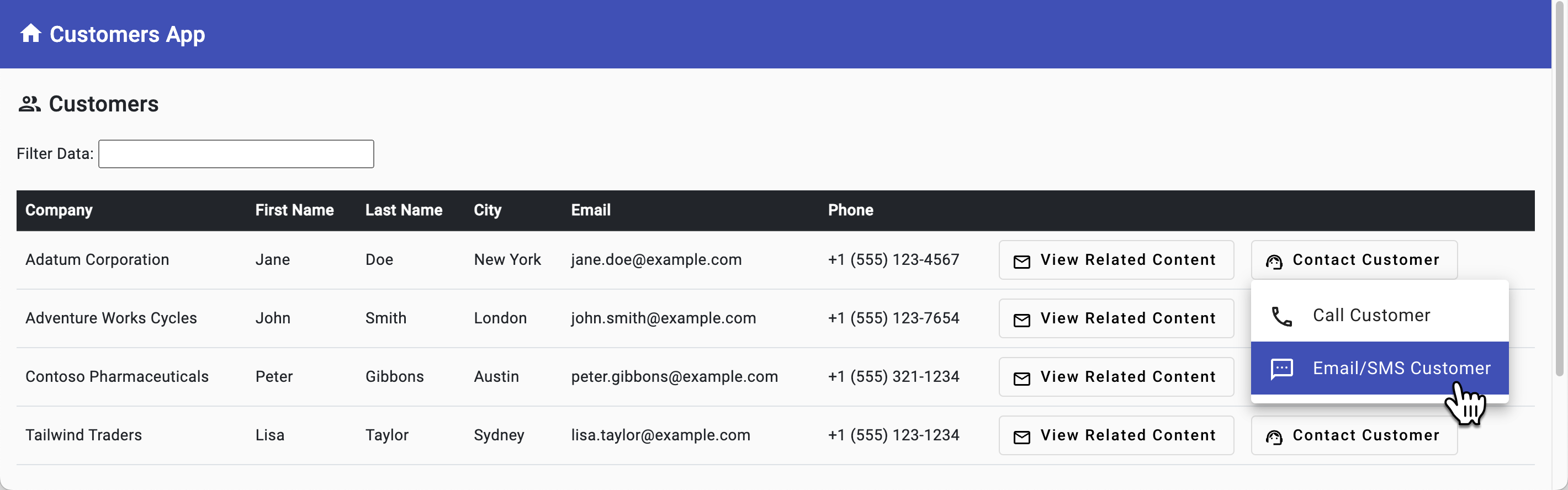

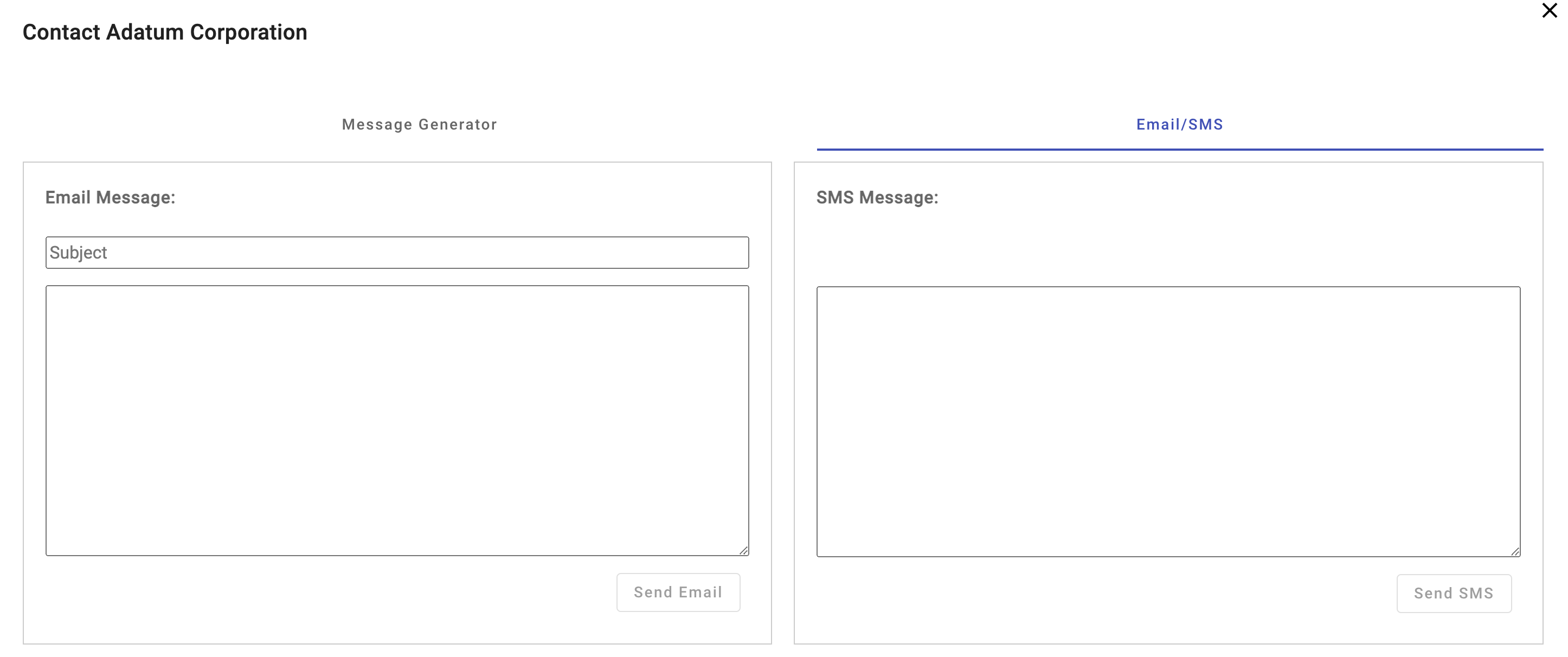

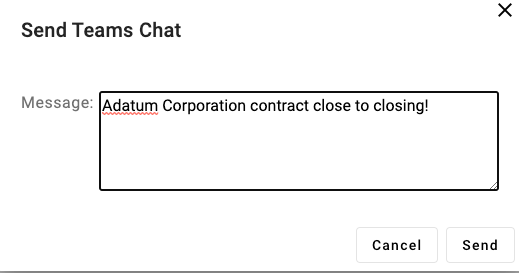

.envfile. If you didn't complete those steps, follow the instructions at the end of the exercise before continuing.Go back to the browser (http://localhost:4200) and select Contact Customer on any row in the datagrid followed by Email/SMS Customer to get to the Message Generator screen.

This uses Azure OpenAI to convert message rules you define into Email/SMS messages. Perform the following tasks:

Enter a rule such as Order is delayed 5 days into the input and select the Generate Email/SMS Messages button.

You will see a subject and body generated for the email and a short message generated for the SMS.

Note

Because Azure Communication Services isn't enabled yet, you won't be able to send the email or SMS messages.

Close the email/SMS dialog window in the browser. Now that you've seen this feature in action, let's examine how it's implemented.

Exploring the GPT Completions Code

Tip

If you're using Visual Studio Code, you can open files directly by selecting:

- Windows/Linux: Ctrl + P

- Mac: Cmd + P

Then type the name of the file you want to open.

Open the server/apiRoutes.ts file and locate the `completeEmailSmsMessages` route. This API is called by the front-end portion of the app when the Generate Email/SMS Messages button is selected. It retrieves the user prompt, company, and contact name values from the body and passes them to the `completeEmailSMSMessages()` function in the server/openAI.ts file. The results are then returned to the client.

    router.post('/completeEmailSmsMessages', async (req, res) => {
        const { prompt, company, contactName } = req.body;

        if (!prompt || !company || !contactName) {
            return res.status(400).json({
                status: false,
                error: 'The prompt, company, and contactName parameters must be provided.'
            });
        }

        let result;
        try {
            // Call OpenAI to get the email and SMS message completions
            result = await completeEmailSMSMessages(prompt, company, contactName);
        }
        catch (e: unknown) {
            console.error('Error parsing JSON:', e);
        }

        res.json(result);
    });

Open the server/openAI.ts file and locate the `completeEmailSMSMessages()` function.

    async function completeEmailSMSMessages(prompt: string, company: string, contactName: string) {
        console.log('Inputs:', prompt, company, contactName);

        const systemPrompt = `
          Assistant is a bot designed to help users create email and SMS messages from data and
          return a JSON object with the email and SMS message information in it.

          Rules:
          - Generate a subject line for the email message.
          - Use the User Rules to generate the messages.
          - All messages should have a friendly tone and never use inappropriate language.
          - SMS messages should be in plain text format and NO MORE than 160 characters.
          - Start the message with "Hi <Contact Name>,\n\n". Contact Name can be found in the user prompt.
          - Add carriage returns to the email message to make it easier to read.
          - End with a signature line that says "Sincerely,\nCustomer Service".
          - Return a valid JSON object with the emailSubject, emailBody, and SMS message values in it:

          { "emailSubject": "", "emailBody": "", "sms": "" }

          - The sms property value should be in plain text format and NO MORE than 160 characters.
          - Only return a valid JSON object. Do NOT include any text outside of the JSON object. Do not provide any additional explanations or context.
          Just the JSON object is needed.
        `;

        const userPrompt = `
          User Rules:
          ${prompt}

          Contact Name:
          ${contactName}
        `;

        let content: EmailSmsResponse = { status: true, email: '', sms: '', error: '' };
        let results = '';
        try {
            results = await callOpenAI(systemPrompt, userPrompt, 0.5);
            if (results) {
                const parsedResults = JSON.parse(results);
                content = { ...content, ...parsedResults, status: true };
            }
        }
        catch (e) {
            console.log(e);
            content.status = false;
            content.error = results;
        }

        return content;
    }

This function has the following features:

- `systemPrompt` is used to define that an AI assistant capable of generating email and SMS messages is required. The `systemPrompt` also includes:

  - Rules for the assistant to follow to control the tone of the messages, the start and ending format, the maximum length of SMS messages, and more.

  - Information about data that should be included in the response - a JSON object in this case and only a JSON object.

  - Two critical rules are repeated at the bottom of the system prompt to avoid "recency bias".

- `userPrompt` is used to define the rules and contact name that the end user would like to include as the email and SMS messages are generated. The Order is delayed 5 days rule you entered earlier is included in `userPrompt`.

- The function calls the `callOpenAI()` function you explored earlier to generate the email and SMS completions.
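To make the split between the two prompts concrete, here is a minimal sketch of how a system prompt and a user prompt might be combined into a single chat request body. `buildUserPrompt()`, `buildChatPayload()`, and the two interfaces are hypothetical names used for illustration. The `max_tokens`, `temperature`, and `messages` properties follow the request shape shown elsewhere in this tutorial, but the project's actual `callOpenAI()` implementation may differ.

```typescript
// Hypothetical types and helpers; the sample project's own code may differ.
interface ChatMessage {
  role: 'system' | 'user';
  content: string;
}

interface ChatPayload {
  max_tokens: number;
  temperature: number;
  messages: ChatMessage[];
}

// The end user's rules and the contact name go into the user prompt.
function buildUserPrompt(rules: string, contactName: string): string {
  return `User Rules:\n${rules}\n\nContact Name:\n${contactName}`;
}

// The assistant's rules go into the system message; the user's input stays separate.
function buildChatPayload(systemPrompt: string, userPrompt: string, temperature = 0): ChatPayload {
  return {
    max_tokens: 1024,
    temperature,
    messages: [
      { role: 'system', content: systemPrompt },
      { role: 'user', content: userPrompt }
    ]
  };
}

const payload = buildChatPayload(
  'Assistant is a bot designed to help users create email and SMS messages.',
  buildUserPrompt('Order is delayed 5 days', 'Jane Doe'),
  0.5
);
console.log(JSON.stringify(payload, null, 2));
```

Keeping the user's text out of the system message means the assistant's rules stay the same for every request, no matter what the user types.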

Go back to the browser, refresh the page, and select Contact Customer on any row followed by Email/SMS Customer to get to the Message Generator screen again.

Enter the following rules into the Message Generator input:

- Order is ahead of schedule.

- Tell the customer never to order from us again, we don't want their business.

Select Generate Email/SMS Messages and note the message. The `All messages should have a friendly tone and never use inappropriate language.` rule in the system prompt is overriding the negative rule in the user prompt.

Go back to server/openAI.ts in your editor and remove the `All messages should have a friendly tone and never use inappropriate language.` rule from the prompt in the `completeEmailSMSMessages()` function. Save the file.

Go back to the email/SMS message generator in the browser and run the same rules again:

- Order is ahead of schedule.

- Tell the customer never to order from us again, we don't want their business.

Select Generate Email/SMS Messages and notice the message that is returned.

What is happening in these scenarios? When using Azure OpenAI, content filtering is applied to ensure that appropriate language is always used. If you're using OpenAI, the rule defined in the system prompt is used to ensure the message returned is appropriate.

Note

This illustrates the importance of engineering your prompts with the right information and rules to ensure proper results are returned. Read more about this process in the Introduction to prompt engineering documentation.

Undo the changes you made to `systemPrompt` in `completeEmailSMSMessages()`, save the file, and run the message generator again using only the `Order is ahead of schedule.` rule (don't include the negative rule). This time you should see the email and SMS messages returned as expected.

A few final points to consider before moving on to the next exercise:

- It's important to have a human in the loop to review generated messages. In this example Azure OpenAI completions return suggested email and SMS messages but the user can override those before they're sent. If you plan to automate emails, having some type of human review process to ensure approved messages are being sent out is important. View AI as being a copilot, not an autopilot.

- Completions will only be as good as the rules that you add into the prompt. Take time to test your prompts and the completions that are returned. Invite other project stakeholders to review the completions as well.

- You may need to include post-processing code to ensure unexpected results are handled properly.

- Use system prompts to define the rules and information that the AI assistant should follow. Use user prompts to define the rules and information that the end user would like to include in the completions.
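As an example of the post-processing mentioned above, the following sketch validates a completion before it reaches the user. `normalizeMessages()`, `GeneratedMessages`, and `SMS_LIMIT` are hypothetical names, and the property names are taken from the JSON shape requested in the system prompt. It checks that all three values are strings and enforces the 160-character SMS limit in code instead of relying on the prompt rule alone.

```typescript
// Hypothetical post-processing step (not part of the sample project).
interface GeneratedMessages {
  emailSubject: string;
  emailBody: string;
  sms: string;
}

const SMS_LIMIT = 160;

function normalizeMessages(raw: string): GeneratedMessages | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // the model returned something other than JSON
  }
  if (typeof data !== 'object' || data === null) return null;

  const { emailSubject, emailBody, sms } = data as Record<string, unknown>;
  if (typeof emailSubject !== 'string' || typeof emailBody !== 'string' || typeof sms !== 'string') {
    return null; // a required property is missing or has the wrong type
  }

  // Shorten over-length SMS text so the final string is at most SMS_LIMIT characters.
  const safeSms = sms.length > SMS_LIMIT ? sms.slice(0, SMS_LIMIT - 3) + '...' : sms;
  return { emailSubject, emailBody, sms: safeSms };
}

const longSms = 'x'.repeat(200);
const normalized = normalizeMessages(JSON.stringify({ emailSubject: 'Order update', emailBody: 'Hi Jane,', sms: longSms }));
console.log(normalized?.sms.length);
```

Truncation is only one option. Depending on the scenario, asking the user to shorten the message or requesting a new completion may be a better experience.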

AI: Bring Your Own Data

The integration of Azure OpenAI Natural Language Processing (NLP) and completion capabilities offers significant potential for enhancing user productivity. By leveraging appropriate prompts and rules, an AI assistant can efficiently generate various forms of communication, such as email messages, SMS messages, and more. This functionality leads to increased user efficiency and streamlined workflows.

While this feature is quite powerful on its own, there may be cases where users need to generate completions based on your company's custom data. For example, you might have a collection of product manuals that may be challenging for users to navigate when they're assisting customers with installation issues. Alternatively, you might maintain a comprehensive set of Frequently Asked Questions (FAQs) related to healthcare benefits that can prove challenging for users to read through and get the answers they need. In these cases and many others, Azure OpenAI Service enables you to leverage your own data to generate completions, ensuring a more tailored and contextually accurate response to user questions.

Here's a quick overview of how the "bring your own data" feature works from the Azure OpenAI documentation.

Note

One of the key features of Azure OpenAI on your data is its ability to retrieve and utilize data in a way that enhances the model's output. Azure OpenAI on your data, together with Azure Cognitive Search, determines what data to retrieve from the designated data source based on the user input and provided conversation history. This data is then augmented and resubmitted as a prompt to the OpenAI model, with retrieved information being appended to the original prompt. Although retrieved data is being appended to the prompt, the resulting input is still processed by the model like any other prompt. Once the data has been retrieved and the prompt has been submitted to the model, the model uses this information to provide a completion.
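The retrieve-then-augment flow described in the note can be sketched in a few lines. This is a toy illustration only, not how the service is implemented: `scoreChunk()` is a naive keyword counter standing in for Azure Cognitive Search, all names are hypothetical, and the chunk contents are placeholder text (they aren't the contents of the tutorial's documents).

```typescript
// Toy illustration of "retrieve, then append to the prompt". All names are hypothetical.
interface DocChunk {
  title: string;
  content: string;
}

// Naive keyword scorer standing in for the search service.
function scoreChunk(chunk: DocChunk, query: string): number {
  const terms = query.toLowerCase().split(/\W+/).filter(term => term.length > 2);
  const text = chunk.content.toLowerCase();
  return terms.filter(term => text.indexOf(term) !== -1).length;
}

// Keep the best-matching chunks and drop chunks that match nothing.
function retrieve(chunks: DocChunk[], query: string, top = 2): DocChunk[] {
  return chunks
    .map(chunk => ({ chunk, score: scoreChunk(chunk, query) }))
    .filter(result => result.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, top)
    .map(result => result.chunk);
}

// Append the retrieved text to the original prompt before it is sent to the model.
function augmentPrompt(userPrompt: string, retrieved: DocChunk[]): string {
  const sources = retrieved.map((c, i) => `[doc${i + 1}] ${c.title}: ${c.content}`).join('\n');
  return `${userPrompt}\n\nRetrieved data:\n${sources}`;
}

// Placeholder content for illustration only.
const chunks: DocChunk[] = [
  { title: 'Company FAQs', content: 'Refund requests are reviewed by the support team.' },
  { title: 'Clock A102 Installation Instructions', content: 'Unplug the clock before mounting it on the wall.' }
];
const question = 'How should I handle refund requests?';
const augmented = augmentPrompt(question, retrieve(chunks, question));
console.log(augmented);
```

The model never searches the documents itself. It only sees whatever text the retrieval step appends, which is why the quality of the index and search configuration matters so much.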

In this exercise, you will:

- Create a custom data source using Azure AI Studio.

- Deploy an embedding model using Azure AI Studio.

- Upload custom documents.

- Start a chat session in the Chat playground to experiment with generating completions based upon your own data.

- Explore code that uses Azure Cognitive Search and Azure OpenAI to generate completions based upon your own data.

Let's get started by deploying an embedding model and adding a custom data source in Azure AI Studio.

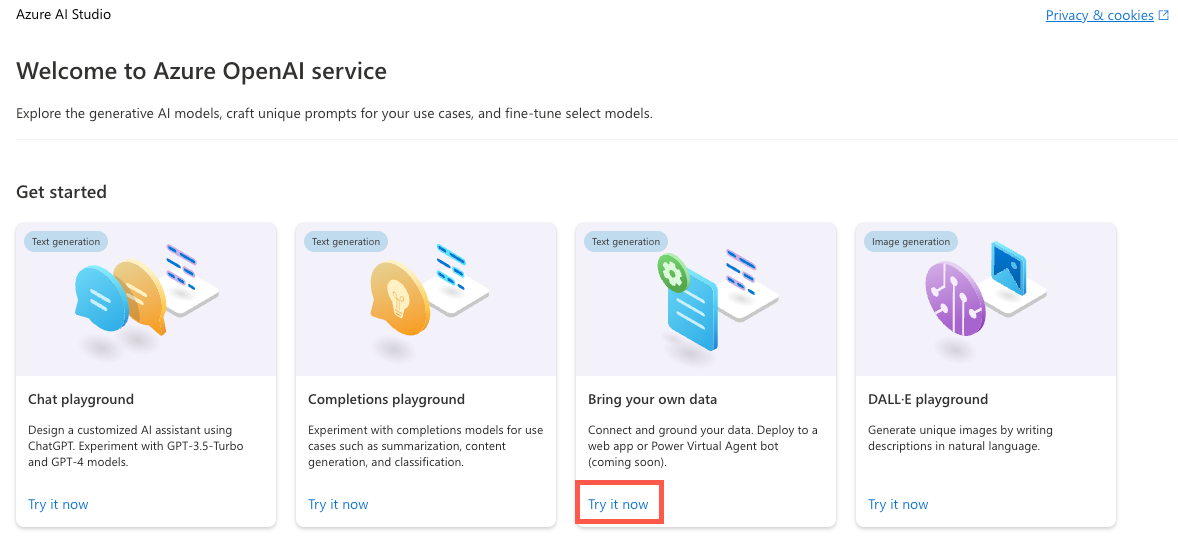

Adding a Custom Data Source to Azure AI Studio

Navigate to Azure OpenAI Studio and sign in with credentials that have access to your Azure OpenAI resource.

Select Deployments from the navigation menu.

Select Create new deployment and enter the following values:

- Model: text-embedding-ada-002.

- Model version: Default.

- Deployment name: text-embedding-ada-002.

After the model is created, select Azure OpenAI from the navigation menu to go to the welcome screen.

Locate the Bring your own data tile on the welcome screen and select Try it now.

Select Upload files from the Select data source dropdown.

Under the Select Azure Blob storage resource dropdown, select Create a new Azure Blob storage resource.

This will take you to the Azure portal where you can perform the following tasks:

- Enter a unique name for the storage account such as byodstorage[Your Last Name].

- Select a region that's close to your location.

- Select Review followed by Create.

Once the blob storage resource is created, go back to the Azure AI Studio dialog and select your newly created blob storage resource from the Select Azure Blob storage resource dropdown. If you don't see it listed, select the refresh icon next to the dropdown.

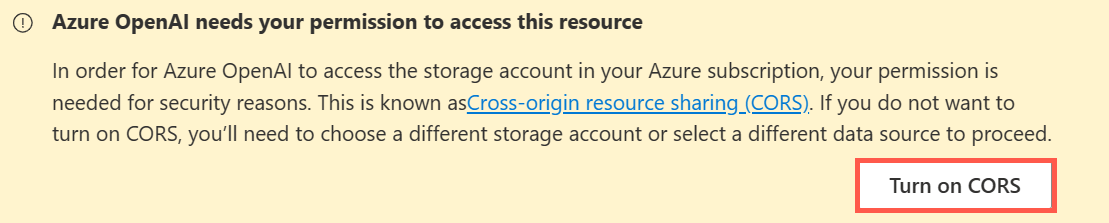

Cross-origin resource sharing (CORS) needs to be turned on in order for your storage account to be accessed. Select Turn on CORS in the Azure AI Studio dialog.

Under the Select Azure Cognitive Search resource dropdown, select Create a new Azure Cognitive Search resource.

This will take you back to the Azure portal where you can perform the following tasks:

- Enter a unique name for the Cognitive Search resource such as byodsearch[Your Last Name].

- Select a region that's close to your location.

- In the Pricing tier section, select Change Pricing Tier and select Basic followed by Select. The free tier isn't supported, so you'll clean up the Cognitive Search resource at the end of this tutorial.

- Select Review followed by Create.

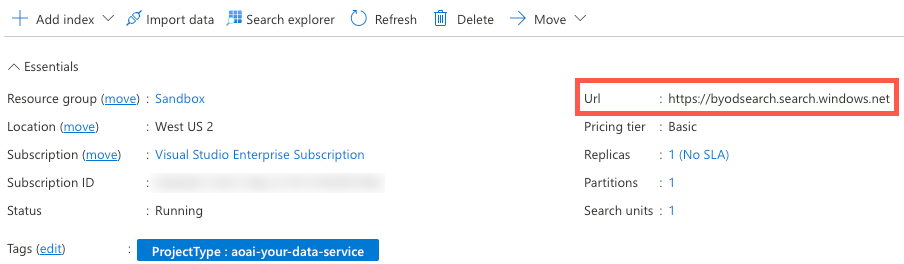

Once the Cognitive Search resource is created, go to the resource Overview page and copy the Url value to a local file.

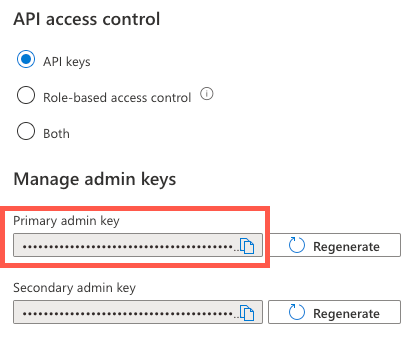

Select Keys in the left navigation menu and copy the Primary admin key value to a local file. You'll need these values later in the exercise.

Select Semantic ranker in the left navigation menu and ensure that Free is selected.

Note

To check if semantic ranker is available in a specific region, see the Products Available by Region page on the Azure website.

Go back to the Azure AI Studio Add Data dialog and select your newly created search resource from the Select Azure Cognitive Search resource dropdown. If you don't see it listed, select the refresh icon next to the dropdown.

Enter a value of byod-search-index for the Enter the index name value.

Select the Add vector search to this search resource checkbox.

In the Select an embedding model dropdown, select the text-embedding-ada-002 model you created earlier.

Select the checkbox followed by Next.

In the Upload files dialog, select Browse for a file.

Navigate to the project's customer documents folder (located at the root of the project) and select the following files:

- Clock A102 Installation Instructions.docx

- Company FAQs.docx

Note

This feature currently supports the following file formats for local index creation: .txt, .md, .html, .pdf, .docx, and .pptx.

Select Upload files. The files will be uploaded into a fileupload-byod-search-index container in the blob storage resource you created earlier.

Select Next to go to the Data management dialog.

In the Search type dropdown, select Hybrid + semantic.

Note

This option provides support for keyword and vector search. Once results are returned, a secondary ranking process is applied to the result set using deep learning models which improves the search relevance for the user. To learn more about semantic search, view the Semantic search in Azure Cognitive Search documentation.
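Hybrid search has to merge a keyword ranking and a vector ranking into a single list before any secondary ranking is applied. A common technique for that merge is Reciprocal Rank Fusion (RRF), which the Azure search documentation also describes for hybrid queries. The sketch below is a generic RRF implementation, not the service's code: the function name, document IDs, and the constant `k = 60` (a commonly used default) are assumptions for illustration, and the semantic reranking step isn't modeled.

```typescript
// Generic Reciprocal Rank Fusion sketch: each ranking contributes 1 / (k + rank)
// to a document's score, where rank starts at 1 for the top result.
function reciprocalRankFusion(rankings: string[][], k = 60): string[] {
  const scores = new Map<string, number>();
  for (const ranking of rankings) {
    ranking.forEach((docId, index) => {
      const rank = index + 1;
      scores.set(docId, (scores.get(docId) ?? 0) + 1 / (k + rank));
    });
  }
  // Highest fused score first.
  return Array.from(scores.entries())
    .sort((a, b) => b[1] - a[1])
    .map(entry => entry[0]);
}

// Hypothetical document IDs ranked by two different search methods.
const keywordRanking = ['faq', 'returns', 'clock-manual'];
const vectorRanking = ['clock-manual', 'faq', 'warranty'];
const fused = reciprocalRankFusion([keywordRanking, vectorRanking]);
console.log(fused);
```

Documents that rank well in both lists rise to the top, and a document found by only one method still appears in the fused results.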

Select the checkboxes to acknowledge the costs associated with using semantic search and vector embeddings.

Select Next, review the details, and select Save and close.

Now that your custom data has been uploaded, the data will be indexed and available to use in the Chat playground. This process may take a few minutes. Once it's completed, continue to the next section.

Using Your Custom Data Source in the Chat Playground

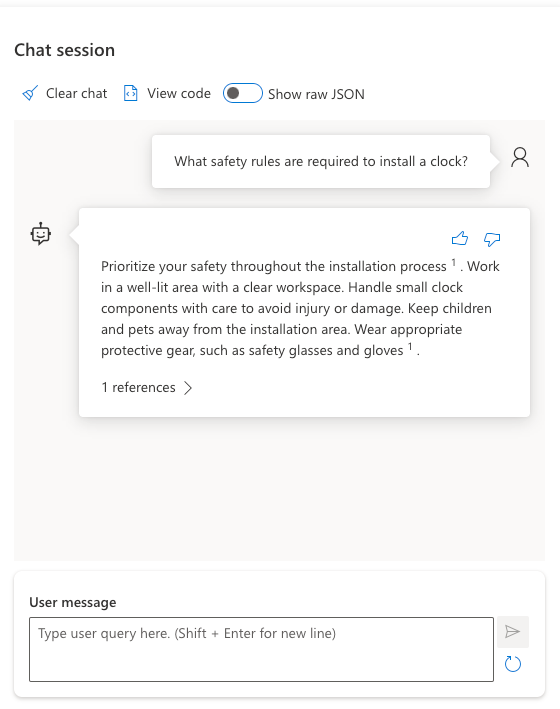

Locate the Chat session section of the page in Azure AI Studio and enter the following User message:

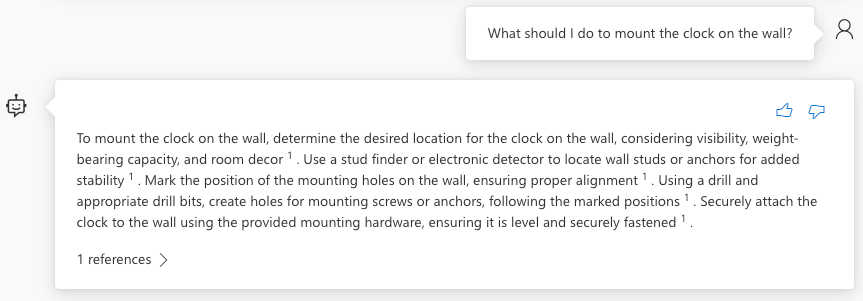

    What safety rules are required to install a clock?

You should see a result similar to the following displayed:

Expand the 1 references section in the chat response and notice that the Clock A102 Installation Instructions.docx file is listed and that you can select it to view the document.

Enter the following User message:

    What should I do to mount the clock on the wall?

You should see a result similar to the following displayed:

Now let's experiment with the Company FAQs document. Enter the following text into the User message field:

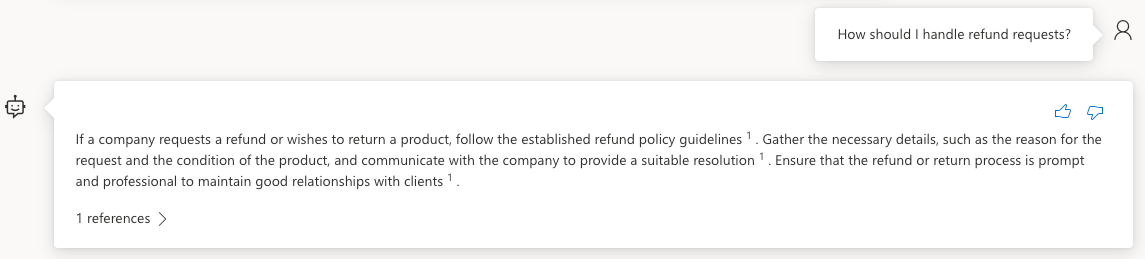

    What is the company's policy on vacation time?

You should see that no information was found for that request.

Enter the following text into the User message field:

    How should I handle refund requests?

You should see a result similar to the following displayed:

Expand the 1 references section in the chat response and notice that the Company FAQs.docx file is listed and that you can select it to view the document.

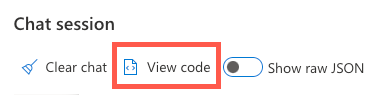

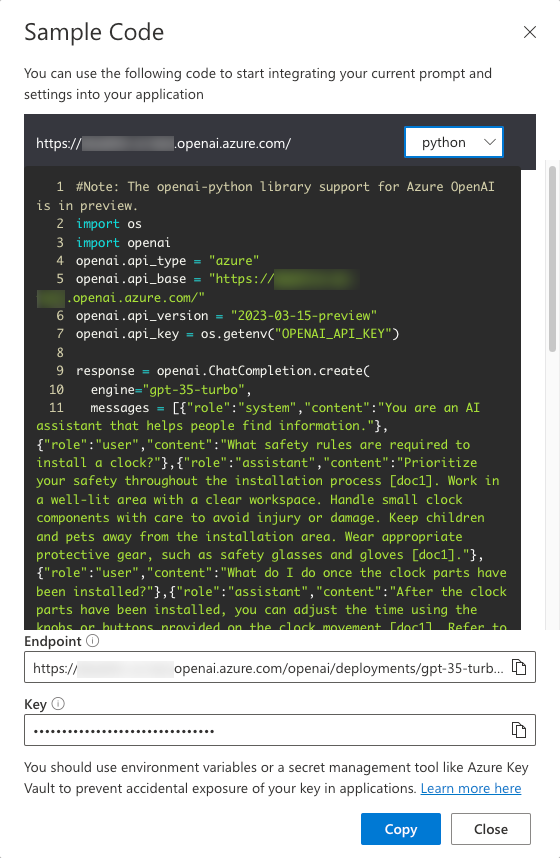

Select View code at the top of the Chat session section.

Note that you can switch between different languages, view the endpoint, and access the endpoint's key. Close the Sample Code dialog window.

Turn on the Show raw JSON toggle in the Chat session. Notice the chat session starts with a message similar to the following:

    { "role": "system", "content": "You are an AI assistant that helps people find information." }

Now that you've created a custom data source and experimented with it in the Chat playground, let's see how you can use it in the project's application.

Using the Bring Your Own Data Feature in the Application

Go back to the project in Visual Studio Code and open the .env file. Update the following values with your Cognitive Search endpoint, key, and index name. You copied the endpoint and key to a local file earlier in this exercise.

    AZURE_COGNITIVE_SEARCH_ENDPOINT=<COGNITIVE_SERVICES_ENDPOINT_VALUE>
    AZURE_COGNITIVE_SEARCH_KEY=<COGNITIVE_SERVICES_KEY_VALUE>
    AZURE_COGNITIVE_SEARCH_INDEX=byod-search-index

In a previous exercise you started the database, APIs, and application. You also updated the `.env` file. If you didn't complete those steps, follow the instructions at the end of the earlier exercise before continuing.

Once the application has loaded in the browser, select the Chat Help icon in the upper-right of the application.

The following text should appear in the chat dialog:

    How should I handle refund requests?

Select the Get Help button. You should see results returned from the Company FAQs.docx document that you uploaded earlier in Azure AI Studio. If you'd like to read through the document, you can find it in the customer documents folder at the root of the project.

Change the text to the following and select the Get Help button:

    What safety rules are required to install a clock?

You should see results returned from the Clock A102 Installation Instructions.docx document that you uploaded earlier in Azure AI Studio. This document is also available in the customer documents folder at the root of the project.
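If the Chat Help dialog returns an error instead of an answer, a missing or empty `.env` value is a likely cause. The following is a minimal sketch of a startup check for the three search settings. `readSearchConfig()` and `SearchConfig` are hypothetical names and aren't part of the sample project; in a Node.js server the function could be called with `process.env`.

```typescript
// Hypothetical startup check for the search settings added to the .env file.
interface SearchConfig {
  endpoint: string;
  key: string;
  indexName: string;
}

function readSearchConfig(env: Record<string, string | undefined>): SearchConfig {
  const missing: string[] = [];
  // Read a setting and remember its name if it is absent or blank.
  const read = (name: string): string => {
    const value = (env[name] ?? '').trim();
    if (!value) missing.push(name);
    return value;
  };

  const config: SearchConfig = {
    endpoint: read('AZURE_COGNITIVE_SEARCH_ENDPOINT'),
    key: read('AZURE_COGNITIVE_SEARCH_KEY'),
    indexName: read('AZURE_COGNITIVE_SEARCH_INDEX')
  };

  if (missing.length > 0) {
    throw new Error(`Missing .env values: ${missing.join(', ')}`);
  }
  return config;
}

// Example with placeholder values; in the server this could be readSearchConfig(process.env).
const searchConfig = readSearchConfig({
  AZURE_COGNITIVE_SEARCH_ENDPOINT: 'https://example.invalid',
  AZURE_COGNITIVE_SEARCH_KEY: 'placeholder-key',
  AZURE_COGNITIVE_SEARCH_INDEX: 'byod-search-index'
});
console.log(searchConfig.indexName);
```

Failing fast at startup with the names of the missing settings is usually easier to diagnose than an authentication error returned later by the search service.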

Exploring the Code

Tip

If you're using Visual Studio Code, you can open files directly by selecting:

- Windows/Linux: Ctrl + P

- Mac: Cmd + P

Then type the name of the file you want to open.

Go back to the project source code in Visual Studio Code.

Open the server/apiRoutes.ts file and locate the `completeBYOD` route. This API is called when the Get Help button is selected in the Chat Help dialog. It retrieves the user prompt from the request body and passes it to the `completeBYOD()` function in the server/openAI.ts file. The results are then returned to the client.

    router.post('/completeBYOD', async (req, res) => {
        const { prompt } = req.body;

        if (!prompt) {
            return res.status(400).json({
                status: false,
                error: 'The prompt parameter must be provided.'
            });
        }

        let result;
        try {
            // Call OpenAI to get custom "bring your own data" completion
            result = await completeBYOD(prompt);
        }
        catch (e: unknown) {
            console.error('Error parsing JSON:', e);
        }

        res.json(result);
    });

Open the server/openAI.ts file and locate the `completeBYOD()` function.

    async function completeBYOD(userPrompt: string): Promise<string> {
        const systemPrompt = 'You are an AI assistant that helps people find information.';
        // Pass that we're using Cognitive Search along with Azure OpenAI.
        return await callOpenAI(systemPrompt, userPrompt, 0, true);
    }

This function has the following features:

- The `userPrompt` parameter contains the information the user typed into the chat help dialog.

- The `systemPrompt` variable defines that an AI assistant designed to help people find information will be used.

- `callOpenAI()` is used to call the Azure OpenAI API and return the results. It passes the `systemPrompt` and `userPrompt` values as well as the following parameters:

  - `temperature` - The amount of creativity to include in the response. The user needs consistent (less creative) answers in this case so the value is set to 0.

  - `useBYOD` - A boolean value that indicates whether or not to use Cognitive Search along with Azure OpenAI. In this case, it's set to `true` so Cognitive Search functionality will be used.

The `callOpenAI()` function accepts a `useBYOD` parameter that is used to determine which OpenAI function to call. In this case, `useBYOD` is `true`, so the `getAzureOpenAIBYODCompletion()` function will be called.

    function callOpenAI(systemPrompt: string, userPrompt: string, temperature = 0, useBYOD = false) {
        const isAzureOpenAI = OPENAI_API_KEY && OPENAI_ENDPOINT && OPENAI_MODEL;

        if (isAzureOpenAI && useBYOD) {
            // Azure OpenAI + Cognitive Search: Bring Your Own Data
            return getAzureOpenAIBYODCompletion(systemPrompt, userPrompt, temperature);
        }

        if (isAzureOpenAI) {
            // Azure OpenAI
            return getAzureOpenAICompletion(systemPrompt, userPrompt, temperature);
        }

        // OpenAI
        return getOpenAICompletion(systemPrompt, userPrompt, temperature);
    }

Locate the `getAzureOpenAIBYODCompletion()` function in server/openAI.ts. It's quite similar to the `getAzureOpenAICompletion()` function you examined earlier, but is shown as a separate function to highlight a few key differences that are unique to the "bring your own data" scenario available in Azure OpenAI.

The `fetchUrl` value includes an `extensions` segment in the URL whereas the URL for the standard Azure OpenAI API does not.

    const fetchUrl = `${OPENAI_ENDPOINT}/openai/deployments/${OPENAI_MODEL}/extensions/chat/completions?api-version=${OPENAI_API_VERSION}`;

A `dataSources` property is added to the `messageData` object sent to Azure OpenAI. The `dataSources` property contains the Cognitive Search resource's `endpoint`, `key`, and `indexName` values that were added to the `.env` file earlier in this exercise.

    const messageData: ChatGPTData = {
        max_tokens: 1024,
        temperature,
        messages: [
            { role: 'system', content: systemPrompt },
            { role: 'user', content: userPrompt }
        ],
        // Adding BYOD data source so that Cognitive Search is used with Azure OpenAI
        dataSources: [
            {
                type: 'AzureCognitiveSearch',
                parameters: {
                    endpoint: AZURE_COGNITIVE_SEARCH_ENDPOINT,
                    key: AZURE_COGNITIVE_SEARCH_KEY,
                    indexName: AZURE_COGNITIVE_SEARCH_INDEX
                }
            }
        ]
    };

The `headersBody` object includes `chatgpt_url` and `chatgpt_key` properties that are used to call Azure OpenAI once the Cognitive Search results are obtained.

    const headersBody: OpenAIHeadersBody = {
        method: 'POST',
        headers: {
            'Content-Type': 'application/json',
            'api-key': OPENAI_API_KEY,
            chatgpt_url: fetchUrl.replace('extensions/', ''),
            chatgpt_key: OPENAI_API_KEY
        },
        body: JSON.stringify(messageData),
    };

The response returned by Azure OpenAI includes two messages with roles of `tool` and `assistant`. The sample application uses the second message with a `role` of `assistant` to provide the user the information they requested. In cases where you want to provide additional information about the documents used to create the response (as you saw earlier in the Azure AI Studio playground), you can use the first message which includes the `url` to the document(s).

    {
        "id": "12345678-1a2b-3c4e5f-a123-12345678abcd",
        "model": "",
        "created": 1684304924,
        "object": "chat.completion",
        "choices": [
            {
                "index": 0,
                "messages": [
                    {
                        "role": "tool",
                        "content": "{\"citations\": [{\"content\": \"\\nCognitive Services are cloud-based artificial intelligence (AI) services...\", \"id\": null, \"title\": \"What is Cognitive Services\", \"filepath\": null, \"url\": null, \"metadata\": {\"chunking\": \"orignal document size=250. Scores=0.4314117431640625 and 1.72564697265625.Org Highlight count=4.\"}, \"chunk_id\": \"0\"}], \"intent\": \"[\\\"Learn about Azure Cognitive Services.\\\"]\"}",
                        "end_turn": false
                    },
                    {
                        "role": "assistant",
                        "content": " \nAzure Cognitive Services are cloud-based artificial intelligence (AI) services that help developers build cognitive intelligence into applications without having direct AI or data science skills or knowledge. [doc1]. Azure Machine Learning is a cloud service for accelerating and managing the machine learning project lifecycle. [doc1].",
                        "end_turn": true
                    }
                ]
            }
        ]
    }

The following code is used in `getAzureOpenAIBYODCompletion()` to access the messages. Although citations aren't being used in this example, they're logged to the console so you can see the type of data that's returned.

    const completion = await fetchAndParse(fetchUrl, headersBody);
    console.log(completion);

    if (completion.error) {
        console.error('Azure OpenAI BYOD Error: \n', completion.error);
        return completion.error.message;
    }

    const citations = (completion.choices[0]?.messages[0]?.content?.trim() ?? '') as string;
    console.log('Azure OpenAI BYOD Citations: \n', citations);

    let content = (completion.choices[0]?.messages[1]?.content?.trim() ?? '') as string;
    console.log('Azure OpenAI BYOD Output: \n', content);

    return content;

A few final points to consider before moving on to the next exercise:

- The "bring your own data" feature of Azure OpenAI is currently in preview. It's not recommended to use it in production applications at this time.

- The sample application uses a single index in Azure Cognitive Search. You can use multiple indexes and data sources with Azure OpenAI. The `dataSources` property in the `getAzureOpenAIBYODCompletion()` function can be updated to include multiple data sources as needed.

- Security must be carefully evaluated with this type of scenario. Users shouldn't be able to ask questions and get results from documents that they aren't able to access.
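The sample only logs the citations, but the `content` of the `tool` message is itself a JSON string, so it needs a second parse before the citations can be displayed. The following is a minimal sketch, assuming the response shape shown earlier. The `Citation` interface and the `extractCitations` helper are hypothetical names for illustration and aren't part of the sample application.

```typescript
// Shape of one citation, inferred from the sample response shown earlier (an assumption).
interface Citation {
  content: string;
  title: string | null;
  url: string | null;
  filepath: string | null;
  chunk_id: string | null;
}

// Hypothetical helper: parses the JSON string held in the tool message's content.
function extractCitations(toolMessageContent: string): Citation[] {
  try {
    const parsed = JSON.parse(toolMessageContent) as { citations?: Citation[] };
    return Array.isArray(parsed.citations) ? parsed.citations : [];
  } catch {
    // Malformed or empty content: treat it as "no citations" instead of failing.
    return [];
  }
}

// Usage with a trimmed-down version of the tool message content.
const toolContent =
  '{"citations": [{"content": "Cognitive Services are cloud-based...", "id": null, ' +
  '"title": "What is Cognitive Services", "filepath": null, "url": null, "chunk_id": "0"}], ' +
  '"intent": "[\\"Learn about Azure Cognitive Services.\\"]"}';

const citations = extractCitations(toolContent);
console.log(citations.map((c) => c.title ?? '(untitled)'));
```

Returning an empty array on a parse failure keeps a missing or malformed citation payload from breaking the answer shown to the user.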

Now that you've learned about Azure OpenAI, prompts, completions, and how you can use your own data, let's move on to the next exercise to learn how communication features can be used to enhance the application. If you'd like to learn more about Azure OpenAI, view the Get started with Azure OpenAI Service training content. Additional information about using your own data with Azure OpenAI can be found in the Azure OpenAI on your data documentation.

Communication: Creating an Azure Communication Services Resource

Effective communication is essential for successful custom business applications. By using Azure Communication Services (ACS), you can add features such as phone calls, live chat, audio/video calls, and email and SMS messaging to your applications. Earlier, you learned how Azure OpenAI can generate completions for email and SMS messages. Now, you'll learn how to send the messages. Together, ACS and OpenAI can enhance your applications by simplifying communication, improving interactions, and boosting business productivity.

In this exercise, you will:

- Create an Azure Communication Services (ACS) resource.

- Add a toll-free phone number with calling and SMS capabilities.

- Connect an email domain.

- Update the project's .env file with values from your ACS resource.

Create an Azure Communication Services Resource

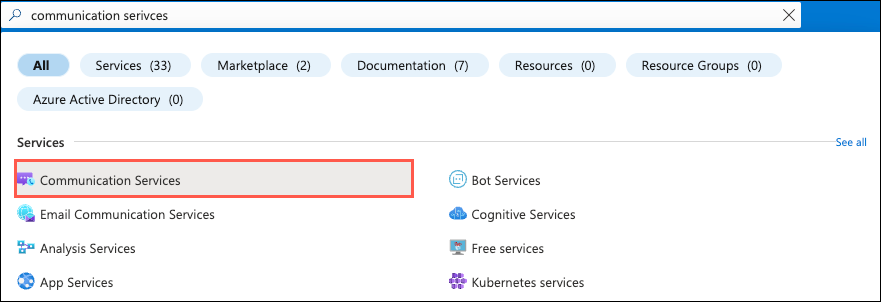

Visit the Azure portal in your browser and sign in if you haven't already.

Type communication services in the search bar at the top of the page and select Communication Services from the options that appear.

Select Create in the toolbar.

Perform the following tasks:

- Select your Azure subscription.

- Select the resource group to use (create a new one if one doesn't exist).

- Enter an ACS resource name. It must be a unique value.

- Select a data location.

Select Review + Create followed by Create.

You've successfully created a new Azure Communication Services resource! Next, you'll enable phone calling and SMS capabilities. You'll also connect an email domain to the resource.

Enable Phone Calling and SMS Capabilities

Add a phone number and ensure that the phone number has calling capabilities enabled. You'll use this phone number to call out to a phone from the app.

Select Phone numbers from the Resource menu.

Select + Get in the toolbar (or select the Get a number button) and enter the following information:

- Country or region: United States

- Use case: Select An application will be making calls or sending SMS messages

- Number type: Toll-free

Note

A credit card is required on your Azure subscription to create the toll-free number. If you don't have a card on file, feel free to skip adding a phone number and jump to the next section of the exercise that connects an email domain. You can still use the app, but won't be able to call out to a phone number.

- Calling: Make calls

- SMS: Send and receive SMS

Select Next: Numbers.

Select a Prefix (for example, 877) and leave the Quantity at 1. Select Search.

Once a toll-free number is displayed, select Next: Summary.

Review the details and select Place order to add the phone number to your ACS resource.

Once the phone number is created, select it to get to the Features panel. Ensure that the following values are set:

- In the Calling section, select Make calls.

- In the SMS section, select Send and receive SMS.

- Select Save.

Copy the phone number value into a file for later use.

Connect an Email Domain

Perform the following tasks to create a connected email domain for your ACS resource. The app uses this domain to send email.

- Select Domains from the Resource menu.

- Select Connect domain from the toolbar.

- Select your Subscription and Resource group.

- Under the Email Service dropdown, select Add an email service.

- Give the email service a name such as acs-demo-email-service.

- Select Review + create followed by Create.

- Once the deployment completes, select Go to resource, and select 1-click add to add a free Azure subdomain.

- After the subdomain is added (it'll take a few moments to be deployed), select it.

- Once you're on the AzureManagedDomain screen, select MailFrom addresses from the Resource menu.

- Copy the MailFrom value to a file. You'll use it later as you update the .env file.

- Go back to your Azure Communication Services resource and select Domains from the Resource menu.

- Select Add domain and enter the MailFrom value from the previous step (ensure you select the correct subscription, resource group, and email service). Select the Connect button.

Update the .env File

Now that your ACS phone number (with calling and SMS enabled) and email domain are ready, update the following keys/values in the .env file in your project:

```
ACS_CONNECTION_STRING=<ACS_CONNECTION_STRING>
ACS_PHONE_NUMBER=<ACS_PHONE_NUMBER>
ACS_EMAIL_ADDRESS=<ACS_EMAIL_ADDRESS>
CUSTOMER_EMAIL_ADDRESS=<EMAIL_ADDRESS_TO_SEND_EMAIL_TO>
CUSTOMER_PHONE_NUMBER=<UNITED_STATES_BASED_NUMBER_TO_SEND_SMS_TO>
```

- `ACS_CONNECTION_STRING`: The connection string value from the Keys section of your ACS resource.

- `ACS_PHONE_NUMBER`: Assign your toll-free number to the `ACS_PHONE_NUMBER` value.

- `ACS_EMAIL_ADDRESS`: Assign the MailFrom address value you copied earlier.

- `CUSTOMER_EMAIL_ADDRESS`: Assign an email address you'd like email to be sent to from the app (since the customer data in the app's database is only sample data). You can use a personal email address.

- `CUSTOMER_PHONE_NUMBER`: You'll need to provide a United States based phone number because sending SMS messages to other countries currently requires additional verification. If you don't have a US-based number, you can leave it empty.
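Before restarting the servers, it can help to confirm that no placeholder was left in place. The following is a minimal sketch of such a check, not code from the sample application. The `findUnsetAcsKeys` helper is a hypothetical name, and the example values (including the connection string) are made up. The two phone number keys are left out of the required list because the text above allows them to stay empty.

```typescript
// Keys that the email features need; the phone number keys are optional per the text above.
const REQUIRED_ACS_KEYS = [
  'ACS_CONNECTION_STRING',
  'ACS_EMAIL_ADDRESS',
  'CUSTOMER_EMAIL_ADDRESS',
] as const;

type EnvValues = Record<string, string | undefined>;

// Hypothetical helper: returns the keys that are missing, empty, or still a <PLACEHOLDER>.
function findUnsetAcsKeys(env: EnvValues): string[] {
  return REQUIRED_ACS_KEYS.filter((key) => {
    const value = env[key]?.trim() ?? '';
    return value === '' || /^<.*>$/.test(value);
  });
}

// Made-up example values; in a Node.js server you would pass process.env instead.
const exampleEnv: EnvValues = {
  ACS_CONNECTION_STRING: 'endpoint=https://example.communication.azure.com/;accesskey=abc123',
  ACS_EMAIL_ADDRESS: '<ACS_EMAIL_ADDRESS>', // placeholder was never replaced
  CUSTOMER_EMAIL_ADDRESS: 'someone@example.com',
};

// Logs the one key that still needs a value: ACS_EMAIL_ADDRESS
console.log(findUnsetAcsKeys(exampleEnv));
```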

Start/Restart the Application and API Servers

Perform one of the following steps based on the exercises you completed up to this point:

If you started the database, API server, and web server in an earlier exercise, you need to stop the API server and web server and restart them to pick up the .env file changes. You can leave the database running.

Locate the terminal windows running the API server and web server and press CTRL + C to stop them. Start them again by typing `npm start` in each terminal window and pressing Enter. Continue to the next exercise.

If you haven't started the database, API server, and web server yet, complete the following steps:

In the following steps you'll create three terminal windows in Visual Studio Code.

Right-click on the .env file in the Visual Studio Code file list and select Open in Integrated Terminal. Ensure that your terminal is at the root of the project - openai-acs-msgraph - before continuing.

Choose from one of the following options to start the PostgreSQL database:

If you have Docker Desktop installed and running, run `docker-compose up` in the terminal window and press Enter.

If you have Podman with podman-compose installed and running, run `podman-compose up` in the terminal window and press Enter.

To run the PostgreSQL container directly using Docker Desktop, Podman, nerdctl, or another container runtime you have installed, run the following command in the terminal window. Replace the bracketed list at the start of the command with the runtime you're using.

Mac, Linux, or Windows Subsystem for Linux (WSL):

```bash
[docker | podman | nerdctl] run --name postgresDb -e POSTGRES_USER=web -e POSTGRES_PASSWORD=web-password -e POSTGRES_DB=CustomersDB -v $(pwd)/data:/var/lib/postgresql/data -p 5432:5432 postgres
```

Windows with PowerShell:

```powershell
[docker | podman] run --name postgresDb -e POSTGRES_USER=web -e POSTGRES_PASSWORD=web-password -e POSTGRES_DB=CustomersDB -v ${PWD}/data:/var/lib/postgresql/data -p 5432:5432 postgres
```

Once the database container starts, press the + icon in the Visual Studio Code Terminal toolbar to create a second terminal window.

`cd` into the server/typescript folder and run the following commands to install the dependencies and start the API server.

```bash
npm install
npm start
```

Press the + icon again in the Visual Studio Code Terminal toolbar to create a third terminal window.

`cd` into the client folder and run the following commands to install the dependencies and start the web server.

```bash
npm install
npm start
```

A browser will launch and you'll be taken to http://localhost:4200.
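For reference, the `-e` and `-p` flags in the container commands earlier in this section determine how a client reaches the database. The following sketch maps them to a standard `postgres://` connection URL. It assumes the container is reached on localhost, `buildPostgresUrl` is a hypothetical helper, and the API server's own connection code may be structured differently.

```typescript
// Settings taken from the container command flags shown earlier in this section.
interface PostgresSettings {
  user: string;     // POSTGRES_USER
  password: string; // POSTGRES_PASSWORD
  database: string; // POSTGRES_DB
  host: string;     // assumption: the container is reached on localhost
  port: number;     // host side of -p 5432:5432
}

// Hypothetical helper: builds a standard postgres:// connection URL.
function buildPostgresUrl(settings: PostgresSettings): string {
  // Encode the credentials so characters such as "@" or ":" can't break the URL.
  const user = encodeURIComponent(settings.user);
  const password = encodeURIComponent(settings.password);
  return `postgres://${user}:${password}@${settings.host}:${settings.port}/${settings.database}`;
}

const localDb: PostgresSettings = {
  user: 'web',
  password: 'web-password',
  database: 'CustomersDB',
  host: 'localhost',
  port: 5432,
};

console.log(buildPostgresUrl(localDb));
// postgres://web:web-password@localhost:5432/CustomersDB
```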

Communication: Making a Phone Call

Integrating Azure Communication Services' phone calling capabilities into a custom Line of Business (LOB) application offers several key benefits to businesses and their users:

- Enables seamless and real-time communication between employees, customers, and partners, directly from within the LOB application, eliminating the need to switch between multiple platforms or devices.

- Enhances the user experience and improves overall operational efficiency.

- Facilitates rapid problem resolution, because users can quickly and easily connect with relevant support teams or subject matter experts.

In this exercise, you will:

- Explore the phone calling feature in the application.

- Walk through the code to learn how the phone calling feature is built.

Using the Phone Calling Feature

In the previous exercise you created an Azure Communication Services (ACS) resource and started the database, web server, and API server. You also updated the following values in the .env file.

```
ACS_CONNECTION_STRING=<ACS_CONNECTION_STRING>
ACS_PHONE_NUMBER=<ACS_PHONE_NUMBER>
ACS_EMAIL_ADDRESS=<ACS_EMAIL_ADDRESS>
CUSTOMER_EMAIL_ADDRESS=<EMAIL_ADDRESS_TO_SEND_EMAIL_TO>
CUSTOMER_PHONE_NUMBER=<UNITED_STATES_BASED_NUMBER_TO_SEND_SMS_TO>
```

Ensure you've completed the previous exercise before continuing.

Go back to the browser (http://localhost:4200), locate the datagrid, and select Contact Customer followed by Call Customer in the first row.

A phone call component will be added into the header. Enter your phone number (ensure it starts with + and includes the country code) and select Call. You will be prompted to allow access to your microphone.

Select Hang Up to end the call. Select Close to close the phone call component.
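The phone number format mentioned above (a leading + followed by the country code) is the E.164 format. The following is a minimal sketch of how input could be checked before a call is attempted. The `normalizePhoneNumber` helper is a hypothetical name and isn't taken from the sample application.

```typescript
// E.164: a leading "+", then a country code that doesn't start with 0,
// with at most 15 digits in total.
const E164_PATTERN = /^\+[1-9]\d{1,14}$/;

// Hypothetical helper: strips common separators, then checks the E.164 shape.
// Returns the compact number, or null when the input isn't valid.
function normalizePhoneNumber(input: string): string | null {
  const compact = input.replace(/[\s().-]/g, '');
  return E164_PATTERN.test(compact) ? compact : null;
}

console.log(normalizePhoneNumber('+1 (877) 555-0100')); // +18775550100
console.log(normalizePhoneNumber('877-555-0100'));      // null (no "+" and country code)
```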

Exploring the Phone Calling Code

Tip

If you're using Visual Studio Code, you can open files directly by selecting:

- Windows/Linux: Ctrl + P

- Mac: Cmd + P

Then type the name of the file you want to open.

Open the customers-list.component.ts file. The full path to the file is client/src/app/customers-list/customers-list.component.ts.

Note that `openCallDialog()` sends a `CustomerCall` message and the customer phone number using an event bus.

```typescript
openCallDialog(data: Phone) {
    this.eventBus.emit({ name: Events.CustomerCall, value: data });
}
```

Note

The event bus code can be found in the eventbus.service.ts file if you're interested in exploring it more. The full path to the file is client/src/app/core/eventbus.service.ts.
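To illustrate the publish/subscribe pattern involved, here is a simplified, dependency-free sketch. It is not the app's implementation, which lives in eventbus.service.ts. The `MiniEventBus` class, its method shapes, and the `{ phone: string }` payload are assumptions made for illustration.

```typescript
// Simplified stand-in for an event bus; names and shapes here are illustrative only.
type Handler<T> = (value: T) => void;

interface EmitEvent<T> {
  name: string;
  value: T;
}

class MiniEventBus {
  private handlers = new Map<string, Set<Handler<any>>>();

  // Registers a handler for an event name and returns a function that unsubscribes it.
  on<T>(eventName: string, handler: Handler<T>): () => void {
    const registered = this.handlers.get(eventName) ?? new Set<Handler<any>>();
    registered.add(handler);
    this.handlers.set(eventName, registered);
    return () => {
      registered.delete(handler);
    };
  }

  // Delivers the event's value to every handler registered under its name.
  emit<T>(event: EmitEvent<T>): void {
    this.handlers.get(event.name)?.forEach((handler) => handler(event.value));
  }
}

// Usage mirroring the call flow: one component emits, another listens.
const bus = new MiniEventBus();
const headerState = { callVisible: false, callData: '' };

const unsubscribe = bus.on<{ phone: string }>('CustomerCall', (data) => {
  headerState.callVisible = true;    // show the phone call component
  headerState.callData = data.phone; // remember the number to call
});

bus.emit({ name: 'CustomerCall', value: { phone: '+18775550100' } });
console.log(headerState.callVisible, headerState.callData); // true +18775550100
unsubscribe();
```

The emitter and the listener only share an event name, so the customers list doesn't need a reference to the header component.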

The header component's `ngOnInit()` function subscribes to the `CustomerCall` event sent by the event bus and displays the phone call component. You can find this code in header.component.ts.

```typescript
ngOnInit() {
    this.subscription.add(
        this.eventBus.on(Events.CustomerCall, (data: Phone) => {
            this.callVisible = true; // Show phone call component
            this.callData = data; // Set phone number to call
        })
    );
}
```

Open phone-call.component.ts. Take a moment to explore the code. The full path to the file is client/src/app/phone-call/phone-call.component.ts. Note the following key features:

- Retrieves an Azure Communication Services access token by calling the `acsService.getAcsToken()` function in `ngOnInit()`.

- Adds a "phone dialer" to the page. You can see the dialer by clicking on the phone number input in the header.

- Starts and ends a call using the `startCall()` and `endCall()` functions respectively.

Before looking at the code that makes the phone call, let's examine how the ACS access token is retrieved and how phone calling objects are created. Locate the `ngOnInit()` function in phone-call.component.ts.

```typescript
async ngOnInit() {
    if (ACS_CONNECTION_STRING) {
        this.subscription.add(
            this.acsService.getAcsToken().subscribe(async (user: AcsUser) => {
                const callClient = new CallClient();
                const tokenCredential = new AzureCommunicationTokenCredential(user.token);
                this.callAgent = await callClient.createCallAgent(tokenCredential);
            })
        );
    }
}
```

This function performs the following actions:

- Retrieves an ACS userId and access token by calling the `acsService.getAcsToken()` function.

- Once the access token is retrieved, the code performs the following actions:

  - Creates a new instance of `CallClient`, and a new instance of `AzureCommunicationTokenCredential` using the access token.

  - Creates a new instance of `CallAgent` using the `CallClient` and `AzureCommunicationTokenCredential` objects. Later you'll see that `CallAgent` is used to start and end a call.

Open acs.service.ts and locate the `getAcsToken()` function. The full path to the file is client/src/app/core/acs.service.ts. The function makes an HTTP GET request to the `/acstoken` route exposed by the API server.

```typescript
getAcsToken(): Observable<AcsUser> {
    return this.http.get<AcsUser>(this.apiUrl + 'acstoken')
    .pipe(
        catchError(this.handleError)
    );
}
```

An API server function named