Quickstart: Analyze image content

Get started with Content Safety Studio, the REST API, or the client SDKs to do basic image moderation. The Azure AI Content Safety service provides AI algorithms for flagging objectionable content. Follow these steps to try it out.

Note

The sample data and code may contain offensive content. User discretion is advised.

REST API

Prerequisites

- An Azure subscription - Create one for free

- Once you have your Azure subscription, create a Content Safety resource in the Azure portal to get your key and endpoint. Enter a unique name for your resource, select your subscription, and select a resource group, supported region (see Region availability), and supported pricing tier. Then select Create.

- The resource takes a few minutes to deploy. After it finishes, select Go to resource. In the left pane, under Resource Management, select Subscription Key and Endpoint. The endpoint and either of the keys are used to call APIs.

- cURL installed

Analyze image content

The following section walks through a sample image moderation request with cURL.

Prepare a sample image

Choose a sample image to analyze, and download it to your device.

We support JPEG, PNG, GIF, BMP, TIFF, and WEBP image formats. The maximum size for image submissions is 4 MB, and image dimensions must be between 50 x 50 pixels and 2,048 x 2,048 pixels. If the image is animated, we extract the first frame for detection.
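You can check a PNG against the size and dimension limits before you upload it. The following minimal sketch does this in Python with only the standard library. It reads the width and height from the PNG's IHDR header. `png_dimensions` and `check_png` are hypothetical helper names, and "4 MB" is assumed to mean 4 × 1024 × 1024 bytes.

```python
from __future__ import annotations

import struct

# Limits from the paragraph above. Assumption: "4 MB" means 4 * 1024 * 1024 bytes.
MAX_BYTES = 4 * 1024 * 1024
MIN_SIDE, MAX_SIDE = 50, 2048
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_dimensions(data: bytes) -> tuple[int, int]:
    """Read (width, height) from the IHDR chunk that follows the PNG signature."""
    if len(data) < 24 or not data.startswith(PNG_SIGNATURE) or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # Bytes 16-23 hold width and height as big-endian unsigned 32-bit integers.
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def check_png(data: bytes) -> list[str]:
    """Return the limits this PNG violates; an empty list means it passed these local checks."""
    problems = []
    if len(data) > MAX_BYTES:
        problems.append(f"file is {len(data)} bytes; the limit is {MAX_BYTES}")
    width, height = png_dimensions(data)
    for name, side in (("width", width), ("height", height)):
        if not MIN_SIDE <= side <= MAX_SIDE:
            problems.append(f"{name} {side} px is outside {MIN_SIDE}-{MAX_SIDE} px")
    return problems
```

For example, call `check_png(Path("image.png").read_bytes())`. This sketch parses only PNG headers. For the other supported formats, an imaging library such as Pillow (`Image.open(path).size`) is a simpler way to get the dimensions.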

You can input your image by one of two methods: local filestream or blob storage URL.

Local filestream (recommended): Encode your image to base64. You can use a website like codebeautify to do the encoding. Then save the encoded string to a temporary location.
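You can also do the base64 encoding locally instead of on a website, so the image never leaves your machine. The following sketch uses Python's standard `base64` module. `encode_bytes` and `encode_image` are illustrative names, not part of any SDK.

```python
import base64
from pathlib import Path


def encode_bytes(data: bytes) -> str:
    """Return base64 text suitable for the request's "content" field."""
    return base64.b64encode(data).decode("ascii")


def encode_image(path: str) -> str:
    """Read a local image file and base64-encode its raw bytes."""
    return encode_bytes(Path(path).read_bytes())
```

To save the string to a temporary file, you could run `Path("image.b64").write_text(encode_image("image.png"))`.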

Blob storage URL: Upload your image to an Azure Blob Storage account. Follow the blob storage quickstart to learn how to do this. Then open Azure Storage Explorer and get the URL to your image. Save it to a temporary location.

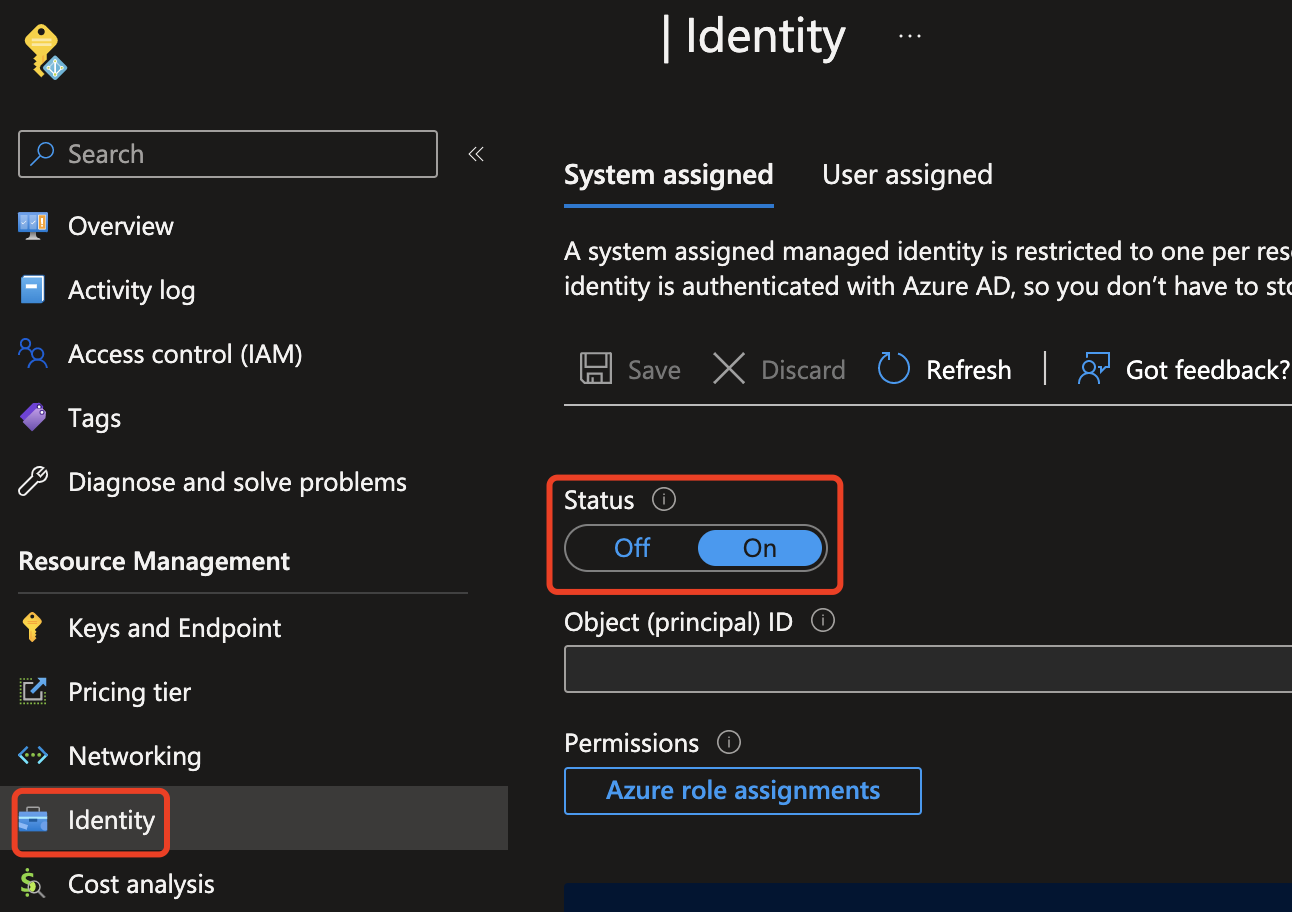

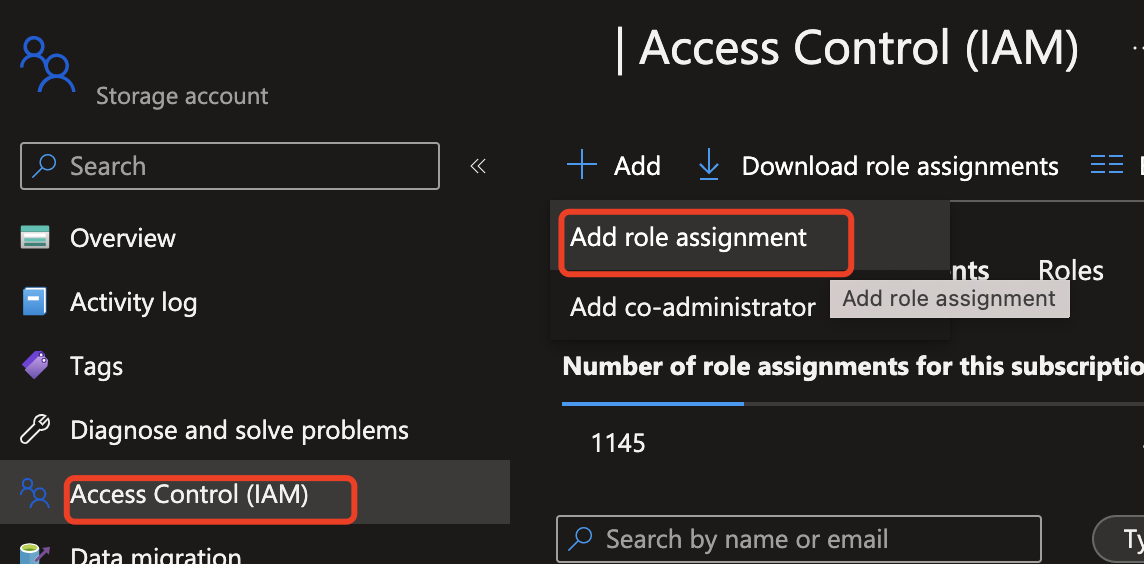

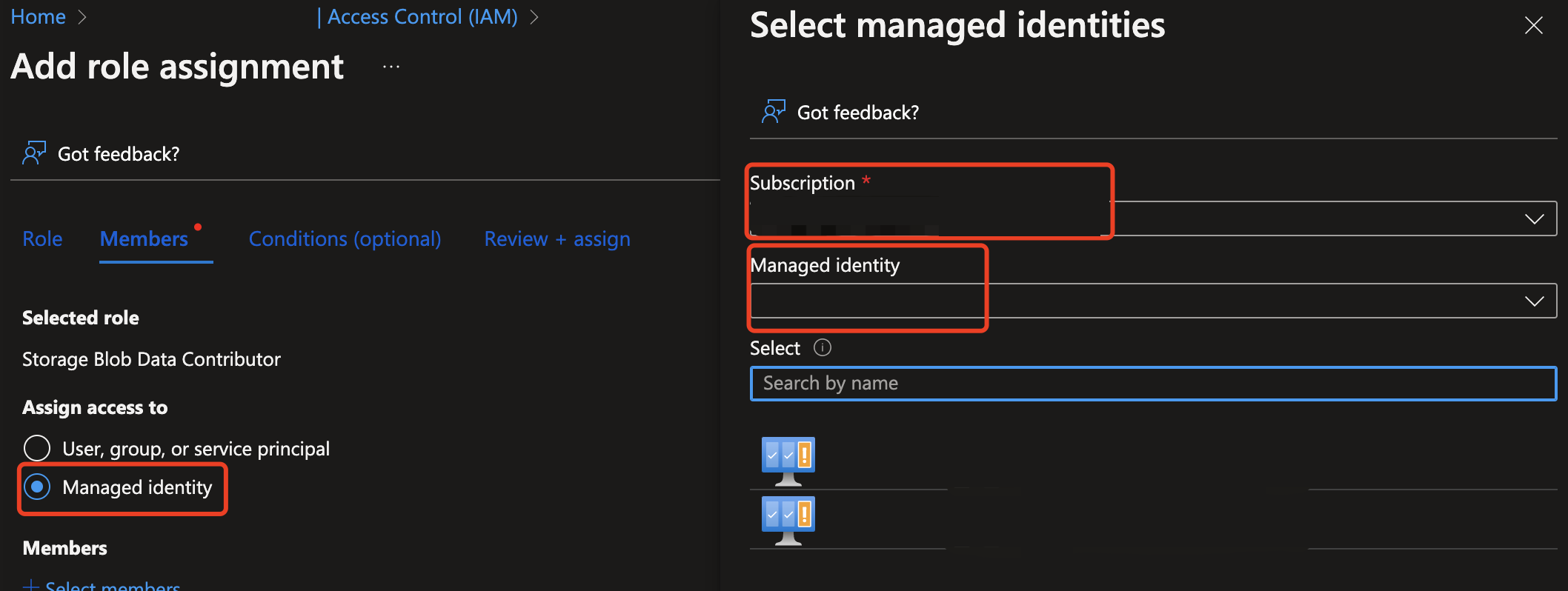
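Before you paste the copied URL into the request, you can sanity-check it. The following sketch splits a blob URL into its storage account, container, and blob name. It assumes a storage account in the global Azure cloud, whose blob endpoint ends in `.blob.core.windows.net`. `split_blob_url` is a hypothetical helper name.

```python
from __future__ import annotations

from urllib.parse import urlparse

# Assumption: a storage account in the global Azure cloud.
BLOB_HOST_SUFFIX = ".blob.core.windows.net"


def split_blob_url(url: str) -> tuple[str, str, str]:
    """Split a blob URL into (storage account, container, blob name), or raise ValueError."""
    parsed = urlparse(url)
    if parsed.scheme != "https" or not parsed.netloc.endswith(BLOB_HOST_SUFFIX):
        raise ValueError(f"not an https Azure Blob Storage URL: {url}")
    account = parsed.netloc[: -len(BLOB_HOST_SUFFIX)]
    # The first path segment is the container; everything after it is the blob name.
    container, _, blob = parsed.path.lstrip("/").partition("/")
    if not account or not container or not blob:
        raise ValueError(f"URL needs an account, a container, and a blob name: {url}")
    return account, container, blob
```

Any query string (for example, a SAS token) is ignored, because `urlparse` keeps it out of `path`.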

Next, you need to give your Content Safety resource access to read from the Azure Storage resource. Enable system-assigned Managed identity for the Azure AI Content Safety instance and assign the role of Storage Blob Data Contributor/Owner/Reader to the identity:

Enable managed identity for the Azure AI Content Safety instance.

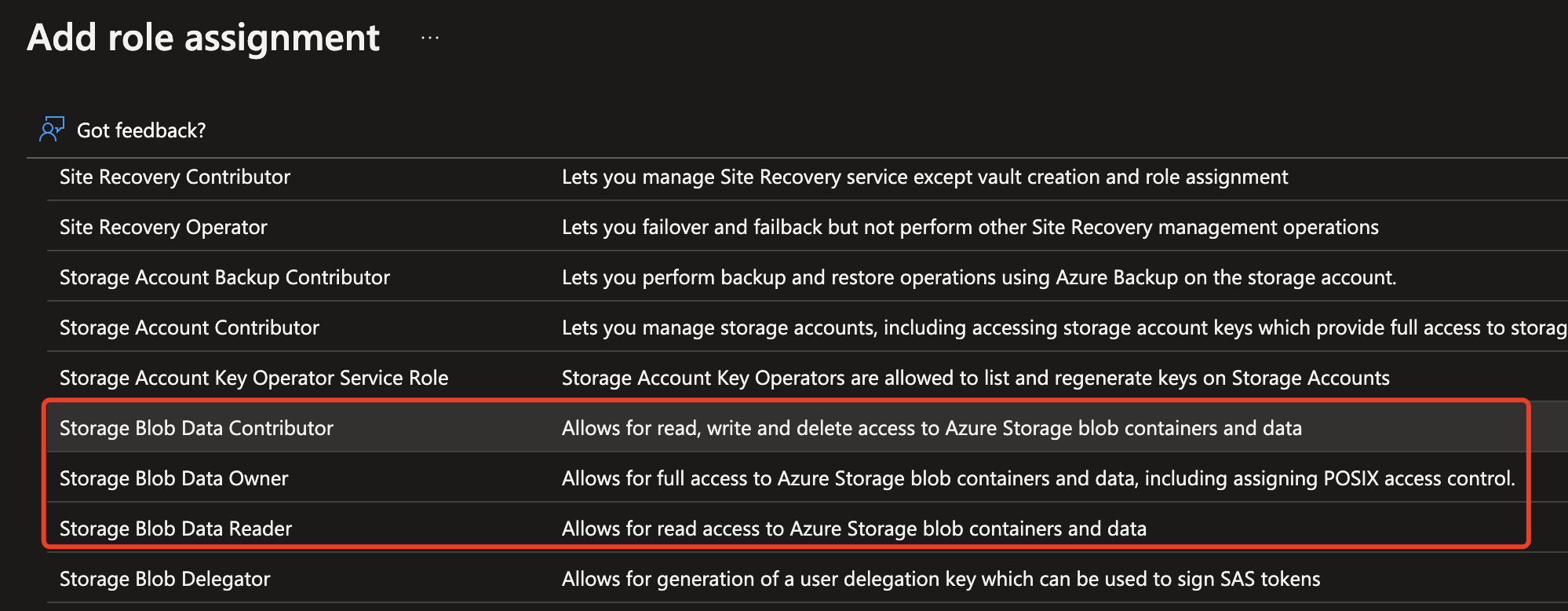

Assign the Storage Blob Data Contributor, Storage Blob Data Owner, or Storage Blob Data Reader role to the managed identity. Any of these roles should work.

Analyze image content

Paste the command below into a text editor, and make the following changes.

- Substitute `<endpoint>` with your resource endpoint URL.
- Replace `<your_subscription_key>` with your key.
- Populate the `"image"` field in the body with either a `"content"` field or a `"blobUrl"` field. For example: `{"image": {"content": "<base_64_string>"}}` or `{"image": {"blobUrl": "<your_storage_url>"}}`.

```shell
curl --location --request POST '<endpoint>/contentsafety/image:analyze?api-version=2023-10-01' \
--header 'Ocp-Apim-Subscription-Key: <your_subscription_key>' \
--header 'Content-Type: application/json' \
--data-raw '{
  "image": {
    "content": "<base_64_string>"
  },
  "categories": [
    "Hate", "SelfHarm", "Sexual", "Violence"
  ],
  "outputType": "FourSeverityLevels"
}'
```
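You can also send the request from a script. The following Python sketch builds the same POST request as the cURL command above, using only the standard library: same URL, `api-version`, headers, and JSON body. `build_analyze_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

API_VERSION = "2023-10-01"


def build_analyze_request(endpoint: str, key: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) the image:analyze POST request."""
    url = f"{endpoint.rstrip('/')}/contentsafety/image:analyze?api-version={API_VERSION}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON reply; raises urllib.error.HTTPError on 4xx/5xx."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

For example, call `send(build_analyze_request("<endpoint>", "<your_subscription_key>", {"image": {"content": "<base_64_string>"}}))` with your own values substituted.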

Note

If you're using a blob storage URL, the request body will look like this:

```json
{
  "image": {
    "blobUrl": "<your_storage_url>"
  }
}
```

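Open a command prompt window and run the cURL command. The command uses Bash-style syntax (single quotes and `\` line continuations), so run it in a shell that supports that syntax, such as Bash.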

The following field must be included in the URL:

| Name | Required? | Description | Type |
|---|---|---|---|
| API Version | Required | The API version to use. The current version is `api-version=2023-10-01`. Example: `<endpoint>/contentsafety/image:analyze?api-version=2023-10-01` | String |

The parameters in the request body are defined in this table:

| Name | Required? | Description | Type |
|---|---|---|---|
| content | Required | The content or blob URL of the image. It can be either base64-encoded bytes or a blob URL. If both are given, the request is refused. The maximum allowed size of the image is 2,048 x 2,048 pixels, and the maximum file size is 4 MB. The minimum size of the image is 50 x 50 pixels. | String |
| categories | Optional | An array of category names. See the Harm categories guide for a list of available category names. If no categories are specified, all four categories are used. You can specify multiple categories to get scores for all of them in a single request. | String array |
| outputType | Optional | The image moderation API supports only `"FourSeverityLevels"`, which outputs severities at four levels: 0, 2, 4, or 6. | String |
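The rules in the table above can be enforced before you send a request. The following Python sketch applies them: exactly one image source, categories limited to the four names used in this quickstart, and `outputType` fixed to `FourSeverityLevels`. `build_body` is a hypothetical helper, not an SDK function.

```python
from __future__ import annotations

# The four harm categories named in this quickstart.
KNOWN_CATEGORIES = ("Hate", "SelfHarm", "Sexual", "Violence")


def build_body(content: str | None = None, blob_url: str | None = None,
               categories: list[str] | None = None) -> dict:
    """Build an image:analyze request body that follows the request-body table."""
    # Exactly one image source: the service refuses requests that give both.
    if (content is None) == (blob_url is None):
        raise ValueError("provide exactly one of content or blob_url")
    image = {"content": content} if content is not None else {"blobUrl": blob_url}
    # FourSeverityLevels is the only output type the image API supports.
    body = {"image": image, "outputType": "FourSeverityLevels"}
    if categories is not None:
        unknown = [c for c in categories if c not in KNOWN_CATEGORIES]
        if unknown:
            raise ValueError(f"unknown categories: {unknown}")
        body["categories"] = list(categories)
    # When categories is omitted, the service analyzes all four.
    return body
```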

Output

You should see the image moderation results displayed as JSON data in the console. For example:

```json
{
  "categoriesAnalysis": [
    {
      "category": "Hate",
      "severity": 2
    },
    {
      "category": "SelfHarm",
      "severity": 0
    },
    {
      "category": "Sexual",
      "severity": 0
    },
    {
      "category": "Violence",
      "severity": 0
    }
  ]
}
```

The JSON fields in the output are defined here:

| Name | Description | Type |
|---|---|---|
| categoriesAnalysis | Each output class that the API predicts. Classification can be multi-labeled. For example, when an image is uploaded to the image moderation model, it could be classified as both sexual content and violence. See Harm categories. | Array |
| severity | The severity level of the flag in each harm category. See Harm categories. | Integer |
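To work with the response in code, turn `categoriesAnalysis` into a lookup from category to severity. The following Python sketch does this for the example response shown above. `severities` is an illustrative helper name.

```python
from __future__ import annotations

import json

# The example response shown above.
SAMPLE_RESPONSE = """
{
  "categoriesAnalysis": [
    {"category": "Hate", "severity": 2},
    {"category": "SelfHarm", "severity": 0},
    {"category": "Sexual", "severity": 0},
    {"category": "Violence", "severity": 0}
  ]
}
"""


def severities(response_text: str) -> dict[str, int]:
    """Map each analyzed category name to its severity."""
    data = json.loads(response_text)
    return {item["category"]: item["severity"] for item in data["categoriesAnalysis"]}


for category, severity in severities(SAMPLE_RESPONSE).items():
    print(f"{category} severity: {severity}")
```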

C#

Reference documentation | Library source code | Package (NuGet) | Samples

Prerequisites

- An Azure subscription - Create one for free

- The Visual Studio IDE with the .NET desktop development workload enabled. If you don't plan to use the Visual Studio IDE, you need the current version of .NET Core.

- .NET Runtime installed.

- Once you have your Azure subscription, create a Content Safety resource in the Azure portal to get your key and endpoint. Enter a unique name for your resource, select your subscription, and select a resource group, supported region (see Region availability), and supported pricing tier. Then select Create.

- The resource takes a few minutes to deploy. After it finishes, select Go to resource. In the left pane, under Resource Management, select Subscription Key and Endpoint. The endpoint and either of the keys are used to call APIs.

Set up application

Create a new C# application.

Open Visual Studio, and under Get started select Create a new project. Set the template filters to C#/All Platforms/Console. Select Console App (command-line application that can run on .NET on Windows, Linux and macOS) and choose Next. Update the project name to ContentSafetyQuickstart and choose Next. Select .NET 6.0 or above, and choose Create to create the project.

Install the client SDK

Once you've created a new project, install the client SDK: right-click the project solution in Solution Explorer and select Manage NuGet Packages. In the package manager that opens, select Browse and search for Azure.AI.ContentSafety. Select Install.

Create environment variables

In this example, you'll write your credentials to environment variables on the local machine running the application.

Tip

Don't include the key directly in your code, and never post it publicly. See the Azure AI services security article for more authentication options like Azure Key Vault.

To set the environment variable for your key and endpoint, open a console window and follow the instructions for your operating system and development environment.

- To set the `CONTENT_SAFETY_KEY` environment variable, replace `YOUR_CONTENT_SAFETY_KEY` with one of the keys for your resource.
- To set the `CONTENT_SAFETY_ENDPOINT` environment variable, replace `YOUR_CONTENT_SAFETY_ENDPOINT` with the endpoint for your resource.

```cmd
setx CONTENT_SAFETY_KEY "YOUR_CONTENT_SAFETY_KEY"
setx CONTENT_SAFETY_ENDPOINT "YOUR_CONTENT_SAFETY_ENDPOINT"
```

After you add the environment variables, you might need to restart any running programs that will read the environment variables, including the console window.

Analyze image content

From the project directory, open the Program.cs file that was created previously. Paste in the following code.

```csharp
using System;
using System.IO;
using System.Linq;
using Azure;
using Azure.AI.ContentSafety;

namespace Azure.AI.ContentSafety.Dotnet.Sample
{
    class ContentSafetySampleAnalyzeImage
    {
        public static void AnalyzeImage()
        {
            // retrieve the endpoint and key from the environment variables created earlier
            string endpoint = Environment.GetEnvironmentVariable("CONTENT_SAFETY_ENDPOINT");
            string key = Environment.GetEnvironmentVariable("CONTENT_SAFETY_KEY");

            ContentSafetyClient client = new ContentSafetyClient(new Uri(endpoint), new AzureKeyCredential(key));

            // Example: analyze image
            // Path.Combine builds a path that works on Windows, Linux, and macOS
            string imagePath = Path.Combine("sample_data", "image.png");
            ContentSafetyImageData image = new ContentSafetyImageData(BinaryData.FromBytes(File.ReadAllBytes(imagePath)));

            var request = new AnalyzeImageOptions(image);

            Response<AnalyzeImageResult> response;
            try
            {
                response = client.AnalyzeImage(request);
            }
            catch (RequestFailedException ex)
            {
                Console.WriteLine("Analyze image failed.\nStatus code: {0}, Error code: {1}, Error message: {2}", ex.Status, ex.ErrorCode, ex.Message);
                throw;
            }

            // Print each category's severity, or 0 if the category is missing from the result
            Console.WriteLine("Hate severity: {0}", response.Value.CategoriesAnalysis.FirstOrDefault(a => a.Category == ImageCategory.Hate)?.Severity ?? 0);
            Console.WriteLine("SelfHarm severity: {0}", response.Value.CategoriesAnalysis.FirstOrDefault(a => a.Category == ImageCategory.SelfHarm)?.Severity ?? 0);
            Console.WriteLine("Sexual severity: {0}", response.Value.CategoriesAnalysis.FirstOrDefault(a => a.Category == ImageCategory.Sexual)?.Severity ?? 0);
            Console.WriteLine("Violence severity: {0}", response.Value.CategoriesAnalysis.FirstOrDefault(a => a.Category == ImageCategory.Violence)?.Severity ?? 0);
        }

        static void Main()
        {
            AnalyzeImage();
        }
    }
}
```

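Create a sample_data folder in your project directory, and add an image.png file to it. The code opens the file by a relative path, and Visual Studio runs the app from the build output folder. In the file's properties, set Copy to Output Directory to Copy if newer so that the app can find the file.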

Build and run the application by selecting Start Debugging from the Debug menu at the top of the IDE window (or press F5).

Python

Reference documentation | Library source code | Package (PyPI) | Samples

Prerequisites

- An Azure subscription - Create one for free

- Once you have your Azure subscription, create a Content Safety resource in the Azure portal to get your key and endpoint. Enter a unique name for your resource, select your subscription, and select a resource group, supported region (see Region availability), and supported pricing tier. Then select Create.

- The resource takes a few minutes to deploy. After it finishes, select Go to resource. In the left pane, under Resource Management, select Subscription Key and Endpoint. The endpoint and either of the keys are used to call APIs.

- Python 3.8 or later

- Your Python installation should include pip. You can check whether pip is installed by running `pip --version` on the command line. Get pip by installing the latest version of Python.

Create environment variables

In this example, you'll write your credentials to environment variables on the local machine running the application.

Tip

Don't include the key directly in your code, and never post it publicly. See the Azure AI services security article for more authentication options like Azure Key Vault.

To set the environment variable for your key and endpoint, open a console window and follow the instructions for your operating system and development environment.

- To set the `CONTENT_SAFETY_KEY` environment variable, replace `YOUR_CONTENT_SAFETY_KEY` with one of the keys for your resource.
- To set the `CONTENT_SAFETY_ENDPOINT` environment variable, replace `YOUR_CONTENT_SAFETY_ENDPOINT` with the endpoint for your resource.

```cmd
setx CONTENT_SAFETY_KEY "YOUR_CONTENT_SAFETY_KEY"
setx CONTENT_SAFETY_ENDPOINT "YOUR_CONTENT_SAFETY_ENDPOINT"
```

After you add the environment variables, you might need to restart any running programs that will read the environment variables, including the console window.
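The quickstart code reads these variables with `os.environ.get`, which silently returns `None` when a variable isn't set. That `None` surfaces later as a less obvious client error. The following sketch checks both variables up front and names any that are missing. `load_credentials` is a hypothetical helper, not part of the SDK.

```python
from __future__ import annotations

import os

REQUIRED_VARIABLES = ("CONTENT_SAFETY_ENDPOINT", "CONTENT_SAFETY_KEY")


def load_credentials() -> tuple[str, str]:
    """Return (endpoint, key), failing fast if either variable is unset or empty."""
    missing = [name for name in REQUIRED_VARIABLES if not os.environ.get(name)]
    if missing:
        raise RuntimeError(
            "Set these environment variables, then restart your console: " + ", ".join(missing)
        )
    return os.environ["CONTENT_SAFETY_ENDPOINT"], os.environ["CONTENT_SAFETY_KEY"]
```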

Analyze image content

The following section walks through a sample request with the Python SDK.

Open a command prompt, navigate to your project folder, and create a new file named quickstart.py.

Run this command to install the Azure AI Content Safety client library:

```shell
python -m pip install azure-ai-contentsafety
```

Copy the following code into quickstart.py:

```python
import os

from azure.ai.contentsafety import ContentSafetyClient
from azure.ai.contentsafety.models import AnalyzeImageOptions, ImageData, ImageCategory
from azure.core.credentials import AzureKeyCredential
from azure.core.exceptions import HttpResponseError


def analyze_image():
    endpoint = os.environ.get('CONTENT_SAFETY_ENDPOINT')
    key = os.environ.get('CONTENT_SAFETY_KEY')
    image_path = os.path.join("sample_data", "image.jpg")

    # Create an Azure AI Content Safety client
    client = ContentSafetyClient(endpoint, AzureKeyCredential(key))

    # Build request
    with open(image_path, "rb") as file:
        request = AnalyzeImageOptions(image=ImageData(content=file.read()))

    # Analyze image
    try:
        response = client.analyze_image(request)
    except HttpResponseError as e:
        print("Analyze image failed.")
        if e.error:
            print(f"Error code: {e.error.code}")
            print(f"Error message: {e.error.message}")
            raise
        print(e)
        raise

    # next(..., None) returns None instead of raising StopIteration if a category is missing
    hate_result = next((item for item in response.categories_analysis if item.category == ImageCategory.HATE), None)
    self_harm_result = next((item for item in response.categories_analysis if item.category == ImageCategory.SELF_HARM), None)
    sexual_result = next((item for item in response.categories_analysis if item.category == ImageCategory.SEXUAL), None)
    violence_result = next((item for item in response.categories_analysis if item.category == ImageCategory.VIOLENCE), None)

    if hate_result:
        print(f"Hate severity: {hate_result.severity}")
    if self_harm_result:
        print(f"SelfHarm severity: {self_harm_result.severity}")
    if sexual_result:
        print(f"Sexual severity: {sexual_result.severity}")
    if violence_result:
        print(f"Violence severity: {violence_result.severity}")


if __name__ == "__main__":
    analyze_image()
```

Replace `"sample_data"` and `"image.jpg"` with the path and filename of the local image you want to use. Then run the application with the `python` command on your quickstart file:

```shell
python quickstart.py
```

Java

Reference documentation | Library source code | Artifact (Maven) | Samples

Prerequisites

- An Azure subscription - Create one for free

- The current version of the Java Development Kit (JDK)

- The Gradle build tool, or another dependency manager.

- Once you have your Azure subscription, create a Content Safety resource in the Azure portal to get your key and endpoint. Enter a unique name for your resource, select your subscription, and select a resource group, supported region (see Region availability), and supported pricing tier. Then select Create.

- The resource takes a few minutes to deploy. After it finishes, select Go to resource. In the left pane, under Resource Management, select Subscription Key and Endpoint. The endpoint and either of the keys are used to call APIs.

Set up application

Create a new Gradle project.

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app, and navigate to it.

mkdir myapp && cd myapp

Run the gradle init command from your working directory. This command will create essential build files for Gradle, including build.gradle.kts, which is used at runtime to create and configure your application.

gradle init --type basic

When prompted to choose a DSL, select Kotlin.

From your working directory, run the following command to create a project source folder:

mkdir -p src/main/java

Navigate to the new folder and create a file called ContentSafetyQuickstart.java.

Also create a src/resources folder at the root of your project, and add a sample image to it.

Install the client SDK

This quickstart uses the Gradle dependency manager. You can find the client library and information for other dependency managers on the Maven Central Repository.

Locate build.gradle.kts and open it with your preferred IDE or text editor. Then copy in the following build configuration. This configuration defines the project as a Java application whose entry point is the class ContentSafetyQuickstart. It imports the Azure AI Content Safety client library.

```kotlin
plugins {
    java
    application
}

application {
    mainClass.set("ContentSafetyQuickstart")
}

repositories {
    mavenCentral()
}

dependencies {
    implementation(group = "com.azure", name = "azure-ai-contentsafety", version = "1.0.0")
}
```

Create environment variables

In this example, you'll write your credentials to environment variables on the local machine running the application.

Tip

Don't include the key directly in your code, and never post it publicly. See the Azure AI services security article for more authentication options like Azure Key Vault.

To set the environment variable for your key and endpoint, open a console window and follow the instructions for your operating system and development environment.

- To set the `CONTENT_SAFETY_KEY` environment variable, replace `YOUR_CONTENT_SAFETY_KEY` with one of the keys for your resource.
- To set the `CONTENT_SAFETY_ENDPOINT` environment variable, replace `YOUR_CONTENT_SAFETY_ENDPOINT` with the endpoint for your resource.

```cmd
setx CONTENT_SAFETY_KEY "YOUR_CONTENT_SAFETY_KEY"
setx CONTENT_SAFETY_ENDPOINT "YOUR_CONTENT_SAFETY_ENDPOINT"
```

After you add the environment variables, you might need to restart any running programs that will read the environment variables, including the console window.

Analyze image content

Open ContentSafetyQuickstart.java in your preferred editor or IDE and paste in the following code. Replace the source variable with the path to your sample image.

```java
import com.azure.ai.contentsafety.ContentSafetyClient;
import com.azure.ai.contentsafety.ContentSafetyClientBuilder;
import com.azure.ai.contentsafety.models.AnalyzeImageOptions;
import com.azure.ai.contentsafety.models.AnalyzeImageResult;
import com.azure.ai.contentsafety.models.ContentSafetyImageData;
import com.azure.ai.contentsafety.models.ImageCategoriesAnalysis;
import com.azure.core.credential.KeyCredential;
import com.azure.core.util.BinaryData;

import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

public class ContentSafetyQuickstart {
    public static void main(String[] args) throws IOException {

        // get endpoint and key from environment variables
        String endpoint = System.getenv("CONTENT_SAFETY_ENDPOINT");
        String key = System.getenv("CONTENT_SAFETY_KEY");

        ContentSafetyClient contentSafetyClient = new ContentSafetyClientBuilder()
            .credential(new KeyCredential(key))
            .endpoint(endpoint).buildClient();

        ContentSafetyImageData image = new ContentSafetyImageData();
        // Resolve the image relative to the working directory (the project root when you use gradle run)
        String cwd = System.getProperty("user.dir");
        String source = "src/resources/image.png";

        image.setContent(BinaryData.fromBytes(Files.readAllBytes(Paths.get(cwd, source))));

        AnalyzeImageResult response =
            contentSafetyClient.analyzeImage(new AnalyzeImageOptions(image));

        for (ImageCategoriesAnalysis result : response.getCategoriesAnalysis()) {
            System.out.println(result.getCategory() + " severity: " + result.getSeverity());
        }
    }
}
```

Navigate back to the project root folder, and build the app with:

gradle build

Then, run it with the gradle run command:

gradle run

Output

```
Hate severity: 0
SelfHarm severity: 0
Sexual severity: 0
Violence severity: 0
```

JavaScript

Reference documentation | Library source code | Package (npm) | Samples

Prerequisites

- An Azure subscription - Create one for free

- The current version of Node.js

- Once you have your Azure subscription, create a Content Safety resource in the Azure portal to get your key and endpoint. Enter a unique name for your resource, select your subscription, and select a resource group, supported region (see Region availability), and supported pricing tier. Then select Create.

- The resource takes a few minutes to deploy. After it finishes, select Go to resource. In the left pane, under Resource Management, select Subscription Key and Endpoint. The endpoint and either of the keys are used to call APIs.

Set up application

Create a new Node.js application. In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app, and navigate to it.

mkdir myapp && cd myapp

Run the npm init command to create a node application with a package.json file.

npm init

Also create a /resources folder at the root of your project, and add a sample image to it.

Install the client SDK

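Install the @azure-rest/ai-content-safety npm package, along with @azure/core-auth, which provides the AzureKeyCredential class that the sample code imports:

npm install @azure-rest/ai-content-safety @azure/core-auth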

Also install the dotenv module to use environment variables:

npm install dotenv

Your app's package.json file will be updated with the dependencies.

Create environment variables

In this example, you'll write your credentials to environment variables on the local machine running the application.

Tip

Don't include the key directly in your code, and never post it publicly. See the Azure AI services security article for more authentication options like Azure Key Vault.

To set the environment variable for your key and endpoint, open a console window and follow the instructions for your operating system and development environment.

- To set the `CONTENT_SAFETY_KEY` environment variable, replace `YOUR_CONTENT_SAFETY_KEY` with one of the keys for your resource.
- To set the `CONTENT_SAFETY_ENDPOINT` environment variable, replace `YOUR_CONTENT_SAFETY_ENDPOINT` with the endpoint for your resource.

```cmd
setx CONTENT_SAFETY_KEY "YOUR_CONTENT_SAFETY_KEY"
setx CONTENT_SAFETY_ENDPOINT "YOUR_CONTENT_SAFETY_ENDPOINT"
```

After you add the environment variables, you might need to restart any running programs that will read the environment variables, including the console window.

Analyze image content

Create a new file in your directory, index.js. Open it in your preferred editor or IDE and paste in the following code. Replace the image_path variable with the path to your sample image.

```javascript
const ContentSafetyClient = require("@azure-rest/ai-content-safety").default,
  { isUnexpected } = require("@azure-rest/ai-content-safety");
const { AzureKeyCredential } = require("@azure/core-auth");
const fs = require("fs");
const path = require("path");

// Load the .env file if it exists
require("dotenv").config();

async function main() {
  // get endpoint and key from environment variables
  const endpoint = process.env["CONTENT_SAFETY_ENDPOINT"];
  const key = process.env["CONTENT_SAFETY_KEY"];

  const credential = new AzureKeyCredential(key);
  const client = ContentSafetyClient(endpoint, credential);

  // replace with your own sample image file path
  const image_path = path.resolve(__dirname, "./resources/image.jpg");

  const imageBuffer = fs.readFileSync(image_path);
  const base64Image = imageBuffer.toString("base64");
  const analyzeImageOption = { image: { content: base64Image } };
  const analyzeImageParameters = { body: analyzeImageOption };

  const result = await client.path("/image:analyze").post(analyzeImageParameters);

  if (isUnexpected(result)) {
    throw result;
  }

  for (let i = 0; i < result.body.categoriesAnalysis.length; i++) {
    const imageCategoriesAnalysisOutput = result.body.categoriesAnalysis[i];
    // A template literal avoids the extra spaces console.log adds between separate arguments
    console.log(`${imageCategoriesAnalysisOutput.category} severity: ${imageCategoriesAnalysisOutput.severity}`);
  }
}

main().catch((err) => {
  console.error("The sample encountered an error:", err);
});
```

Run the application with the node command on your quickstart file.

node index.js

Output

```
Hate severity: 0
SelfHarm severity: 0
Sexual severity: 0
Violence severity: 0
```

Clean up resources

If you want to clean up and remove an Azure AI services subscription, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Next steps

Configure filters for each category and test them on datasets using Content Safety Studio, then export the code and deploy.
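Content Safety Studio is where you configure per-category filters. To see the idea behind those filters, the following sketch applies per-category thresholds locally to the severities the API returns. It is not the Studio's implementation. The threshold values are arbitrary examples, not recommendations. `blocked_categories` and `EXAMPLE_THRESHOLDS` are hypothetical names.

```python
from __future__ import annotations

# Hypothetical per-category thresholds: a category is blocked when its severity
# is greater than or equal to the threshold. These values are examples only.
EXAMPLE_THRESHOLDS = {"Hate": 2, "SelfHarm": 2, "Sexual": 2, "Violence": 4}


def blocked_categories(severities: dict[str, int], thresholds: dict[str, int]) -> list[str]:
    """Return, sorted, the categories whose severity meets or exceeds their threshold."""
    return sorted(
        category
        for category, severity in severities.items()
        # Categories without a configured threshold are never blocked.
        if category in thresholds and severity >= thresholds[category]
    )


result = {"Hate": 2, "SelfHarm": 0, "Sexual": 0, "Violence": 2}
print(blocked_categories(result, EXAMPLE_THRESHOLDS))  # ['Hate']
```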
