Test your model

Once your model has trained successfully, you can review its translations to evaluate its quality. To make an informed decision about whether to use our standard model or your custom model, evaluate the delta between your custom model's BLEU score and our standard model's Baseline BLEU score. If your model was trained on a narrow domain and your training data is consistent with the test data, you can expect a high BLEU score.
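The delta comparison can be expressed as a small helper. The following is a minimal sketch, assuming you have copied each model's BLEU and Baseline BLEU from the portal by hand. The names `ModelScores`, `pick_custom_model`, and the `min_gain` threshold are hypothetical illustrations, not part of Custom Translator, and the article does not prescribe any particular threshold.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class ModelScores:
    # Hypothetical record of scores copied from the Model details blade.
    name: str             # model name as shown in the portal
    bleu: float           # custom model BLEU on the test set
    baseline_bleu: float  # Baseline BLEU on the same test set

    @property
    def delta(self) -> float:
        """BLEU points gained (+) or lost (-) relative to the baseline."""
        return self.bleu - self.baseline_bleu


def pick_custom_model(
    models: Iterable[ModelScores], min_gain: float = 0.0
) -> Optional[ModelScores]:
    """Return the model whose delta is largest and above min_gain.

    Returns None when no model beats the baseline by more than min_gain,
    which in this sketch means "keep using the standard model".
    min_gain is an assumed, user-chosen threshold.
    """
    candidates = [m for m in models if m.delta > min_gain]
    return max(candidates, key=lambda m: m.delta, default=None)
```

Comparing deltas across models is only meaningful when they were scored on comparable test sets, and a BLEU gain is best confirmed by the human evaluation described later in this article.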

BLEU score

BLEU (Bilingual Evaluation Understudy) is an algorithm for evaluating the precision or accuracy of text that has been machine translated from one language to another. Custom Translator uses the BLEU metric as one way of conveying translation accuracy.

A BLEU score is a number between 0 and 100. A score of 0 indicates a low-quality translation in which nothing in the translation matches the reference. A score of 100 indicates a perfect translation that is identical to the reference. You don't need to reach 100: a BLEU score between 40 and 60 indicates a high-quality translation.
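To show how matching against the reference turns into a 0 to 100 number, here is a minimal sketch of textbook BLEU for one sentence and one reference: clipped n-gram precisions for n = 1 to 4, combined by geometric mean and multiplied by a brevity penalty. This is an illustration only, assuming whitespace tokenization and no smoothing; it is not Custom Translator's scorer, which reports a corpus-level score over the whole test set with its own tokenization, so its numbers will differ.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hypothesis, reference, max_n=4):
    """Unsmoothed sentence-level BLEU on a 0-100 scale (illustrative sketch).

    Assumes a single reference and whitespace tokenization.
    """
    hyp = hypothesis.split()
    ref = reference.split()
    if not hyp:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        hyp_counts = ngrams(hyp, n)
        ref_counts = ngrams(ref, n)
        # Clip each hypothesis n-gram count by how often it occurs in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
        total = sum(hyp_counts.values())
        if overlap == 0:
            # A zero precision makes the geometric mean zero (no smoothing).
            return 0.0
        log_precision_sum += math.log(overlap / total)
    # Brevity penalty: hypotheses shorter than the reference are penalized.
    bp = 1.0 if len(hyp) > len(ref) else math.exp(1 - len(ref) / len(hyp))
    return 100 * bp * math.exp(log_precision_sum / max_n)
```

For example, `sentence_bleu("the cat sat on the mat", "the cat sat on a mat")` gives roughly 53.7, because one wrong word breaks several of the longer n-gram matches, while an identical sentence scores 100.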

Model details

Select the Model details blade.

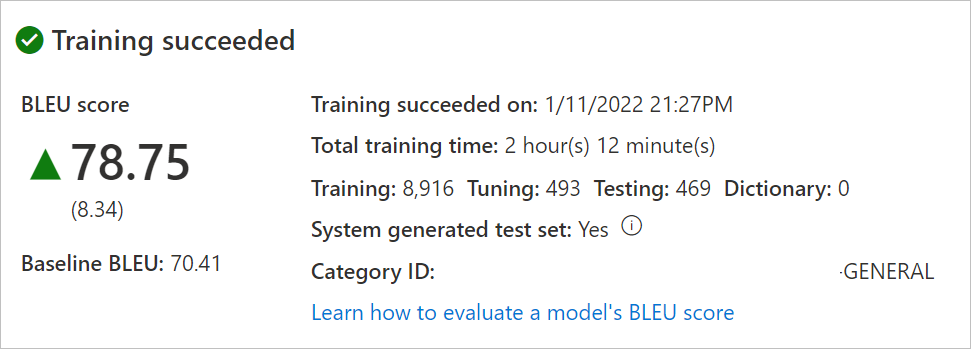

Select the model name. Review the training date and time, the total training time, and the number of sentences used for training, tuning, testing, and the dictionary. Check whether the system generated the test and tuning sets. You'll use the Category ID to make translation requests.

Evaluate the model BLEU score. Review the test set: the BLEU score is the custom model's score, and the Baseline BLEU is the score of the pre-trained baseline model used for customization. A higher BLEU score indicates better translation quality with the custom model.
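As an illustration of where the Category ID goes, the sketch below builds (but does not send) a request to the Translator v3 `translate` operation, passing the Category ID in its `category` query parameter. It assumes the global endpoint `https://api.cognitive.microsofttranslator.com` and a resource that requires the `Ocp-Apim-Subscription-Region` header; the function name `build_translate_request` and the sample values are hypothetical, and the model must be deployed before such requests are served by it.

```python
import json
from urllib.parse import urlencode

# Assumption: global Translator v3 endpoint; substitute your resource's endpoint if it differs.
TRANSLATOR_ENDPOINT = "https://api.cognitive.microsofttranslator.com/translate"


def build_translate_request(text, to_lang, category_id, key, region, from_lang=None):
    """Return (url, headers, body) for a translate call routed to a custom model.

    Hypothetical helper: category_id is the Category ID shown on Model details.
    """
    params = {"api-version": "3.0", "to": to_lang, "category": category_id}
    if from_lang:
        params["from"] = from_lang  # omit to let the service detect the source language
    url = f"{TRANSLATOR_ENDPOINT}?{urlencode(params)}"
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Ocp-Apim-Subscription-Region": region,
        "Content-Type": "application/json",
    }
    # The request body is a JSON array of objects with a "Text" field.
    body = json.dumps([{"Text": text}])
    return url, headers, body
```

To send it, you could pass the three values to `urllib.request.Request(url, data=body.encode("utf-8"), headers=headers, method="POST")` and open it with `urllib.request.urlopen`.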

Test quality of your model's translation

Select the Test model blade.

Select the model name.

Perform a human evaluation of the translations from your Custom model and the Baseline model (our pre-trained baseline used for customization) against the Reference (the target translation from the test set).
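If a reviewer records a verdict for each test sentence while comparing the two outputs, a tally gives a simple summary to set beside the BLEU scores. This is a minimal sketch under the assumption that verdicts are kept outside the portal as the hypothetical labels `"custom"`, `"baseline"`, or `"tie"`; Custom Translator does not export judgments in this form.

```python
from collections import Counter

# Hypothetical verdict labels a reviewer might record per test sentence.
VERDICTS = ("custom", "baseline", "tie")


def tally_preferences(judgments):
    """Count how often the reviewer preferred each system (or neither)."""
    counts = Counter()
    for verdict in judgments:
        if verdict not in VERDICTS:
            raise ValueError(f"unexpected verdict: {verdict!r}")
        counts[verdict] += 1
    # Report every label, including those with zero votes.
    return {label: counts[label] for label in VERDICTS}
```

Validating the labels up front keeps a typo such as `"costum"` from silently dropping a vote.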

If you're satisfied with the training results, place a deployment request for the trained model.

