Use the Azure Managed Lustre CSI Driver with Azure Kubernetes Service

This article describes how to plan, install, and use Azure Managed Lustre in Azure Kubernetes Service (AKS) with the Azure Managed Lustre Container Storage Interface (CSI) driver for Kubernetes (Azure Managed Lustre CSI driver).

About the Azure Managed Lustre CSI driver for AKS

The Azure Managed Lustre Container Storage Interface (CSI) driver for AKS enables you to access Azure Managed Lustre storage as persistent storage volumes from Kubernetes containers deployed in AKS.

Compatible Kubernetes versions

The Azure Managed Lustre CSI driver for AKS is compatible with Azure Kubernetes Service (AKS). Other Kubernetes installations are not currently supported.

AKS Kubernetes versions 1.21 and later are supported. This includes all versions currently available when creating a new AKS cluster.

Important

The Azure Managed Lustre CSI driver currently works only with the Ubuntu Linux OS SKU for AKS node pools.

Compatible Lustre versions

The Azure Managed Lustre CSI driver for AKS is compatible with Azure Managed Lustre. Other Lustre installations are not currently supported.

The Azure Managed Lustre CSI driver versions 0.1.10 and later are supported with the current version of the Azure Managed Lustre service.

Prerequisites

- An Azure account with an active subscription. Create an account for free.

- A terminal environment with the Azure CLI tools installed. See Get started with Azure CLI.

- kubectl, the Kubernetes management tool, installed in your terminal environment. See Quickstart: Deploy an Azure Kubernetes Service (AKS) cluster using Azure CLI.

- An Azure Managed Lustre deployment. See Azure Managed Lustre File System documentation.

Plan your AKS Deployment

Several options that you choose when deploying Azure Kubernetes Service affect how AKS and Azure Managed Lustre work together.

Determine the network type to use with AKS

Two network types are compatible with the Ubuntu Linux OS SKU: kubenet and the Azure Container Network Interface (CNI) driver. Both work with the Azure Managed Lustre CSI driver for AKS, but they have different requirements that you need to understand when setting up virtual networking and AKS. For help choosing between them, see Networking concepts for applications in Azure Kubernetes Service (AKS).

Determine network architecture for interconnectivity of AKS and Azure Managed Lustre

Azure Managed Lustre operates within a private virtual network, so your Kubernetes cluster must have network connectivity to the Azure Managed Lustre virtual network. There are two common ways to configure the networking between Azure Managed Lustre and AKS.

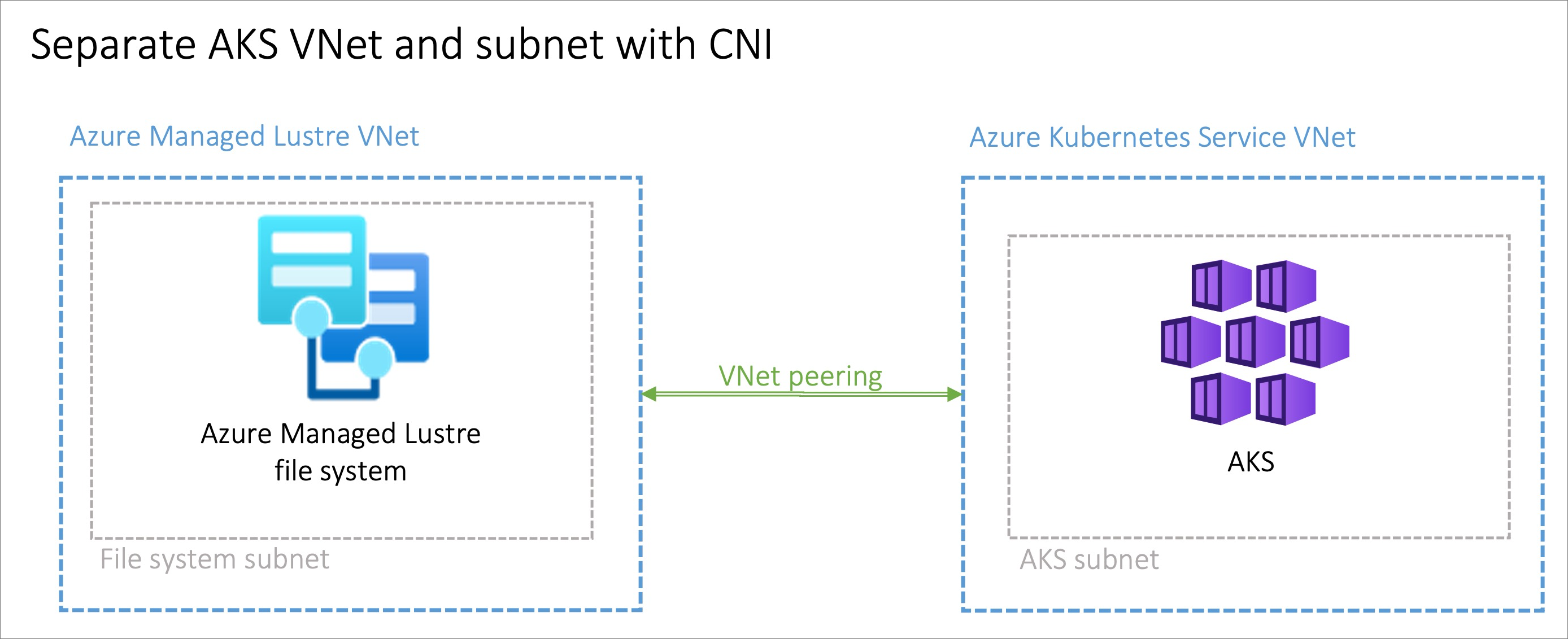

- Install AKS into its own virtual network and create a virtual network peering with the Azure Managed Lustre virtual network.

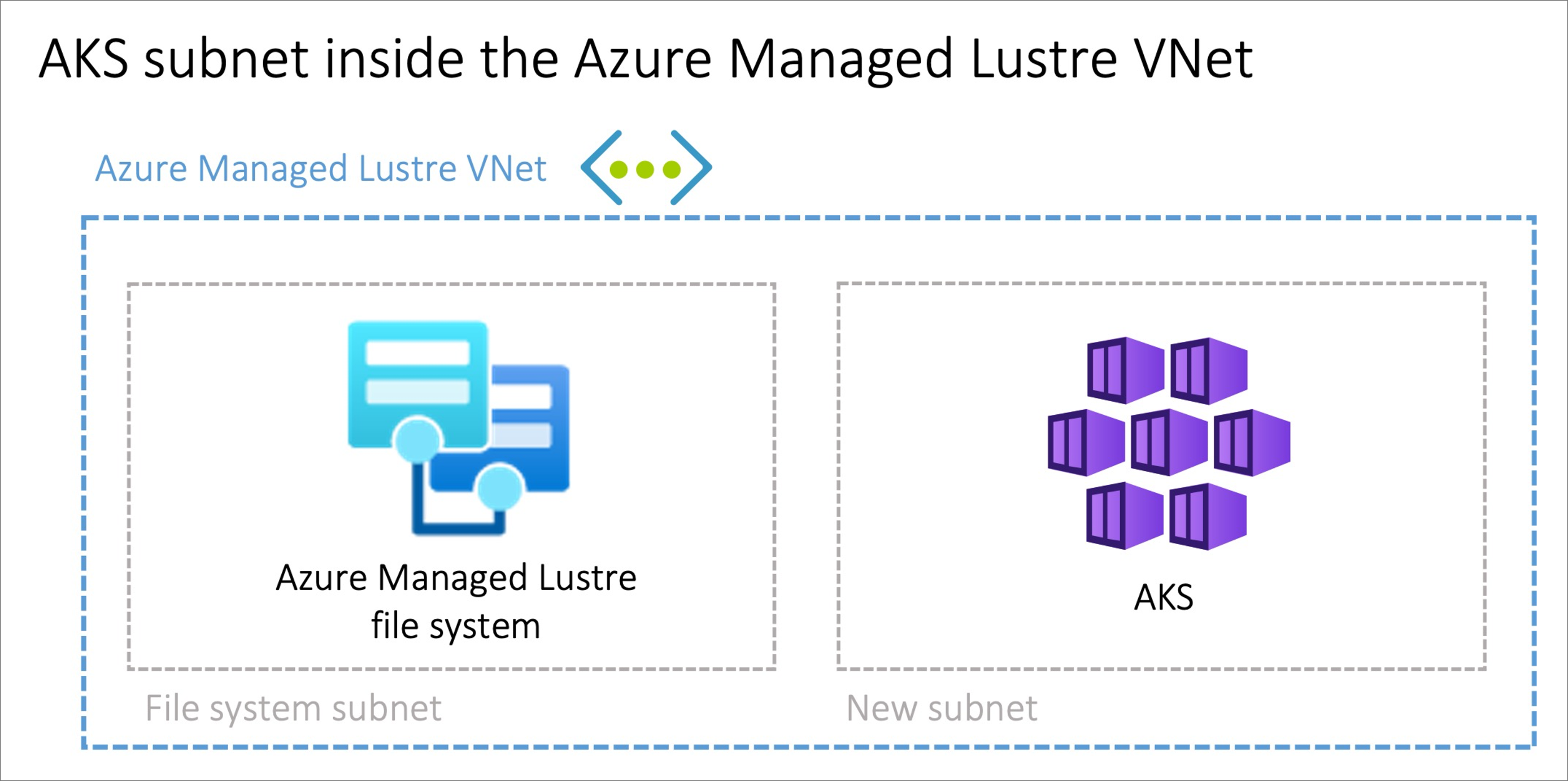

- Use the Bring Your Own Networking option in AKS to install AKS on a new subnet on the Azure Managed Lustre virtual network.

Note

Installing AKS onto the same subnet as Azure Managed Lustre is not recommended.

Peering AKS and Azure Managed Lustre virtual networks

The option to peer two different virtual networks has the advantage of separating the management of the various networks to different privileged roles. Peering can also provide additional flexibility as it can be made across Azure subscriptions or regions. Virtual Network Peering will require coordination between the two networks to avoid choosing conflicting IP network spaces.
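As a rough illustration of the address-space coordination mentioned above, the following sketch checks whether two IPv4 CIDR blocks overlap before you peer the networks. The function names and the example address ranges are hypothetical and are not part of Azure tooling. The sketch assumes well-formed IPv4 CIDR input and a shell with 64-bit arithmetic.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1            # split the address on dots into $1..$4
  echo "$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))"
)

# Succeed (exit status 0) when two CIDR blocks overlap.
# $1 = first CIDR block, $2 = second CIDR block (hypothetical example inputs)
cidrs_overlap() (
  net1=${1%/*}; len1=${1#*/}
  net2=${2%/*}; len2=${2#*/}
  # Two CIDR blocks overlap exactly when they agree on the shorter prefix.
  len=$(( $len1 < $len2 ? $len1 : $len2 ))
  mask=$(( (0xFFFFFFFF << (32 - $len)) & 0xFFFFFFFF ))
  n1=$(ip_to_int "$net1")
  n2=$(ip_to_int "$net2")
  [ $(( $n1 & $mask )) -eq $(( $n2 & $mask )) ]
)
```

For example, `cidrs_overlap 10.0.0.0/16 10.0.1.0/24 && echo "address spaces conflict"` flags a conflict, while `10.0.0.0/16` and `10.1.0.0/16` pass the check.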

Installing AKS into a subnet on the Azure Managed Lustre virtual network

Installing the AKS cluster into the Azure Managed Lustre virtual network with the Bring Your Own Network feature in AKS can be advantageous when you want the network to be managed as a single unit. You need to create an additional subnet, sized to meet your AKS networking requirements, in the Azure Managed Lustre virtual network.

There is no privilege separation for network management when you provision AKS onto the Azure Managed Lustre virtual network, and the AKS service principal needs privileges on that virtual network.

Setup overview

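To enable the Azure Managed Lustre CSI Driver for Kubernetes, perform these steps:

- Create an Azure Managed Lustre file system.

- Create an AKS cluster.

- Create virtual network peering.

- Connect to the AKS cluster.

- Install the CSI driver.

- Create and configure a persistent volume.

- Check the installation by optionally using an echo pod to confirm the driver is working.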

The following sections describe each task in greater detail.

Create an Azure Managed Lustre file system

If you haven't already created your Azure Managed Lustre file system, create it now. For instructions, see Create an Azure Managed Lustre file system in the Azure portal. Currently, the driver can only be used with an existing Azure Managed Lustre file system.

Create an AKS Cluster

If you haven't already created your AKS cluster, create a cluster deployment. See Deploy an Azure Kubernetes Service (AKS) cluster.

Create virtual network peering

Note

Skip this network peering step if you installed AKS into a subnet on the Azure Managed Lustre virtual network.

The AKS virtual network is created in a separate resource group from the AKS cluster's resource group. To find the name of this resource group, go to your AKS cluster in the Azure portal, choose the Properties blade, and find the Infrastructure resource group. This resource group contains the virtual network that needs to be peered with the Azure Managed Lustre virtual network. Its name matches the pattern MC_<aks-rg-name>_<aks-cluster-name>_<region>.
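As a small sketch of the naming pattern described above, the following helper builds the expected infrastructure resource group name from its three parts. The function name and the example values are hypothetical, and the sketch assumes the default naming was not overridden when the cluster was created.

```shell
# Build the expected name of the AKS infrastructure resource group.
# $1 = AKS resource group, $2 = AKS cluster name, $3 = region
# (hypothetical helper; assumes the default MC_ naming pattern)
mc_resource_group_name() {
  printf 'MC_%s_%s_%s\n' "$1" "$2" "$3"
}

# To read the actual value from Azure instead of deriving it, a command
# along these lines can be used (not run here):
#   az aks show --resource-group <aks-rg-name> --name <aks-cluster-name> \
#     --query nodeResourceGroup --output tsv
```

For example, `mc_resource_group_name my-rg my-aks eastus` prints `MC_my-rg_my-aks_eastus`. Querying the cluster is the safer choice when several clusters have similar names.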

Consult Virtual Network Peering to peer the AKS virtual network with your Azure Managed Lustre virtual network.
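If you prefer the Azure CLI to the portal, the peering can be sketched roughly as follows. The helper names, the `DRY_RUN` variable, and all argument values are hypothetical placeholders. The sketch assumes you create one peering in each direction and pass the remote virtual network as a full resource ID.

```shell
# Print the command when DRY_RUN is set; otherwise execute it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Create one direction of a peering from a local virtual network to a remote one.
# $1 = peering name, $2 = local resource group, $3 = local virtual network name,
# $4 = resource ID of the remote virtual network
peer_vnet() {
  run az network vnet peering create \
    --name "$1" \
    --resource-group "$2" \
    --vnet-name "$3" \
    --remote-vnet "$4" \
    --allow-vnet-access
}
```

Call `peer_vnet` once from the AKS virtual network to the Azure Managed Lustre virtual network and once in the opposite direction. Setting `DRY_RUN=1` first prints each command so that you can confirm the network names before anything is changed.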

Tip

Due to the naming of the MC_ resource groups and virtual networks, network names can be similar or identical across multiple AKS deployments. When setting up peering, confirm that you are selecting the virtual networks of the AKS cluster that you intend to peer.

Connect to the AKS cluster

Connect to the Azure Kubernetes Service cluster by doing these steps:

Open a terminal session with access to the Azure CLI tools and log in to your Azure account.

az login

Sign in to the Azure portal.

Find your AKS cluster. Select the Overview blade, then select the Connect button and copy the command for Download cluster credentials.

In your terminal session, paste in the command to download the credentials. It will be a command similar to:

az aks get-credentials --subscription <AKS_subscription_id> --resource-group <AKS_resource_group_name> --name <name_of_AKS>

Install kubectl if it's not present in your environment.

az aks install-cli

Verify that the current context is the AKS cluster for which you just installed the credentials and that you can connect to it:

kubectl config current-context
kubectl get deployments --all-namespaces=true

Install the CSI driver

To install the CSI driver, run the following command:

curl -skSL https://raw.githubusercontent.com/kubernetes-sigs/azurelustre-csi-driver/main/deploy/install-driver.sh | bash

For local installation command samples, see Install Azure Lustre CSI Driver on a Kubernetes cluster.

Create and configure a persistent volume

To create a persistent volume for an existing Azure Managed Lustre file system, do these steps:

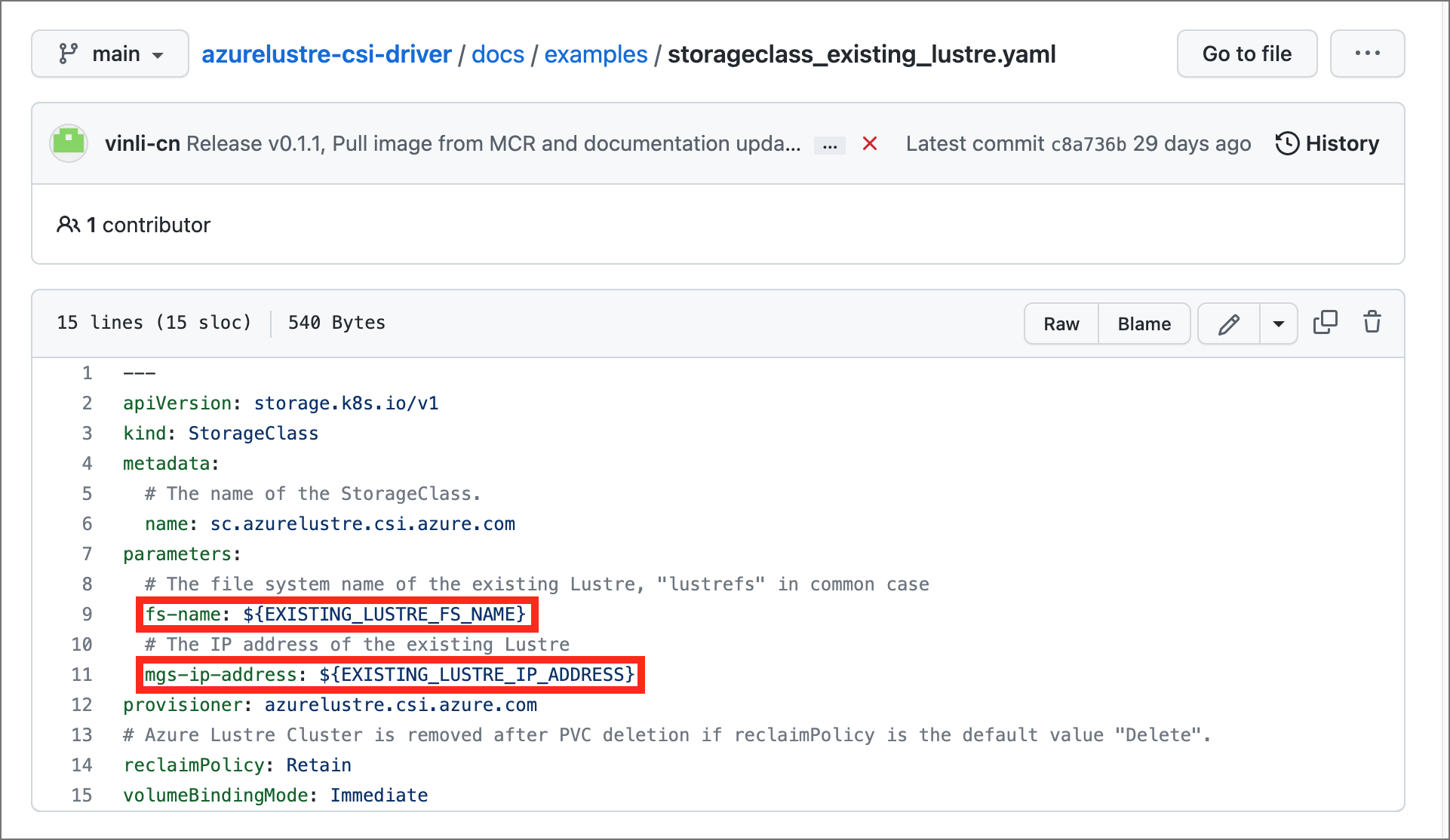

Copy the following configuration files from the /docs/examples/ folder in the azurelustre-csi-driver repository. If you cloned the repository when you installed the CSI driver, you have local copies available already.

- storageclass_existing_lustre.yaml

- pvc_storageclass.yaml

If you don't want to clone the entire repository, you can download each file individually. Open each of the following links, copy the file's contents, and then paste the contents into a local file with the same filename.

In the storageclass_existing_lustre.yaml file, update the internal name of the Lustre cluster and the MGS IP address.

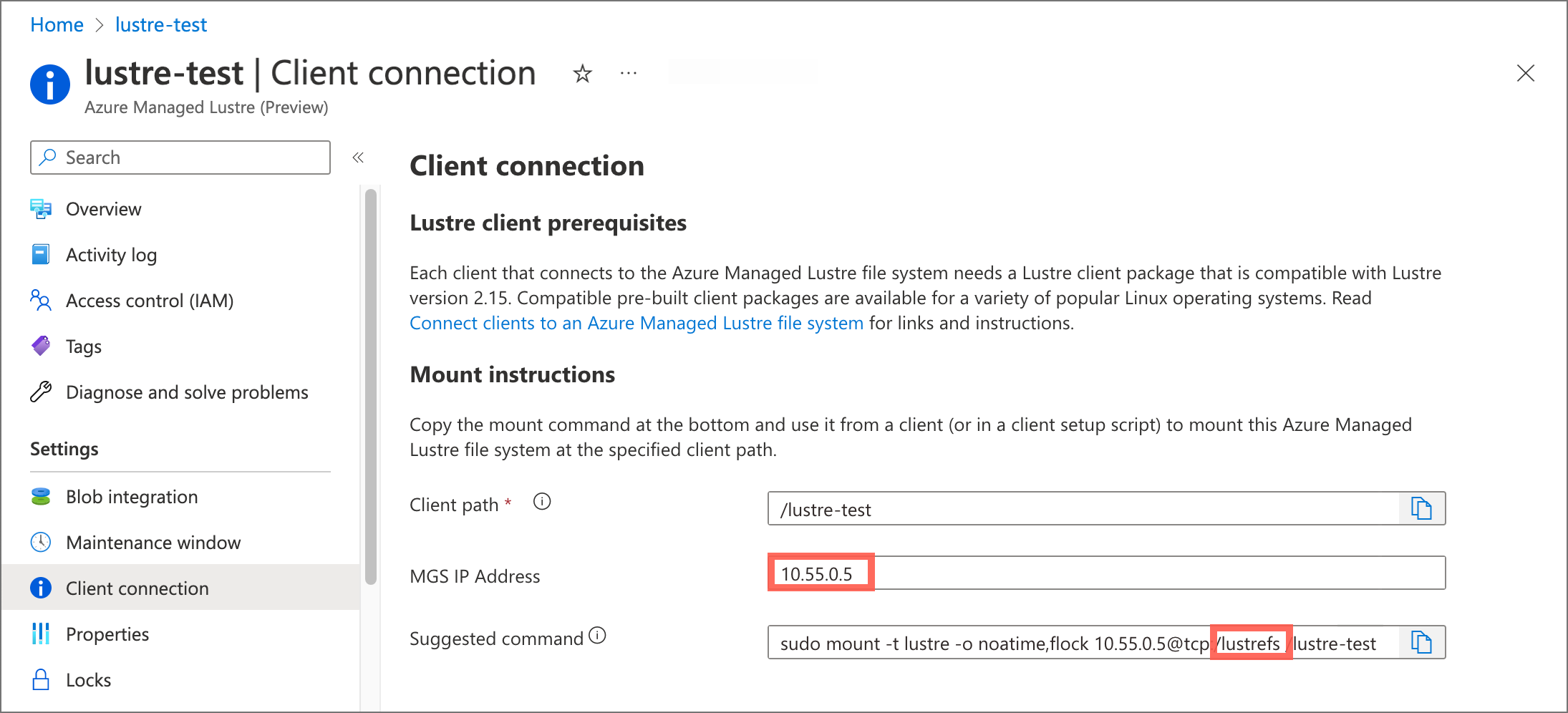

Both settings are displayed in the Azure portal, on the Client connection page for your Azure Managed Lustre file system.

Make these updates:

Replace

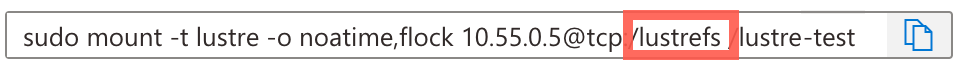

EXISTING_LUSTRE_FS_NAMEwith the system-assigned internal name of the Lustre cluster in your Azure Managed Lustre file system. The internal name is usuallylustrefs. The internal name isn't the name that you gave the file system when you created it.The suggested

mountcommand includes the name highlighted in the following address string.

Replace

EXISTING_LUSTRE_IP_ADDRESSwith the MSG IP Address.
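The two replacements above can also be scripted. The following is a minimal sketch that assumes the placeholders appear literally in the file. The function name, the output filename, and the example IP address are hypothetical.

```shell
# Write a copy of the storage class file with both placeholders filled in.
# $1 = input file, $2 = internal Lustre file system name, $3 = MGS IP address
# (hypothetical helper; prints the result to standard output)
fill_storageclass() {
  sed -e "s/EXISTING_LUSTRE_FS_NAME/$2/g" \
      -e "s/EXISTING_LUSTRE_IP_ADDRESS/$3/g" "$1"
}
```

For example, `fill_storageclass storageclass_existing_lustre.yaml lustrefs 10.0.0.4 > storageclass_filled.yaml` writes an updated copy and leaves the original file unchanged, so you can compare the two before applying the result.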

To create the storage class and the persistent volume claim, run the following `kubectl` commands:

kubectl create -f storageclass_existing_lustre.yaml
kubectl create -f pvc_storageclass.yaml

Check the installation

If you want to check your installation, you can optionally use an echo pod to confirm the driver is working.

To view timestamps in the console during writes, do these steps:

Add the following code to the echo pod:

while true; do echo $(date) >> /mnt/lustre/outfile; tail -1 /mnt/lustre/outfile; sleep 1; done

Run the following `kubectl` command:

`kubectl logs -f lustre-echo-date`

Next steps

- Learn how to export files from your file system with an archive job.
